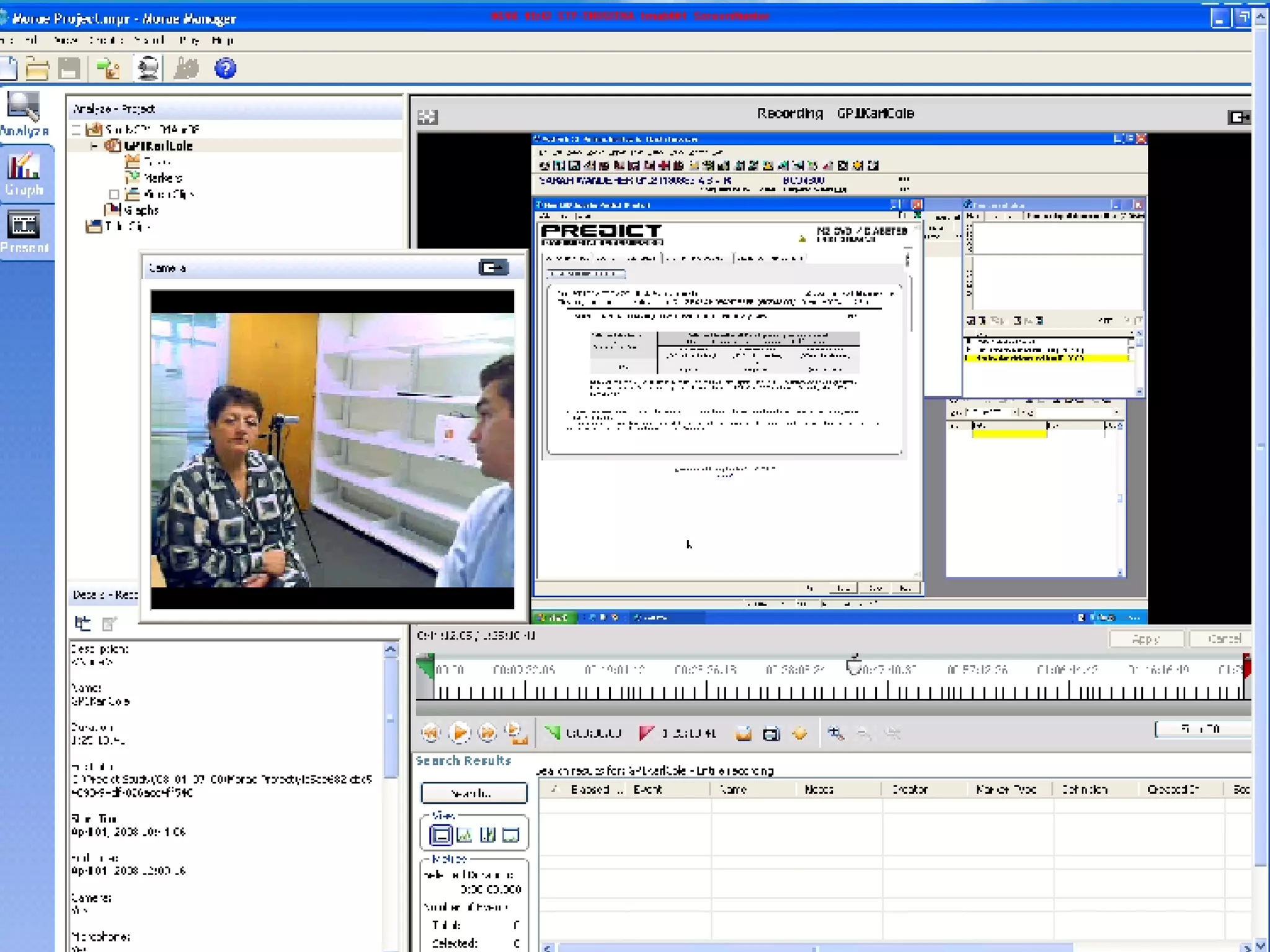

The document discusses the importance of electronic decision support systems (EDSS) in community healthcare and the challenges of deploying them, emphasizing that they must integrate effectively into clinician workflows and promote evidence-based medicine. It highlights a study that identified key predictors of successful EDSS implementation and reviews the failures of previous systems, cautioning against common pitfalls such as poor timing and poor usability. The authors propose improved evaluation methods, including video analysis and automated logging, to improve usability and effectiveness in real-world settings.