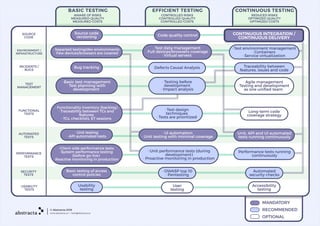

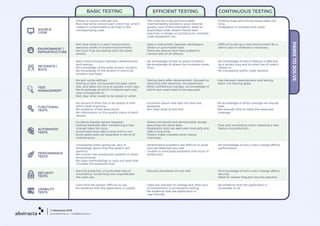

The document discusses the importance of continuous testing in a continuous delivery environment, emphasizing a shift toward greater developer involvement and automation in the testing process. It outlines the challenges testers face and the need for risk control, and highlights benefits such as defect causal analysis and performance monitoring. It also details how poor testing practices and communication gaps between development and testing teams lead to increased costs and inefficiencies.