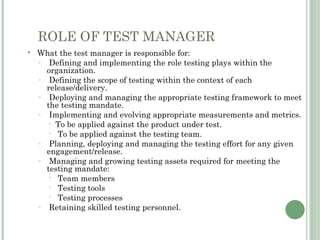

Test management is the practice of organizing and controlling the software testing process. It includes test planning, authoring, execution, and reporting. The test manager is responsible for defining the testing scope and framework, measuring test metrics, and managing the overall testing effort. Effective test management starts testing early, reuses test artifacts, focuses on requirements-based testing, and communicates status through defined metrics.

![Test management - phases](https://image.slidesharecdn.com/testmanagement-120513141035-phpapp01/85/Test-management-4-320.jpg)

TEST MANAGEMENT - PHASES

Test artifacts and resource organization

Test planning is the overall set of tasks that address the questions of why, what, where, and when to test.

Test authoring is the process of capturing the specific steps required to complete a given test.

Test execution consists of running the tests by assembling sequences of test scripts into a suite of tests.

Test reporting is how the various results of the testing effort are analyzed and communicated. This is used to determine the current status of project testing, as well as the overall level of quality of the application or system.

[Chart: Automation level. Axes: % of automated TCs (0% to 100%) and # of specified TCs (0 to 3500), plotted over calendar weeks CW13 to CW51 and delivery steps LS 7, LS 7.1, LS 8, LS 9. Series: actual # of specified TCs, actual automation level [%], planned automation level [%].]
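The automation-level chart on this slide tracks the share of automated test cases against the number of specified test cases per calendar week, next to a planned level. A minimal sketch of how such a status metric could be computed is shown here. It assumes the automation level is simply automated test cases divided by specified test cases; the slide does not state the formula. The names `WeeklyStatus`, `automation_level`, and `report` are hypothetical, and the sample figures are made up for illustration, not read from the chart.

```python
from dataclasses import dataclass


@dataclass
class WeeklyStatus:
    week: str             # calendar week label, e.g. "CW13"
    specified: int        # number of specified test cases (TCs)
    automated: int        # number of specified TCs that are automated
    planned_level: float  # planned automation level, in percent


def automation_level(specified: int, automated: int) -> float:
    """Return automated TCs as a percentage of specified TCs.

    Assumed definition; returns 0.0 when nothing is specified yet.
    """
    if specified <= 0:
        return 0.0
    return 100.0 * automated / specified


def report(rows):
    """Build one status line per week, comparing actual to planned level."""
    lines = []
    for r in rows:
        actual = automation_level(r.specified, r.automated)
        gap = actual - r.planned_level  # negative means behind plan
        lines.append(
            f"{r.week}: {actual:.0f}% automated of {r.specified} TCs "
            f"(planned {r.planned_level:.0f}%, gap {gap:+.0f})"
        )
    return lines


# Hypothetical sample data for illustration only.
sample = [
    WeeklyStatus("CW13", 1000, 300, 35.0),
    WeeklyStatus("CW14", 1200, 480, 40.0),
]

for line in report(sample):
    print(line)
```

Reporting the gap to the planned level alongside the raw percentage keeps the status readable even while the number of specified test cases is still growing from week to week.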