The document provides an overview of a vision-based place recognition system for autonomous robots. It discusses the framework of such a system, including sensing, pre-processing, feature extraction, training, classification, and post-processing. Local feature extraction is a key component, involving local feature detection to identify interest points and local feature descriptors to build representations around those points. The system aims to recognize places using visual cues in order to enable robot localization.

![Vision-Based Place Recognition for Autonomous Robot<br />Survey (1): Project Overview<br />Ahmed Abd El-Fattah, Ahmed Saher, Mourad Aly and Yasser Hassan<br />Dr. Mohammed Abd El-Megged and Dr. Safaa Amin<br />Ain Shams University, Faculty of Computer and Information Science, Egypt, Cairo<br />Abstract<br />This survey provides an overview of the project. It explains the meaning of the project’s title, the position of the project within SLAM, and its location within the field of computer science. It also covers the project’s architecture and taxonomies of feature detectors, feature descriptors, and classification algorithms.<br />Table of Contents<br />Abstract<br />1. Introduction<br />2. Project’s position in computer science<br />3. Simultaneous Localization and Mapping “SLAM”<br />3.1 Localization<br />4. Vision-Based Place Recognition for autonomous robots<br />4.1. Autonomous Robots<br />4.2. Vision Based<br />4.3. Place Recognition<br />5. Framework of a vision-based place recognition system<br />5.1. Sensing<br />5.2. Pre-Processing<br />5.3. Feature Extraction<br />5.4. Training<br />5.5. Optimization<br />5.6. Classification<br />5.7. Post-Processing<br />6. Feature Extraction<br />6.1. Local Feature Detection Algorithms<br />6.1.1 Good features properties<br />6.2. Local Feature Descriptor Algorithms<br />7. Classification<br />7.1 Supervised Learning<br />7.1.1 Supervised Learning Steps<br />7.1.2 Problems in supervised learning<br />7.2 Various classifiers<br />7.2.1 Similarity (Template Matching)<br />7.2.2 Probabilistic Classifiers<br />7.2.3 Decision Boundary Based Classifiers<br />7.3 Classifier Evaluation<br />a. Conclusion<br />b. Future Work<br />c. References<br />1. Introduction<br />The project surveyed in this document is part of a SLAM project. 
SLAM is an acronym for simultaneous localization and mapping, a technique used by robots and autonomous vehicles to build a map of an unknown environment (without prior knowledge), or to update a map of a known environment (with prior knowledge from a given map), while at the same time keeping track of their current location. Both this document and the project focus on vision-based place recognition for autonomous robots. Such robots will be able to recognize their position autonomously, which means that they will be able to perform desired tasks in unstructured environments without continuous human guidance.<br />2. Project’s position in computer science<br />Computer science may be divided into several fields. Our problem revolves mainly around the field of computer vision. Computer vision is the science and technology of machines that see, where “see” means that the machine is able to extract from an image the information necessary to solve some task. In other words, computer vision is the construction of explicit, meaningful descriptions of physical objects from their images. The output of computer vision is a description, an interpretation, or some quantitative measurements of the structures in the 3D scene [1]. Image processing and pattern recognition are among the many techniques computer vision employs to achieve its goals, as shown in Fig. 2.0.1.<br />Fig. 2.0.1 Image processing and pattern recognition techniques that computer vision employs to achieve its goals. The project lies in the green area.<br />Our project is a robot vision application, which applies computer vision techniques to robotics. Specifically, it studies machine vision in the context of robot control and navigation [1].<br />3. 
Simultaneous Localization and Mapping “SLAM”<br />Simultaneous localization and mapping (SLAM) is a technique used by robots and autonomous vehicles to build a map of an unknown environment (without prior knowledge), or to update a map of a known environment (with prior knowledge from a given map), while at the same time keeping track of their current location. Mapping is the problem of integrating the information gathered by a set of sensors into a consistent model and depicting that information in a given representation. It can be described by the first characteristic question: What does the world look like? Central aspects of mapping are the representation of the environment and the interpretation of sensor data. In contrast, localization is the problem of estimating the place (and pose) of the robot relative to a map. In other words, the robot has to answer the second characteristic question: Where am I? Typically, solutions comprise tracking, where the initial place of the robot is known, and global localization, in which no prior knowledge, or only some, about the surroundings of the starting position is given [2]. Our project focuses on the localization problem, as it needs a previously generated map and the current input images to be able to localize the robot’s position.<br />3.1 Localization<br />Localization is a fundamental problem in mobile robotics. Most mobile robots must be able to locate themselves in their environment in order to accomplish their tasks. There are three families of localization methods: geometric, topological, and hybrid, as shown in Fig. 3.1.1.<br />Fig. 3.1.1. Localization methods: geometric, topological, and hybrid.<br />Geometric approaches typically use a two-dimensional grid as a map representation. They attempt to keep track of the robot’s exact position with respect to the map’s coordinate system. Topological approaches use an adjacency graph as a map representation. 
They attempt to determine the node of the graph that corresponds to the robot’s location. Hybrid methods combine geometric and topological approaches [3].<br />Most of the recent work in the field of mobile robot localization focuses on geometric localization. In general, these geometric approaches are based on either map matching or landmark detection. Most map matching systems rely on an extended Kalman filter (EKF) that combines information from intrinsic sensors with information from extrinsic sensors to determine the current position. Good statistical models of the sensors and their uncertainties must be provided to the Kalman filter.<br />Landmark localization systems rely on either artificial or natural features of the environment. Artificial landmarks are easier to detect reliably than natural landmarks. However, artificial landmarks require modifications of the environment, so systems based on natural landmarks are often preferred. Various features have been used as natural landmarks: corners, doors, overhead lights, air diffusers in ceilings, and distinctive buildings. Because most landmark-based localization systems are tailored to specific environments, they can rarely be applied easily to different environments [3].<br />4. Vision-Based Place Recognition for autonomous robots<br />4.1. Autonomous Robots<br />Autonomous robots are intelligent machines capable of performing tasks in the world by themselves, without explicit human control. A Mobile Autonomous Robot (MAR) is a microprocessor-based, programmable mobile robot that can sense and react to its environment.<br />A fully autonomous robot has the ability to:<br />Gain information about the environment.](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-1-2048.jpg)

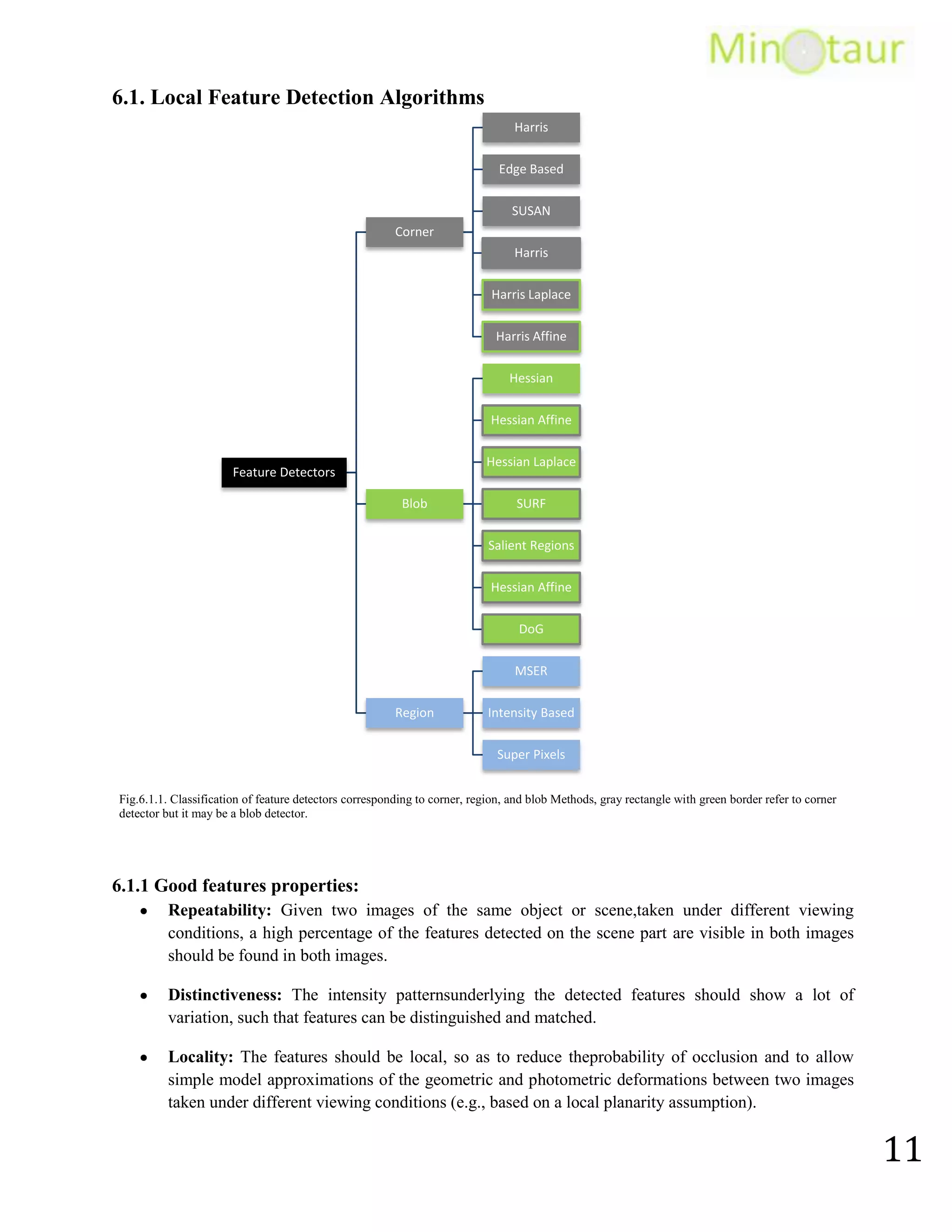

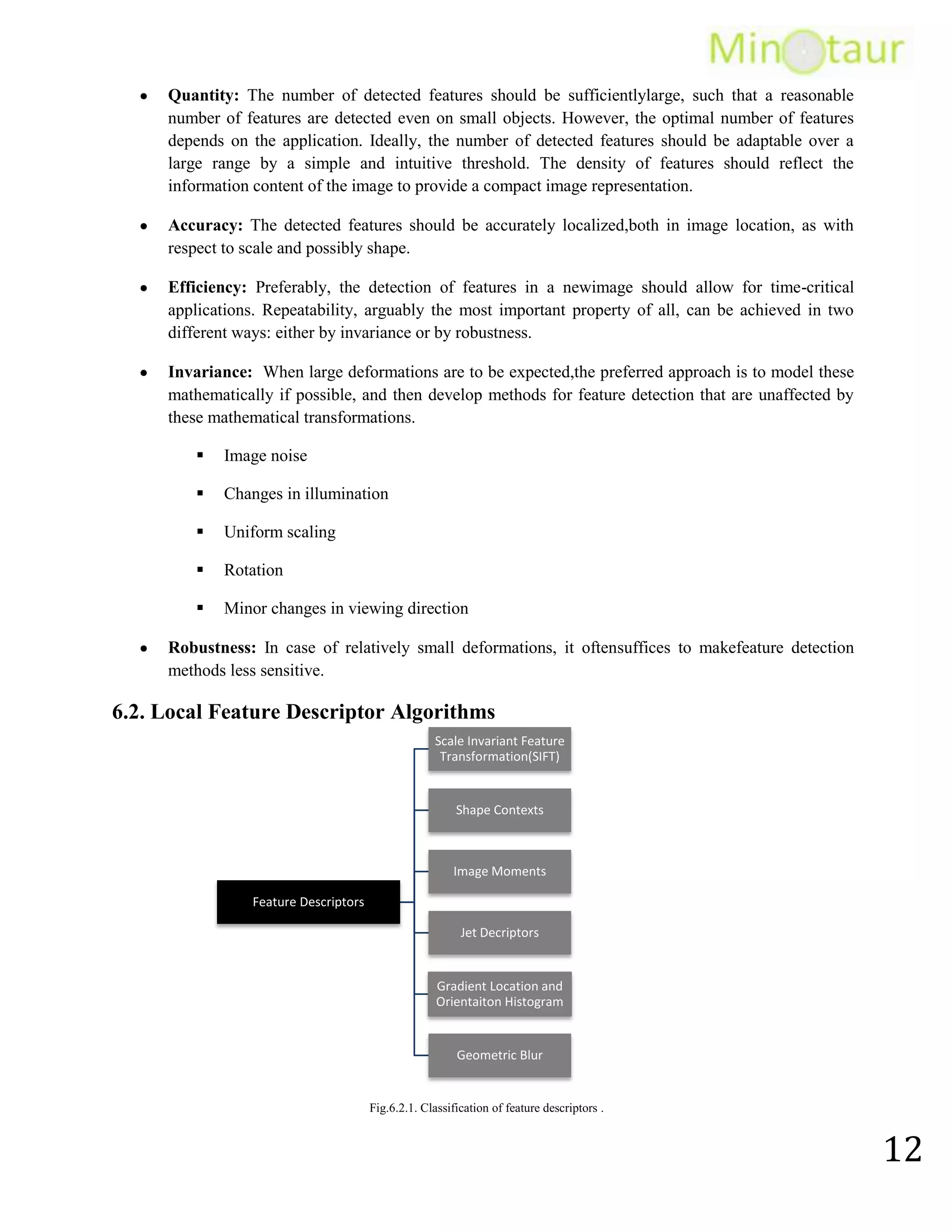

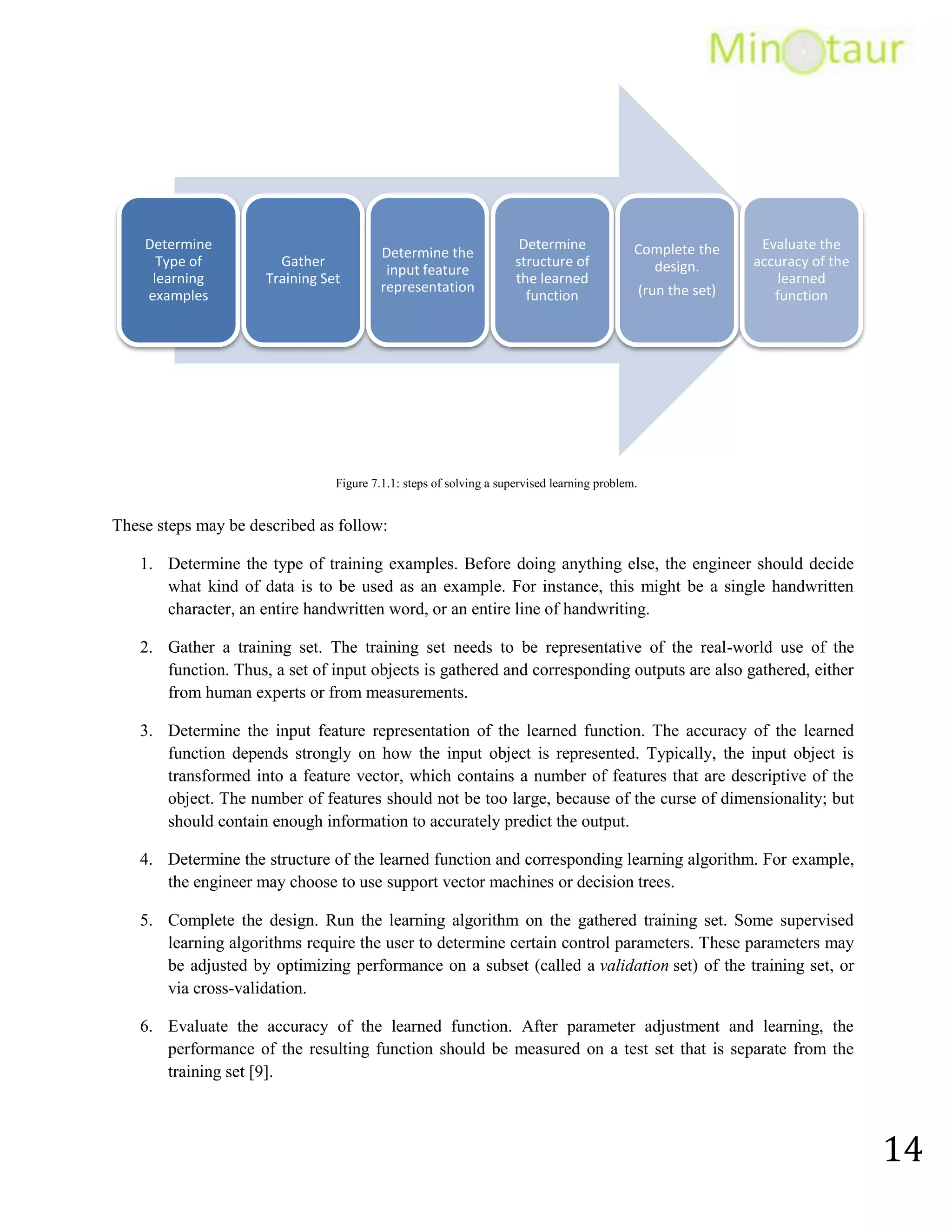

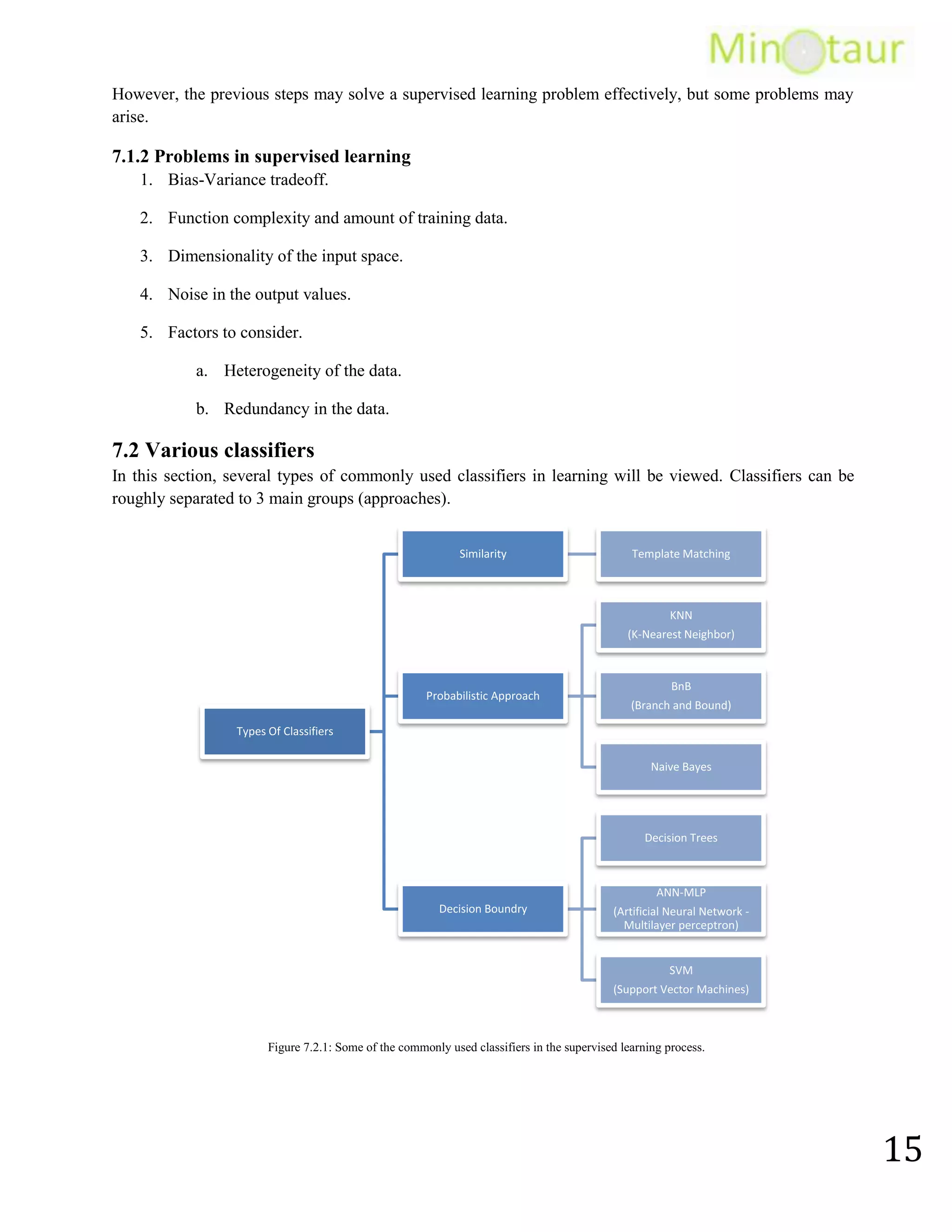

![Avoid situations that are harmful to people, property, or itself unless those are part of its design specifications.<br />Remote-control (RC) robots, by contrast, are controlled by a human and cannot react to the environment by themselves [4].<br />4.2. Vision Based<br />A robust localization system requires an extrinsic sensor that provides rich information, so that the system can reliably distinguish between adjacent locations. For this reason, we use a passive color vision camera as our extrinsic sensor. Because many places can easily be distinguished by their color appearance, we expect that color images provide sufficient information without the need for range data from additional sensors such as stereo cameras, sonars, or a laser rangefinder. Other systems use a range measurement device (sonar or laser scanner) as the extrinsic sensor. Nowadays, the range measurement devices usually used are laser scanners. Laser scanners are very precise and efficient, and their output does not require much computation to process. On the downside, they are also very expensive: a SICK scanner costs about 5,000 USD. Laser scanners also have problems with certain surfaces, including glass, where they can give very bad readings. In addition, laser scanners cannot be used underwater, since the water disrupts the light and the range is drastically reduced. Sonar is another option and was used intensively some years ago. Sonars are very cheap compared to laser scanners. However, their measurements are less accurate than those of laser scanners, and they often give bad readings [5].<br />4.3. Place Recognition<br />Research on scene and place recognition has mostly been conducted by the robotics community to solve the problem of mobile robot navigation. Leonard and Durrant-Whyte summarized the general problem of mobile robot navigation in three questions: Where am I? Where am I going? And, How should I get there? 
However, most of the research that has been conducted in this area tries to answer the first question, that is, the positioning of the robot in its environment.<br />Place recognition faces many challenges, as illustrated in Fig. 4.3.1:<br />Objects, scenes, and places vary widely in their visual appearance, which can change dramatically due to occlusion, cluttered background, noise, and different illumination and imaging conditions.<br />Recognition algorithms perform differently in indoor and outdoor environments.<br />Recognition algorithms perform differently in different environments.<br />Due to the very limited resources of a mobile robot, it is difficult to find a solution that is both resource efficient and accurate [5].<br />Fig. 4.3.1. The different dynamics that are common in real-world indoor environments [5].<br />The appearance of the room changes dramatically due to variation in illumination caused by different weather conditions (1st row) and variation caused by different viewpoints (2nd row). Additional variability caused by human activities is also apparent: a person appears to work in 4.3.1(a) and 4.3.1(c), and the dustbin is full in 4.3.1(a) whereas it is empty in 4.3.1(b) [5].<br />5. Framework of a vision-based place recognition system<br />Any supervised recognition system contains all or some of the modules shown in Fig. 5.0.1.<br />Fig. 5.0.1. Framework of a vision-based place recognition system [5].<br />In Fig. 5.0.1, the main modules of the system, which constitute the overall operations in the training and recognition processes, are shown as yellow rectangles. Data flow among the different modules is shown with arrowheads. Light gray rectangles describe the type of data generated at every stage of the two processes. 
Finally, the most fundamental modules, present in almost every pattern recognition system, are framed with a solid line [5].<br />The first three operations (sensing, pre-processing, feature extraction) are common to both the training and the recognition processes, and are therefore discussed first.<br />5.1. Sensing<br />The basic purpose of a sensor is to sense the environment and to store that information in a digital format. Two types of optical sensors are commonly used for vision-based place recognition and localization of mobile robots: a regular digital camera and an omni-directional camera. Regular cameras are the most common due to their low cost and good performance. Omni-directional cameras, on the other hand, provide a horizontal field of view of 360°, which simplifies the recognition task [5].<br />Fig. 5.1.1. Framework of a vision-based place recognition system [5].<br />5.2. Pre-Processing<br />The acquired images are enhanced by applying digital image processing techniques before any further processing. Problems intrinsic to digital imaging include tone reproduction, resolution, color balance, channel registration, bit depth, noise, clipping, compression, and sharpening. When an image contains several patterns, each individual pattern is separated out for effective recognition, an operation known as segmentation. Another problem arises when the image pattern consists of several disconnected parts. In such a situation, the disconnected parts must be properly combined in order to form a coherent entity, an operation known as grouping. After the pre-processing stage in the training process, the image instances acquired by the optical sensor are kept in temporary storage before any further processing is performed. In the recognition process, by contrast, the acquired image instance is used directly for feature extraction when the purpose is to provide real-time target recognition [5].<br />5.3. 
Feature Extraction<br />Feature extraction is the process of extracting features from the input image in order to give it a new representation. A desirable property of the new representation is that it should be insensitive to the variability that can occur within a class (within-class variability), and should emphasize pattern properties that differ between classes (between-class variability). In other words, good features describe distinguishing, discriminative properties of different patterns. The extracted features should ideally be invariant to translation, viewpoint change, scale change, illumination variations, and the effects of small changes in the environment [5].<br />5.4. Training<br />Training is the process by which an appropriate learning method is trained on a representative set of samples of the underlying problem in order to produce a classifier. The choice of an appropriate learning method depends on the learning paradigm and on the problem at hand. In the thesis [5], a Support Vector Machine (SVM) classifier is employed as the learning method. Once trained, an SVM results in a model composed of support vectors (a selected set of training instances that summarize the whole feature space) and the corresponding weight coefficients.<br />5.5. Optimization<br />Optimization is a major design concern in any recognition system that aims for more robustness, computational efficiency, and low memory consumption. It is particularly crucial for systems that aim to work in real time and/or to perform continuous learning. In order to achieve these goals, the learning method can be improved by optimizing the learned model. After the optimization stage, the model is ready to serve as a knowledge model for the SVM classifier in the recognition of indoor places [5].<br />5.6. 
Classification<br />Classification is the stage in the system architecture where the learned classifier uses the knowledge model for the actual recognition of indoor places. At this stage an input image instance, in the form of extracted features, is provided to the classifier. The classifier then assigns this input image instance to one of the predefined classes, depending on the knowledge stored in the knowledge model. The performance of any learning-based recognition system depends heavily on [5]:<br />The quality of the classifier.<br />The performance of pre-processing operations such as noise removal and segmentation.<br />The quality of the features that feature extraction provides to the classifier.<br />The complexity of the decision function of the classifier.<br />5.7. Post-Processing<br />Up to this stage, a single level of classification has made the recognition decision about a particular input image instance, but it does not have to be the final decision layer of the recognition system. In many cases, performance and robustness can be greatly improved by incorporating additional mechanisms. Such mechanisms can exploit multiple sources of information or just process the data produced by a single classifier. An example of the latter case would be incorporating the information of a place for object recognition. Recognition performance can also be improved by incorporating multiple cues, in which case the recognition system comprises multiple classifiers. In the thesis [5], post-processing is not used, because only a single cue, handled by a single classifier, is employed.<br />6. 
Feature Extraction<br />In the case of visual data, the feature representation can be derived from the whole image (global features) or computed locally based on its salient parts (local features).<br />Local features:<br />A local feature is an image pattern that differs from its immediate neighborhood. It is usually associated with a change of an image property or of several properties simultaneously; the image properties commonly considered are intensity, color, and texture.<br />Some measurements are taken from a region centered on a local feature and converted into descriptors. The descriptors can then be used for various applications.<br />A set of local features can be used as a robust image representation that allows recognizing objects or scenes without the need for segmentation.<br />Global features:<br />In the field of image retrieval, many global features have been proposed to describe the image content, with color histograms and variations thereof as a typical example.<br />Global features cannot distinguish foreground from background, and mix information from both parts together.<br />The thesis [5] evaluates the performance of local image features only, for indoor place recognition under varying imaging and illumination conditions. The basic idea behind local features is to represent the appearance of the input image only around a set of characteristic points known as interest points or key points. The process of local feature extraction mainly consists of two stages: interest point detection and descriptor building.<br />Interest Point Detection:<br />The purpose of an interest point detector is to identify a set of characteristic points in the input image that have the maximum possibility of being detected again even in the presence of various transformations (for instance scaling and rotation). 
More stable interest points also mean better performance.<br />Local Feature Descriptor:<br />For each of these interest points, a local feature descriptor is built to distinctively describe the local region around the interest point. In order to determine the resemblance between two images using such a representation, the local descriptors from both images are matched. The degree of resemblance is therefore usually a function of the number of properly matched descriptors between the two images.<br />In addition, we typically require a sufficient number of feature regions to cover the target object, so that it can still be recognized under partial occlusion. This is achieved by the following feature extraction pipeline:<br /> 1. Find a set of distinctive key points.<br /> 2. Define a region around each key point in a scale- or affine-invariant manner.<br /> 3. Extract and normalize the region content.<br /> 4. Compute a descriptor from the normalized region.<br /> 5. Match the local descriptors.<br />6.1. Local Feature Detection Algorithms<br />Fig.6.1.1. 
Classification of feature detectors into corner, region, and blob methods. A gray rectangle with a green border refers to a corner detector that may also act as a blob detector.<br />6.1.1 Good features properties:<br />Repeatability: Given two images of the same object or scene, taken under different viewing conditions, a high percentage of the features detected on the scene part visible in both images should be found in both images.<br />Distinctiveness: The intensity patterns underlying the detected features should show a lot of variation, such that features can be distinguished and matched.<br />Locality: The features should be local, so as to reduce the probability of occlusion and to allow simple model approximations of the geometric and photometric deformations between two images taken under different viewing conditions (e.g., based on a local planarity assumption).<br />Quantity: The number of detected features should be sufficiently large, such that a reasonable number of features are detected even on small objects. However, the optimal number of features depends on the application. Ideally, the number of detected features should be adaptable over a large range by a simple and intuitive threshold. The density of features should reflect the information content of the image to provide a compact image representation.<br />Accuracy: The detected features should be accurately localized, both in image location and with respect to scale and possibly shape.<br />Efficiency: Preferably, the detection of features in a new image should allow for time-critical applications. 
Repeatability, arguably the most important property of all, can be achieved in two different ways: either by invariance or by robustness.<br />Invariance: When large deformations are to be expected, the preferred approach is to model these mathematically if possible, and then develop methods for feature detection that are unaffected by these mathematical transformations.<br />Image noise<br />Changes in illumination<br />Uniform scaling<br />Rotation<br />Minor changes in viewing direction<br />Robustness: In the case of relatively small deformations, it often suffices to make feature detection methods less sensitive to them.<br />6.2. Local Feature Descriptor Algorithms<br />Fig. 6.2.1. Classification of feature descriptors.<br />7. Classification<br />In machine learning and pattern recognition, classification refers to an algorithmic procedure for assigning a given piece of input data to one of a given number of categories. An algorithm that implements classification, especially in a concrete implementation, is known as a classifier.<br />In simpler words, classification means resolving the class of an object, e.g., a ground vehicle vs. an aircraft [8].<br />Machine learning may be divided into two main learning types:<br />Figure 7.0.1: Types of machine learning<br />A supervised learning procedure (classification) learns to classify new instances from a training set of instances that have been properly labeled by hand with the correct classes. In contrast, an unsupervised procedure (clustering) involves grouping data into classes based on some measure of inherent similarity (e.g. 
the distance between instances, considered as vectors in a multi-dimensional vector space).<br />As our problem mainly revolves around supervised learning, only the supervised learning methodology is explained in detail.<br />7.1 Supervised Learning:<br />Supervised learning is the machine-learning task of inferring a function from supervised training data. A supervised learning algorithm analyzes the training data and produces an inferred function, which is called a classifier (if the output is discrete) or a regression function (if the output is continuous).<br />7.1.1 Supervised Learning Steps:<br />In order to solve a given problem using supervised learning, it is necessary to follow the steps in Figure 7.1.1.<br />Figure 7.1.1: Steps of solving a supervised learning problem.<br />These steps may be described as follows:<br />Determine the type of training examples. Before doing anything else, the engineer should decide what kind of data is to be used as an example. For instance, this might be a single handwritten character, an entire handwritten word, or an entire line of handwriting.<br />Gather a training set. The training set needs to be representative of the real-world use of the function. Thus, a set of input objects is gathered and corresponding outputs are also gathered, either from human experts or from measurements.<br />Determine the input feature representation of the learned function. The accuracy of the learned function depends strongly on how the input object is represented. Typically, the input object is transformed into a feature vector, which contains a number of features that are descriptive of the object. The number of features should not be too large, because of the curse of dimensionality, but the vector should contain enough information to accurately predict the output.<br />Determine the structure of the learned function and corresponding learning algorithm. 
For example, the engineer may choose to use support vector machines or decision trees.<br />Complete the design. Run the learning algorithm on the gathered training set. Some supervised learning algorithms require the user to determine certain control parameters. These parameters may be adjusted by optimizing performance on a subset (called a validation set) of the training set, or via cross-validation.<br />Evaluate the accuracy of the learned function. After parameter adjustment and learning, the performance of the resulting function should be measured on a test set that is separate from the training set [9].<br />Although the previous steps may solve a supervised learning problem effectively, some problems may still arise.<br />7.1.2 Problems in supervised learning<br />Bias-variance tradeoff.<br />Function complexity and amount of training data.<br />Dimensionality of the input space.<br />Noise in the output values.<br />Other factors to consider:<br />Heterogeneity of the data.<br />Redundancy in the data.<br />7.2 Various classifiers<br />This section reviews several types of classifiers commonly used in learning. Classifiers can be roughly separated into three main groups (approaches).<br />Figure 7.2.1: Some of the commonly used classifiers in the supervised learning process.<br />7.2.1 Similarity (Template Matching)<br />Template matching is the most intuitive method: it consists of performing a normalized cross-correlation between a template image (an object in the training set) and a new image to be classified. It is the easiest method to understand and implement. However, template matching is well known to be an expensive operation when used to classify against a large set of images.<br />7.2.2 Probabilistic Classifiers<br />Algorithms of this nature use statistical inference to find the best class for a given instance. Unlike other algorithms, which simply output a "best" class, probabilistic algorithms output a probability of the instance being a member of each of the possible classes. The best class is normally then selected as the one with the highest probability. Such an algorithm has numerous advantages over non-probabilistic classifiers, because it can output a confidence value associated with its choice. Correspondingly, it can abstain when its confidence in choosing any particular output is too low. Because they output probabilities, probabilistic classifiers can be incorporated more effectively into larger machine-learning tasks, in a way that partially or completely avoids the problem of error propagation [11].<br />7.2.3 Decision Boundary Based Classifiers<br />Decision boundary based classifiers are based on the concept of decision planes that define decision boundaries. A decision plane is a plane that separates sets of objects having different class memberships [10].<br />7.3 Classifier Evaluation<br />Classifier performance depends greatly on the characteristics of the data to be classified. There is no single classifier that works best on all given problems. Various empirical tests have been performed to compare classifier performance and to find the characteristics of data that determine classifier performance. Determining a suitable classifier for a given problem is, however, still more an art than a science.<br />There are many measures that can be used to evaluate the quality of a classification system, such as precision and recall, and the receiver operating characteristic (ROC) [9].<br />a. Conclusion<br />In brief, this document covered the main parts, algorithms, and methodologies used by most research in the field of place recognition. It presented the basic steps of place recognition, with brief details on each step, which gives us an insight into the process of place recognition and how it usually works. 
The remaining problem is how to select the proper feature detectors and the proper classifiers to solve the place recognition problem.<br />b. Future Work<br />We are working on survey number 2, which will contain an additional study of each algorithm mentioned and the proposed approaches to solve our problem. After finishing survey #2, we will be able to reduce the effort spent on the research phase and start the design and implementation phase in parallel with the research phase.<br />c. References<br />[1] Computer vision and robot vision. [Online]. Available: http://en.wikipedia.org/wiki/Computer_vision](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-4-2048.jpg)

![[2] SLAM. [Online]. Available: http://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-5-2048.jpg)

![[3] Appearance-Based Place Recognition for Topological Localization, Iwan Ulrich and Illah Nourbakhsh. [Online]. Available: http://www.cis.udel.edu/~cer/arv/readings/paper_ulrich.pdf](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-6-2048.jpg)

![[4] Autonomous Robot [Online]. Available: http://en.wikipedia.org/wiki/Autonomous_robot](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-7-2048.jpg)

![[5] Vision-Based Indoor Place Recognition using Local Features, Muhammed muneeb ullah [Online]. Available: http://www.csc.kth.se/~pronobis/projects/msc/ullah2007msc.pdf](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-8-2048.jpg)

![[6] Local Invariant Feature detectors, Tinne Tuytelaars and Krystian Mikolajczyk [Online]. Available: http://campar.in.tum.de/twiki/pub/Chair/TeachingWs09MATDCV/FT_survey_interestpoints08.pdf](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-9-2048.jpg)

![[7] Comparison of Local Feature descriptors, Subhransu Maji [Online]. Available: http://www.eecs.berkeley.edu/~yang/courses/cs294-6/maji-presentation.pdf<br />[8] “Evaluation of Bayes, ICA, PCA and SVM Methods for Classification”, V.C.Chen. Radar Division, US Naval Research Laboratory<br />[9] http://en.wikipedia.org/wiki/Supervised_learning<br />[10] http://www.statsoft.com/textbook/support-vector-machines/<br />[11] http://en.wikipedia.org/wiki/Classification_(machine_learning)<br />](https://image.slidesharecdn.com/survey1projectoverview-101216115306-phpapp02/75/Survey-1-project-overview-10-2048.jpg)
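As a supplementary illustration of the similarity (template matching) classifier described in Section 7.2.1, the following minimal sketch scores a query image against one stored template per place using zero-mean normalized cross-correlation and returns the best-scoring label. It is only a sketch under stated assumptions: images are tiny grayscale patches given as flat lists of equal length, and the function names, the place labels, and the pixel values are hypothetical and do not come from the surveyed work.

```python
import math


def normalized_cross_correlation(a, b):
    """Zero-mean normalized cross-correlation of two equally sized
    grayscale images given as flat lists of pixel values.
    Returns a score in [-1, 1]; flat (constant) images score 0.0."""
    if len(a) != len(b) or not a:
        raise ValueError("images must be non-empty and of equal size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return numerator / denominator if denominator > 0 else 0.0


def classify_by_template(image, templates):
    """Compare the image with every (label, template) pair and return
    the label and score of the best match."""
    best_label, best_score = None, -2.0  # below the minimum possible score
    for label, template in templates:
        score = normalized_cross_correlation(image, template)
        if score > best_score:
            best_label, best_score = label, score
    return best_label, best_score


# Hypothetical 3x3 training templates, one per place (assumed data).
templates = [
    ("corridor", [10, 10, 200, 200, 10, 10, 200, 200, 10]),
    ("office", [200, 10, 10, 10, 200, 10, 10, 10, 200]),
]

# Query: the corridor pattern with every pixel brightened by 20.
query = [30, 30, 220, 220, 30, 30, 220, 220, 30]
label, score = classify_by_template(query, templates)
print(label, round(score, 3))
```

Because the mean is subtracted and the result is normalized, a uniform brightness offset does not change the score, which is one reason normalized cross-correlation is preferred over a raw pixel difference. The loop also makes the cost noted in Section 7.2.1 visible: every query is compared against every stored template, so the work grows linearly with the size of the training set.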