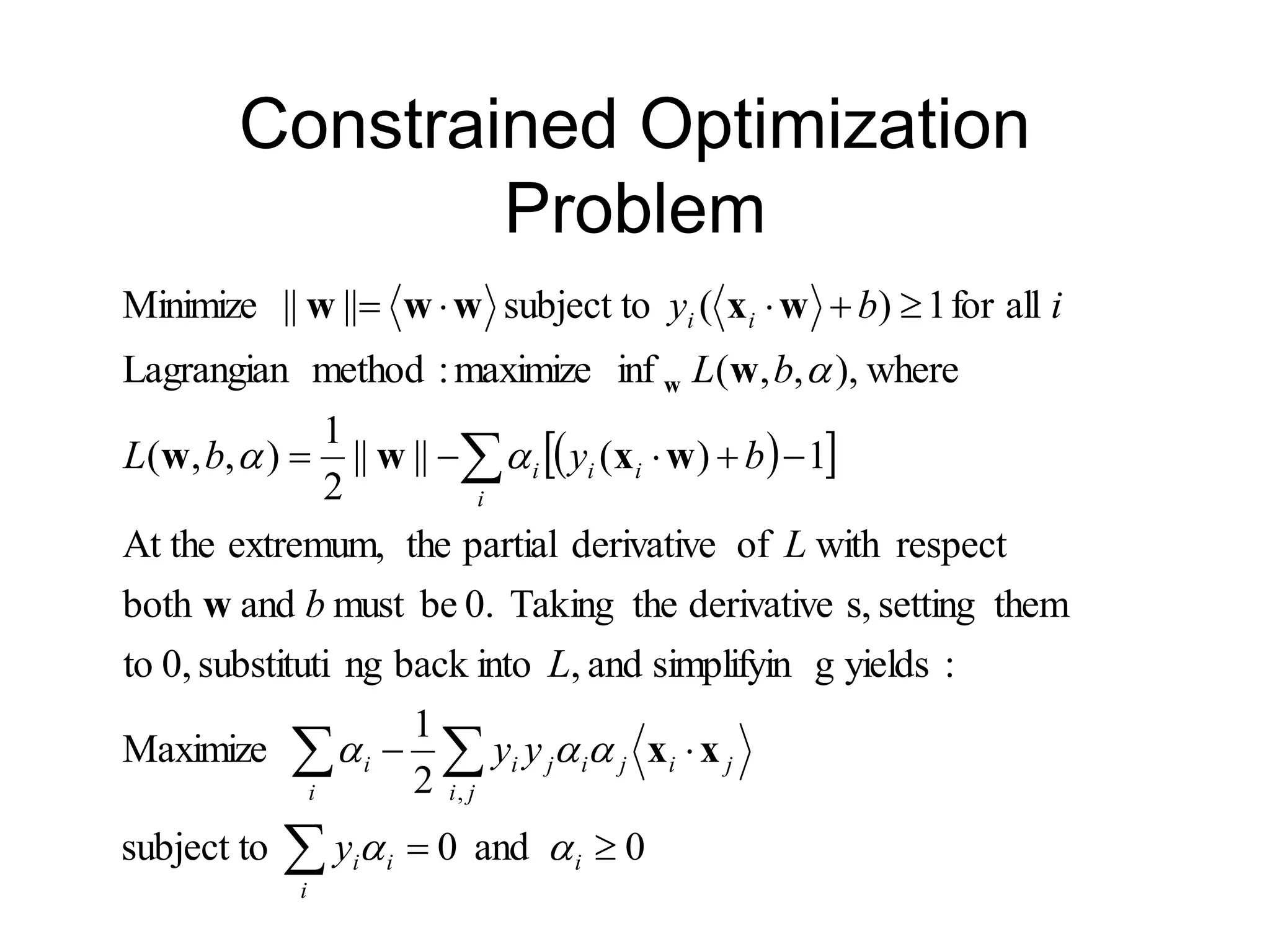

This document provides an introduction to support vector machines (SVMs). It discusses key concepts such as using a max-margin classifier to find the optimal separating hyperplane, using Lagrangian multipliers to solve constrained optimization problems, projecting data into higher dimensions to make it linearly separable, and how complexity depends on training examples rather than dimensionality. It also covers examples of using SVMs for classification tasks like protein localization and discusses overfitting issues.