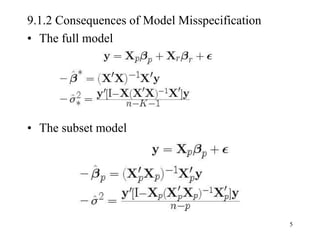

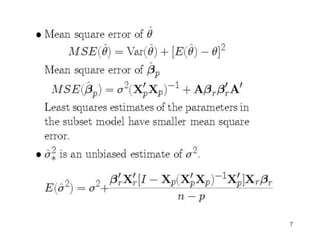

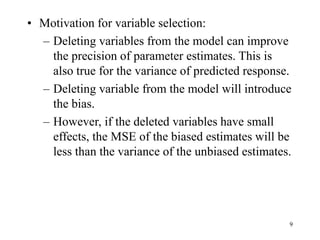

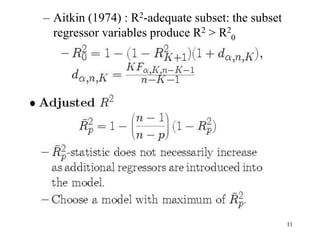

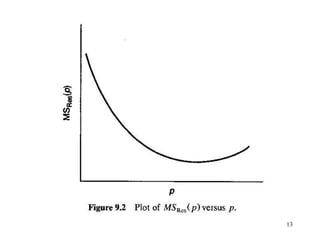

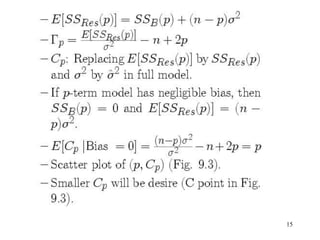

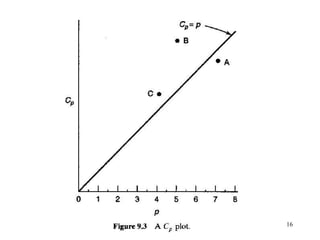

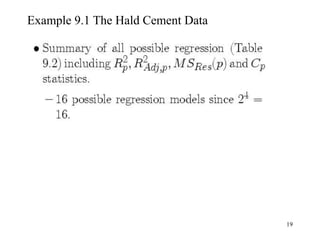

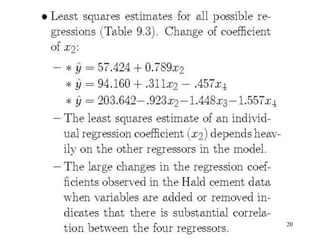

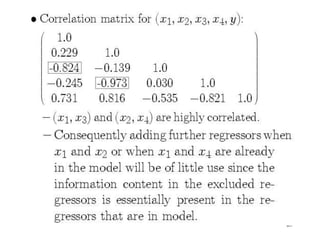

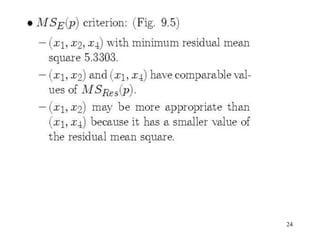

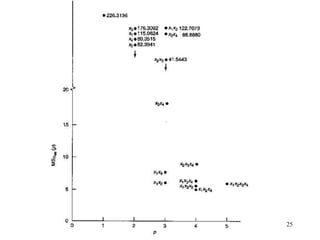

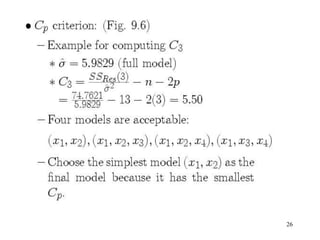

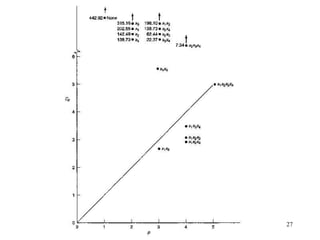

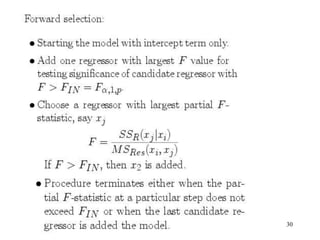

This document discusses variable selection and model building in regression. It introduces the variable selection problem, which is a trade-off: including more variables retains more information, while using fewer variables reduces variance. Several variable selection algorithms are presented, and they may select different subsets of variables from the same data. An iterative approach is recommended, with model assumptions checked at each stage. Variable selection aims to improve precision, but it can introduce bias if important variables are removed. Common evaluation criteria such as R-squared and mean squared error are covered, along with computational techniques: all-possible regressions, forward selection, backward elimination, and stepwise regression.
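The summary names all-possible regressions, forward selection, and the R-squared and mean-squared-error criteria without showing how they fit together. The sketch below is a minimal pure-Python illustration under stated assumptions: ordinary least squares with an intercept, the residual mean square (MS_Res) as the mean-squared-error criterion, and a partial F-to-enter cutoff of `f_in=4.0` for forward selection. The function names, the toy data, and the cutoff are hypothetical choices for illustration, not taken from the slides.

```python
from itertools import combinations


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def sse(X, y, cols):
    """Residual sum of squares for OLS of y on an intercept plus the predictors in cols."""
    rows = [[1.0] + [float(x[j]) for j in cols] for x in X]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[k] for r in rows) for k in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)  # normal equations X'X b = X'y
    return sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))


def criteria(X, y, cols):
    """R-squared and residual mean square (MS_Res) for the subset cols."""
    n, p = len(y), len(cols) + 1  # p counts the intercept
    ybar = sum(y) / n
    sst = sum((yi - ybar) ** 2 for yi in y)
    res = sse(X, y, cols)
    return {"R2": 1 - res / sst, "MSRes": res / (n - p)}


def all_possible_regressions(X, y):
    """Fit every subset of the k predictors (2**k models) and report its criteria."""
    k = len(X[0])
    return {cols: criteria(X, y, list(cols))
            for size in range(k + 1) for cols in combinations(range(k), size)}


def forward_selection(X, y, f_in=4.0):
    """Add the predictor with the largest partial F, one at a time, while F >= f_in.

    f_in = 4.0 is an assumed cutoff chosen only for illustration.
    """
    n, k = len(y), len(X[0])
    selected = []
    while len(selected) < k:
        current = sse(X, y, selected)
        best_f, best_j = None, None
        for j in range(k):
            if j in selected:
                continue
            cand = selected + [j]
            df = n - len(cand) - 1  # residual degrees of freedom of the larger model
            if df <= 0:
                continue
            new = sse(X, y, cand)
            f = float("inf") if new == 0 else (current - new) / (new / df)
            if best_f is None or f > best_f:
                best_f, best_j = f, j
        if best_f is None or best_f < f_in:
            break
        selected.append(best_j)
    return selected


# Hypothetical toy data: y = 2*x0 + 1.5*x1 + small noise; x2 is irrelevant.
X = [[1, 3, 2], [2, 1, 7], [3, 4, 1], [4, 1, 8], [5, 5, 2],
     [6, 9, 8], [7, 2, 1], [8, 6, 8], [9, 5, 2], [10, 3, 8]]
y = [6.8, 5.3, 12.1, 9.1, 17.7, 25.6, 16.7, 25.2, 25.4, 24.6]

if __name__ == "__main__":
    table = all_possible_regressions(X, y)
    for cols, crit in sorted(table.items(), key=lambda kv: kv[1]["MSRes"]):
        print(cols, round(crit["R2"], 4), round(crit["MSRes"], 4))
    print("forward selection:", forward_selection(X, y))
```

All-possible regressions grows as 2**k fits, which is why the sequential methods exist. Backward elimination can be sketched with the same `sse` helper by starting from all k predictors and removing the one with the smallest partial F while it falls below an F-to-remove cutoff.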