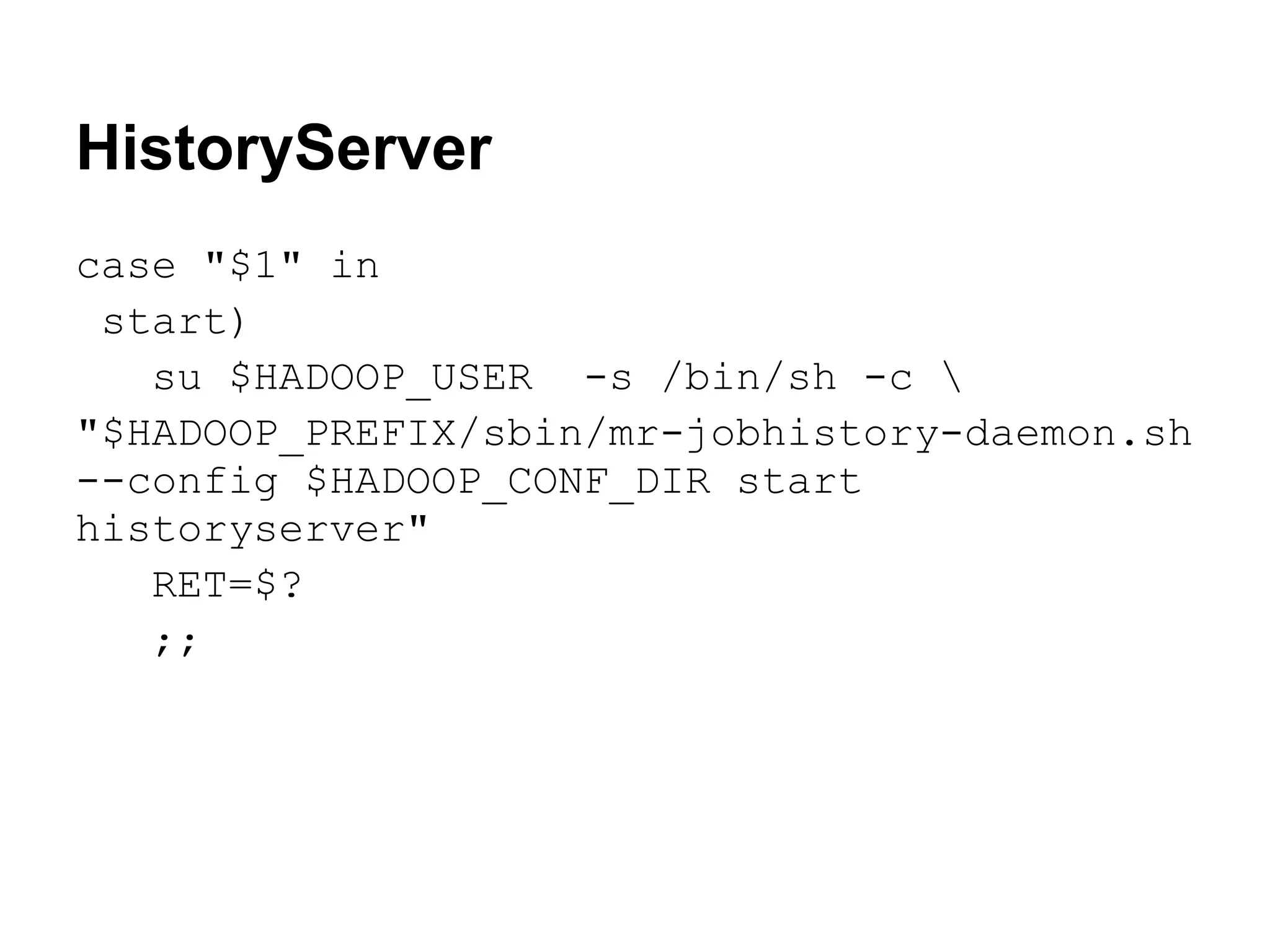

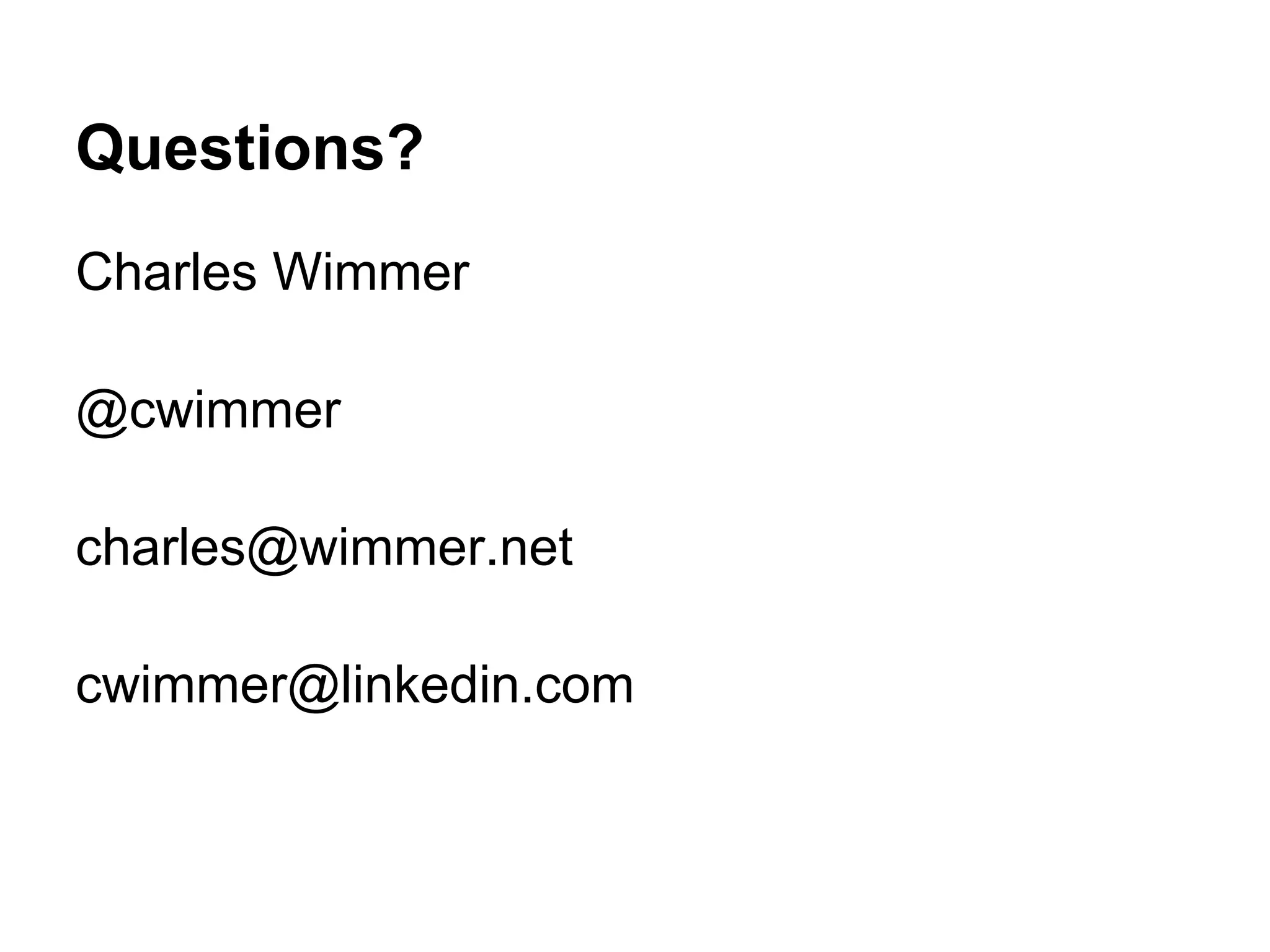

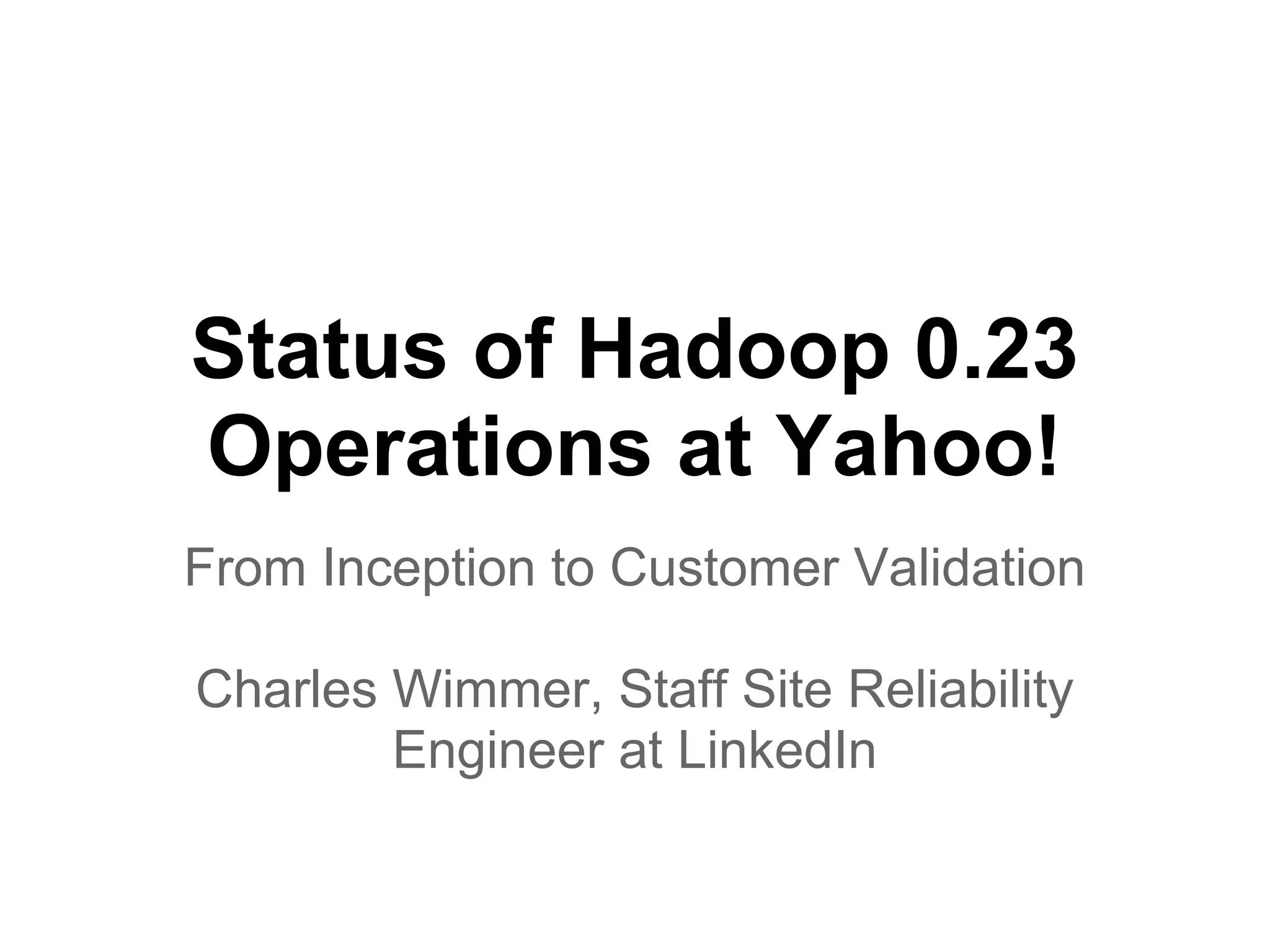

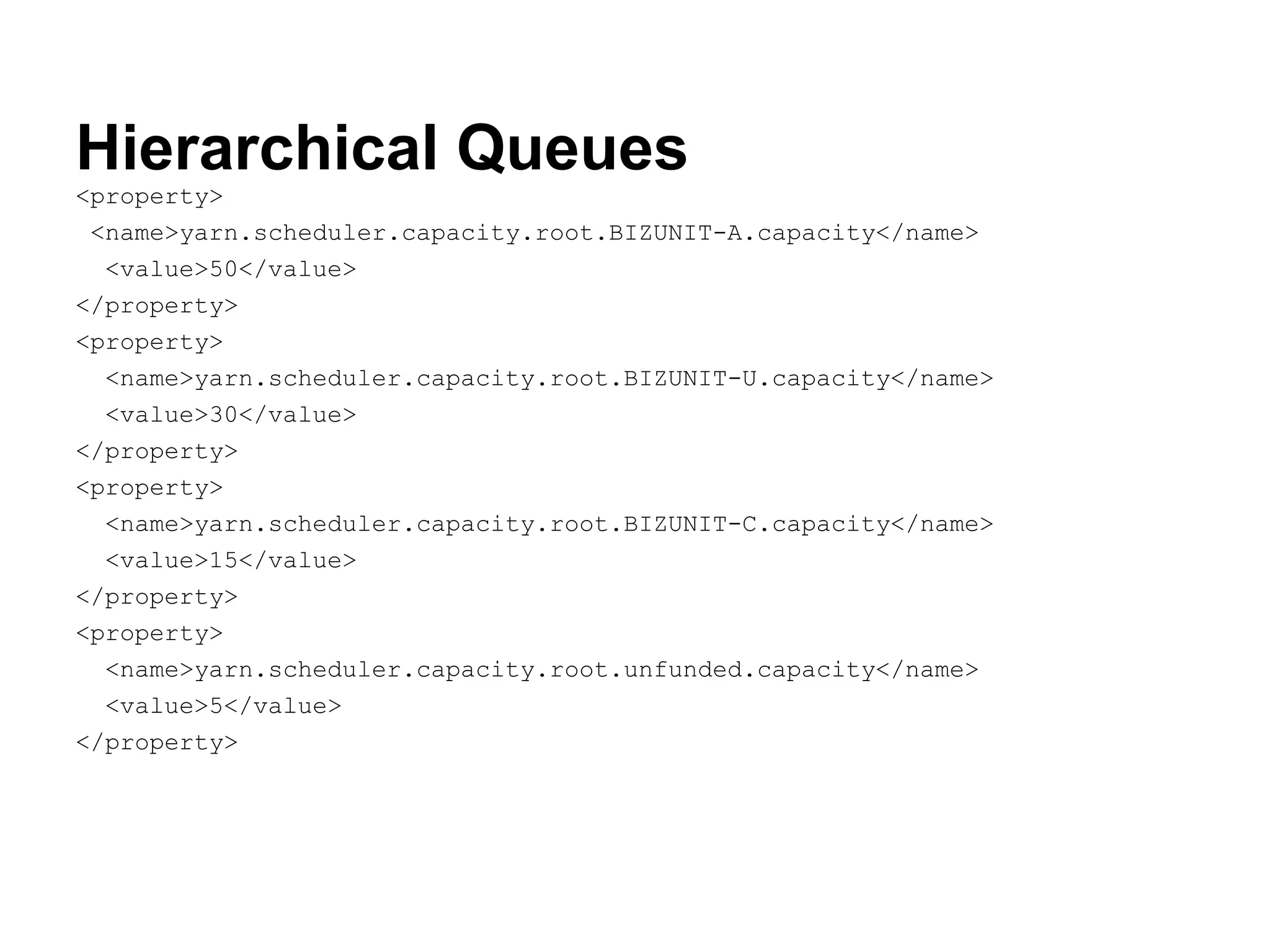

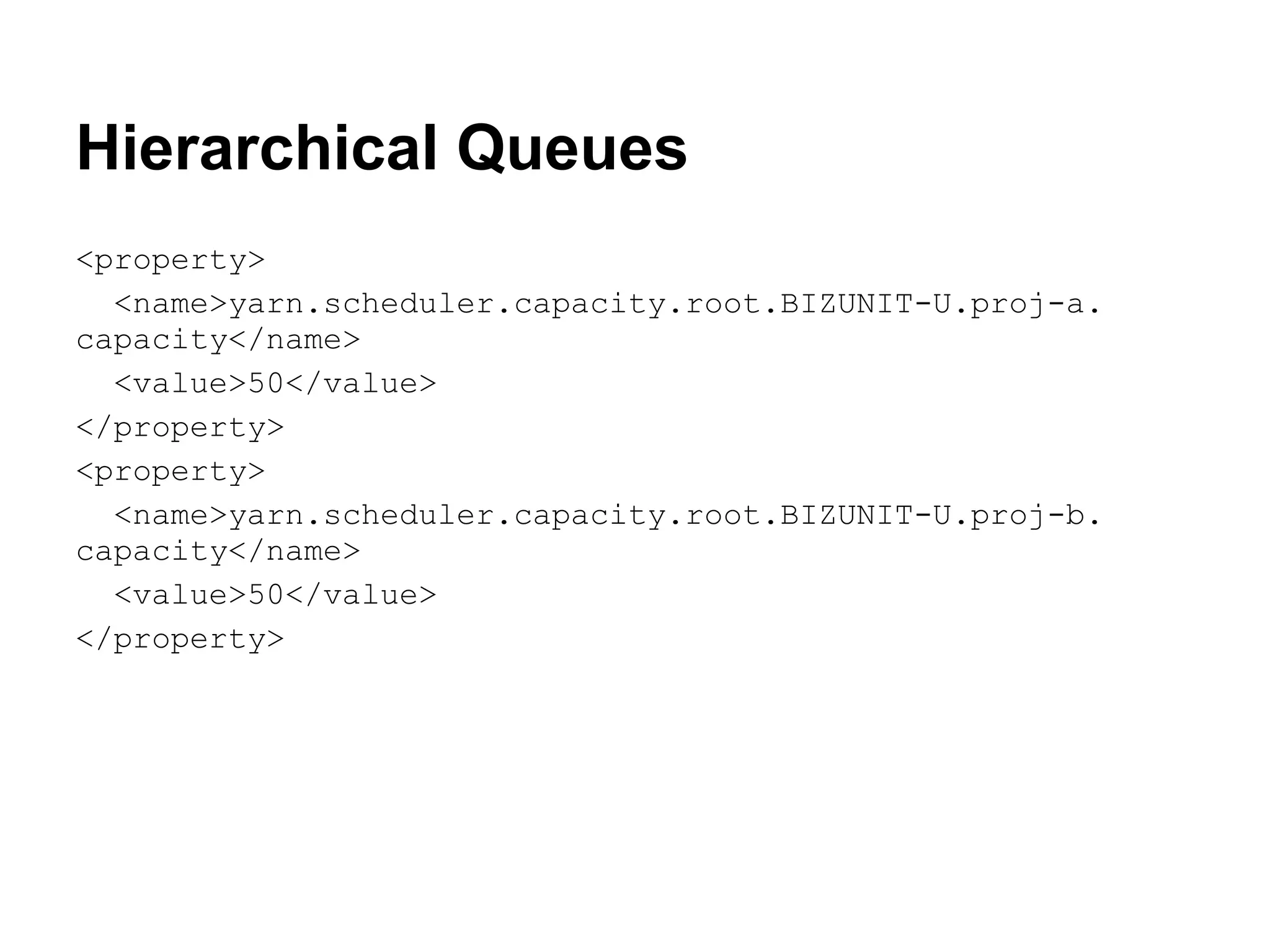

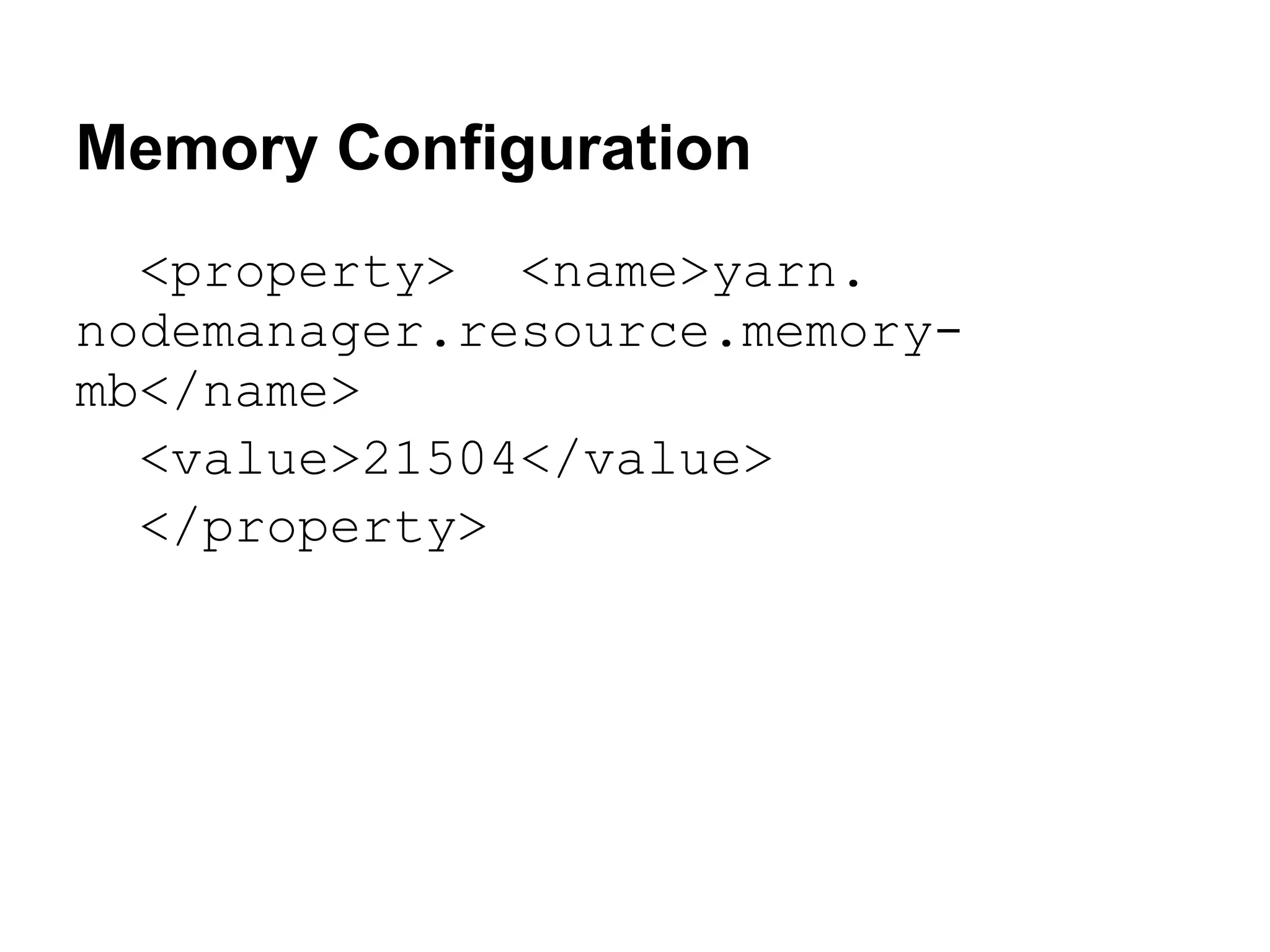

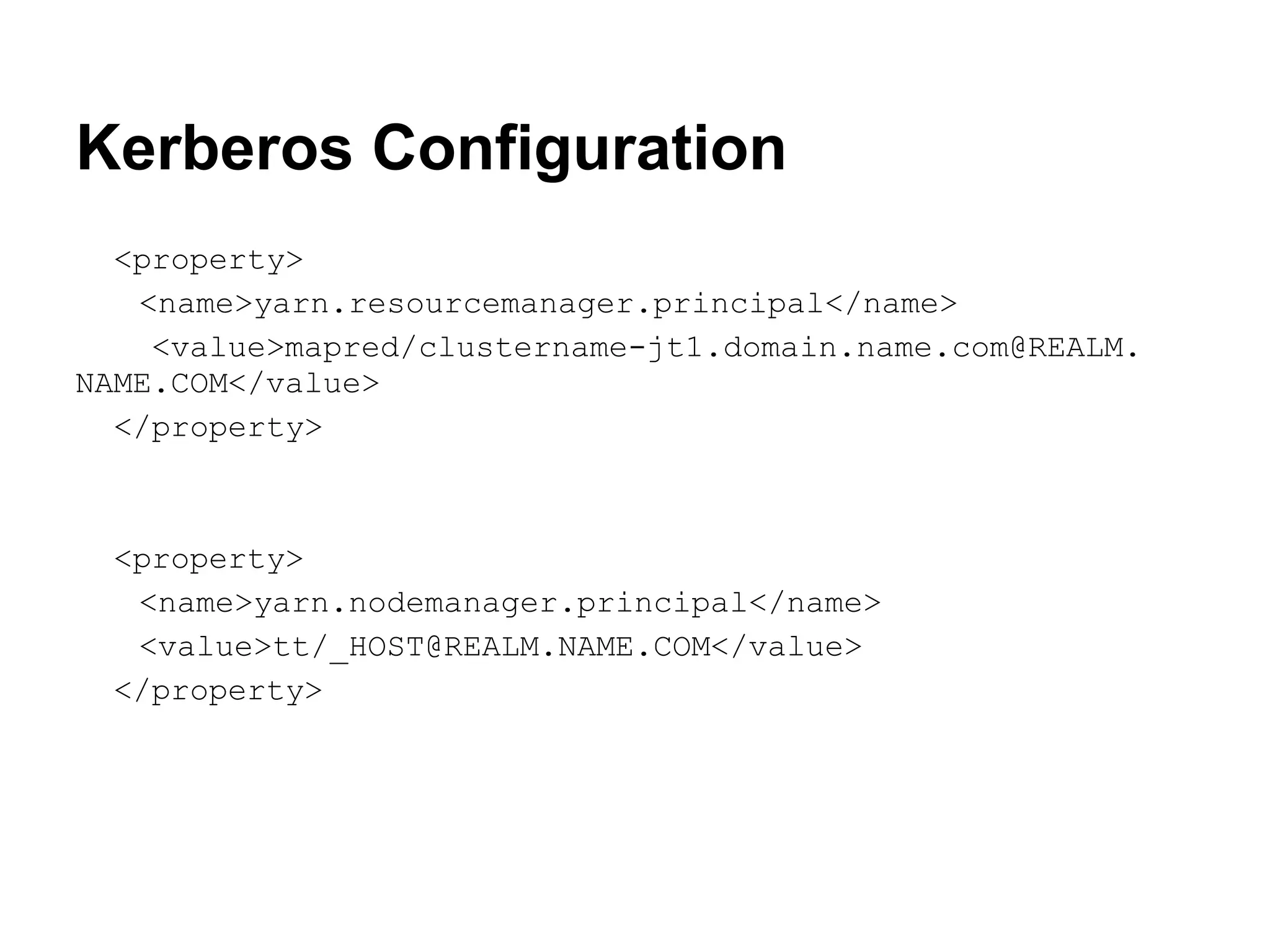

This document summarizes how Yahoo! upgraded its Hadoop clusters from version 0.20 to 0.23. The process involved providing a 420-node sandbox cluster running 0.23 for customer testing, configuring queues and memory settings, and adding Kerberos authentication. The init scripts for the DataNode, NameNode, SecondaryNameNode, HistoryServer, ResourceManager and NodeManager were modified to start the new Hadoop version.

![DataNode/NameNode
start_20(){
. . .
}
start_next(){
. . .
}
if [ -x /home/gs/hadoop/current/bin/hdfs ] ; then
start_next $@
else
start_20 $@
fi](https://image.slidesharecdn.com/statusofhadoop23opsatyahoo-120620141901-phpapp01/75/Status-of-Hadoop-0-23-Operations-at-Yahoo-14-2048.jpg)
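The DataNode/NameNode slide picks a start routine by checking whether the `hdfs` binary exists and is executable under the current install. A minimal sketch of that dispatch follows. The bodies of `start_20` and `start_next` are elided on the slide, so the `echo` placeholders here are assumptions, as are the `dispatch_start` wrapper and the overridable `HDFS_BIN` variable, which exist only to make the logic testable.

```shell
#!/bin/sh
# Path probed on the slide. Making it overridable is an assumption for testing.
HDFS_BIN="${HDFS_BIN:-/home/gs/hadoop/current/bin/hdfs}"

start_20() {
    # Placeholder: the slide elides the real 0.20 start logic.
    echo "start_20 $*"
}

start_next() {
    # Placeholder: the slide elides the real 0.23 start logic.
    echo "start_next $*"
}

# Hypothetical wrapper around the slide's if/else.
dispatch_start() {
    if [ -x "$HDFS_BIN" ]; then
        # bin/hdfs is present and executable: treat the install as the new release.
        start_next "$@"
    else
        start_20 "$@"
    fi
}
```

An init script built this way would end with `dispatch_start "$@"`. Quoting `"$@"` keeps arguments that contain spaces intact, which the unquoted `$@` on the slide would not.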

![SecondaryNameNode
function clean_checkpoint_dir {
CHECKPOINT_DIR=/grid/0/tmp/hadoop-hdfs/dfs/namesecondary/current
if [ -d "$CHECKPOINT_DIR" ] ; then
DELETE_DIR=`mktemp -p /grid/0/tmp -d delete-XXXXXX`
if [ $? -eq 0 ] ; then
echo "moving $CHECKPOINT_DIR to ${DELETE_DIR}/ "
mv $CHECKPOINT_DIR ${DELETE_DIR}/
cat<<EOF | at now+1min 2>/dev/null
if [ -d $DELETE_DIR ] ; then
rm -rf --preserve-root $DELETE_DIR
fi
EOF
fi
fi
}](https://image.slidesharecdn.com/statusofhadoop23opsatyahoo-120620141901-phpapp01/75/Status-of-Hadoop-0-23-Operations-at-Yahoo-15-2048.jpg)
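The SecondaryNameNode slide moves the old checkpoint directory into a freshly created holding directory and then queues its removal with `at`, presumably so that startup is not held up by deleting a large tree. The sketch below splits that pattern into two hypothetical helpers, `move_aside` and `schedule_delete`. Neither name appears on the slide, and taking the paths as parameters is an assumption made so the logic can be exercised outside `/grid/0/tmp`. It relies on GNU `mktemp` (`-p`) and GNU `rm` (`--preserve-root`), as the original does.

```shell
#!/bin/sh
# Move a directory into a new holding directory and print the holding path.
# Prints nothing and succeeds if the source directory does not exist.
move_aside() {
    src=$1      # directory to retire, e.g. the checkpoint dir
    parent=$2   # parent for the holding dir; same filesystem keeps mv cheap
    [ -d "$src" ] || return 0
    holding=$(mktemp -d -p "$parent" delete-XXXXXX) || return 1
    mv "$src" "$holding/" || return 1
    echo "$holding"
}

# Queue removal of the holding directory one minute from now via at(1).
# Assumes the path contains no single quotes, which holds for mktemp output
# under a parent such as /grid/0/tmp.
schedule_delete() {
    dir=$1
    echo "[ -d '$dir' ] && rm -rf --preserve-root '$dir'" | at now + 1 minute 2>/dev/null
}
```

With the slide's paths, usage would look like `d=$(move_aside /grid/0/tmp/hadoop-hdfs/dfs/namesecondary/current /grid/0/tmp)` followed by `[ -n "$d" ] && schedule_delete "$d"`.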