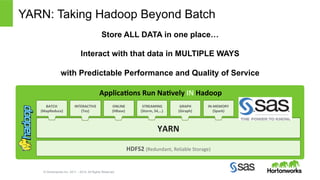

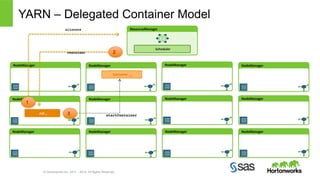

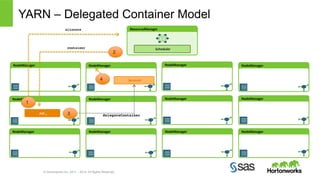

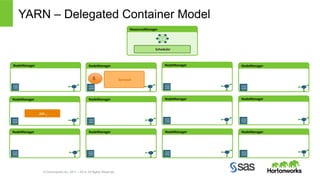

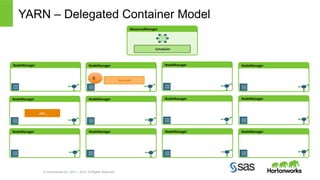

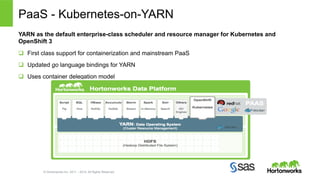

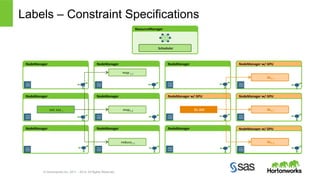

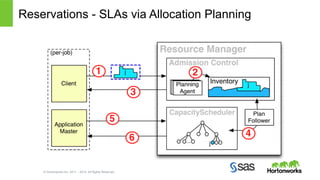

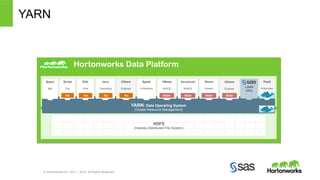

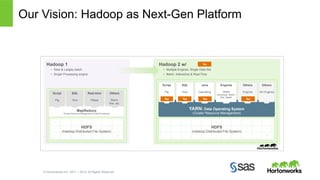

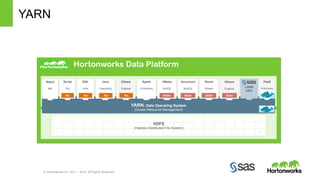

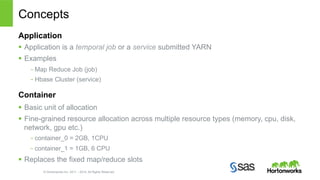

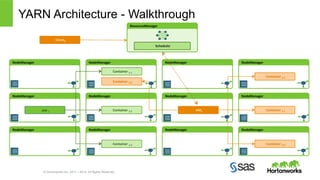

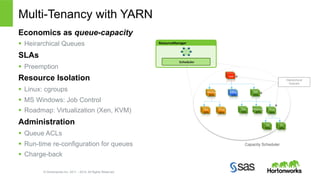

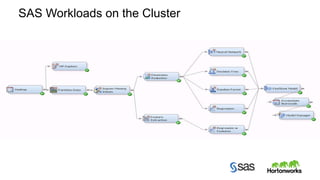

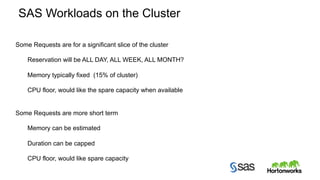

This deck covers combining SAS high-performance capabilities with Hadoop YARN. It introduces YARN and explains how it lets SAS workloads run on a Hadoop cluster alongside other workloads. It then covers resource settings for SAS workloads on YARN and upcoming YARN features such as delegated containers and Kubernetes integration.

![SAS Workloads – Resource Settings

if [ "$USER" = "lasradm" ]; then

# Custom settings for running under the lasradm account.

export TKMPI_ULIMIT="-v 50000000"

export TKMPI_MEMSIZE=50000

export TKMPI_CGROUP="cgexec -g cpu:75"

fi

# if [ "$TKMPI_APPNAME" = "lasr" ]; then

# Custom settings for a lasr process running under any account.

# export TKMPI_ULIMIT="-v 50000000"

# export TKMPI_MEMSIZE=50000

# export TKMPI_CGROUP="cgexec -g cpu:75"

Copyright © 2014, SAS Institute Inc. All rights reserved.](https://image.slidesharecdn.com/hortonworkssaswebinar-141105145957-conversion-gate01/85/Combine-SAS-High-Performance-Capabilities-with-Hadoop-YARN-23-320.jpg)
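
The per-account branch on the slide can be sketched as a small, runnable shell fragment. This is a minimal sketch rather than the shipped SAS settings file. The wrapper function `apply_resource_settings` is a hypothetical name added here only so the logic can be exercised in isolation. The comments on units are assumptions: the `-v` value is read as it would be by the shell's `ulimit -v` (kilobytes of virtual memory), and `TKMPI_MEMSIZE` is assumed to be in megabytes. The `cgexec -g cpu:75` prefix uses the standard `cgexec -g <controllers>:<path>` form, so `75` is taken to be the name of a cgroup under the `cpu` controller that must already exist.

```shell
#!/bin/sh
# Hypothetical wrapper around the slide's per-account branch.
# The variable names and values come from the slide; the function name does not.
apply_resource_settings() {
    if [ "$USER" = "lasradm" ]; then
        # Custom settings for running under the lasradm account.

        # Assumed to be passed to ulimit: -v caps virtual memory, in KB.
        export TKMPI_ULIMIT="-v 50000000"

        # Assumed to be the memory size to request, in MB.
        export TKMPI_MEMSIZE=50000

        # Command prefix that runs the process in cgroup "75"
        # of the cpu controller.
        export TKMPI_CGROUP="cgexec -g cpu:75"
    fi
}
```

Calling `apply_resource_settings` with `USER=lasradm` exports the three variables; for any other account the function leaves the environment untouched, which mirrors the slide's intent that the custom limits apply only to that account.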