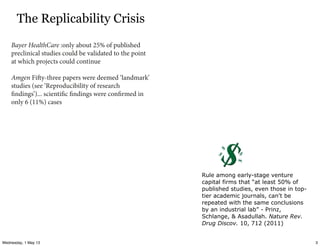

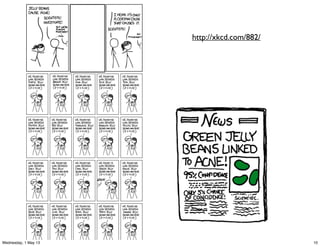

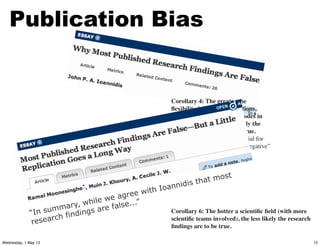

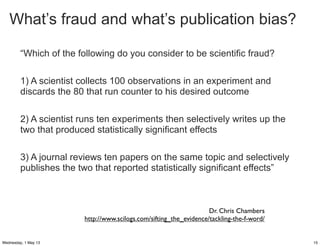

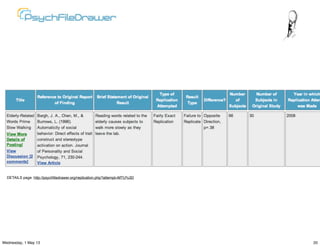

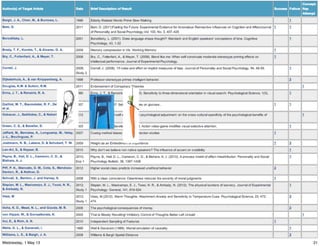

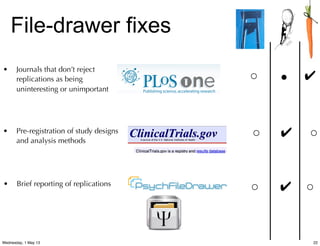

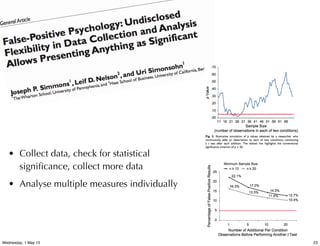

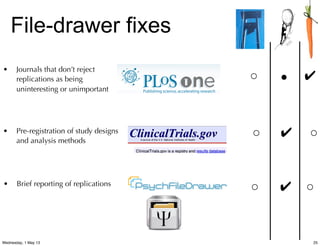

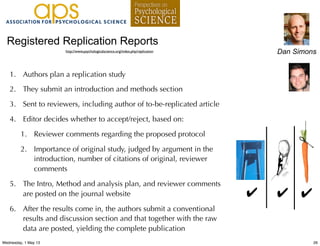

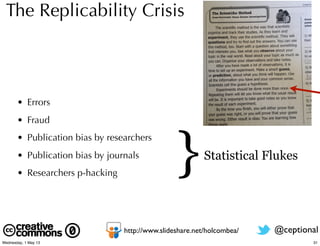

This document discusses the replicability crisis in science. Textbooks teach that replicating experiments is essential, yet few published studies actually report replications. Without replication, practices such as p-hacking and distortions such as publication bias go unchecked and inflate the false-positive rate of the published literature. The causes identified include errors, fraud, selective publication by researchers and journals, and the exploitation of flexibility in data analysis. Proposed solutions include conducting and publishing more replication studies (for example, as registered replication reports), pre-registering study designs, and making data and analyses openly available through open-science practices.
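To make the claim about inflated false-positive rates concrete, here is a minimal simulation sketch. It is not taken from the slides. It assumes one specific form of analytic flexibility: a researcher measures several independent outcomes when no true effect exists and reports the study as significant if any outcome reaches p < 0.05. The function names, the sample size of 20, the five outcomes, and the z-test with known unit variance are all illustrative assumptions.

```python
import math
import random


def two_sided_p(sample):
    """Two-sided z-test p-value for H0: mean = 0, assuming known unit variance."""
    n = len(sample)
    z = sum(sample) / math.sqrt(n)  # equals sample mean times sqrt(n)
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_outcomes, n_studies=5000, n_per_study=20,
                        alpha=0.05, seed=0):
    """Share of null-effect studies declared significant.

    Hypothetical setup: each study measures `n_outcomes` independent
    outcomes, all drawn from N(0, 1), so every "finding" is a false positive.
    A study counts as significant if its smallest p-value is below alpha.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = [
            two_sided_p([rng.gauss(0.0, 1.0) for _ in range(n_per_study)])
            for _ in range(n_outcomes)
        ]
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


honest = false_positive_rate(n_outcomes=1)  # one pre-specified outcome
hacked = false_positive_rate(n_outcomes=5)  # report whichever outcome "works"

print(f"one pre-specified outcome: {honest:.3f}")
print(f"best of five outcomes:     {hacked:.3f}")
```

With one pre-specified outcome, the simulated rate should sit near the nominal 0.05. With five independent outcomes, the theoretical rate is 1 - 0.95^5, or about 0.23, which is why pre-registering the outcome of interest limits this kind of inflation.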