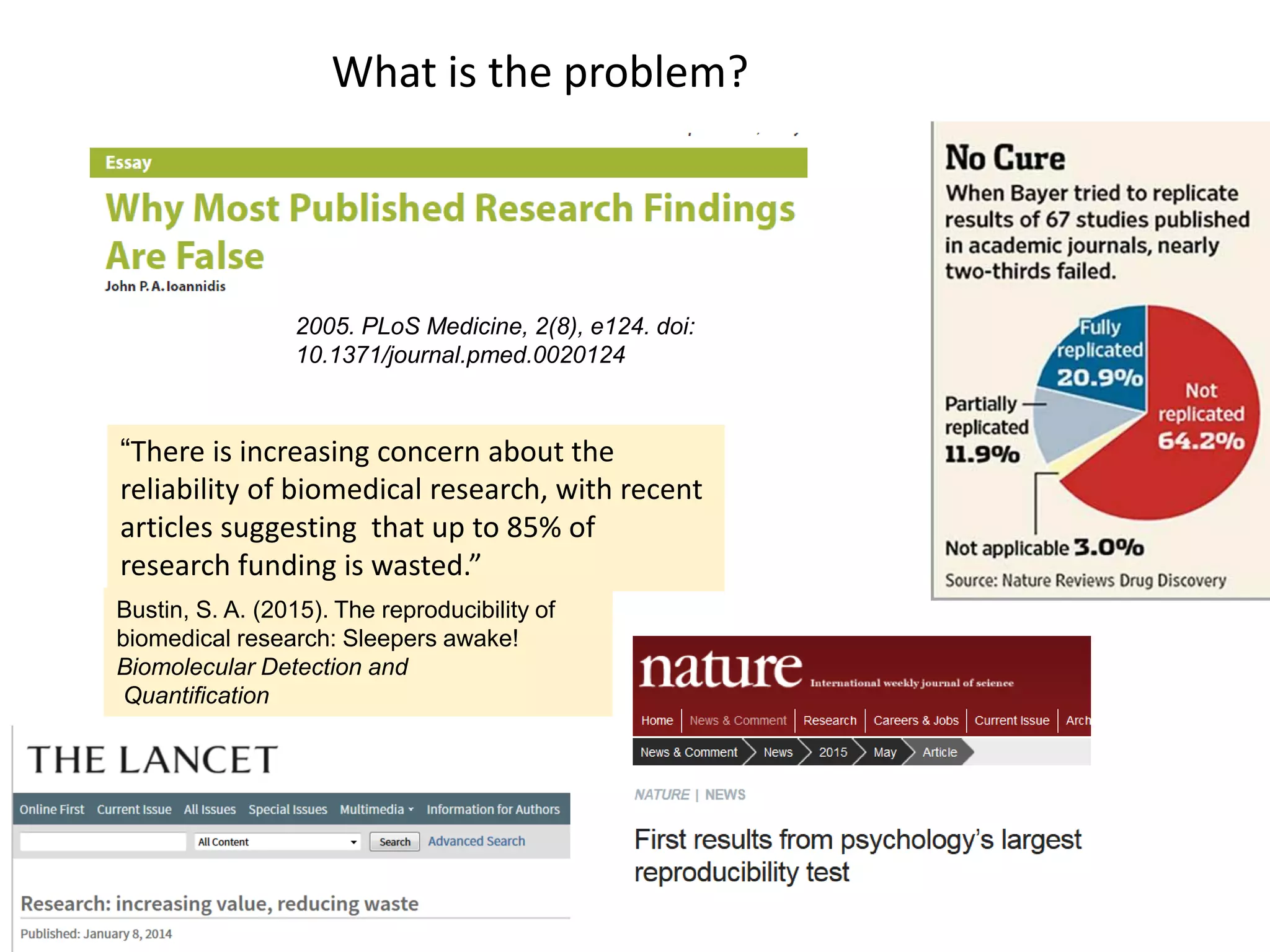

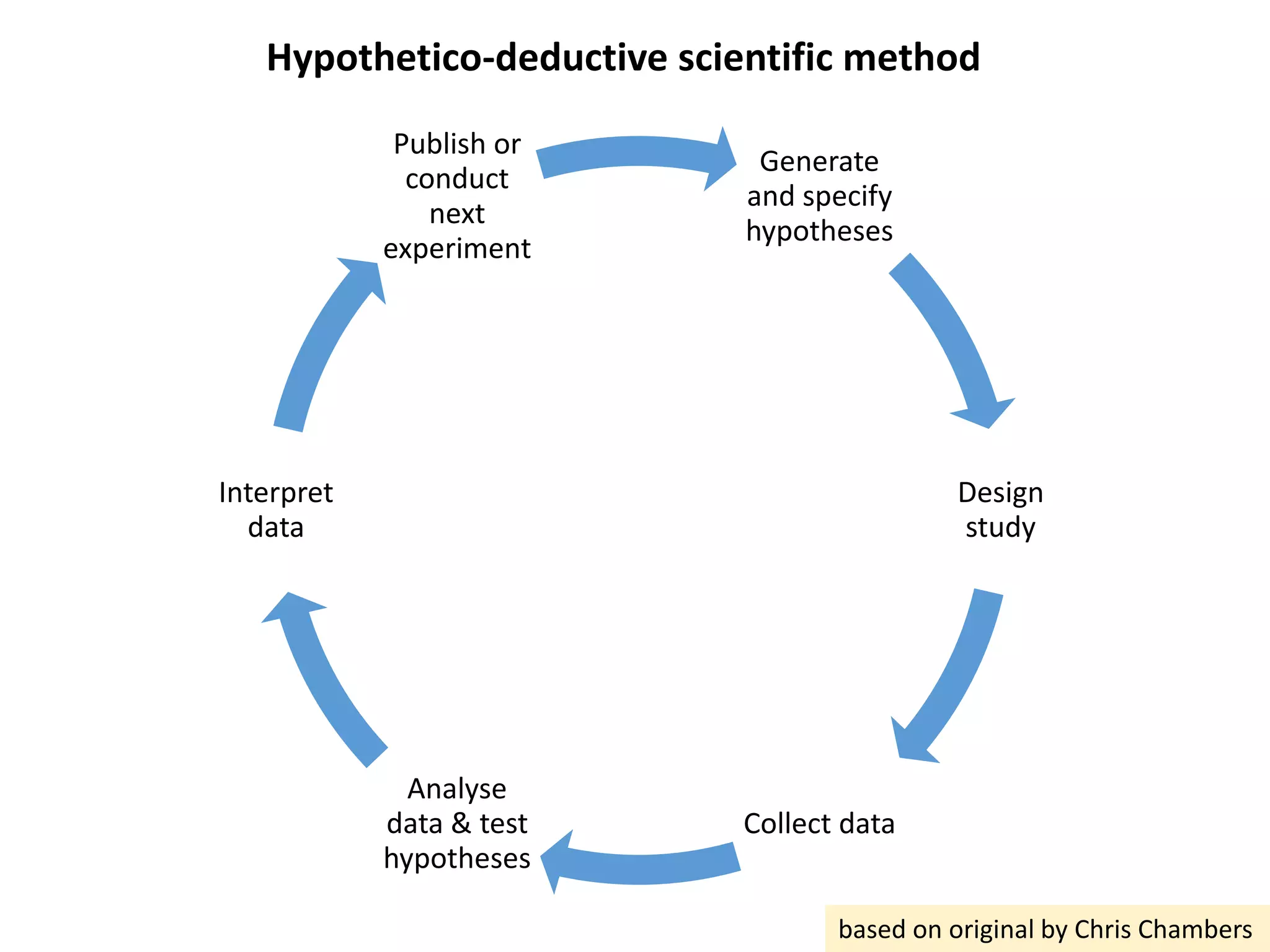

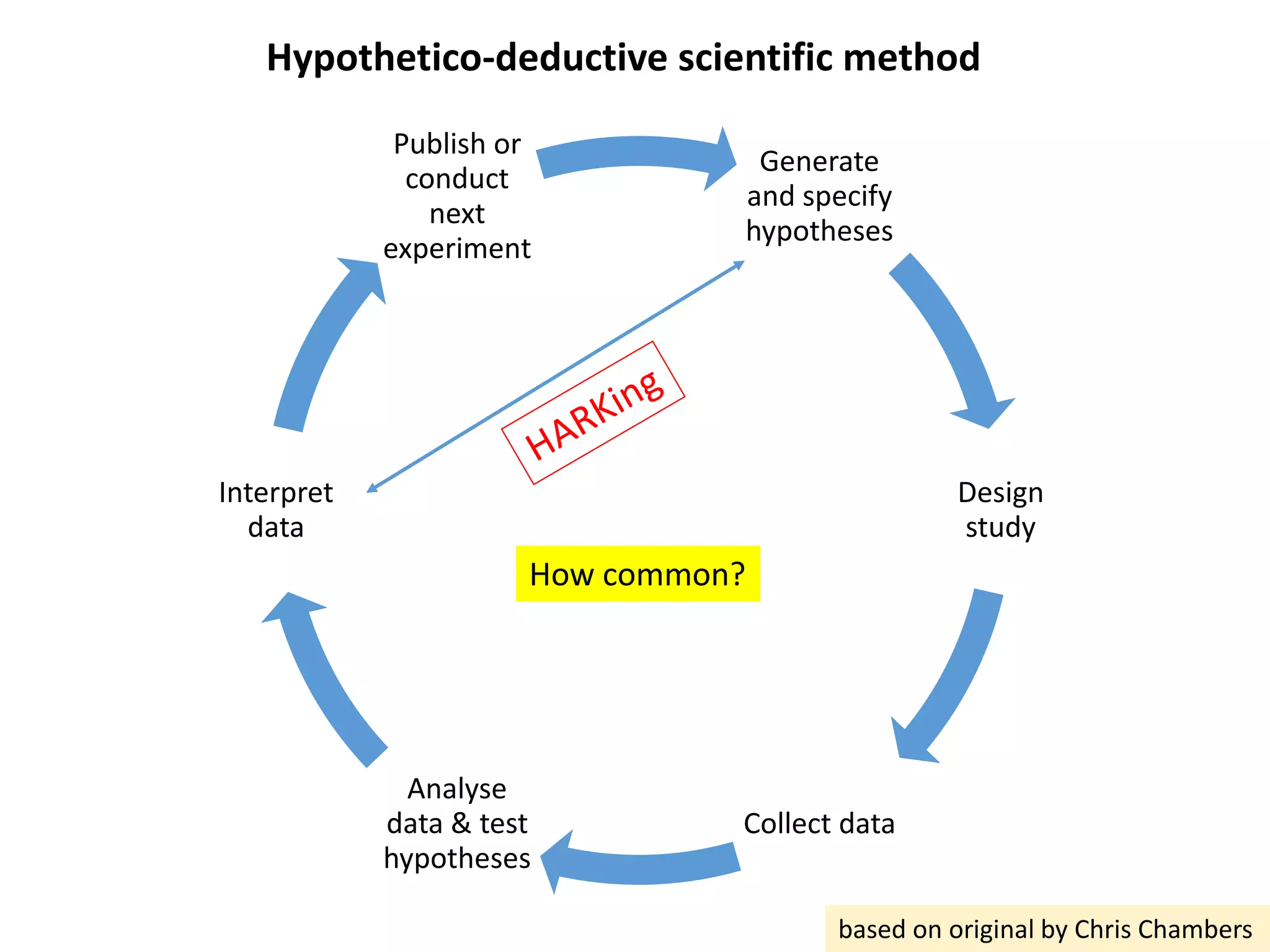

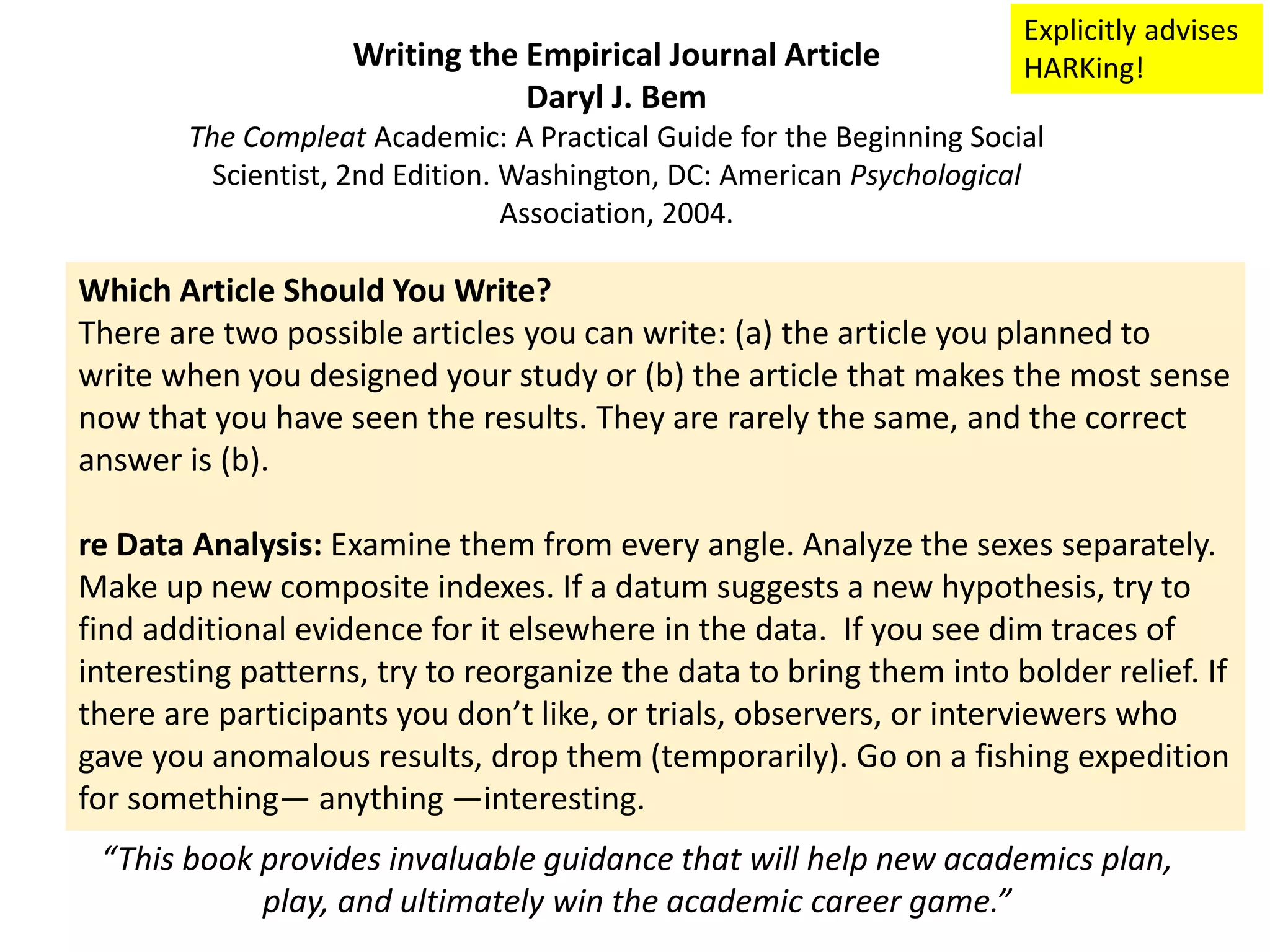

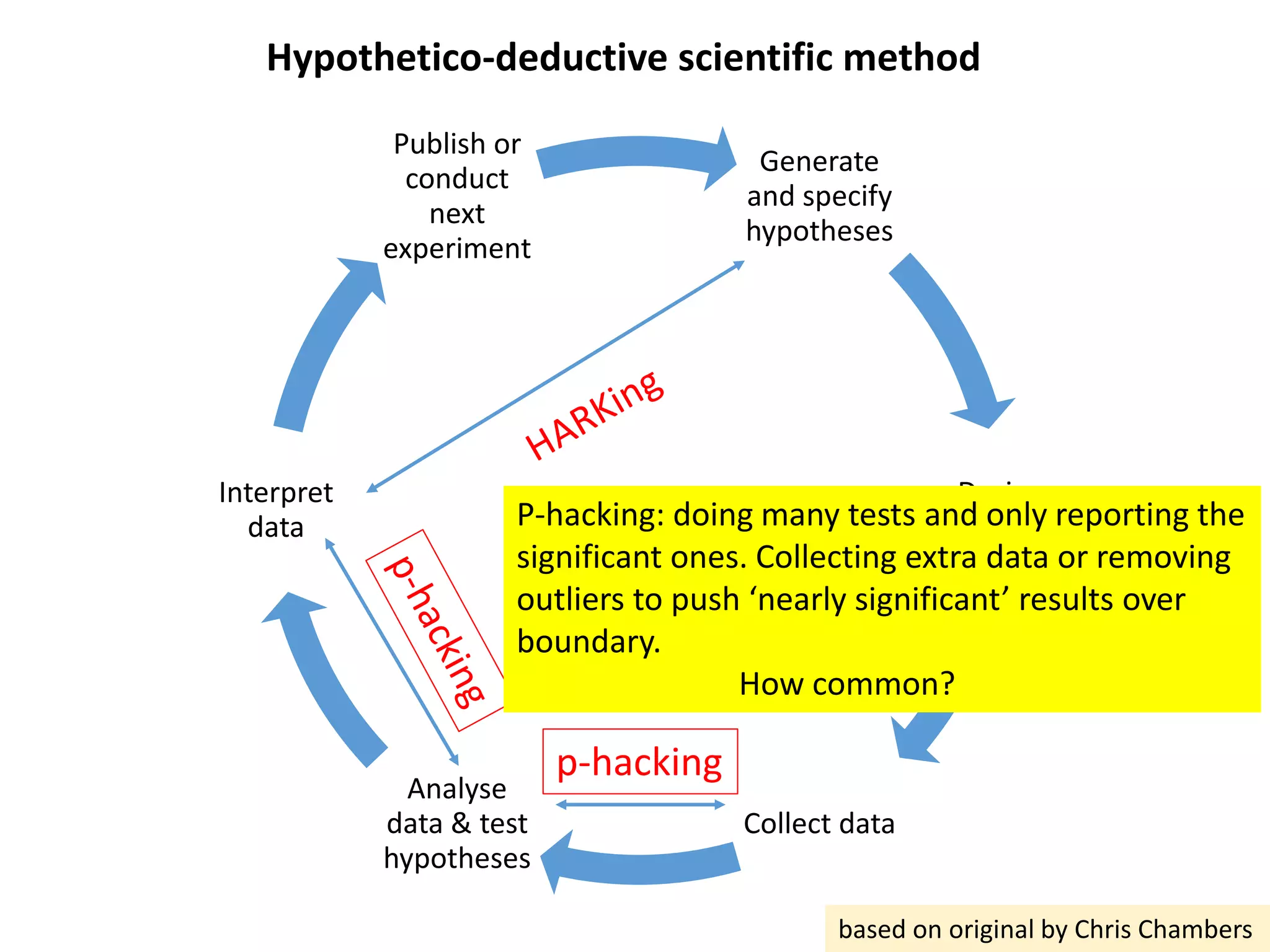

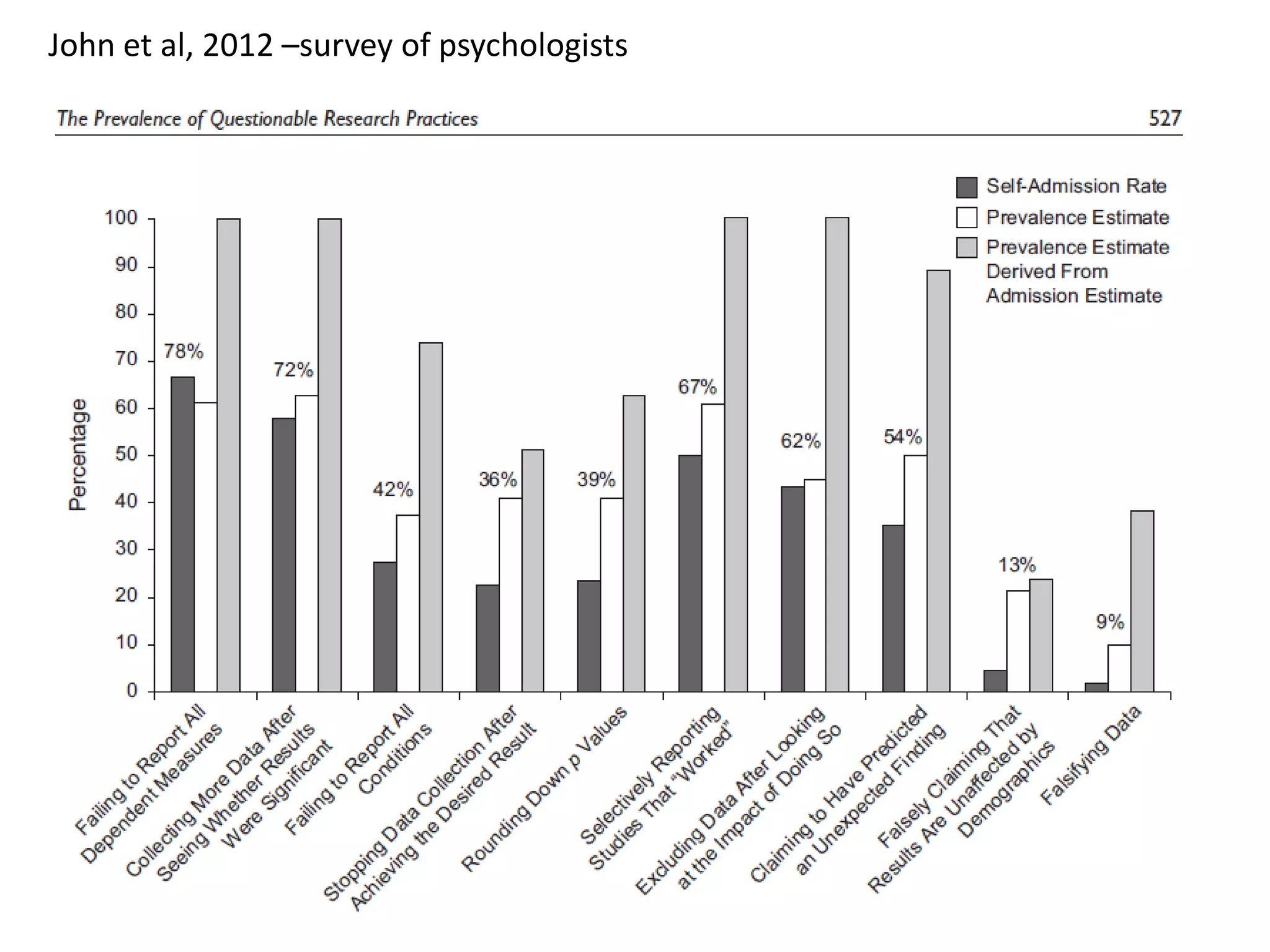

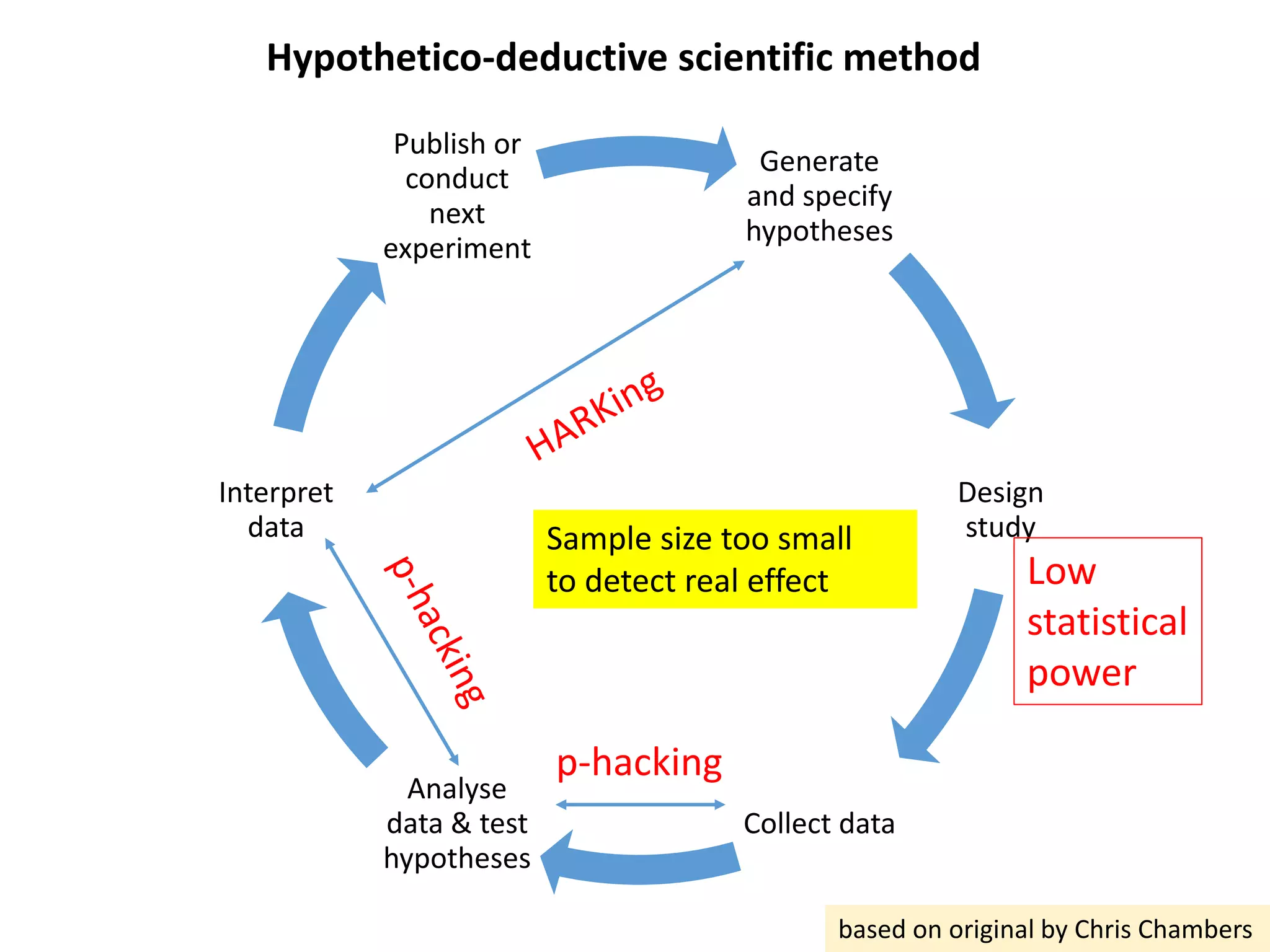

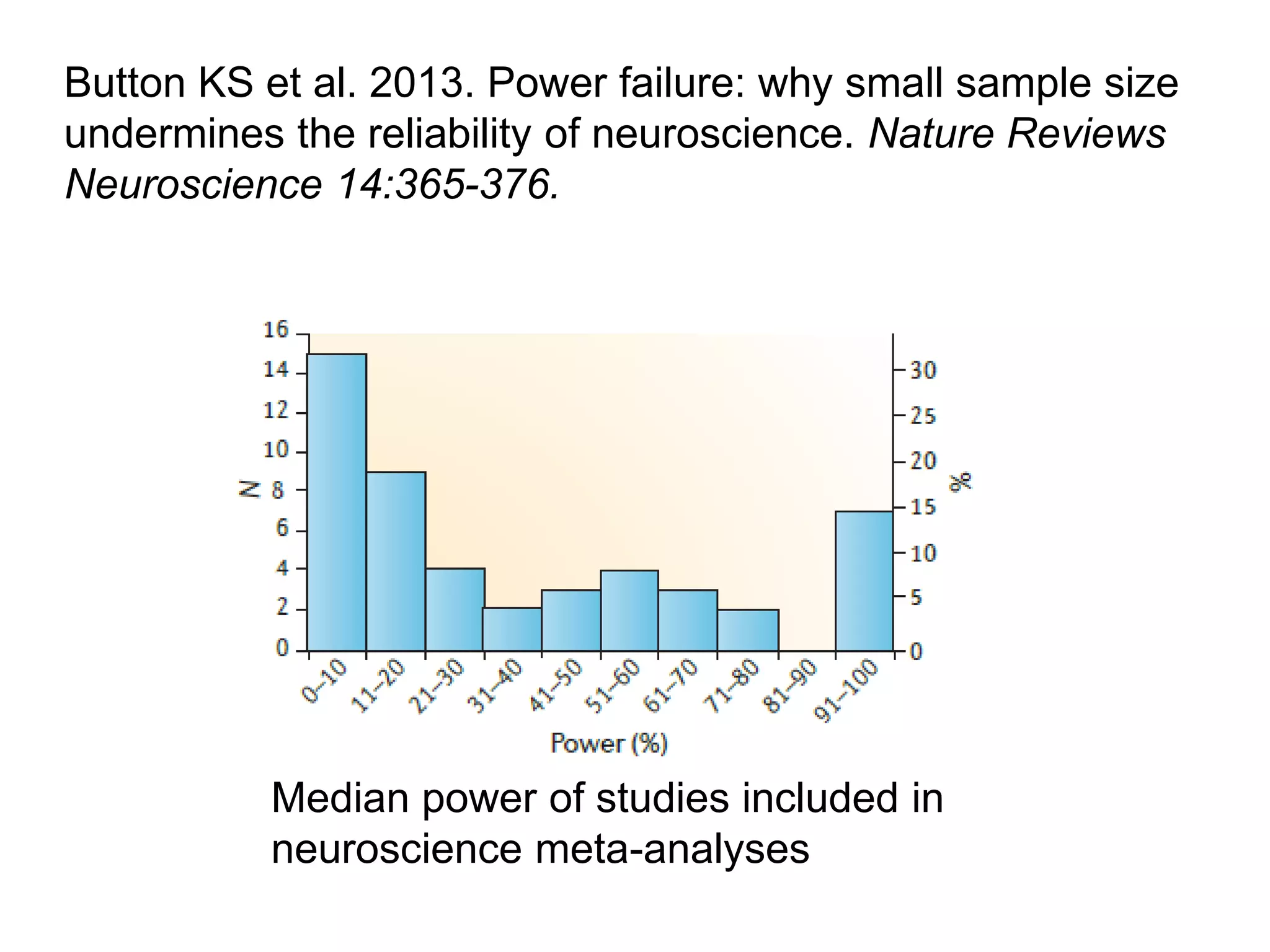

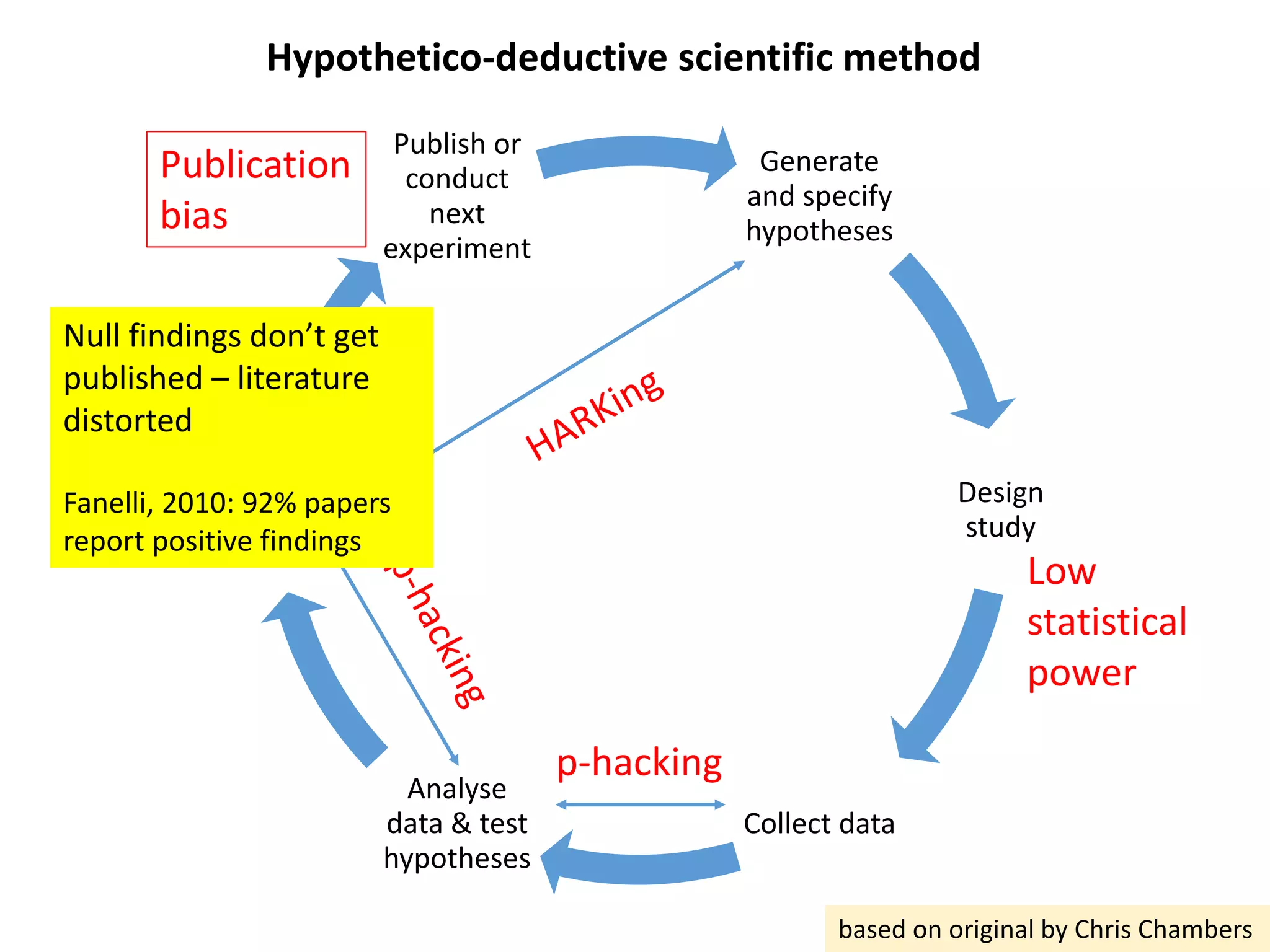

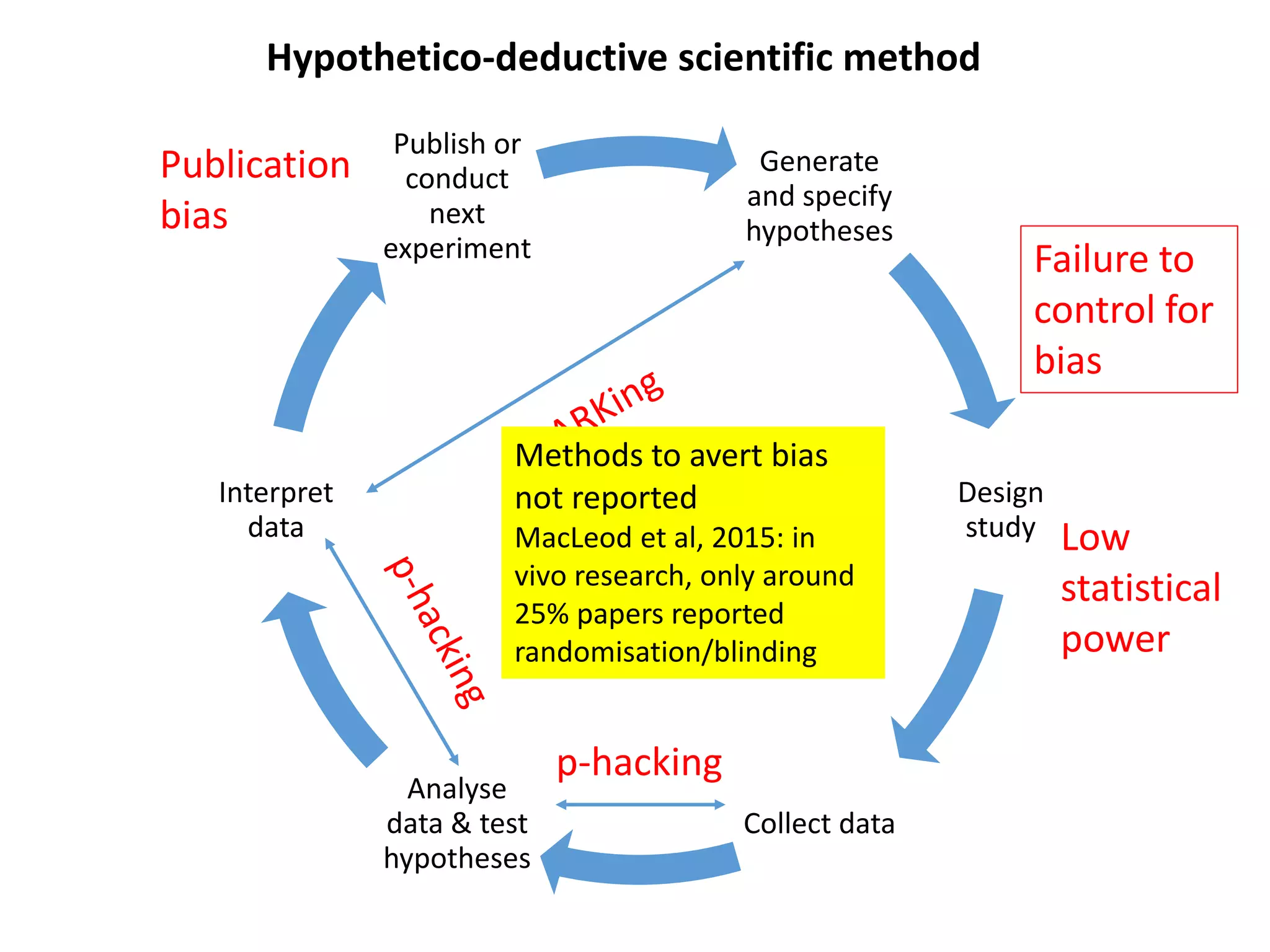

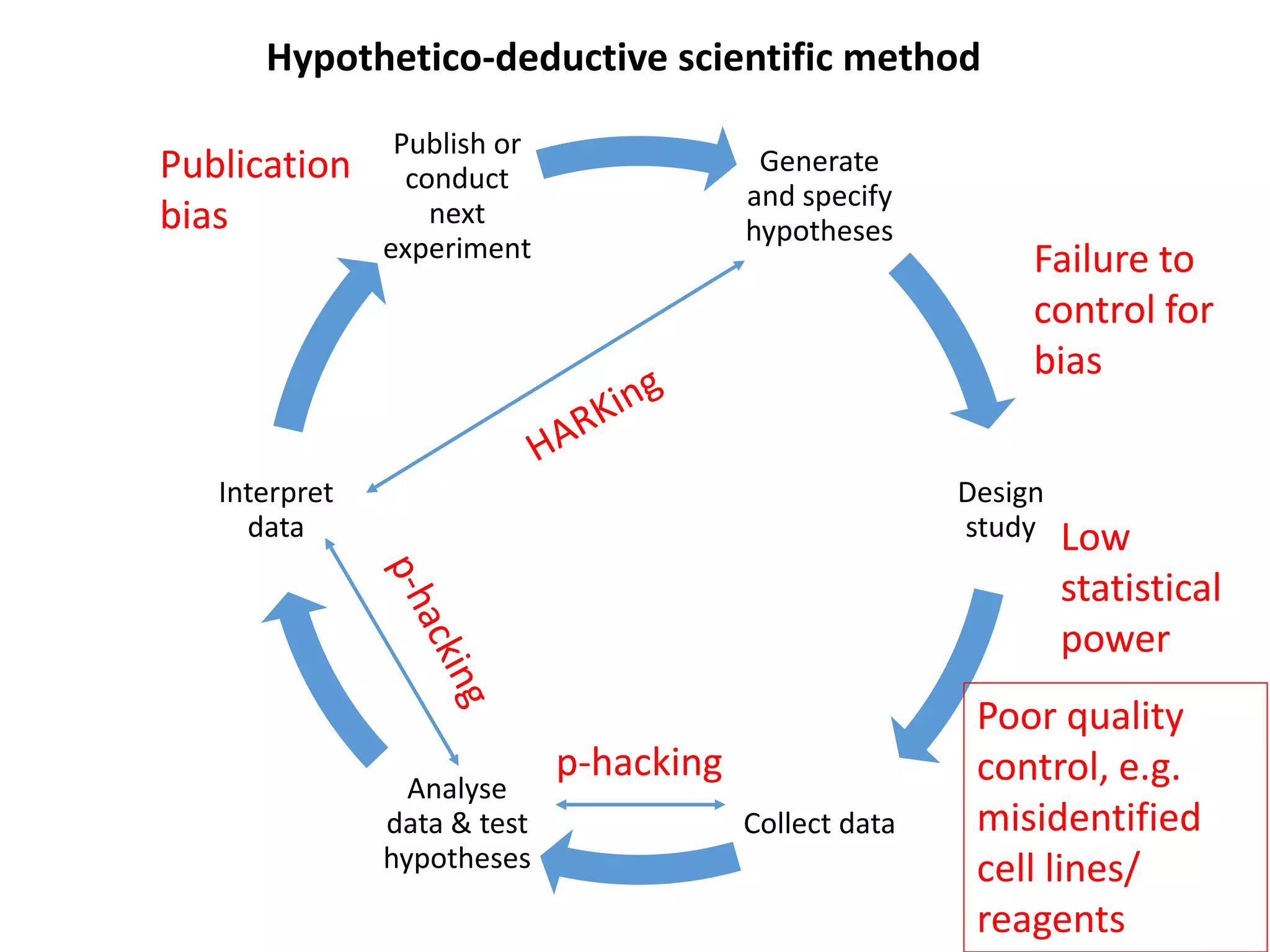

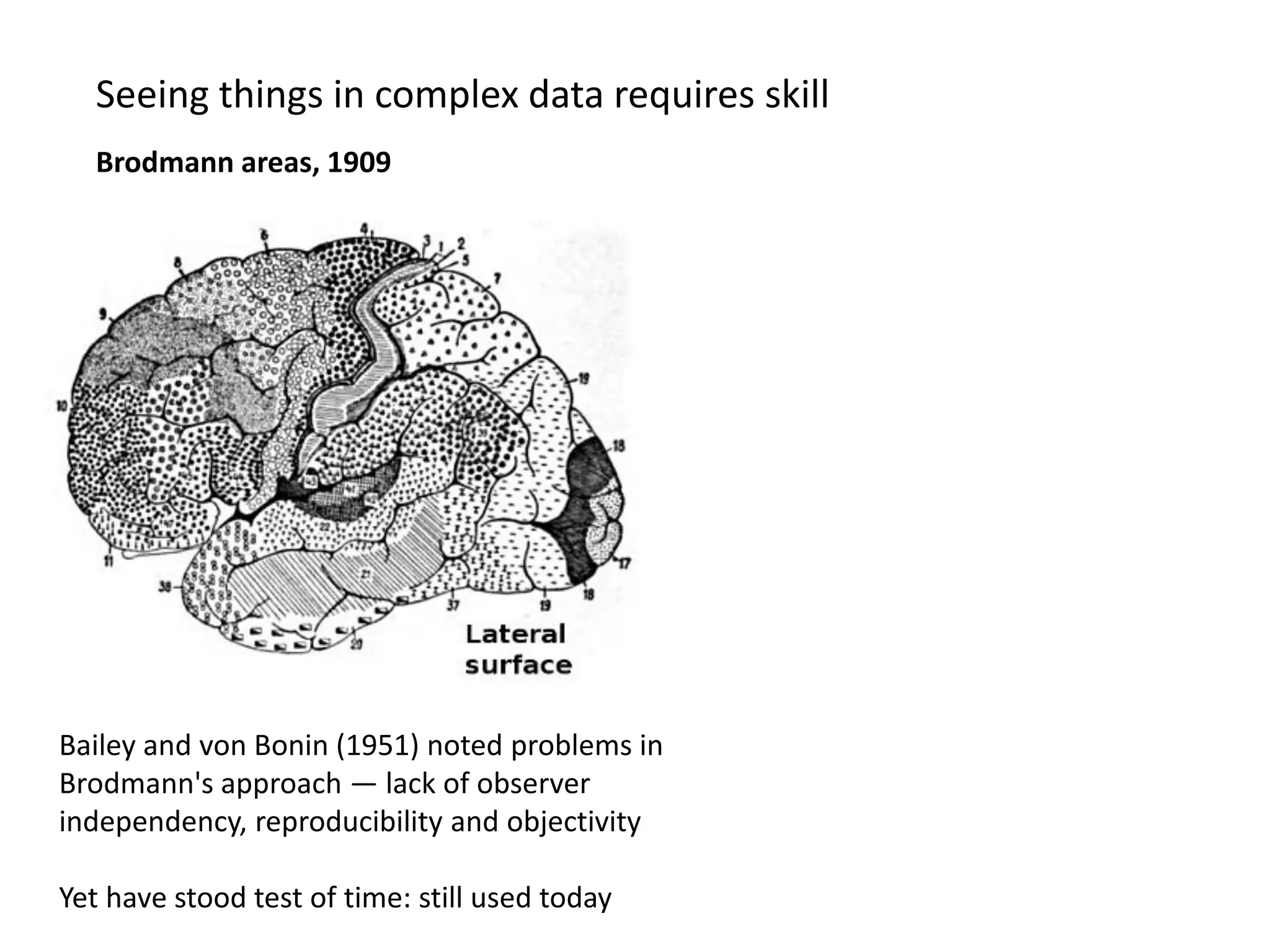

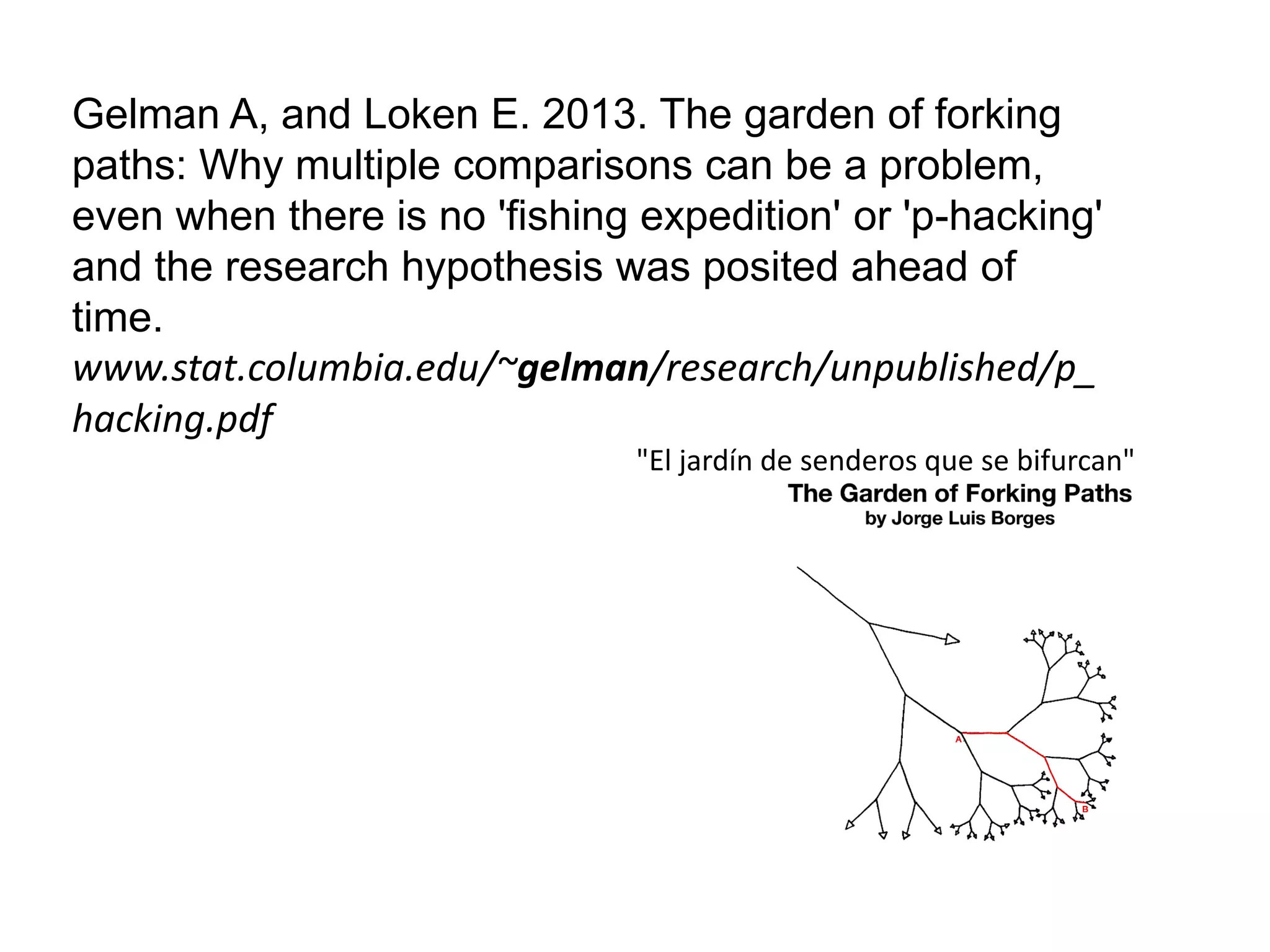

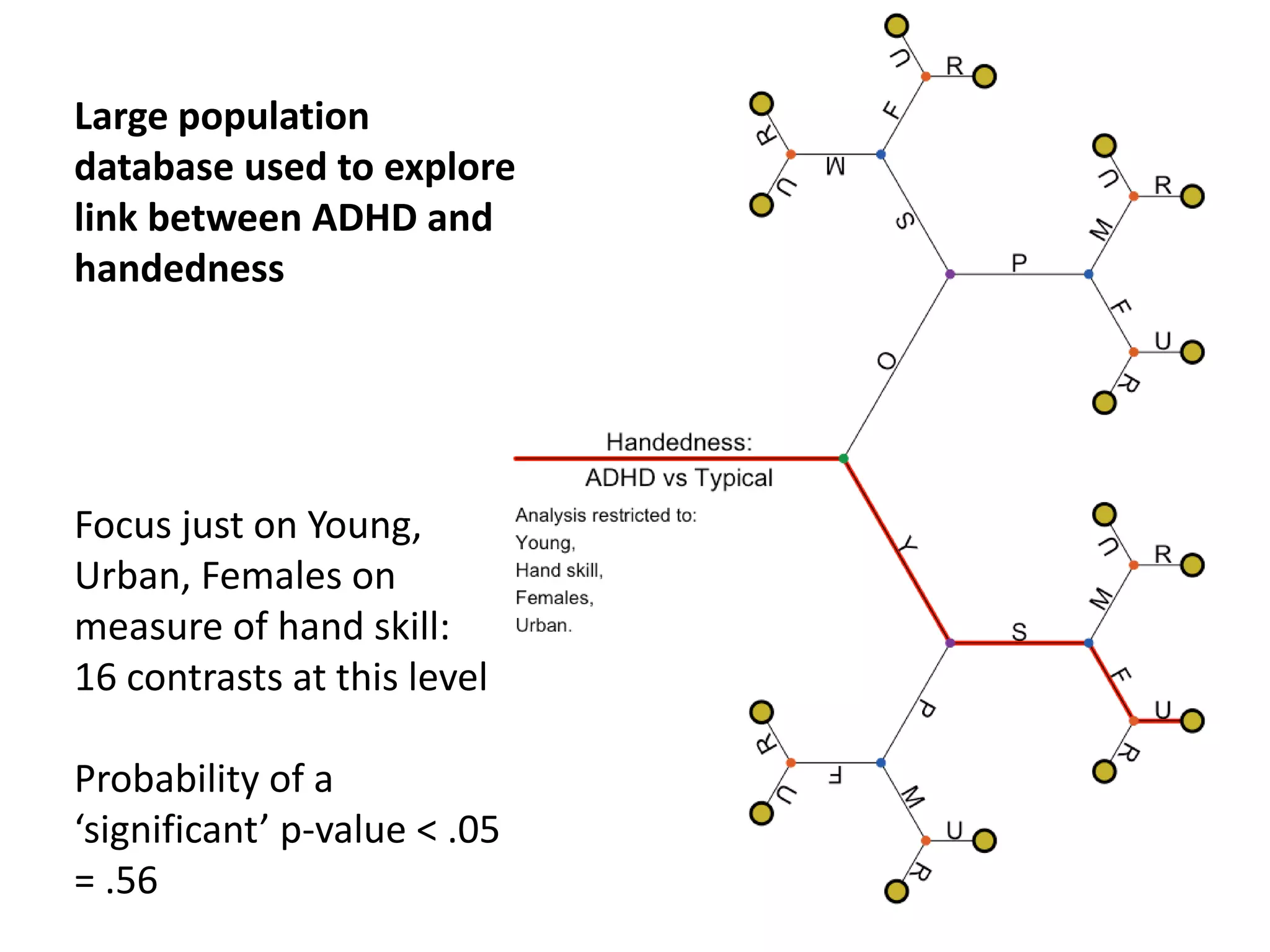

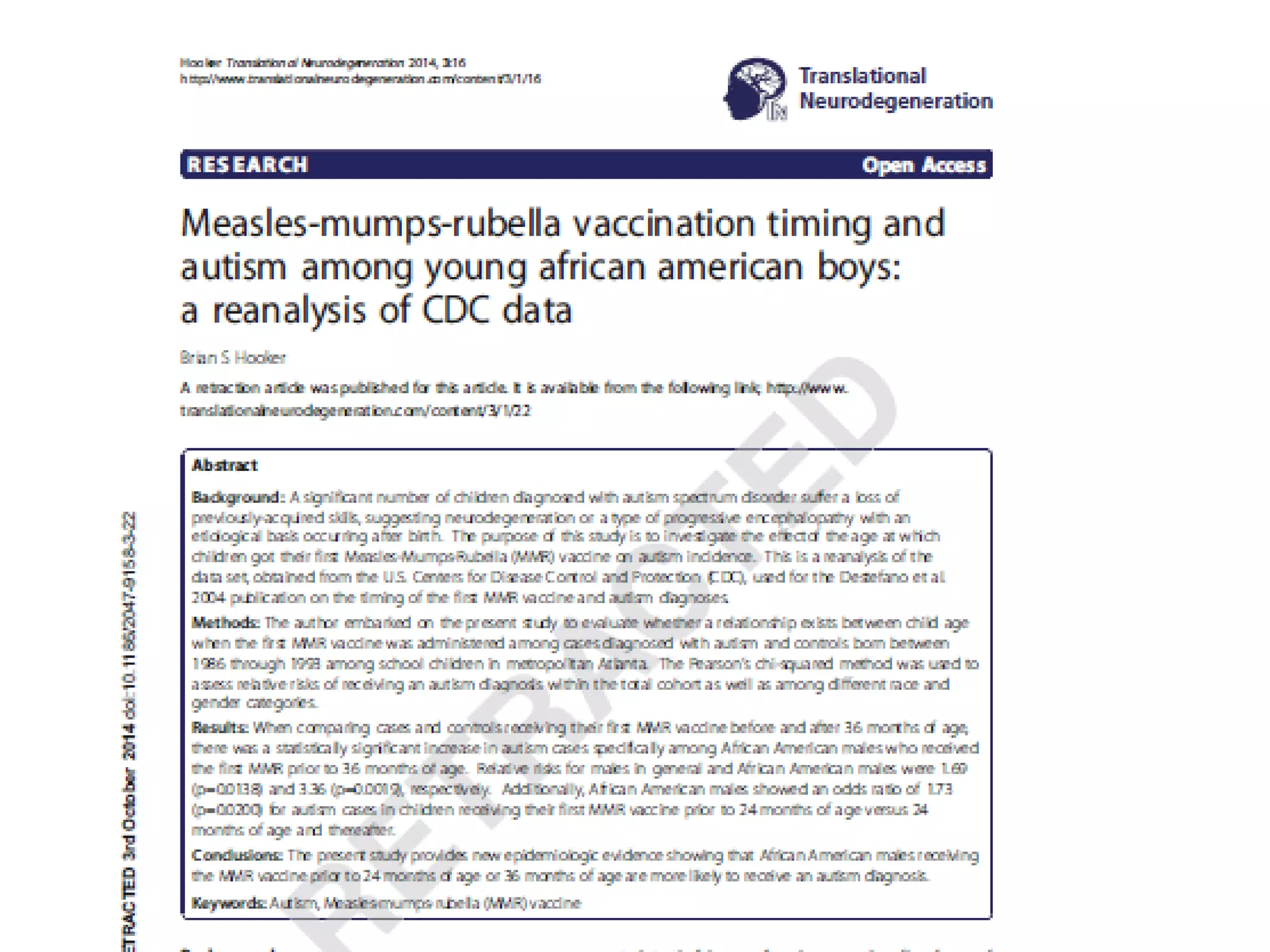

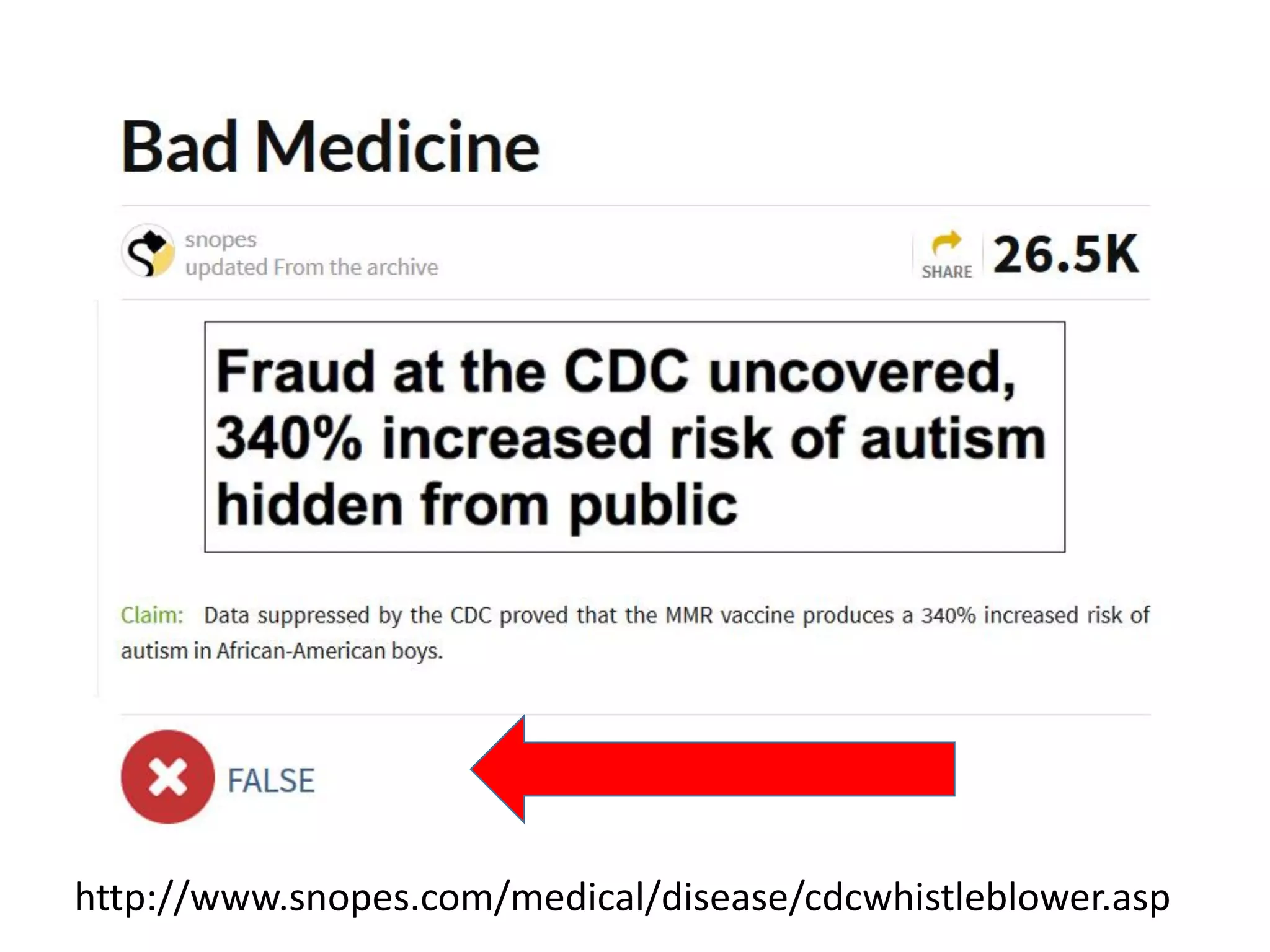

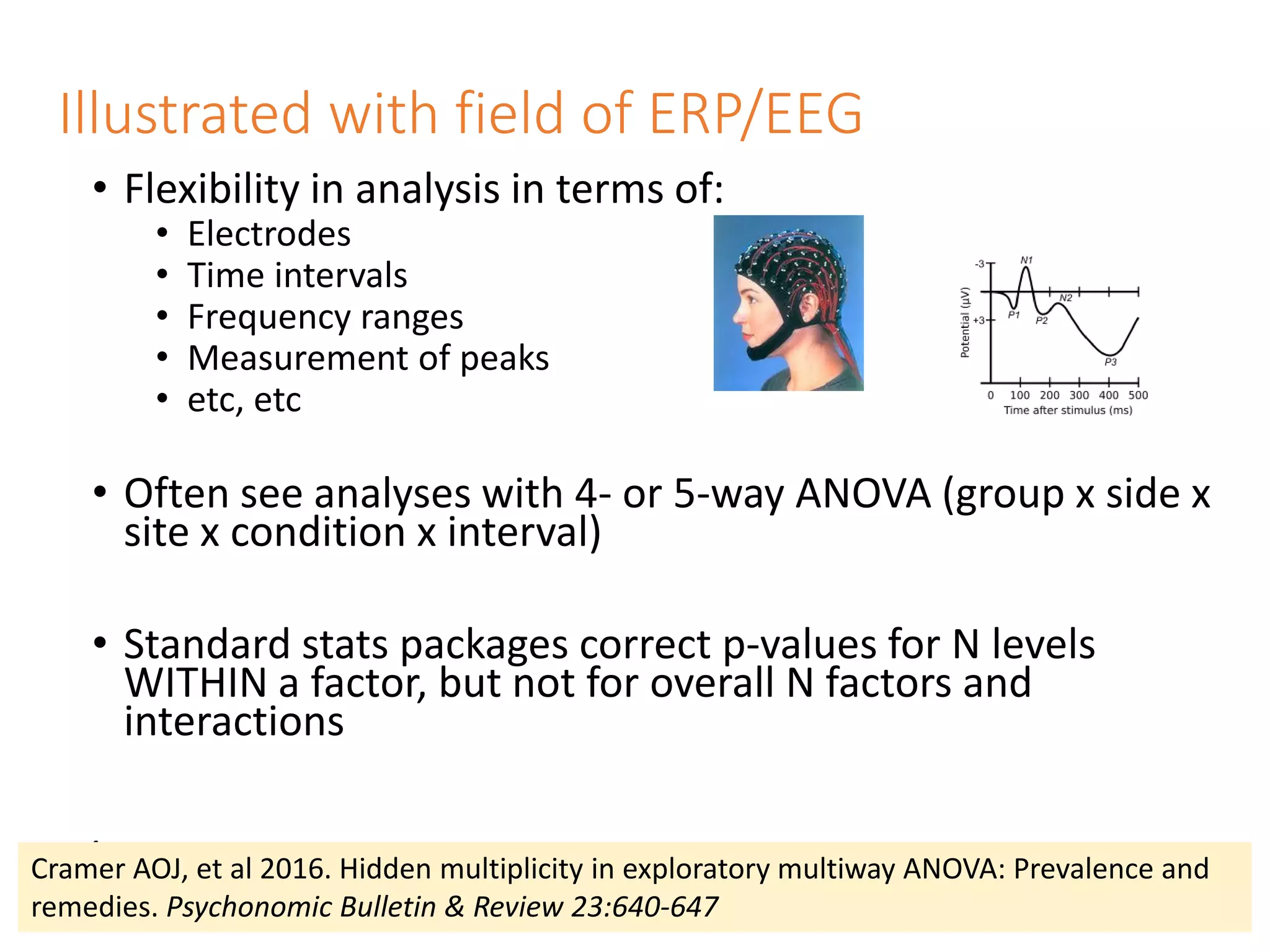

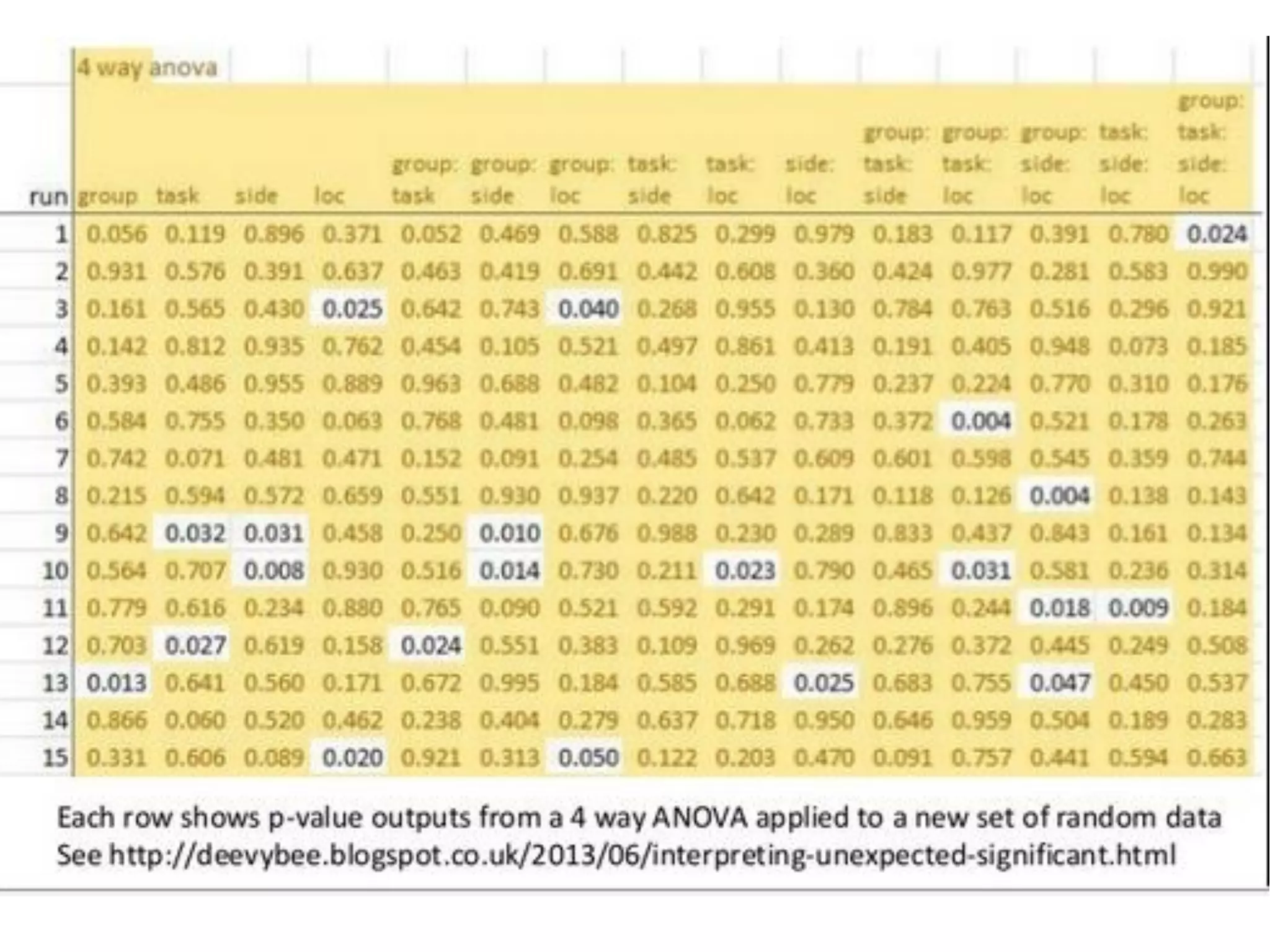

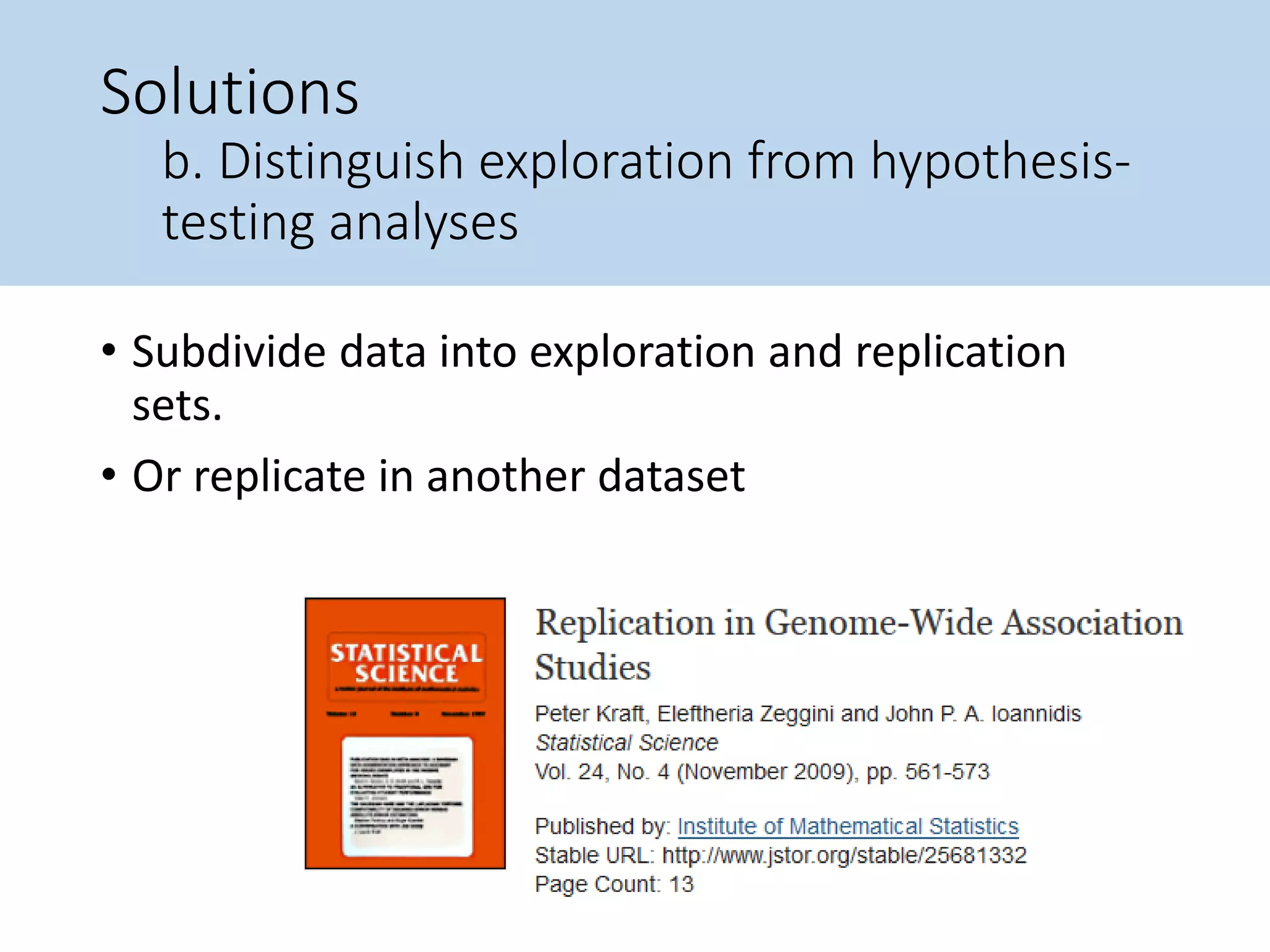

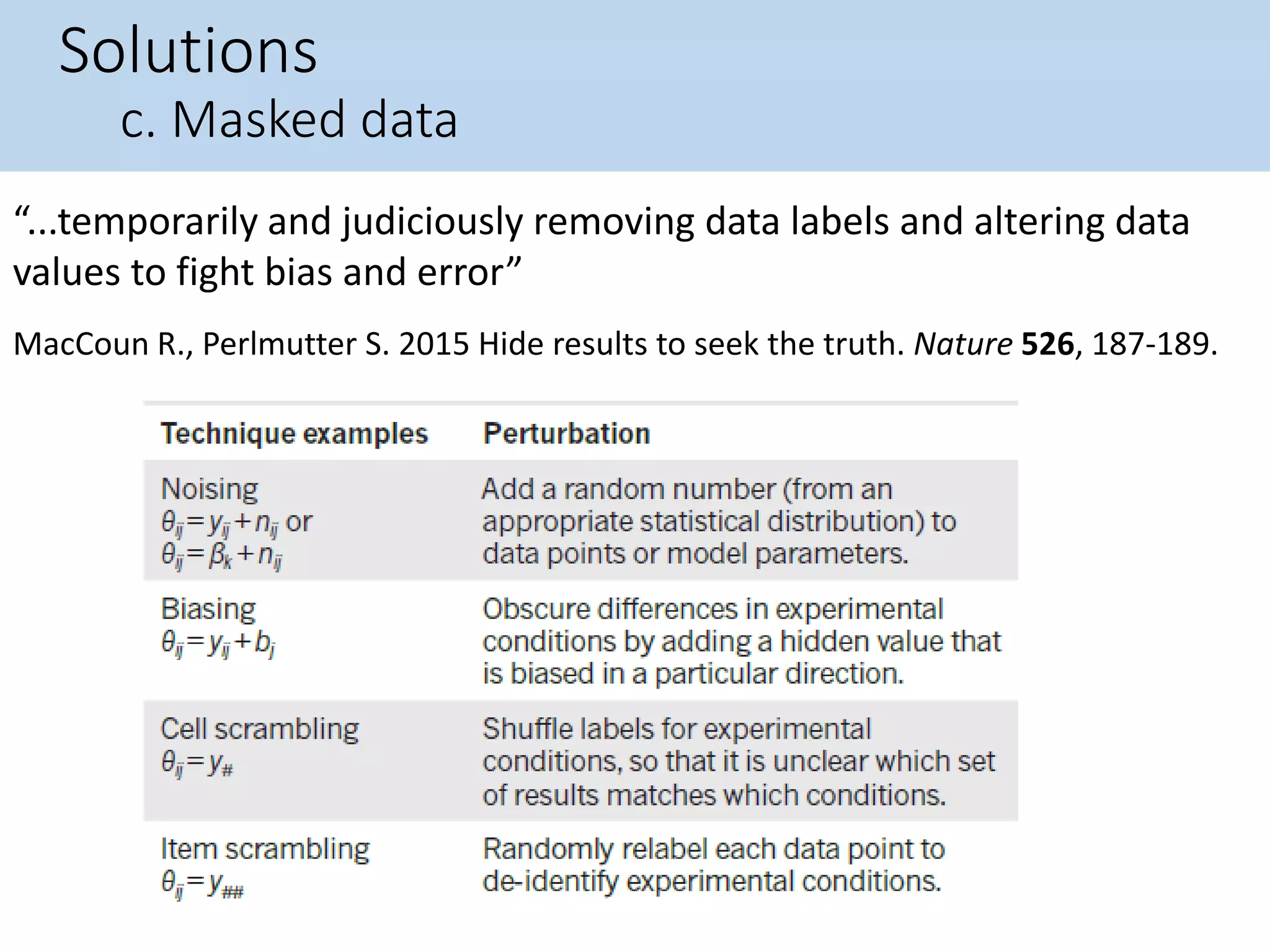

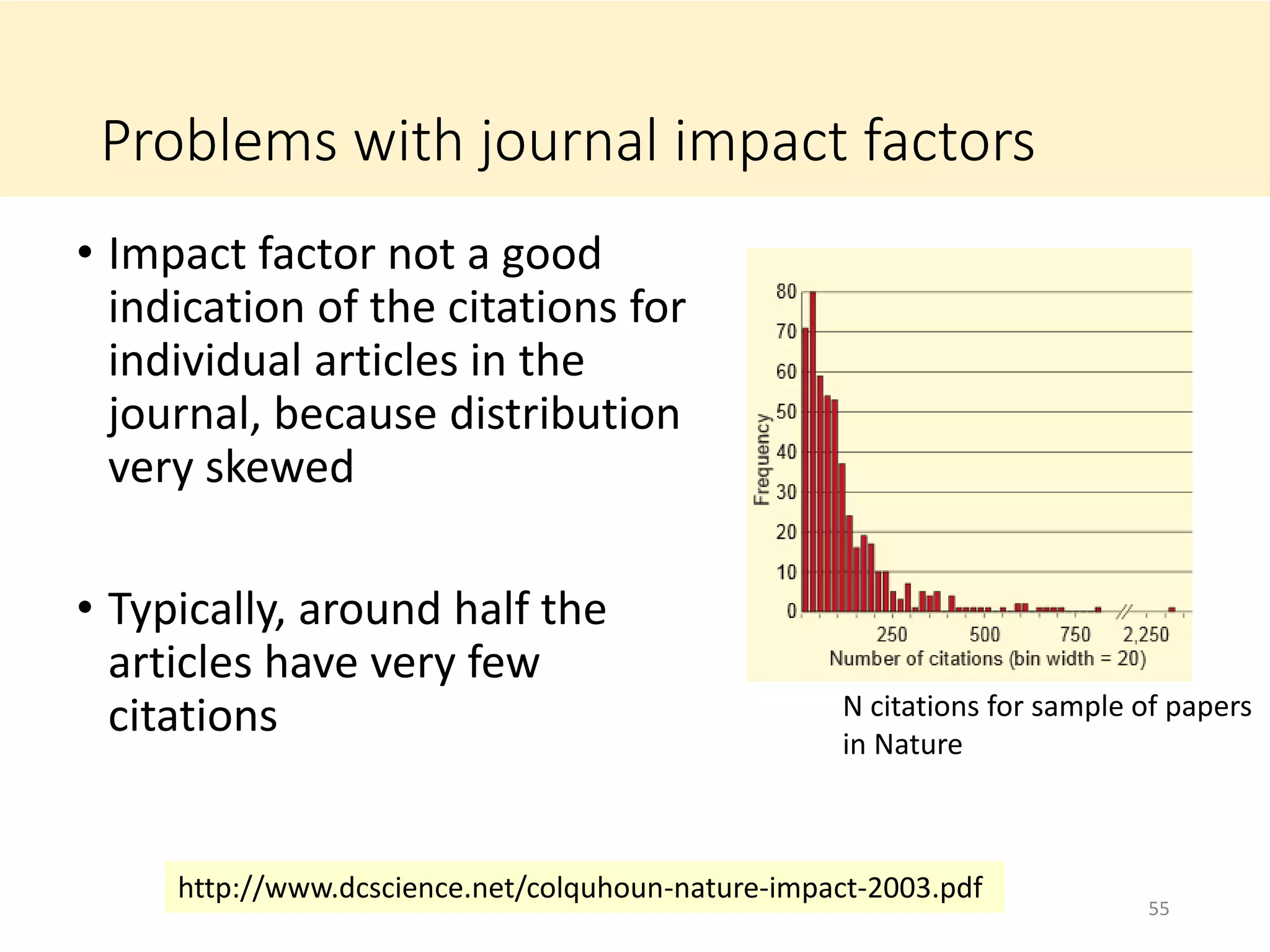

The document discusses the reproducibility crisis in science, particularly in biomedical research, and argues that a significant portion of research funding may be wasted because of p-hacking, low statistical power, and publication bias. It outlines historical concerns and current perceptions among researchers and the public, then proposes solutions such as preregistration of analyses, better data practices, and changes to funding incentives. The author emphasizes accountability, improved methodology, and transparency in research practices as the means to make scientific studies more reproducible and reliable.
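
Two of the issues named above, p-hacking and low statistical power, have simple arithmetic behind them. The sketch below is illustrative and not taken from the slides: the function names, the assumption that tests are independent, and the example numbers (20 tests, a 10% prior probability of a real effect) are all assumptions chosen for demonstration.

```python
def familywise_error_rate(alpha: float, num_tests: int) -> float:
    """Chance of at least one false positive across num_tests independent
    tests of true null hypotheses, each run at significance level alpha.
    (Independence is an assumption made for this sketch.)"""
    return 1.0 - (1.0 - alpha) ** num_tests


def positive_predictive_value(power: float, prior: float, alpha: float = 0.05) -> float:
    """Share of statistically significant results that reflect a real effect,
    given the study's power and the prior probability that the effect is real.
    Ignores bias and assumes one test per hypothesis (both assumptions)."""
    true_positives = power * prior
    false_positives = alpha * (1.0 - prior)
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # p-hacking: trying many analyses of a null effect and reporting any "hit".
    for k in (1, 5, 20):
        rate = familywise_error_rate(0.05, k)
        print(f"{k:>2} tests -> P(at least one false positive) = {rate:.3f}")

    # Low power: hypothetical 10% prior that a tested effect is real.
    for power in (0.8, 0.2):
        ppv = positive_predictive_value(power, prior=0.10)
        print(f"power {power:.1f} -> share of significant findings that are true = {ppv:.3f}")
```

Under these assumptions, running many analyses on the same null effect makes a spurious "significant" result likely, and lowering power reduces the fraction of significant findings that are real. Preregistration addresses the first problem by fixing the analysis before the data are seen.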