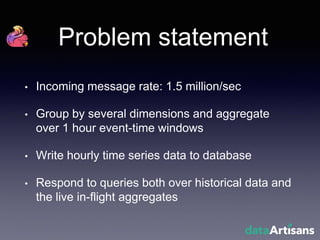

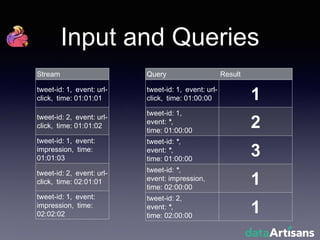

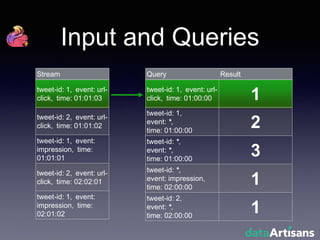

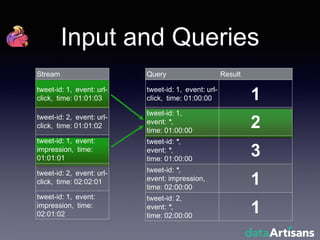

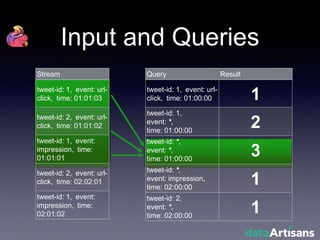

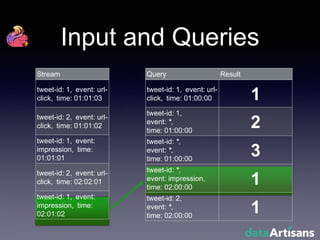

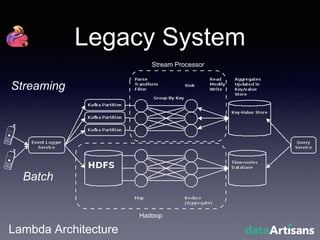

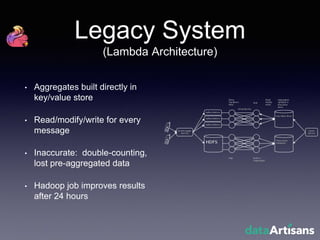

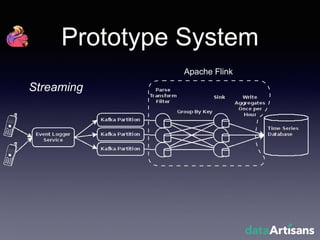

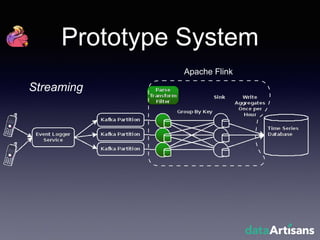

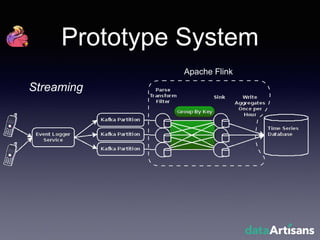

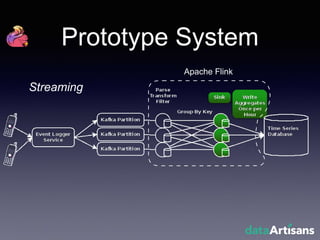

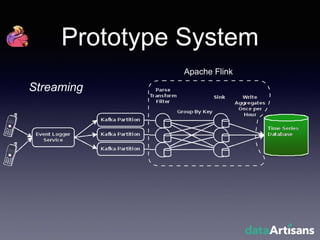

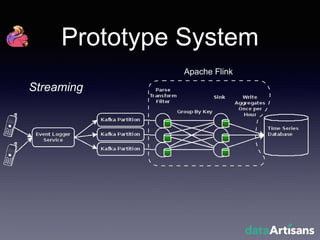

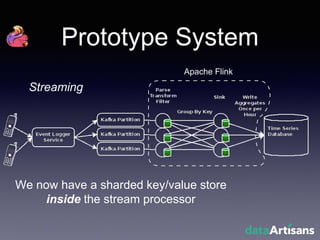

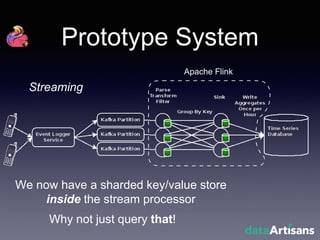

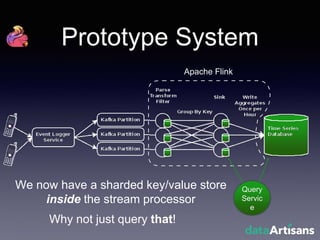

This document describes a prototype built on Apache Flink's stateful stream processing. The prototype aims to eliminate the key-value store bottleneck and cut hardware requirements by a factor of 200 while keeping feature parity with existing systems. It relies on Flink's fault-tolerant state management and built-in windowing to serve real-time queries, and on exactly-once semantics to keep results accurate. Overall, the project reports significant gains in performance and resource efficiency for high-throughput event streams.
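To make the idea of operator-local state concrete, here is a minimal sketch in plain Python. It is not Flink code and does not use any Flink API. It only illustrates the general pattern of keeping per-key, per-window counts in the processing operator's own memory instead of reading and writing an external key-value store for every event. The function name `tumbling_window_counts`, the `(key, timestamp)` event shape, and the sample keys are assumptions made for illustration. Fault tolerance, checkpointing, and exactly-once delivery are not modeled.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (key, event timestamp in milliseconds).
Event = Tuple[str, int]


def tumbling_window_counts(
    events: Iterable[Event], window_ms: int
) -> Dict[Tuple[str, int], int]:
    """Count events per (key, window start), holding all state in local memory."""
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")

    # Local state: one counter per (key, window start). No external store is touched.
    state: Dict[Tuple[str, int], int] = defaultdict(int)
    for key, ts in events:
        # Align the timestamp down to the start of its fixed-size window.
        window_start = ts - (ts % window_ms)
        state[(key, window_start)] += 1
    return dict(state)


# Illustrative input only; these keys and timestamps are made up.
events = [("page_a", 1_000), ("page_a", 4_500), ("page_b", 2_000), ("page_a", 6_200)]
counts = tumbling_window_counts(events, window_ms=5_000)
```

In a real streaming engine this state would be partitioned by key across workers and snapshotted periodically so that it survives failures. The sketch leaves that out and shows only the windowed, keyed aggregation itself.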