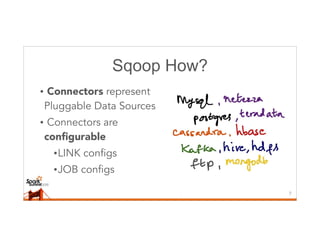

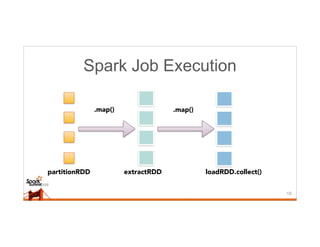

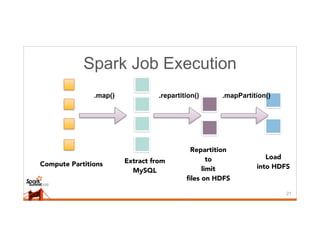

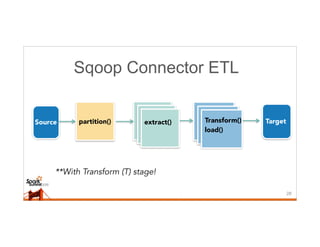

This document discusses running Sqoop jobs on Apache Spark to speed up data ingestion into Hadoop. The authors describe how a Sqoop job can be executed as a Spark job, so that it runs on Spark's execution engine instead of MapReduce. They demonstrate a Sqoop job that ingests data from MySQL into HDFS on Spark and show that it is faster than with MapReduce. The main challenges they encountered were managing dependencies and submitting jobs, but overall the approach makes Sqoop's connectors usable within Spark's distributed processing framework. Next steps include exploring alternative job submission methods in Spark and adding transformation capabilities to Sqoop connectors.
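To make the dependency and job-submission challenge concrete, here is a minimal sketch of assembling a `spark-submit` command that ships a JDBC driver jar alongside the job. The flags `--class`, `--master`, and `--jars` are standard `spark-submit` options; everything else (the class name `com.example.SqoopSparkJob`, the jar names, the host, the paths, and the `--from`/`--to` application arguments) is a hypothetical placeholder, not the authors' actual setup.

```python
import shlex


def build_spark_submit(main_class, app_jar, master, extra_jars, app_args):
    """Assemble a spark-submit command line as a list of arguments."""
    cmd = ["spark-submit", "--class", main_class, "--master", master]
    if extra_jars:
        # --jars takes a comma-separated list of dependency jars to ship with the job.
        cmd += ["--jars", ",".join(extra_jars)]
    # The application jar comes after the options, followed by the application's own arguments.
    cmd.append(app_jar)
    cmd += list(app_args)
    return cmd


# All names below are hypothetical placeholders for illustration only.
command = build_spark_submit(
    main_class="com.example.SqoopSparkJob",
    app_jar="sqoop-spark-job.jar",
    master="yarn",
    extra_jars=["mysql-connector-java.jar"],
    app_args=["--from", "jdbc:mysql://dbhost/sales", "--to", "hdfs:///ingest/sales"],
)

# Print a shell-safe version of the command for inspection.
print(shlex.join(command))
```

Building the command as a list keeps each argument separate, so it can be passed directly to `subprocess.run` without shell quoting problems; the printed form is only for inspection.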