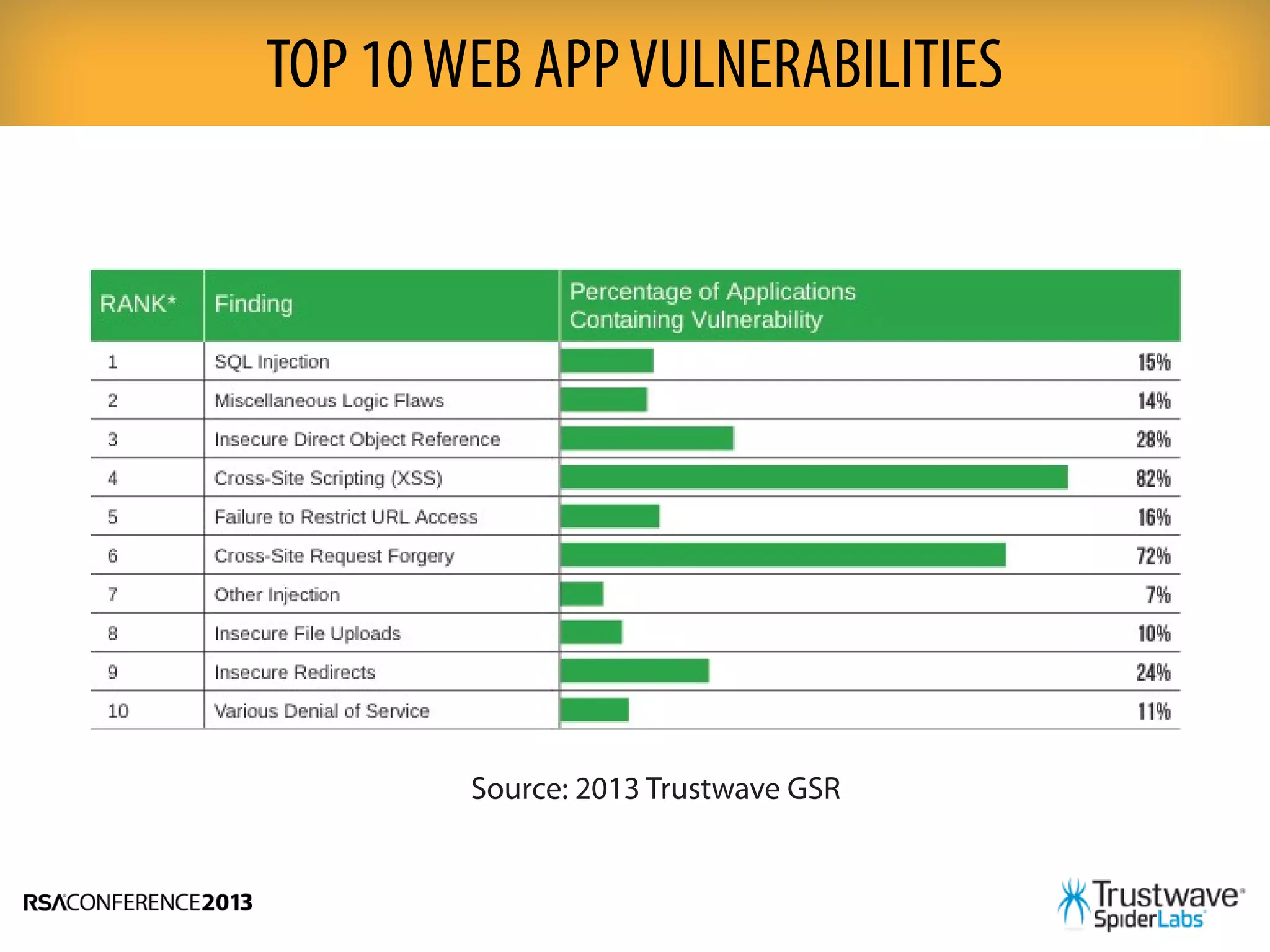

This document explains why application security testing should be tailored to each application rather than following a one-size-fits-all method. It notes that automated scanning tools work best on modern web apps but may miss vulnerabilities in other types of apps. It outlines several factors to consider when choosing security tests for a given application, such as its platform, its age, and how it is used. It recommends combining multiple test types, such as automated scanning, manual penetration testing, and code reviews, to assess application security thoroughly.