Spark4

•

0 likes•45 views

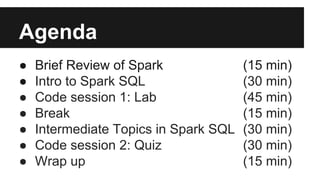

This document outlines the agenda for a presentation on Spark SQL:

- a brief review of Spark
- a 30-minute introduction to Spark SQL
- a 45-minute hands-on coding lab
- a 30-minute section on intermediate topics
- a 30-minute coding quiz
- a 15-minute wrap-up

Recommended

Apache Spark for Library Developers with Erik Erlandson and William Benton

As a developer, data engineer, or data scientist, you’ve seen how Apache Spark is expressive enough to let you solve problems elegantly and efficient enough to let you scale out to handle more data. However, if you’re solving the same problems again and again, you probably want to capture and distribute your solutions so that you can focus on new problems and so other people can reuse and remix them: you want to develop a library that extends Spark.

You faced a learning curve when you first started using Spark, and you’ll face a different learning curve as you start to develop reusable abstractions atop Spark. In this talk, two experienced Spark library developers will give you the background and context you’ll need to turn your code into a library that you can share with the world. We’ll cover:

- Issues to consider when developing parallel algorithms with Spark
- Designing generic, robust functions that operate on data frames and datasets
- Extending data frames with user-defined functions (UDFs) and user-defined aggregates (UDAFs)
- Best practices around caching and broadcasting, and why these are especially important for library developers
- Integrating with ML pipelines
- Exposing key functionality in both Python and Scala
- How to test, build, and publish your library for the community

We’ll back up our advice with concrete examples from real packages built atop Spark. You’ll leave this talk informed and inspired to take your Spark proficiency to the next level and develop and publish an awesome library of your own.

Analytics with Cassandra & Spark

Analytics with Cassandra, Spark and the Spark Cassandra Connector - Big Data User Group Karlsruhe & Stuttgart

Analytics with Cassandra, Spark & MLLib - Cassandra Essentials Day

This document provides an agenda for a presentation on Big Data Analytics with Cassandra, Spark, and MLlib. The presentation covers Spark basics, using Spark with Cassandra, Spark Streaming, Spark SQL, and Spark MLlib. It also includes examples of querying and analyzing Cassandra data with Spark and Spark SQL, and of machine learning with Spark MLlib.

Apache Spark and DataStax Enablement

Introduction and background

Spark RDD API

Introduction to Scala

Spark DataFrames API + SparkSQL

Spark Execution Model

Spark Shell & Application Deployment

Spark Extensions (Spark Streaming, MLlib, ML)

Spark & DataStax Enterprise Integration

Demos

Zero to Streaming: Spark and Cassandra

Everything you need to know to start writing a streaming application connecting Apache Spark to Apache Cassandra.

Big data analytics with Spark & Cassandra

This document provides an agenda and overview of big data analytics using Spark and Cassandra. It discusses Cassandra as a distributed database and Spark as a data processing framework. It covers connecting Spark and Cassandra, reading and writing Cassandra tables as Spark RDDs, and using Spark SQL, Spark Streaming, and Spark MLlib with Cassandra data. It highlights key capabilities of each technology, such as Cassandra's tunable consistency and Spark's fault tolerance through RDD lineage. Examples demonstrate basic operations such as filtering, aggregating, and joining Cassandra data with Spark.

Apache Spark RDD 101

The document discusses Resilient Distributed Datasets (RDDs) in Spark. It explains that RDDs hold references to partition objects containing subsets of data across a cluster. When a transformation like map is applied to an RDD, a new RDD is created to store the operation and maintain a dependency on the original RDD. This allows chained transformations to be lazily executed together in jobs scheduled by Spark.
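The following toy model makes lazy evaluation and lineage concrete. It is a single-process sketch in plain Python, not Spark's API, and the `ToyRDD` class and its methods are hypothetical. Each transformation only records its parent and an operation. Nothing runs until the `collect()` action walks the lineage back to the source partitions.

```python
from typing import Callable, Iterable, List, Optional


class ToyRDD:
    """Hypothetical single-process model of an RDD: it stores a parent and a
    per-partition operation, and computes nothing until an action runs."""

    def __init__(self, partitions: Optional[List[list]] = None,
                 parent: Optional["ToyRDD"] = None,
                 op: Optional[Callable[[Iterable], Iterable]] = None):
        self.partitions = partitions  # only set on the source RDD
        self.parent = parent          # dependency on the previous RDD
        self.op = op                  # derives a partition from the parent's

    def map(self, f):
        # Transformation: returns a new RDD immediately; no data is touched.
        return ToyRDD(parent=self, op=lambda part: (f(x) for x in part))

    def filter(self, pred):
        return ToyRDD(parent=self, op=lambda part: (x for x in part if pred(x)))

    def lineage(self) -> int:
        # Number of RDDs in the dependency chain, including this one.
        return 1 if self.parent is None else 1 + self.parent.lineage()

    def _num_partitions(self) -> int:
        if self.parent is None:
            return len(self.partitions)
        return self.parent._num_partitions()

    def _compute(self, i: int) -> Iterable:
        # Rebuild partition i by replaying the lineage from the source.
        if self.parent is None:
            return iter(self.partitions[i])
        return self.op(self.parent._compute(i))

    def collect(self) -> list:
        # Action: evaluates the whole chain, one partition at a time.
        out = []
        for i in range(self._num_partitions()):
            out.extend(self._compute(i))
        return out
```

Each partition is recomputed from its lineage. The same idea lets Spark rebuild a lost partition without keeping replicas of intermediate data.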

Spark Streaming with Cassandra

Spark Streaming can be used for near-real-time analysis of data streams. It processes data in micro-batches and provides windowing operations. Stateful operations such as updateStateByKey track state across batches. Data can be ingested from sources such as Kafka, Flume, and HDFS, transformed, and then saved to destinations such as Cassandra. Fault tolerance comes from replicating received batches, but some data may still be lost depending on how receivers collect it.
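The sketch below simulates `updateStateByKey` semantics in plain Python, assuming a running count per key. As in Spark Streaming, the update function receives two inputs: the new values for a key in the current micro-batch and that key's previous state. It is called for every key that already has state, even if no new data arrived, and returning `None` removes the key. The names `run_batches` and `update_count` are hypothetical.

```python
from typing import Dict, Iterable, List, Optional, Tuple


def update_count(new_values: List[int], running: Optional[int]) -> Optional[int]:
    # Same shape as an updateStateByKey function: this batch's values for a key
    # plus the previous state (None when the key has not been seen before).
    return sum(new_values) + (running or 0)


def run_batches(batches: Iterable[List[Tuple[str, int]]]) -> List[Dict[str, int]]:
    """Apply update_count across micro-batches; return the state after each."""
    state: Dict[str, int] = {}
    snapshots = []
    for batch in batches:
        grouped: Dict[str, List[int]] = {}
        for key, value in batch:
            grouped.setdefault(key, []).append(value)
        # Visit every key with existing state or new data in this batch.
        for key in set(state) | set(grouped):
            new_state = update_count(grouped.get(key, []), state.get(key))
            if new_state is None:
                state.pop(key, None)  # returning None drops the key
            else:
                state[key] = new_state
        snapshots.append(dict(state))
    return snapshots
```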

Spark Cassandra Connector Dataframes

The document discusses how the Spark Cassandra Connector works. It explains that the connector uses information about how data is partitioned in Cassandra nodes to generate Spark partitions that correspond to the token ranges in Cassandra. This allows data to be read from Cassandra in parallel across the Spark partitions. The connector also supports automatically pushing down filter predicates to the Cassandra database to reduce the amount of data read.
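Here is a simplified sketch of cutting a token ring into Spark partitions. It assumes Cassandra's Murmur3Partitioner, whose tokens run from -2^63 to 2^63 - 1, and it splits the ring evenly. The real connector builds its splits from the cluster's actual token ownership and data size estimates. `split_ring` and `partition_for` are hypothetical helpers that only illustrate how tokens map to partitions.

```python
import bisect
from typing import List, Tuple

# Murmur3Partitioner tokens span the signed 64-bit range.
MIN_TOKEN = -(2 ** 63)
MAX_TOKEN = 2 ** 63 - 1


def split_ring(num_splits: int) -> List[Tuple[int, int]]:
    """Split the ring into num_splits contiguous half-open [start, end)
    ranges that together cover every token exactly once."""
    total = MAX_TOKEN - MIN_TOKEN + 1  # 2**64 tokens in the ring
    bounds = [MIN_TOKEN + total * i // num_splits for i in range(num_splits + 1)]
    return list(zip(bounds[:-1], bounds[1:]))


def partition_for(token: int, ranges: List[Tuple[int, int]]) -> int:
    # Index of the range whose start is the largest start <= token.
    starts = [start for start, _ in ranges]
    return bisect.bisect_right(starts, token) - 1
```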

Spark cassandra connector.API, Best Practices and Use-Cases

- The document discusses Spark/Cassandra connector API, best practices, and use cases.

- It describes the connector architecture including support for Spark Core, SQL, and Streaming APIs. Data is read from and written to Cassandra tables mapped as RDDs.

- Best practices around data locality, failure handling, and cross-region/cluster operations are covered. Locality is important for performance.

- Use cases include data cleaning, schema migration, and analytics like joins and aggregation. The connector allows processing and analytics on Cassandra data with Spark.

Apache Spark - Basics of RDD | Big Data Hadoop Spark Tutorial | CloudxLab

Big Data with Hadoop & Spark Training: http://bit.ly/2L4rPmM

This CloudxLab Basics of RDD tutorial helps you understand the basics of RDDs in detail. Below are the topics covered in this tutorial:

1) What is RDD - Resilient Distributed Datasets

2) Creating RDD in Scala

3) RDD Operations - Transformations & Actions

4) RDD Transformations - map() & filter()

5) RDD Actions - take() & saveAsTextFile()

6) Lazy Evaluation & Instant Evaluation

7) Lineage Graph

8) flatMap and Union

9) Scala Transformations - Union

10) Scala Actions - saveAsTextFile(), collect(), take() and count()

11) More Actions - reduce()

12) Can We Use reduce() for Computing Average?

13) Solving Problems with Spark

14) Compute Average and Standard Deviation with Spark

15) Pick Random Samples From a Dataset using Spark
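Item 12 asks whether `reduce()` can compute an average. It cannot do so directly, because averaging is not associative. Averaging 1 and 2 and then averaging the result with 3 gives 2.25, not 2, so a distributed reduce could return different answers depending on how partitions are combined.

A common workaround is to reduce (count, sum, sum of squares) tuples, which combine associatively, and derive the mean and population standard deviation at the end. The sketch below does this in plain Python, with `functools.reduce` standing in for `RDD.reduce`.

```python
import math
from functools import reduce
from typing import Iterable, Tuple

Stats = Tuple[int, float, float]  # (count, sum, sum of squares)


def to_stats(x: float) -> Stats:
    return (1, x, x * x)


def combine(a: Stats, b: Stats) -> Stats:
    # Associative and commutative, so partitions can be merged in any order.
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def mean_and_std(values: Iterable[float]) -> Tuple[float, float]:
    n, s, sq = reduce(combine, map(to_stats, values))
    mean = s / n
    variance = sq / n - mean * mean  # population variance
    return mean, math.sqrt(max(variance, 0.0))
```

The sum-of-squares formula can lose precision when values are large relative to their spread. A Welford-style merge of (count, mean, M2) is the numerically safer choice.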

Lightning fast analytics with Spark and Cassandra

Spark is a fast and general engine for large-scale data processing. It provides APIs for Java, Scala, and Python that allow users to load data into a distributed cluster as resilient distributed datasets (RDDs) and then perform operations like map, filter, reduce, join and save. The Cassandra Spark driver allows accessing Cassandra tables as RDDs to perform analytics and run Spark SQL queries across Cassandra data. It provides server-side data selection and mapping of rows to Scala case classes or other objects.

Apache Spark - Dataframes & Spark SQL - Part 1 | Big Data Hadoop Spark Tutori...

Big Data with Hadoop & Spark Training: http://bit.ly/2sf2z6i

This CloudxLab Introduction to Spark SQL & DataFrames tutorial helps you understand Spark SQL and DataFrames in detail. Below are the topics covered in this tutorial:

1) Introduction to DataFrames

2) Creating DataFrames from JSON

3) DataFrame Operations

4) Running SQL Queries Programmatically

5) Datasets

6) Inferring the Schema Using Reflection

7) Programmatically Specifying the Schema

Beyond the Query – Bringing Complex Access Patterns to NoSQL with DataStax - ...

Learn how to model beyond traditional direct access in Apache Cassandra. Utilizing the DataStax platform to harness the power of Spark and Solr to perform search, analytics, and complex operations in place on your Cassandra data!

Escape from Hadoop: Ultra Fast Data Analysis with Spark & Cassandra

The document discusses using Apache Spark and Apache Cassandra together for fast data analysis as an alternative to Hadoop. It provides examples of basic Spark operations on Cassandra tables like counting rows, filtering, joining with external data sources, and importing/exporting data. The document argues that Spark on Cassandra provides a simpler distributed processing framework compared to Hadoop.

DataSource V2 and Cassandra – A Whole New World

Data Source V2 has arrived for the Spark Cassandra Connector, but what does this mean for you? Speed, Flexibility and Usability improvements abound and we’ll walk you through some of the biggest highlights and how you can take advantage of them today.

Data analysis scala_spark

This document demonstrates how to use Scala and Spark to analyze text data from the Bible. It shows how to install Scala and Spark, load a text file of the Bible into a Spark RDD, perform searches to count verses containing words like "God" and "Love", and calculate statistics on the data like the total number of words and unique words used in the Bible. Example commands and outputs are provided.
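This is a plain-Python stand-in for that kind of analysis. It assumes the text has already been split into one verse per line. In PySpark, the input would come from `sc.textFile(...)` and each search would be a `filter(...).count()`. The matching rules are assumptions for illustration: searches match case-sensitive whole words, and word statistics count lowercase letter runs.

```python
import re
from typing import Dict, List


def verse_count_containing(verses: List[str], word: str) -> int:
    # Case-sensitive whole-word match, like filter(...).count() over an RDD.
    pattern = re.compile(r"\b" + re.escape(word) + r"\b")
    return sum(1 for v in verses if pattern.search(v))


def word_stats(verses: List[str]) -> Dict[str, int]:
    # "flatMap" each verse into lowercase words, then count total and distinct.
    words = [w for v in verses for w in re.findall(r"[a-z']+", v.lower())]
    return {"total": len(words), "unique": len(set(words))}
```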

Spark Cassandra Connector: Past, Present, and Future

The Spark Cassandra Connector allows integration between Spark and Cassandra for distributed analytics. Previously, integrating Hadoop and Cassandra required complex code and configuration. The connector maps Cassandra data distributed across nodes based on token ranges to Spark partitions, enabling analytics on large Cassandra datasets using Spark's APIs. This provides an easier method for tasks like generating reports, analytics, and ETL compared to previous options.

Using Spark to Load Oracle Data into Cassandra

The document discusses lessons learned from using Spark to load data from Oracle into Cassandra. It describes three problems:

- Spark SQL handling Oracle NUMBER and Cassandra timeuuid fields incorrectly
- difficulties generating IDs across RDDs
- limits on RDDs of tuples with more than 22 elements, the maximum tuple arity in Scala 2

The resources section provides references for learning more about Spark, Scala, and using Spark with Cassandra.
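One coordination-free way to generate IDs is the numbering used by Spark's `RDD.zipWithUniqueId`. Its documentation says items in the k-th of n partitions get IDs k, n+k, 2n+k, and so on. The sketch below reproduces that scheme over plain Python lists, and the function name is hypothetical. The resulting IDs are unique but not contiguous. `zipWithIndex` gives contiguous IDs, but it has to trigger an extra Spark job when the RDD has more than one partition.

```python
from typing import List, Tuple


def zip_with_unique_id(partitions: List[list]) -> List[Tuple[object, int]]:
    """Assign IDs with no coordination between partitions: the i-th item of
    partition k gets i * n + k, where n is the number of partitions."""
    n = len(partitions)
    return [(item, i * n + k)
            for k, part in enumerate(partitions)
            for i, item in enumerate(part)]
```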

Apache Spark with Scala

Apache Spark is a fast, general engine for large-scale data processing. It supports batch, interactive, and stream processing through a unified API. Spark uses resilient distributed datasets (RDDs), which are immutable distributed collections of objects that can be operated on in parallel. RDDs support transformations such as map and filter, which return new RDDs, and actions such as reduce, which return final results to the driver program. Spark provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis.

Spark and Cassandra 2 Fast 2 Furious

Spark and Cassandra with the Datastax Spark Cassandra Connector

How it works and how to use it!

Missed Spark Summit but still want to see some slides?

This slide deck is for you!

Time Series Processing with Apache Spark

Chronix Spark - a framework for time series processing with Apache Spark. Presentation from Apache Big Data, North America, 2016, Vancouver BC.

Time series with Apache Cassandra - Long version

Apache Cassandra has proven to be one of the best solutions for storing and retrieving time series data. This talk will give you an overview of the many ways you can be successful. We will discuss how the storage model of Cassandra is well suited for this pattern and go over examples of how best to build data models.

Cassandra and Spark: Optimizing for Data Locality-(Russell Spitzer, DataStax)

This document discusses how the Spark Cassandra Connector optimizes for data locality when performing analytics on Cassandra data using Spark. It does this by using the partition keys and token ranges to create Spark partitions that correspond to the data distribution across the Cassandra nodes, allowing work to be done locally to each data node without moving data across the network. This improves performance and avoids the costs of data shuffling.
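This simplified sketch shows the locality idea: group token ranges by the node that owns them, so each Spark partition reads from one node and can be scheduled there. It assumes a single owner per range. Real clusters usually have a replication factor above 1, and the connector works with full replica sets. `group_by_replica` and `max_ranges_per_partition` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

TokenRange = Tuple[int, int]


def group_by_replica(range_owner: Dict[TokenRange, str],
                     max_ranges_per_partition: int) -> List[Tuple[str, List[TokenRange]]]:
    """Build partitions whose token ranges all live on one node, so that node
    can serve as the partition's preferred location."""
    by_node: Dict[str, List[TokenRange]] = defaultdict(list)
    for token_range, node in sorted(range_owner.items()):
        by_node[node].append(token_range)
    partitions = []
    for node in sorted(by_node):
        ranges = by_node[node]
        # Cap partition size so one node's data is still split across tasks.
        for i in range(0, len(ranges), max_ranges_per_partition):
            partitions.append((node, ranges[i:i + max_ranges_per_partition]))
    return partitions
```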

Cassandra and Spark: Optimizing for Data Locality

This document discusses how the Spark Cassandra Connector optimizes for data locality when performing analytics on Cassandra data using Spark. It does this by using the partition keys and token ranges to create Spark partitions that correspond to the data distribution across the Cassandra nodes, allowing work to be done locally to each data node without moving data across the network. This improves performance and avoids the costs of data shuffling.

User Defined Aggregation in Apache Spark: A Love Story

This document summarizes a user's journey developing a custom aggregation function for Apache Spark using a T-Digest sketch. The user initially implemented it as a User Defined Aggregate Function (UDAF) but ran into performance issues due to excessive serialization/deserialization. They then worked to resolve it by implementing the function as a custom Aggregator using Spark 3.0's new aggregation APIs, which avoided unnecessary serialization and provided a 70x performance improvement. The story highlights the importance of understanding how custom functions interact with Spark's execution model and optimization techniques like avoiding excessive serialization.
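The method names below mirror Spark's typed `Aggregator` (`zero`, `reduce`, `merge`, `finish`). The sketch is in plain Python and uses a simple mean instead of a T-Digest. Each partition folds its rows into one buffer object, and only the partial buffers are merged. According to the talk summary, this structure avoided the excessive per-update serialization the author ran into with `UserDefinedAggregateFunction`. Plain Python does not model Spark's serialization, so this shows only the shape of the API, not the performance effect.

```python
from typing import Iterable, List, Tuple

Buffer = Tuple[float, int]  # (running sum, count)


class MeanAggregator:
    """Mirrors the zero/reduce/merge/finish methods of Spark's Aggregator."""

    def zero(self) -> Buffer:
        return (0.0, 0)

    def reduce(self, buf: Buffer, value: float) -> Buffer:
        # Fold one input row into the partition-local buffer.
        return (buf[0] + value, buf[1] + 1)

    def merge(self, a: Buffer, b: Buffer) -> Buffer:
        # Combine buffers produced by different partitions.
        return (a[0] + b[0], a[1] + b[1])

    def finish(self, buf: Buffer) -> float:
        return buf[0] / buf[1] if buf[1] else float("nan")


def aggregate(agg: MeanAggregator, partitions: List[Iterable[float]]) -> float:
    # Per-partition fold, then a merge of the partial buffers.
    partials = []
    for part in partitions:
        buf = agg.zero()
        for value in part:
            buf = agg.reduce(buf, value)
        partials.append(buf)
    total = agg.zero()
    for buf in partials:
        total = agg.merge(total, buf)
    return agg.finish(total)
```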

Updates from Cassandra Summit 2016 & SASI Indexes

Updates from Cassandra Summit 2016 including a summary of technical notes from the keynote. Deeper dive on materialized views and SASI indexes.

Spark cassandra integration 2016

This document summarizes a presentation about using Spark with Apache Cassandra. It discusses using Spark jobs to load and transform data in Cassandra for purposes such as data import, cleaning, schema migration and analytics. It also covers aspects of the connector architecture like data locality, failure handling and cross-cluster operations. Examples are given of using Spark and Cassandra together for parallel data ingestion and top-K queries on a large dataset.
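A common distributed pattern for top-K queries is to take the top K within each partition and then merge those candidates. That way, only K values per partition ever need to reach the driver. PySpark's `RDD.top(k)` provides this for RDDs. The `top_k` function below is a hypothetical plain-Python stand-in, not the presenters' code.

```python
import heapq
from typing import Iterable, List


def top_k(partitions: List[Iterable[int]], k: int) -> List[int]:
    """Local top-k per partition, then a global top-k over the candidates."""
    candidates: List[int] = []
    for part in partitions:
        candidates.extend(heapq.nlargest(k, part))  # runs on each partition
    return heapq.nlargest(k, candidates)           # runs on the driver
```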

Spark Programming

Spark is an open-source cluster computing framework. It was developed in 2009 at UC Berkeley and open sourced in 2010. Spark supports batch, streaming, and interactive computations in a unified framework. The core abstraction in Spark is the resilient distributed dataset (RDD), which allows data to be partitioned across a cluster for parallel processing. RDDs support transformations like map and filter that return new RDDs and actions that return values to the driver program.

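[DSC 2016] Series Event: 李泳泉 / 星火燎原 ("A Single Spark Can Start a Prairie Fire"): A First Look at Machine Learning with Spark

This course is designed for Spark beginners. In six hours, it takes you from nothing to a working Spark development environment. Through hands-on exercises, it then shows you how to use and develop with Spark's machine learning library (MLlib).

The exercises are primarily in Scala, the language Spark's core is written in. You build a local development environment with Scala IDE for Eclipse and related libraries, using the IDE's development and debugging features to speed up the workflow. The course also shows how to deploy to a Spark platform and run data analysis jobs with Spark-submit.

It covers the most commonly used machine learning methods: classification, regression, and clustering. Anyone interested in Spark who doesn't know where to start, or who wants a quick first look at machine learning with Spark, is welcome to join!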

Similar to Spark4

Artigo 81 - spark_tutorial.pdf

This document provides an overview of Apache Spark, an open-source cluster computing framework. It discusses Spark's history and community growth. Key aspects covered include Resilient Distributed Datasets (RDDs) which allow transformations like map and filter, fault tolerance through lineage tracking, and caching data in memory or disk. Example applications demonstrated include log mining, machine learning algorithms, and Spark's libraries for SQL, streaming, and machine learning.

Intro to Spark

This document provides an introduction and overview of Apache Spark. It discusses why Spark is useful, describes some Spark basics including Resilient Distributed Datasets (RDDs) and DataFrames, and gives a quick tour of Spark Core, SQL, and Streaming functionality. It also provides some tips for using Spark and describes how to set up Spark locally. The presenter is introduced as a data engineer who uses Spark to load data from Kafka streams into Redshift and Cassandra. Ways to learn more about Spark are suggested at the end.

OCF.tw's talk about "Introduction to spark"

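A talk on Spark, given at the invitation of OCF and OSSF.

If you are interested in the Open Culture Foundation (財團法人開放文化基金會, OCF) or the Open Source Software Foundry (自由軟體鑄造場, OSSF),

please visit http://ocf.tw/ or http://www.openfoundry.org/

Thanks also to CLBC for providing the venue.

If you would like to work in a good working environment,

feel free to contact CLBC: http://clbc.tw/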

Osd ctw spark

Spark is a fast and general engine for large-scale data processing. It can run on Hadoop clusters through YARN, on Mesos, or standalone. For certain applications Spark can be up to 100x faster than Hadoop MapReduce, because it keeps data in memory rather than writing it to disk between steps. Its Resilient Distributed Dataset (RDD) abstraction also makes it well suited to iterative algorithms. The presenter demos Spark's word count program in Scala, Java, and Python to show how easy Spark is to use across languages.
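The word count demo mentioned above is usually written as a chain of flatMap, map, and reduceByKey. In PySpark the chain looks roughly like `sc.textFile(path).flatMap(lambda l: l.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`. The following is only a plain-Python sketch of what those three steps compute on a single machine. The helpers `flat_map`, `reduce_by_key`, and `word_count` are hypothetical names, not Spark functions, and splitting on whitespace is an assumed tokenization.

```python
from collections import defaultdict
from functools import reduce
from typing import Callable, Dict, Iterable, List, Tuple


def flat_map(f: Callable[[str], Iterable[str]], items: Iterable[str]) -> List[str]:
    # Like RDD.flatMap: each input element may yield zero or more outputs.
    return [out for item in items for out in f(item)]


def reduce_by_key(f: Callable[[int, int], int],
                  pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    # Like RDD.reduceByKey: group values by key, then fold each group with f.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(f, values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    words = flat_map(lambda line: line.split(), lines)  # the "flatMap" step
    pairs = [(word, 1) for word in words]               # the "map" step
    return reduce_by_key(lambda a, b: a + b, pairs)      # the "reduceByKey" step


lines = ["to be or not to be", "to see or not"]
print(sorted(word_count(lines).items()))
# [('be', 2), ('not', 2), ('or', 2), ('see', 1), ('to', 3)]
```

In Spark, the grouping inside `reduce_by_key` is where data moves between machines. Spark also combines values locally before that move, which this sketch does not model.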

20130912 YTC_Reynold Xin_Spark and Shark

In this talk, we present two emerging, popular open source projects: Spark and Shark. Spark is an open source cluster computing system that aims to make data analytics fast: both fast to run and fast to write. It outperforms Hadoop by up to 100x in many real-world applications. Spark programs are often much shorter than their MapReduce counterparts thanks to Spark's high-level APIs and language integration in Java, Scala, and Python. Shark is an analytic query engine built on top of Spark that is compatible with Hive. It can run Hive queries much faster in existing Hive warehouses without modifications.

These systems have been adopted by many organizations large and small (e.g. Yahoo, Intel, Adobe, Alibaba, Tencent) to implement data-intensive applications such as ETL, interactive SQL, and machine learning.

Spark devoxx2014

The document is a presentation about Apache Spark, which is described as a fast and general engine for large-scale data processing. It discusses what Spark is, its core concepts like RDDs, and the Spark ecosystem which includes tools like Spark Streaming, Spark SQL, MLlib, and GraphX. Examples of using Spark for tasks like mining DNA, geodata, and text are also presented.

Apache Spark

This document provides an overview of Apache Spark, including its core concepts, transformations and actions, persistence, parallelism, and examples. Spark is introduced as a fast and general engine for large-scale data processing, with advantages like in-memory computing, fault tolerance, and rich APIs. Key concepts covered include its resilient distributed datasets (RDDs) and lazy evaluation approach. The document also discusses Spark SQL, streaming, and integration with other tools.

Apache Spark Overview @ ferret

Apache Spark is a fast and general engine for large-scale data processing. It was originally developed in 2009 and is now supported by Databricks. Spark provides APIs in Java, Scala, and Python and can run on Hadoop, on Mesos, standalone, or in the cloud. It includes higher-level libraries such as Spark SQL, MLlib, GraphX, and Spark Streaming for structured data processing, machine learning, graph analytics, and stream processing.

Spark core

Apache Spark is a fast and general engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

Dive into spark2

Abstract –

Spark 2 is here. Spark has been the leading cluster computing framework for several years, and its second version takes it to new heights. In this seminar, we will go over Spark internals and learn the new concepts in Spark 2 for building more scalable big data applications.

Target Audience

Architects, Java/Scala developers, Big Data engineers, team leaders

Prerequisites

Java/Scala knowledge and SQL knowledge

Contents:

- Spark internals

- Architecture

- RDD

- Shuffle explained

- Dataset API

- Spark SQL

- Spark Streaming

From Query Plan to Query Performance: Supercharging your Apache Spark Queries...

The SQL tab in the Spark UI provides a lot of information for analysing your Spark queries, ranging from the query plan to all associated statistics. However, many new Spark practitioners get overwhelmed by the information presented and have trouble using it to their benefit. In this talk we want to give a gentle introduction to reading the SQL tab. We will first go over the common Spark operations, such as scans, projections, filters, aggregations, and joins, and how they relate to the Spark code you write. In the second part of the talk we will show how to read the associated statistics to pinpoint performance bottlenecks.

Introduction to Apache Spark

This presentation is an introduction to Apache Spark. It covers the basic API, some advanced features and describes how Spark physically executes its jobs.

Introduction to Spark - DataFactZ

We are a company driven by inquisitive data scientists, having developed a pragmatic and interdisciplinary approach, which has evolved over the decades working with over 100 clients across multiple industries. Combining several Data Science techniques from statistics, machine learning, deep learning, decision science, cognitive science, and business intelligence, with our ecosystem of technology platforms, we have produced unprecedented solutions. Welcome to the Data Science Analytics team that can do it all, from architecture to algorithms.

Our practice delivers data driven solutions, including Descriptive Analytics, Diagnostic Analytics, Predictive Analytics, and Prescriptive Analytics. We employ a number of technologies in the area of Big Data and Advanced Analytics such as DataStax (Cassandra), Databricks (Spark), Cloudera, Hortonworks, MapR, R, SAS, Matlab, SPSS and Advanced Data Visualizations.

This presentation is designed to help Spark enthusiasts get started. The course outline is below.

1. Introduction to Apache Spark

2. Functional Programming + Scala

3. Spark Core

4. Spark SQL + Parquet

5. Advanced Libraries

6. Tips & Tricks

7. Where do I go from here?

Introduction to apache spark

We will see an overview of Spark in big data. We will start with an introduction to Apache Spark programming, then look at Spark's history and why Spark is needed. Next, we will cover the fundamentals of Spark's components, including Spark's core abstraction, the RDD. For more detailed insight, we will also cover Spark's features, limitations, and use cases.

Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala (from webinar)

This document provides an overview of streaming big data with Spark, Kafka, Cassandra, Akka, and Scala. It discusses delivering meaning in near-real time at high velocity and an overview of Spark Streaming, Kafka and Akka. It also covers Cassandra and the Spark Cassandra Connector as well as integration in big data applications. The presentation is given by Helena Edelson, a Spark Cassandra Connector committer and Akka contributor who is a Scala and big data conference speaker working as a senior software engineer at DataStax.

Apache spark - Architecture , Overview & libraries

This document provides an overview of Apache Spark, an open-source unified analytics engine for large-scale data processing. It discusses Spark's core APIs including RDDs and transformations/actions. It also covers Spark SQL, Spark Streaming, MLlib, and GraphX. Spark provides a fast and general engine for big data processing, with explicit operations for streaming, SQL, machine learning, and graph processing. The document includes installation instructions and examples of using various Spark components.

An Overview of Apache Spark

This lecture was intended to provide an introduction to Apache Spark's features and functionality and importance of Spark as a distributed data processing framework compared to Hadoop MapReduce. The target audience was MSc students with programming skills at beginner to intermediate level.

Cassandra and SparkSQL: You Don't Need Functional Programming for Fun with Ru...

Did you know almost every feature of the Spark Cassandra connector can be accessed without even a single Monad! In this talk I’ll demonstrate how you can take advantage of Spark on Cassandra using only the SQL you already know! Learn how to register tables, ETL data, and analyze query plans all from the comfort of your very own JDBC Client. Find out how you can access Cassandra with ease from the BI tool of your choice and take your analysis to the next level. Discover the tricks of debugging and analyzing predicate pushdowns using the Spark SQL Thrift Server. Preview the latest developments of the Spark Cassandra Connector.


More from poovarasu maniandan

Spark7

The document summarizes the major new features in Apache Spark 2.3, including continuous processing for low-latency streaming, Spark running on Kubernetes, improved PySpark performance using Pandas UDFs, machine learning capabilities on streaming data, and image reading support. Key updates include continuous processing for streaming with latency of ~1 ms and at-least-once semantics, Spark's ability to run natively on Kubernetes clusters, and Pandas UDFs in PySpark, which provide a 3x to 100x performance boost over row-at-a-time UDFs. The speaker, the Spark 2.3 release manager, presented these topics at the Spark Summit on June 6, 2018.

Spark3

This document discusses using DataFrames in Spark from a data analyst's perspective. It provides an overview of Spark for data analysts, the Spark execution model, and some case studies. The key points are:

- DataFrames in Spark allow analysts to analyze all data faster without extra data copies by bringing analysis to the data.

- Transformations like joins, groups, and aggregations can be narrow transformations that operate on each partition separately or wide transformations that require data shuffling between partitions.

- Organizing data by date partitions (year/month/day) can improve performance for date-based queries, and repartitioning the data before writing it out partitioned helps avoid creating too many small files.
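The narrow/wide distinction in the second point can be sketched with plain Python lists standing in for partitions. This is a toy under stated assumptions, not Spark's API. `narrow_map_values` and `shuffle_sum_by_key` are hypothetical helpers. Routing records by `hash(key) % num_out` only approximates the hash partitioning Spark applies when it shuffles data for a keyed aggregation.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Partition = List[Tuple[str, int]]


def narrow_map_values(partitions: List[Partition],
                      f: Callable[[int], int]) -> List[Partition]:
    # Narrow: each output partition depends on exactly one input partition,
    # so no records move between partitions.
    return [[(k, f(v)) for k, v in part] for part in partitions]


def shuffle_sum_by_key(partitions: List[Partition],
                       num_out: int) -> List[Dict[str, int]]:
    # Wide: records sharing a key may sit in any input partition, so each
    # record is first routed to output partition hash(key) % num_out.
    # (Python randomizes str hashes per process, so placement can vary
    # between runs; the totals do not.)
    buckets: List[Partition] = [[] for _ in range(num_out)]
    for part in partitions:
        for k, v in part:
            buckets[hash(k) % num_out].append((k, v))
    # After the shuffle every key lives in exactly one bucket, so each
    # bucket can be aggregated locally.
    result: List[Dict[str, int]] = []
    for bucket in buckets:
        sums: Dict[str, int] = defaultdict(int)
        for k, v in bucket:
            sums[k] += v
        result.append(dict(sums))
    return result


parts = [[("2018-01", 1), ("2018-02", 2)], [("2018-01", 3), ("2018-03", 4)]]
doubled = narrow_map_values(parts, lambda v: v * 2)  # no data movement
totals = shuffle_sum_by_key(doubled, num_out=2)      # data moves between partitions
merged = {k: v for p in totals for k, v in p.items()}
print(sorted(merged.items()))  # [('2018-01', 8), ('2018-02', 4), ('2018-03', 8)]
```

The cost difference is the point. The narrow step touches each partition in place, while the wide step must move records across partitions (in Spark, across the network) before it can aggregate.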

Spark2

This document discusses leveraging Spark ML for real-time credit card approvals at a large financial institution. The existing system had challenges with scalability, limited model accuracy from limited data, and siloed data scientists. The new solution uses Spark Streaming, Spark ML, Kafka and other tools for a scalable, collaborative platform. Models are trained on large datasets using Spark ML pipelines and scored in real-time using Spark Streaming to provide sub-second responses to credit card applicants.

Ml3

This document provides an overview of machine learning and feature engineering. It discusses how machine learning can be used for tasks like classification, regression, similarity matching, and clustering. It explains that feature engineering involves transforming raw data into numeric representations called features that machine learning models can use. Different techniques for feature engineering text and images are presented, such as bag-of-words and convolutional neural networks. Dimensionality reduction through principal component analysis is demonstrated. Finally, information is given about upcoming machine learning tutorials and Dato's machine learning platform.

Ml8

Artificial intelligence is being used in several agricultural applications with benefits such as increased yields, reduced costs, and improved sustainability. Some examples described are automated irrigation systems that precisely apply water to save resources; robotic strawberry harvesters that increase productivity and address labor shortages; and driverless tractors that allow more efficient use of equipment. Overall, AI and automation in agriculture are helping to boost outputs while minimizing environmental impacts through optimized resource use.

Ml2

Microsoft R Server allows users to run R code on large datasets in a distributed, parallel manner across SQL Server, Spark, and Hadoop without code changes. It provides scalable machine learning algorithms and tools to operationalize models for real-time scoring. The document discusses how R code can be run remotely on Hadoop and Spark clusters using technologies like RevoScaleR and Sparklyr for scalability.

Ml7

Carol Smith gave a presentation on AI and machine learning. She began by defining AI as machines that exhibit intelligence, perceive their environment, and take actions to maximize success. She then provided several examples of AI and cognitive computing to illustrate what intelligent systems need, what they perceive, and what actions they take. Throughout the presentation, she emphasized that AI systems are dependent on human experts, require vast amounts of data and annotation to develop, and are only as good and unbiased as the data used to train them. She concluded by discussing the importance of guiding AI development with principles of purpose, transparency, and skills to ensure systems benefit humanity.

Ml5

This document discusses machine learning concepts and techniques for categorization, popularity, and sequence labeling. It introduces linear models, decision trees, ensemble methods, and evaluation metrics. The document aims to provide a self-contained tutorial and explain the notation used. It outlines examples of machine learning applications and discusses encoding objects with features, the machine learning framework, and specific techniques like perceptrons, logistic regression, decision trees, boosting, and hidden Markov models.

Blue arm

This document describes a Bluetooth-based home automation and security system using ARM processors. An ARM9 board runs a graphical user interface (GUI) application developed in Visual Basic .NET to control home appliances connected to an ARM7 board via Bluetooth. The ARM7 relays commands from the ARM9 to devices like lights, fans, and doors. The system aims to provide low-cost remote home monitoring and assistance for elderly and disabled individuals. Development tools such as WinCE 6.0, MDK-ARM, and C/C++ are used to program the ARM boards and interface with Bluetooth modules and home devices.

Literature survey

This document summarizes several home automation and security systems proposed in previous research papers. It describes systems that use password protected door locks, car theft prevention that disconnects the ignition, home security using web cameras or SMS to detect intruders, a wireless sensor network and GSM based system to detect intrusion and fires remotely, and a low cost system that can operate in internal or external modes to detect security issues and notify users or emergency services. The systems aim to automate tasks and increase security and safety in homes and vehicles.

Home security system using internet of things

1. The document discusses the design of an inexpensive home security system using Internet of Things (IoT) technology.

2. It proposes using a microcontroller, magnetic reed sensor to monitor doors/windows, a buzzer for alarms, and WiFi to connect and send notifications. This allows for easy setup and low maintenance at a low cost.

3. Previous research on IoT home security is reviewed, discussing methods using cameras, GSM, fingerprints, as well as robust and energy efficient systems. Each has advantages and disadvantages in terms of cost, functionality, and security.

rescue robot

The document discusses a robot and its control section. It provides top and front views of the robot while lifting a human and moving. It also shows the robot and control sections when turned off.


Recently uploaded


LORRAINE ANDREI_LEQUIGAN_GOOGLE CALENDAR

Google Calendar is a versatile tool that allows users to manage their schedules and events effectively. With Google Calendar, you can create and organize calendars, set reminders for important events, and share your calendars with others. It also provides features like creating events, inviting attendees, and accessing your calendar from mobile devices. Additionally, Google Calendar allows you to embed calendars in websites or platforms like SlideShare, making it easier for others to view and interact with your schedules.


Why is the AIS 140 standard Mandatory in India?

The Indian government has been working over the past few years to include elements of ITS in the transport sector. This standard ensures the optimal operation of the current transport infrastructure. It also increases the efficiency, safety, comfort, and quality of the system. That is why the government created the AIS-140 standard. Compliance with this standard means all vehicles used for public transit must have panic buttons and vehicle tracking modules installed. Nevertheless, in future in the standard protocol of AIS-140 you can expect fare collection and CCTV capabilities.

Get more information here: https://blog.watsoo.com/2023/12/27/all-about-prithvi-ais-140-gps-vehicle-tracker/

按照学校原版(SUT文凭证书)斯威本科技大学毕业证快速办理

退学办理电子版【微信:176555708】【(SUT毕业证书)斯威本科技大学毕业证】【微信:176555708】成绩单、外壳、offer、留信学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作(包括:隐形水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠,文字图案浮雕,激光镭射,紫外荧光,温感,复印防伪)行业标杆!精益求精,诚心合作,真诚制作!多年品质 ,按需精细制作,24小时接单,全套进口原装设备,十五年致力于帮助留学生解决难题,业务范围有加拿大、英国、澳洲、韩国、美国、新加坡,新西兰等学历材料,包您满意。

【我们承诺采用的是学校原版纸张(纸质、底色、纹路),我们拥有全套进口原装设备,特殊工艺都是采用不同机器制作,仿真度基本可以达到100%,所有工艺效果都可提前给客户展示,不满意可以根据客户要求进行调整,直到满意为止!】

【业务选择办理准则】

一、工作未确定,回国需先给父母、亲戚朋友看下文凭的情况,办理一份就读学校的毕业证【微信176555708】文凭即可

二、回国进私企、外企、自己做生意的情况,这些单位是不查询毕业证真伪的,而且国内没有渠道去查询国外文凭的真假,也不需要提供真实教育部认证。鉴于此,办理一份毕业证【微信176555708】即可

三、进国企,银行,事业单位,考公务员等等,这些单位是必需要提供真实教育部认证的,办理教育部认证所需资料众多且烦琐,所有材料您都必须提供原件,我们凭借丰富的经验,快捷的绿色通道帮您快速整合材料,让您少走弯路。

留信网认证的作用:

1:该专业认证可证明留学生真实身份

2:同时对留学生所学专业登记给予评定

3:国家专业人才认证中心颁发入库证书

4:这个认证书并且可以归档倒地方

5:凡事获得留信网入网的信息将会逐步更新到个人身份内,将在公安局网内查询个人身份证信息后,同步读取人才网入库信息

6:个人职称评审加20分

7:个人信誉贷款加10分

8:在国家人才网主办的国家网络招聘大会中纳入资料,供国家高端企业选择人才

留信网服务项目:

1、留学生专业人才库服务(留信分析)

2、国(境)学习人员提供就业推荐信服务

3、留学人员区块链存储服务

→ 【关于价格问题(保证一手价格)】

我们所定的价格是非常合理的,而且我们现在做得单子大多数都是代理和回头客户介绍的所以一般现在有新的单子 我给客户的都是第一手的代理价格,因为我想坦诚对待大家 不想跟大家在价格方面浪费时间

对于老客户或者被老客户介绍过来的朋友,我们都会适当给一些优惠。

选择实体注册公司办理,更放心,更安全!我们的承诺:客户在留信官方认证查询网站查询到认证通过结果后付款,不成功不收费!

按照学校原版(AU文凭证书)英国阿伯丁大学毕业证快速办理

出售毕业典礼【微信:176555708】【(AU毕业证书)英国阿伯丁大学毕业证】【微信:176555708】成绩单、外壳、offer、留信学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作(包括:隐形水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠,文字图案浮雕,激光镭射,紫外荧光,温感,复印防伪)行业标杆!精益求精,诚心合作,真诚制作!多年品质 ,按需精细制作,24小时接单,全套进口原装设备,十五年致力于帮助留学生解决难题,业务范围有加拿大、英国、澳洲、韩国、美国、新加坡,新西兰等学历材料,包您满意。

【我们承诺采用的是学校原版纸张(纸质、底色、纹路),我们拥有全套进口原装设备,特殊工艺都是采用不同机器制作,仿真度基本可以达到100%,所有工艺效果都可提前给客户展示,不满意可以根据客户要求进行调整,直到满意为止!】

【业务选择办理准则】

一、工作未确定,回国需先给父母、亲戚朋友看下文凭的情况,办理一份就读学校的毕业证【微信176555708】文凭即可

二、回国进私企、外企、自己做生意的情况,这些单位是不查询毕业证真伪的,而且国内没有渠道去查询国外文凭的真假,也不需要提供真实教育部认证。鉴于此,办理一份毕业证【微信176555708】即可

三、进国企,银行,事业单位,考公务员等等,这些单位是必需要提供真实教育部认证的,办理教育部认证所需资料众多且烦琐,所有材料您都必须提供原件,我们凭借丰富的经验,快捷的绿色通道帮您快速整合材料,让您少走弯路。

留信网认证的作用:

1:该专业认证可证明留学生真实身份

2:同时对留学生所学专业登记给予评定

3:国家专业人才认证中心颁发入库证书

4:这个认证书并且可以归档倒地方

5:凡事获得留信网入网的信息将会逐步更新到个人身份内,将在公安局网内查询个人身份证信息后,同步读取人才网入库信息

6:个人职称评审加20分

7:个人信誉贷款加10分

8:在国家人才网主办的国家网络招聘大会中纳入资料,供国家高端企业选择人才

留信网服务项目:

1、留学生专业人才库服务(留信分析)

2、国(境)学习人员提供就业推荐信服务

3、留学人员区块链存储服务

→ 【关于价格问题(保证一手价格)】

我们所定的价格是非常合理的,而且我们现在做得单子大多数都是代理和回头客户介绍的所以一般现在有新的单子 我给客户的都是第一手的代理价格,因为我想坦诚对待大家 不想跟大家在价格方面浪费时间

对于老客户或者被老客户介绍过来的朋友,我们都会适当给一些优惠。

选择实体注册公司办理,更放心,更安全!我们的承诺:客户在留信官方认证查询网站查询到认证通过结果后付款,不成功不收费!

Recently uploaded (20)

Production.pptxd dddddddddddddddddddddddddddddddddd

Production.pptxd dddddddddddddddddddddddddddddddddd

Spark4

- 1. Agenda ● Brief Review of Spark (15 min) ● Intro to Spark SQL (30 min) ● Code session 1: Lab (45 min) ● Break (15 min) ● Intermediate Topics in Spark SQL (30 min) ● Code session 2: Quiz (30 min) ● Wrap up (15 min)

- 2. Spark Review By Aaron Merlob

- 3. Apache Spark ● Open-source cluster computing framework ● "Successor" to Hadoop MapReduce ● Supports Scala, Java, Python, and R https://en.wikipedia.org/wiki/Apache_Spark

- 4. Spark Core + Libraries (Spark SQL, Spark Streaming, MLlib, GraphX) https://spark.apache.org

- 5. Resilient Distributed Dataset (RDD), the main abstraction ● Distributed collection ● Fault-tolerant ● Parallel operation (partitioned) ● Many data sources
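
As a loose local analogy for "parallel operation, partitioned" (plain Scala collections, not Spark), the sketch below splits a collection into contiguous slices, aggregates each slice on its own, and then combines the partial results. This is roughly how an RDD action such as `reduce` combines per-partition results. `partitionedSum` is a hypothetical helper, not a Spark API.

```scala
// Hypothetical helper: split `data` into at most `numPartitions` contiguous slices,
// sum each slice independently (the part a cluster would run in parallel),
// then combine the partial sums.
def partitionedSum(data: Seq[Int], numPartitions: Int): (List[Seq[Int]], Int) = {
  require(numPartitions > 0, "numPartitions must be positive")
  val sliceSize = math.max(1, math.ceil(data.size.toDouble / numPartitions).toInt)
  val partitions = data.grouped(sliceSize).toList
  val partials = partitions.map(_.sum) // one partial result per slice
  (partitions, partials.sum)           // combine the partial results
}

val (parts, total) = partitionedSum(1 to 10, 3)
```

With 10 elements and 3 partitions, each slice holds at most 4 elements, so the slices are 1 to 4, 5 to 8, and 9 to 10.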

- 7. Lazily Evaluated: "How good is Aaron's presentation?" ● RDD properties: Immutable, Lazily Evaluated, Cacheable, Type Inferred

- 9. Type Inferred (Scala)

- 11. Cache & Persist ● Transformed RDDs are recomputed on each action ● Store RDDs in memory using cache (or persist, which lets you choose a storage level)
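
Running real RDDs needs a Spark context, so here is a loose local analogy in plain Scala: a collection view, like an uncached RDD, defers its `map` until a result is demanded and re-runs it on every traversal, while materializing it once with `toList` plays the role of `cache()`. The `evaluations` counter is a hypothetical addition used only to make the recomputation visible.

```scala
// Local analogy only: a Scala view stands in for an uncached RDD,
// and a materialized List stands in for a cached one.
var evaluations = 0 // hypothetical counter: how often the "transformation" runs

// Like a transformation: nothing runs yet because the view is lazy.
val doubled = (1 to 5).view.map { x => evaluations += 1; x * 2 }
val afterDefine = evaluations // still 0

// Like two actions on an uncached RDD: the map re-runs for each one.
val firstSum = doubled.sum
val secondSum = doubled.sum
val afterTwoActions = evaluations // 10: five elements, evaluated twice

// Like cache(): materialize once, then reuse without recomputing.
val cached = doubled.toList
val afterCache = evaluations // 15: one more full pass to materialize
val thirdSum = cached.sum
val afterCachedAction = evaluations // still 15
```

One difference from Spark: `cache()` on an RDD is itself lazy, so the data is only stored when the first action computes it.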

- 12. SparkContext ● Your way to get data into and out of RDDs ● Available as `sc` when you launch the Spark shell ● For example: `sc.parallelize()`

- 13. Transformation vs. Action?

```scala
val data = sc.parallelize(Seq("Aaron Aaron", "Aaron Brian", "Charlie", ""))
val words = data.flatMap(d => d.split(" "))
val result = words.map(word => (word, 1)).
  reduceByKey((v1, v2) => v1 + v2)
result.filter(kv => kv._1.contains("a")).count()
result.filter { case (k, v) => v > 2 }.count()
```

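- 17. Transformation vs. Action?

```scala
// Transformations (lazy): flatMap, map, reduceByKey, and filter only build up the lineage.
val data = sc.parallelize(Seq("Aaron Aaron", "Aaron Brian", "Charlie", ""))
val words = data.flatMap(d => d.split(" "))
val result = words.map(word => (word, 1)).
  reduceByKey((v1, v2) => v1 + v2).cache() // keep `result` in memory once computed
// Actions (eager): each count() launches a job; the second can reuse the cached `result`.
result.filter(kv => kv._1.contains("a")).count()
result.filter { case (k, v) => v > 2 }.count()
```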
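
To check what the two `count()` actions above return without a cluster, this sketch replays the same pipeline on plain Scala collections. `groupBy` plus a per-key sum stands in for `reduceByKey`; it is a local analogy, not Spark code.

```scala
// Same input as the slide, as a local Seq instead of an RDD.
val data = Seq("Aaron Aaron", "Aaron Brian", "Charlie", "")

// flatMap: "".split(" ") yields Array(""), so one empty "word" appears.
val words = data.flatMap(d => d.split(" "))

// map + reduceByKey, emulated with groupBy and a per-key sum.
val result: Map[String, Int] =
  words.map(word => (word, 1))
    .groupBy { case (word, _) => word }
    .map { case (word, pairs) => (word, pairs.map(_._2).sum) }

// The two "actions" from the slide.
val containsLowerA = result.count { case (k, _) => k.contains("a") }
val moreThanTwo = result.count { case (_, v) => v > 2 }
```

Note that `contains("a")` is case-sensitive: "Aaron", "Brian", and "Charlie" all contain a lowercase "a", while the empty key does not. Only "Aaron" appears more than twice.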

- 18. Spark SQL By Aaron Merlob

- 20. Spark SQL RDDs with Schemas! Schemas = Table Names + Column Names + Column Types = Metadata

- 22. Schemas ● Schema Pros ○ Enable column names instead of column positions ○ Queries using SQL (or DataFrame) syntax ○ Make your data more structured ● Schema Cons ○ Make your data more structured ○ Reduce future flexibility (app is more fragile) ○ Y2K
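
A small plain-Scala illustration of the first pro (names instead of positions): the same record as a positional tuple and as a case class whose field names and types act like a schema. The `Stock` class, its fields, and the values are hypothetical.

```scala
// Positional access: the reader has to remember that ._2 is the closing price.
val byPosition: (String, Double) = ("2016-01-04", 100.0) // hypothetical values

// Named, typed access: field names and types play the role of a schema.
case class Stock(date: String, close: Double)
val byName = Stock(date = "2016-01-04", close = 100.0)

val closeFromTuple = byPosition._2
val closeFromSchema = byName.close
```

The flip side (the cons above): adding or retyping a column means changing `Stock` and every place that constructs it.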

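- 24. HiveContext

```scala
val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)
```

FYI, a less preferred alternative is `org.apache.spark.sql.SQLContext`; HiveContext offers a superset of its functionality (for example, HiveQL and Hive UDFs).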

- 25. DataFrames ● Primary abstraction in Spark SQL ● Evolved from SchemaRDD ● Exposes functionality via SQL or the DataFrame (DF) API ● SQL for developer productivity (ETL, BI, etc.) ● DF for data scientist productivity (R / pandas)

- 26. Live Coding - Spark-Shell ● Maven packages for S3 access, CSV, and Avro:

```shell
# --packages takes a comma-separated list of Maven coordinates
SPARK_PKGS=org.apache.hadoop:hadoop-aws:2.7.1,com.amazonaws:aws-java-sdk-s3:1.10.30,com.databricks:spark-csv_2.10:1.3.0,com.databricks:spark-avro_2.10:2.0.1
spark-shell --packages $SPARK_PKGS
```

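- 27. Live Coding - Loading CSV

```scala
val path = "AAPL.csv"
val df = sqlContext.read.
  format("com.databricks.spark.csv").
  option("header", "true").      // first line holds the column names
  option("inferSchema", "true"). // infer column types instead of all strings
  load(path)
df.registerTempTable("stocks")   // make the DataFrame queryable as "stocks" in SQL
```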

- 29. Caching: If I run a query twice, how many times will the data be read from disk? 1. RDDs are lazy. 2. Therefore the data will be read twice. 3. Unless you cache the RDD, all transformations in the RDD will execute on each action.

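- 30. Caching Tables (particularly useful when using Spark SQL to explore data, and if your data is on S3)

```scala
sqlContext.cacheTable("stocks")   // mark the "stocks" table for in-memory caching
// ... run exploratory queries against "stocks" ...
sqlContext.uncacheTable("stocks") // release the cached data
```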

- 31. Caching in SQL

| SQL command | Evaluation |
|---|---|
| `CACHE TABLE sales;` | Eager |
| `CACHE LAZY TABLE sales;` | Lazy |
| `UNCACHE TABLE sales;` | Eager |

- 32. Caching Comparison Caching Spark SQL DataFrames vs caching plain non-DataFrame RDDs ● RDDs cached at level of individual records ● DataFrames know more about the data. ● DataFrames are cached using an in-memory columnar format.
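
To make "in-memory columnar format" concrete, here is a toy plain-Scala sketch that turns row-oriented records into one array per column. It only illustrates the layout idea; Spark's actual columnar cache is more involved (for example, it can compress each column). The `Trade` and `TradeColumns` classes are hypothetical.

```scala
// Row-oriented: each record is one object, as with a cached plain RDD.
case class Trade(symbol: String, price: Double, volume: Long)
val rows = Seq(
  Trade("AAPL", 100.0, 10L),
  Trade("AAPL", 101.5, 20L),
  Trade("MSFT", 50.0, 5L)
)

// Column-oriented: one array per column, as a columnar cache lays data out.
case class TradeColumns(symbols: Array[String], prices: Array[Double], volumes: Array[Long])

def toColumns(rs: Seq[Trade]): TradeColumns =
  TradeColumns(rs.map(_.symbol).toArray, rs.map(_.price).toArray, rs.map(_.volume).toArray)

val cols = toColumns(rows)

// A query that needs one column scans a single primitive array
// instead of visiting every row object.
val totalVolume = cols.volumes.sum
```

Keeping values of one type next to each other is what makes per-column compression and reading only the needed columns practical.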

- 33. Caching Comparison What is the difference between these: (a) sqlContext.cacheTable("df_table") (b) df.cache (c) sqlContext.sql("CACHE TABLE df_table")

- 34. Lab 1 Spark SQL Workshop

- 35. Spark SQL, the Sequel By Aaron Merlob

- 36. Live Coding - Filetype ETL ● Read in a CSV ● Export as JSON or Parquet ● Read JSON
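
Spark's JSON writer emits one JSON object per line, which is the layout `read.json` expects back. As a local sketch of that CSV-to-JSON-lines step (no Spark, no type inference, no escaping), the hypothetical `csvToJsonLines` below treats every value as a string and assumes fields contain no commas or quotes.

```scala
// Hypothetical helper: naive CSV (header + rows, no quoting) to JSON Lines.
def csvToJsonLines(csv: String): Seq[String] = {
  val lines = csv.split("\n").toSeq.filter(_.nonEmpty)
  require(lines.nonEmpty, "expected a header line")
  val header = lines.head.split(",").toSeq
  lines.tail.map { line =>
    val values = line.split(",", -1).toSeq
    // Pair each column name with its value; every value is emitted as a JSON string.
    header.zip(values)
      .map { case (k, v) => "\"" + k + "\":\"" + v + "\"" }
      .mkString("{", ",", "}")
  }
}

val json = csvToJsonLines("Date,Close\n2016-01-04,100.0\n2016-01-05,101.5\n")
```

A real writer would also type the values (for example, emitting `Close` as a number once the schema is inferred) and escape special characters.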

- 37. Live Coding - Common DataFrame operations ● `show` ● `sample` ● `take` ● `first`

- 38. Read Formats

| Format | Read |
|---|---|
| Parquet | `sqlContext.read.parquet(path)` |
| ORC | `sqlContext.read.orc(path)` |
| JSON | `sqlContext.read.json(path)` |
| CSV | `sqlContext.read.format("com.databricks.spark.csv").load(path)` |

- 39. Write Formats (writing goes through the DataFrame, here `df`, not `sqlContext`)

| Format | Write |
|---|---|
| Parquet | `df.write.parquet(path)` |
| ORC | `df.write.orc(path)` |
| JSON | `df.write.json(path)` |
| CSV | `df.write.format("com.databricks.spark.csv").save(path)` |

- 40. Schema Inference Infer schema of JSON files: ● By default it scans the entire file. ● It finds the broadest type that will fit a field. ● This runs as a distributed RDD job, so the scan is parallelized, but it is still a full extra pass over the data. Infer schema of CSV files: ● The CSV parser uses the same logic as the JSON parser.
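
The "broadest type that will fit" rule can be sketched locally: infer the narrowest type per value, then widen across all values of a field. This uses a simplified, hypothetical type lattice (`IntT` widens to `LongT`, then `DoubleT`; anything incompatible falls back to `StringT`); Spark's real inference covers more types (nested structs, arrays, nulls) and its exact rules differ by data source.

```scala
import scala.util.Try

// Hypothetical simplified type lattice for illustration.
trait FieldType
case object IntT extends FieldType
case object LongT extends FieldType
case object DoubleT extends FieldType
case object BooleanT extends FieldType
case object StringT extends FieldType

// Narrowest type that fits a single raw value.
def inferValue(s: String): FieldType =
  if (Try(s.toInt).isSuccess) IntT
  else if (Try(s.toLong).isSuccess) LongT
  else if (Try(s.toDouble).isSuccess) DoubleT
  else if (s.equalsIgnoreCase("true") || s.equalsIgnoreCase("false")) BooleanT
  else StringT

// Broadest type that fits both inputs.
def widen(a: FieldType, b: FieldType): FieldType = (a, b) match {
  case (x, y) if x == y                                => x
  case (IntT, LongT) | (LongT, IntT)                   => LongT
  case (IntT | LongT | DoubleT, IntT | LongT | DoubleT) => DoubleT
  case _                                               => StringT
}

// One column: infer each value, then fold the results with widen.
def inferColumn(values: Seq[String]): FieldType = {
  require(values.nonEmpty, "need at least one value")
  values.map(inferValue).reduce((a, b) => widen(a, b))
}
```

For example, a column holding "1" and "2.5" widens to `DoubleT`, while a column mixing "1" and "abc" falls back to `StringT`.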

- 41. User Defined Functions How do you apply a “UDF”? ● Import types (StringType, IntegerType, etc) ● Create UDF (in Scala) ● Apply the function (in SQL) Notes: ● UDFs can take single or multiple arguments ● Optional registerFunction arg2: ‘return type’