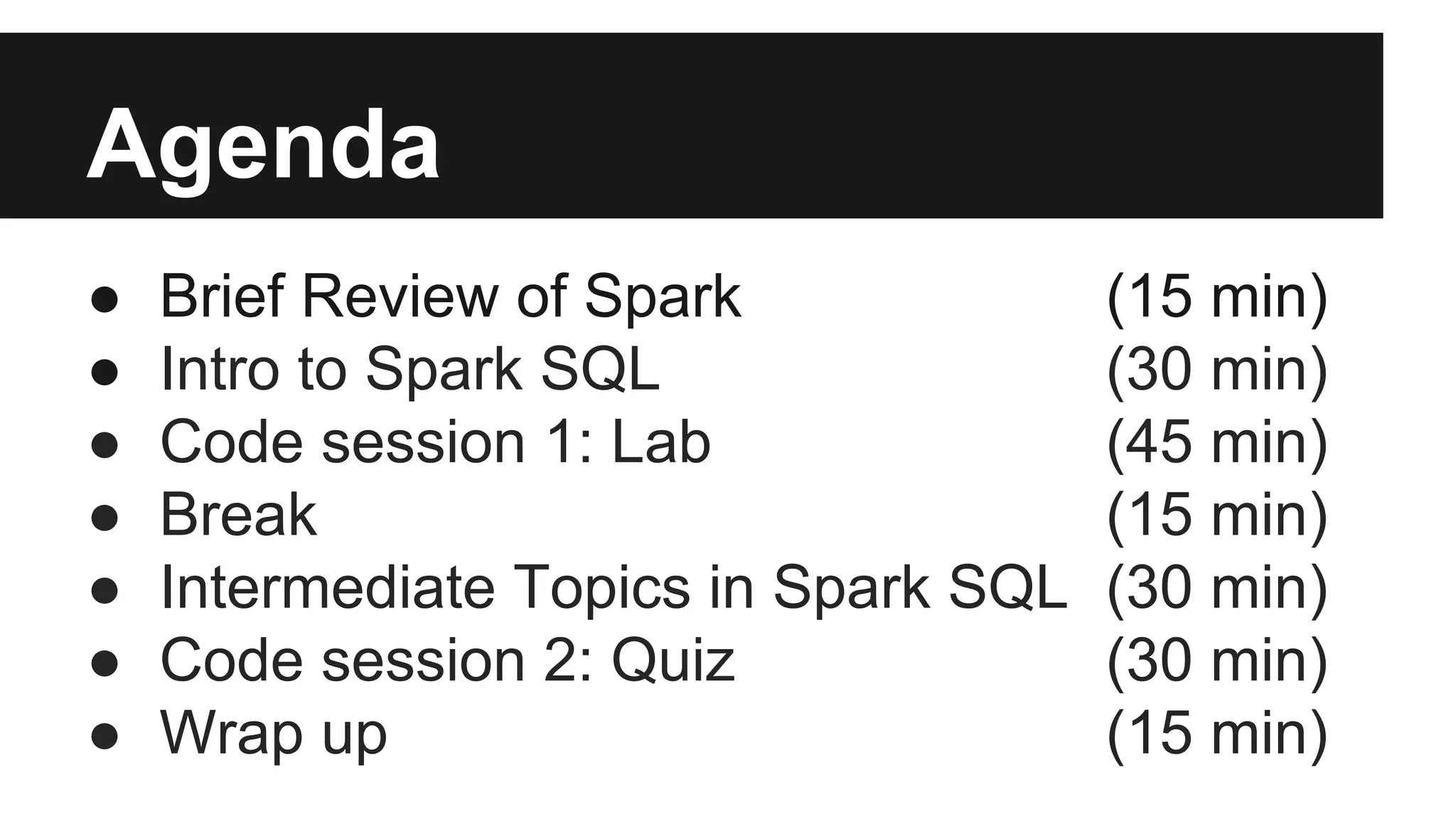

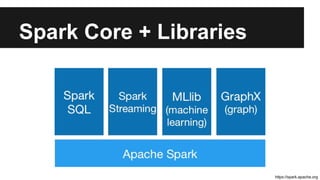

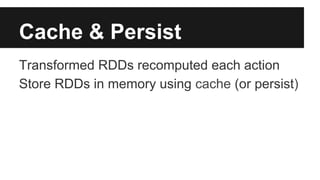

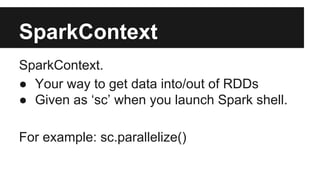

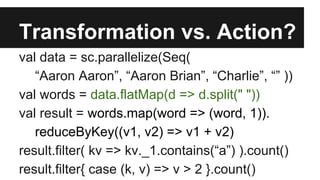

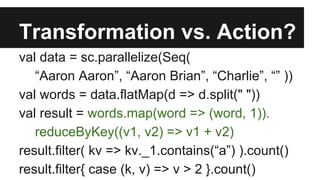

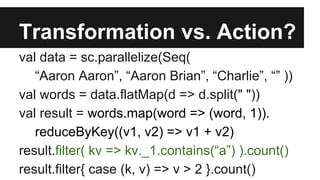

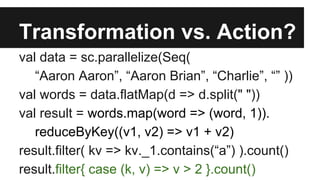

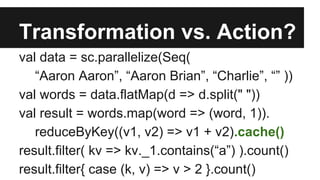

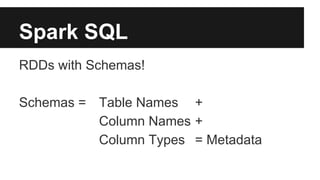

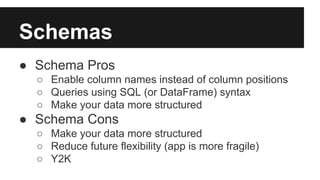

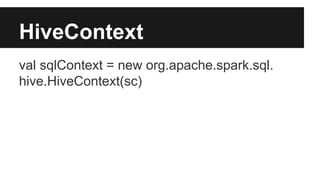

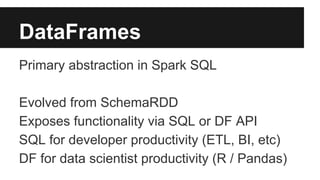

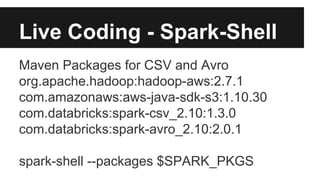

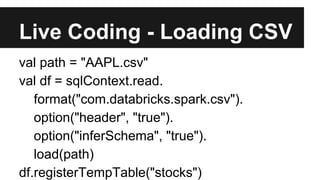

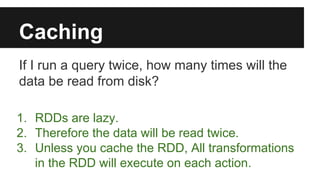

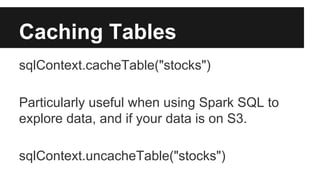

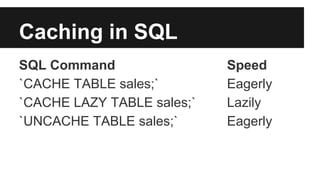

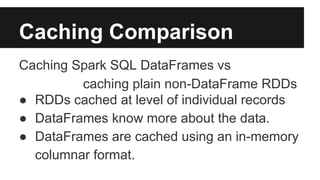

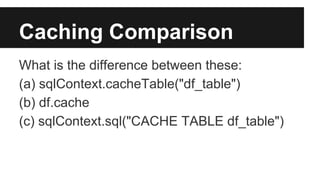

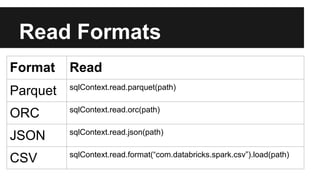

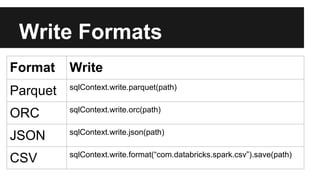

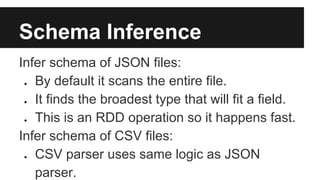

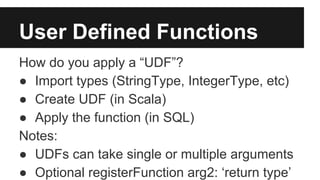

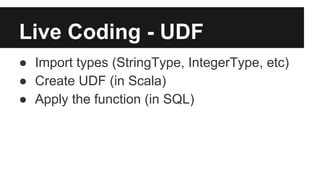

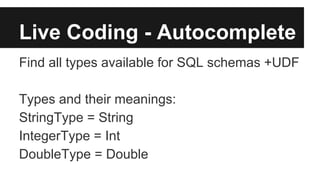

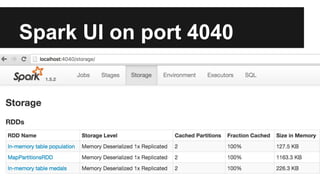

The document outlines the agenda for an Apache Spark workshop, covering Spark SQL, RDD operations, and user-defined functions (UDFs). It describes the framework's features, the advantages of using schemas in Spark SQL, and includes hands-on coding sessions. It also covers caching data and the data formats Spark can read and write, with an emphasis on efficiency and productivity for developers and data scientists.