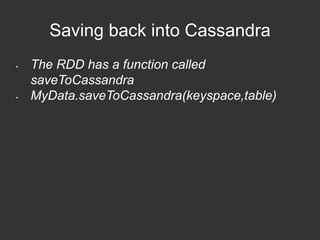

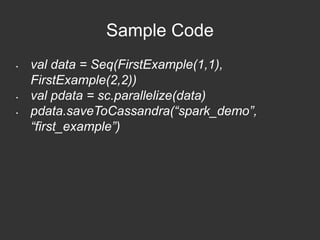

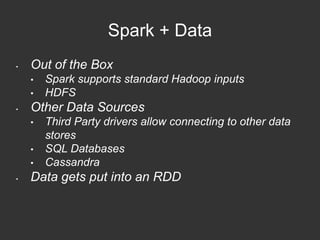

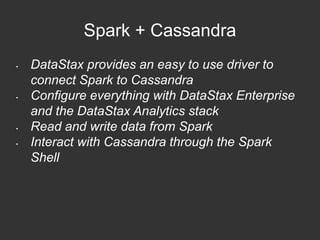

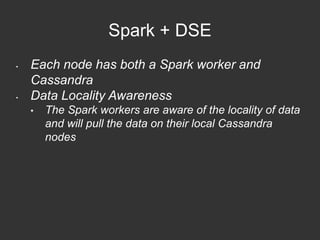

The document introduces using Apache Spark with Cassandra, demonstrating basic concepts and code examples using the Movielens 10M dataset. It covers the resilient distributed dataset (RDD), transformations and actions within Spark, and how to read from and write to Cassandra using Spark's capabilities. Additional topics include Spark's integration with Datastax and data locality awareness for optimized performance.

![Sample Code

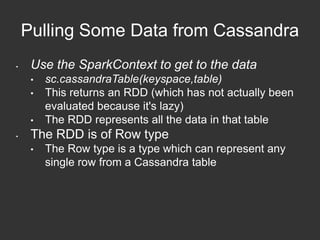

• Reading From Cassandra:

• val data = sc.cassandraTable(“spark_demo”,

“first_example” )

• data is an RDD of type CassandraRow. To

access values you need to use the get[A]

method:

• data.first.get[Int]("value")](https://image.slidesharecdn.com/sparkintroppt-141029122417-conversion-gate02/85/Spark-Introduction-21-320.jpg)

• Now we get an RDD of MyData elements](https://image.slidesharecdn.com/sparkintroppt-141029122417-conversion-gate02/85/Spark-Introduction-22-320.jpg)

• This returns an RDD of type FirstExample:

• data.first.Value](https://image.slidesharecdn.com/sparkintroppt-141029122417-conversion-gate02/85/Spark-Introduction-23-320.jpg)