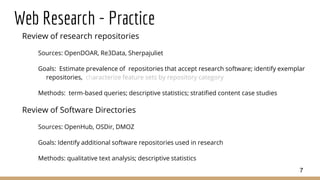

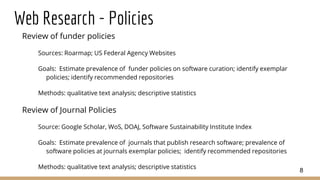

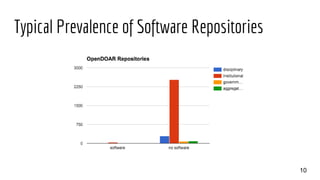

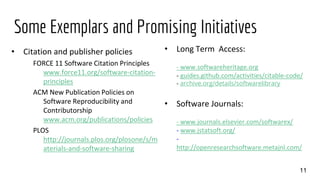

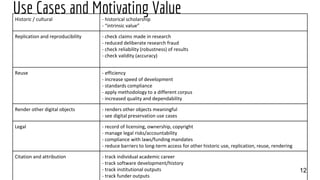

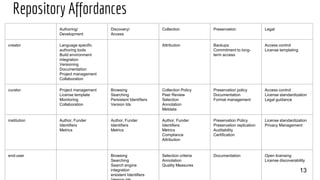

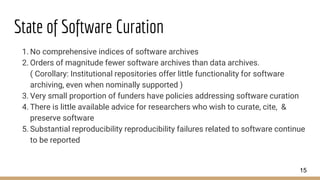

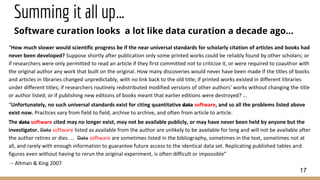

This document presents an environmental scan of software repositories for research, covering the current state of software curation, citation practices, and policies that affect software preservation. It identifies challenges such as the lack of comprehensive indices and of funder support for software curation, in contrast to the advances already made in data curation. It emphasizes the need for standardized practices for citing and preserving research software to support reproducibility and long-term access.

Disclaimer

These opinions are my own, they are not the opinions of MIT, any of the project funders, nor (with the exception of co-authored previously published work) my collaborators.

Secondary disclaimer:

“It’s tough to make predictions, especially about the future!”

-- Attributed to Woody Allen, Yogi Berra, Niels Bohr, Vint Cerf, Winston Churchill, Confucius, Disreali [sic], Freeman Dyson, Cecil B. Demille, Albert Einstein, Enrico Fermi, Edgar R. Fiedler, Bob Fourer, Sam Goldwyn, Allan Lamport, Groucho Marx, Dan Quayle, George Bernard Shaw, Casey Stengel, Will Rogers, M. Taub, Mark Twain, Kerr L. White, etc.

![Slide 2: Disclaimer](https://image.slidesharecdn.com/spnforumpresentation-altman-160809015123/85/Software-Repositories-for-Research-An-Environmental-Scan-2-320.jpg)