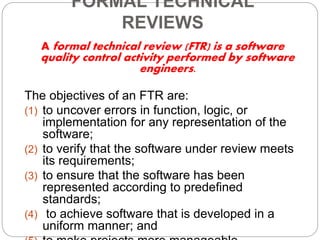

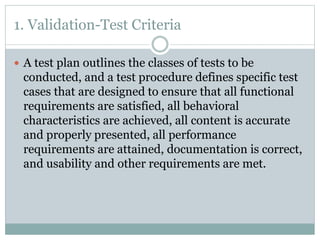

The document discusses software quality and how to achieve high-quality software. It notes that software companies often ship products with known bugs, and that low-quality software increases risk for both developers and users. It also examines the costs of quality and how management decisions affect it. Achieving quality requires software engineering methods, project management techniques, quality control, and quality assurance, with reviews, testing, and validation forming key parts of the quality process.