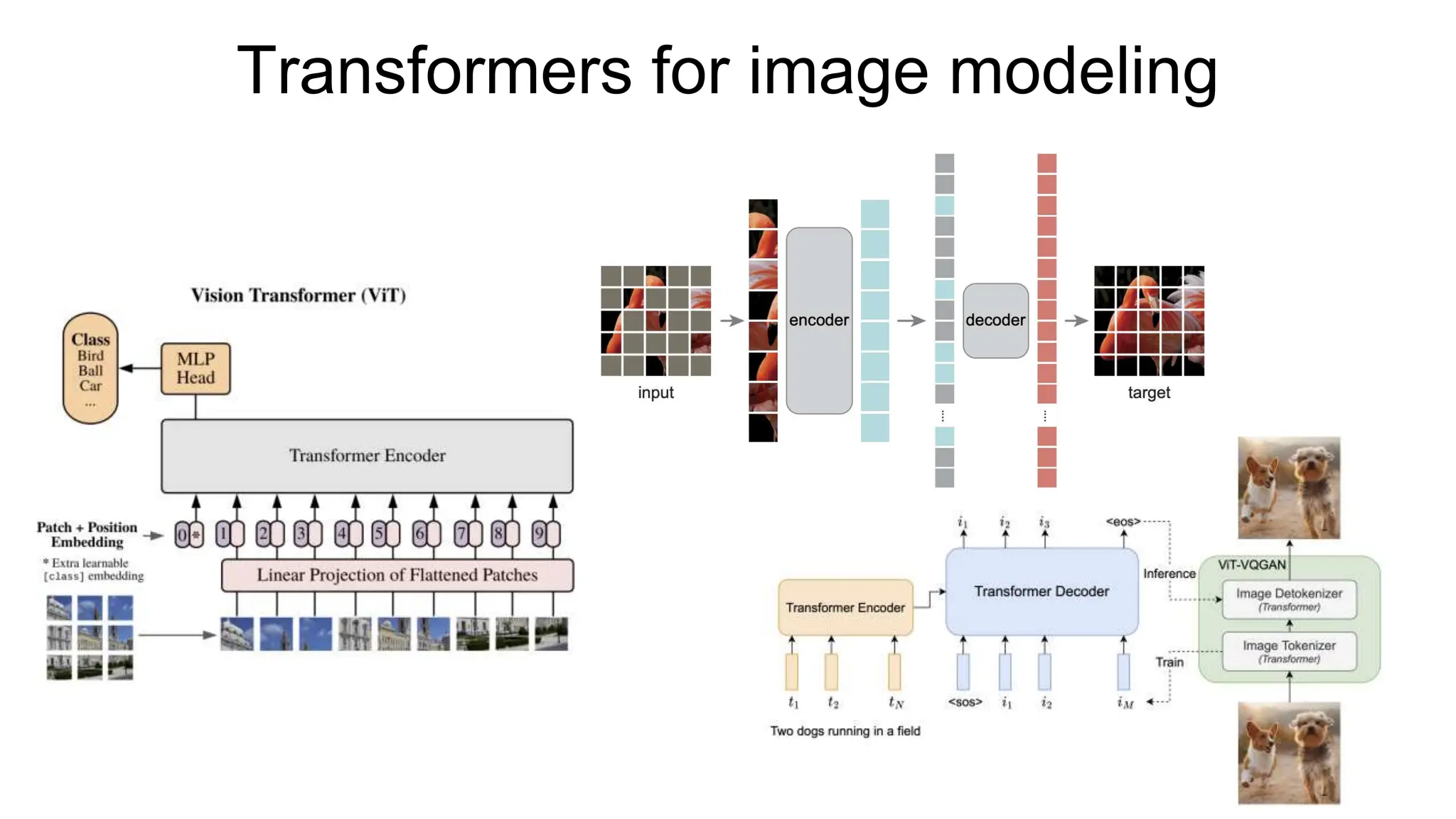

The document discusses the application of transformers to vision tasks, outlining various architectures and self-supervised learning methods. It critiques earlier approaches to image modeling with self-attention, noting their complexity and results that often do not justify choosing them over convolutional networks. It then introduces architectures such as Vision Transformer (ViT) and Swin Transformer, highlighting advances in efficiency, along with newer self-supervised techniques such as DINO and masked autoencoders.