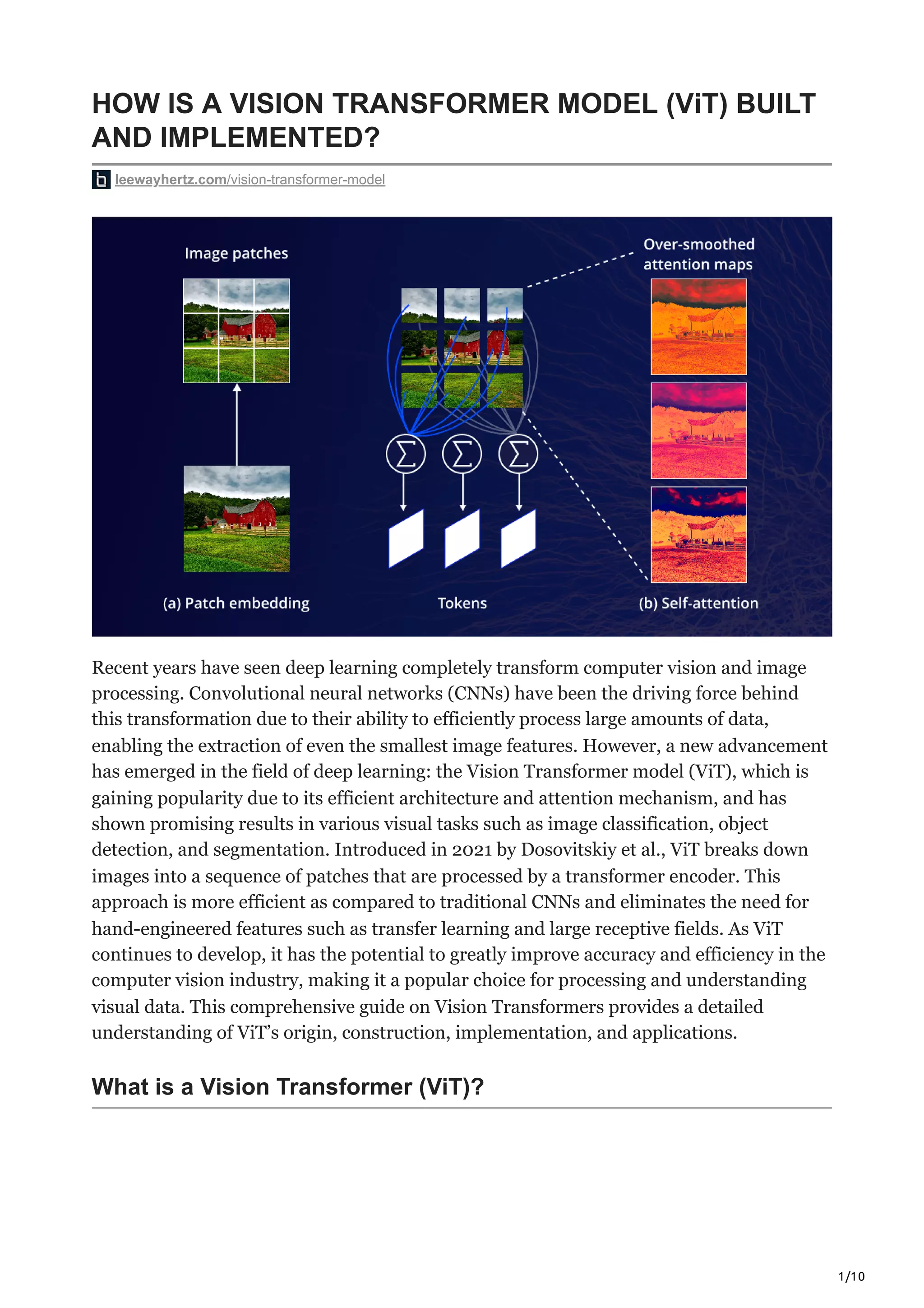

The Vision Transformer (ViT) is a deep learning architecture for computer vision that applies the transformer approach to images by processing each image as a sequence of patches. Because self-attention lets every patch attend to every other patch, ViT captures global context, and it performs competitively with traditional convolutional neural networks (CNNs) in applications such as image classification, object detection, and anomaly detection. Its scalability and efficiency, together with its ability to be pre-trained on large datasets, make ViT a promising tool for automating image recognition and related tasks.
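To make the "sequence of patches" idea concrete, here is a minimal NumPy sketch of the two steps described above: cutting an image into flattened patches and running one single-head self-attention pass over them. It is an illustration under stated assumptions, not the reference ViT implementation. The function names, the 224x224 input, the 16-pixel patch size, the 64-dimensional projection, and the random weights are all hypothetical choices. A real ViT also adds a learned linear embedding, position embeddings, a class token, and many stacked multi-head layers.

```python
import numpy as np


def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened square patches."""
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    rows, cols = h // patch_size, w // patch_size
    # (rows, p, cols, p, C) -> (rows, cols, p, p, C) -> (rows * cols, p * p * C)
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * c)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a patch sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights


# Hypothetical example: a random 224x224 RGB image and 16x16 patches.
rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
patches = image_to_patches(image, patch_size=16)

# Random projection matrices stand in for learned weights (assumption).
d_in, d_head = patches.shape[1], 64
w_q, w_k, w_v = (rng.standard_normal((d_in, d_head)) * 0.02 for _ in range(3))
attended, weights = self_attention(patches, w_q, w_k, w_v)
```

Each row of `weights` spans all patches in the image, which is the sense in which self-attention gives every patch access to global context within a single layer.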