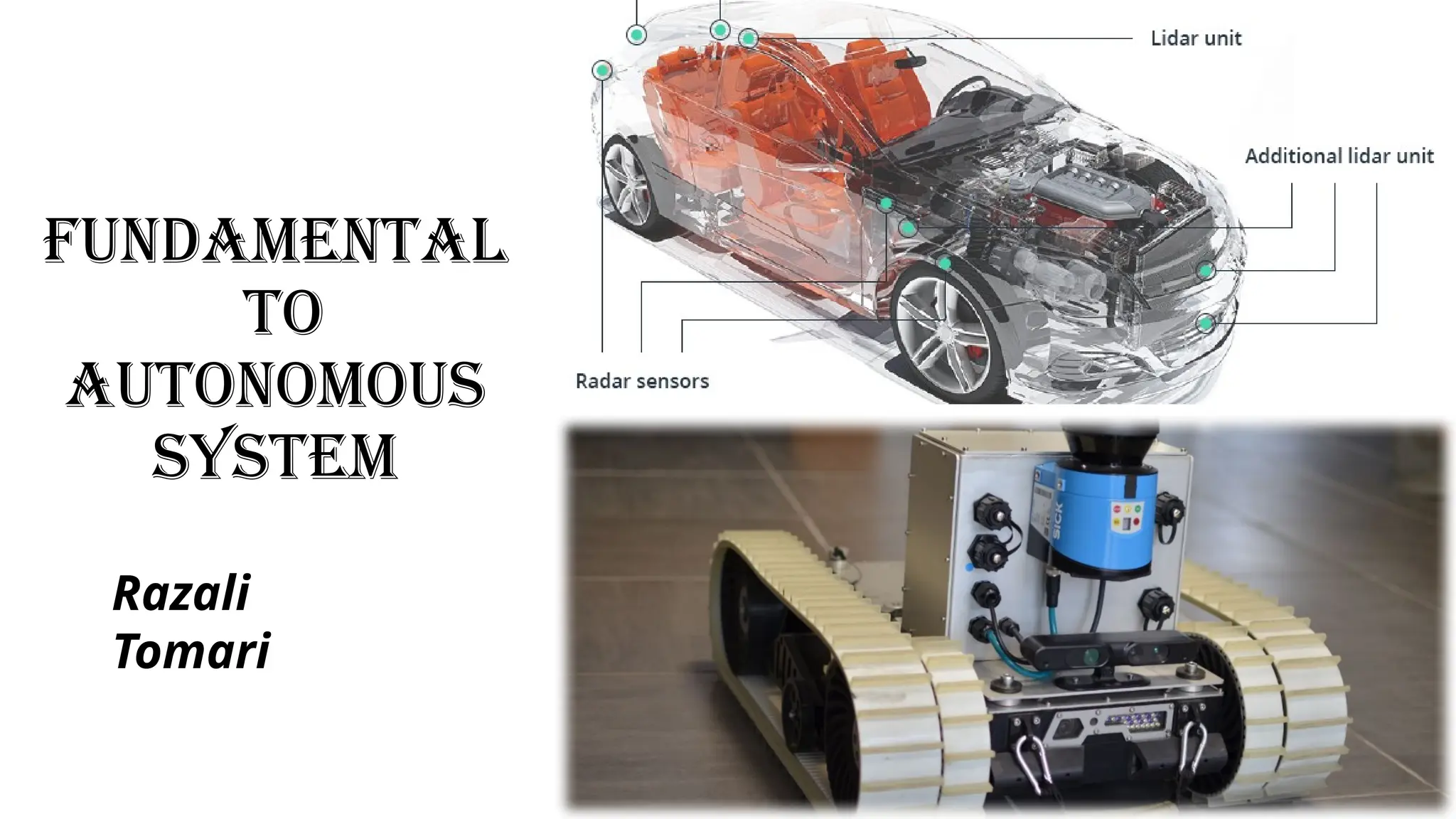

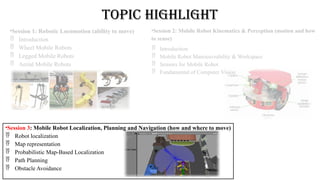

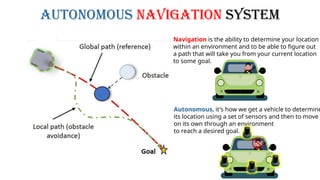

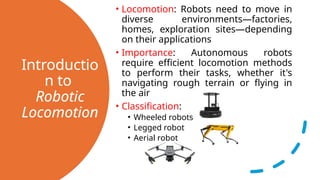

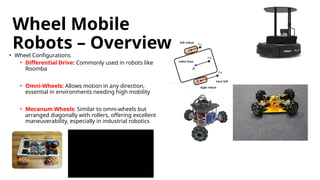

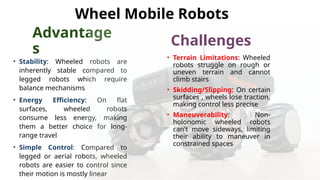

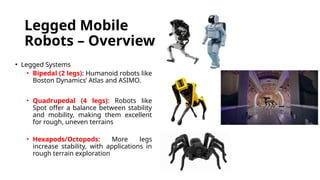

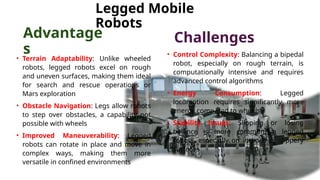

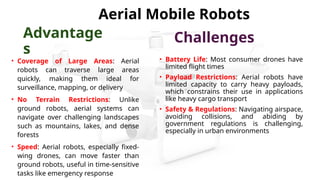

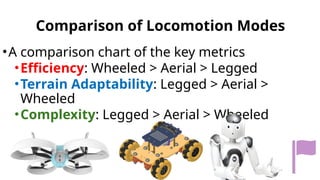

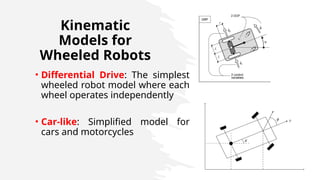

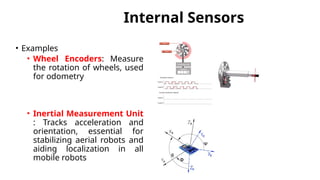

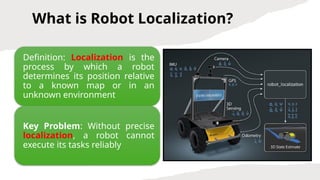

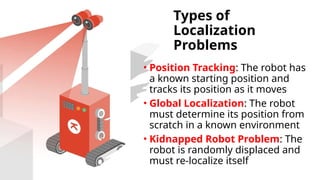

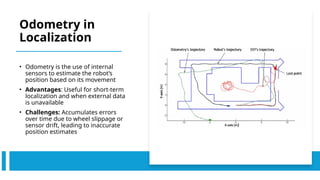

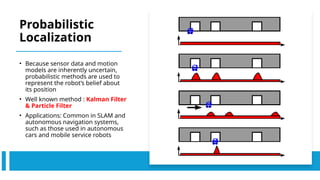

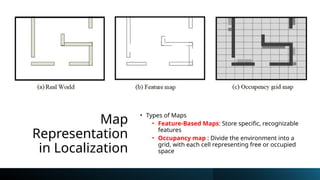

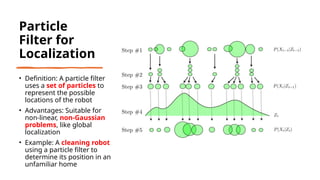

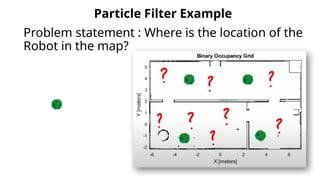

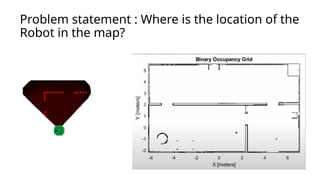

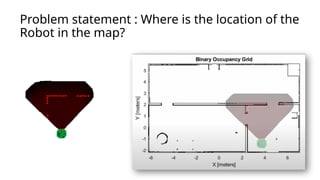

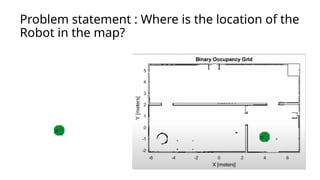

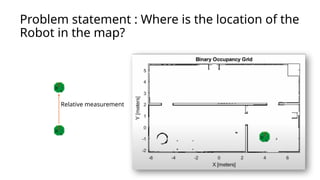

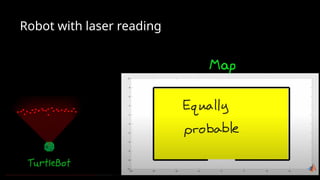

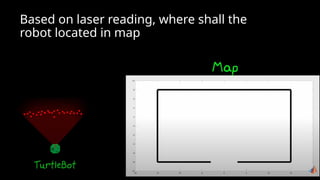

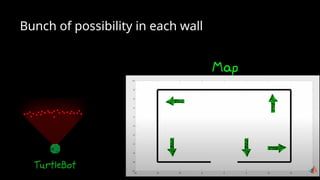

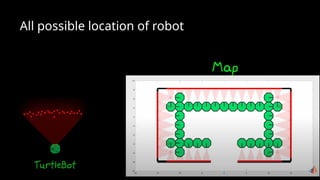

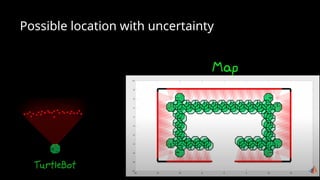

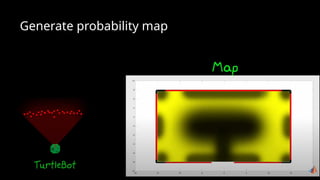

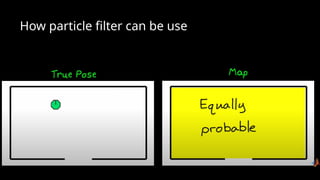

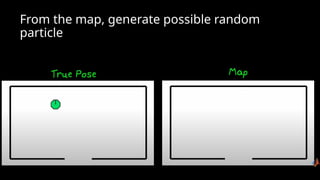

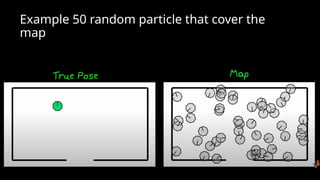

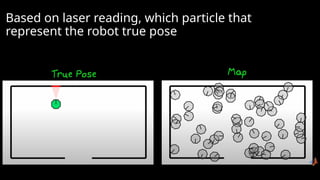

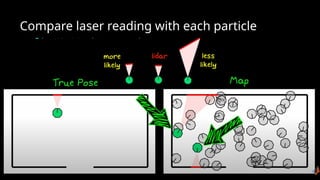

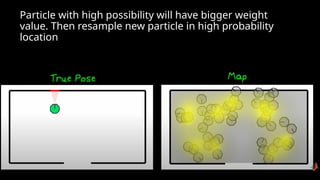

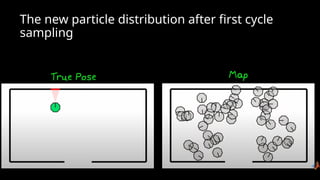

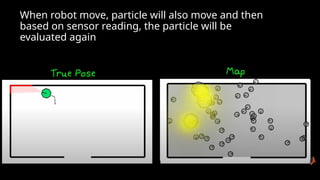

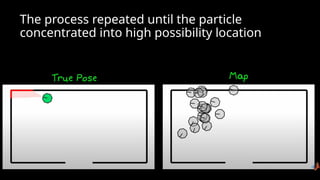

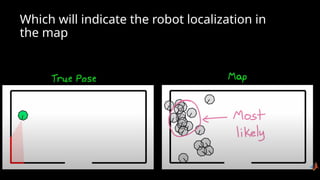

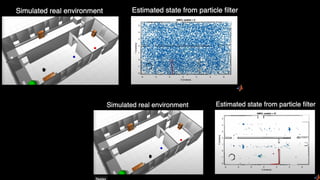

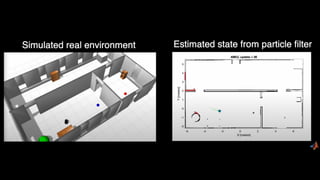

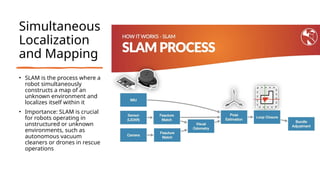

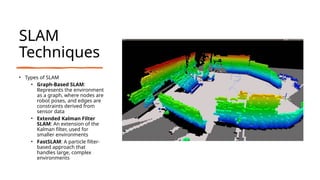

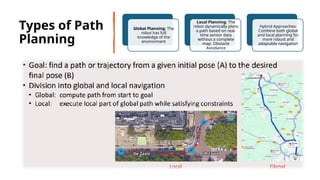

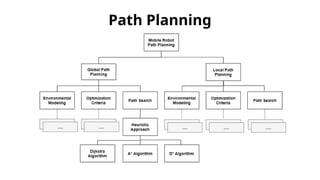

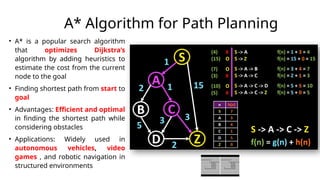

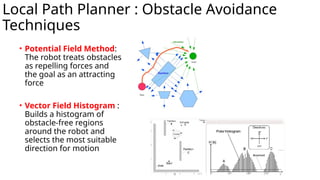

The document surveys autonomous robotic systems, focusing on locomotion, kinematics, perception, localization, planning, and navigation. It covers the main types of mobile robots (wheeled, legged, and aerial) and discusses the advantages and challenges of each. It also emphasizes sensor integration, localization techniques such as SLAM (simultaneous localization and mapping), and path-planning algorithms such as A* as ways to improve robotic navigation.
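To make the path-planning reference concrete, here is a minimal sketch of A* on a small occupancy grid. It is an illustration under stated assumptions, not the formulation used in the slides: the grid encoding (0 = free, 1 = obstacle), the 4-connected unit-cost moves, the Manhattan-distance heuristic, and the names `astar` and `GRID` are all hypothetical choices made for this example.

```python
import heapq

def astar(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None.

    Assumed encoding (not from the source): grid[r][c] == 0 is free,
    1 is an obstacle; every move costs 1.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates on a unit-cost,
        # 4-connected grid, so the returned path is optimal.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    g_cost = {start: 0}                  # best known cost from start
    came_from = {}                       # back-pointers for path recovery

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None  # goal unreachable


# Hypothetical 4x4 map: the only gap in the wall on row 1 is at column 2.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

if __name__ == "__main__":
    print(astar(GRID, (0, 0), (3, 0)))
```

A real robot would typically plan over a map produced by its localization and mapping stack, with costs reflecting terrain or clearance; the grid above only stands in for that map.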