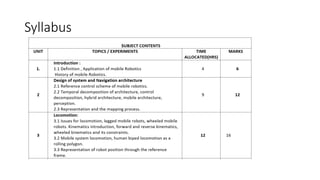

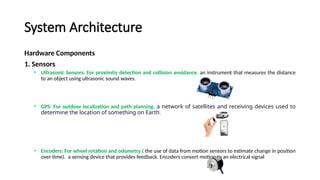

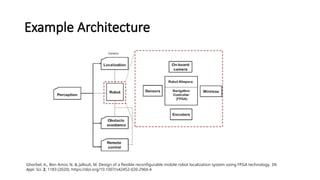

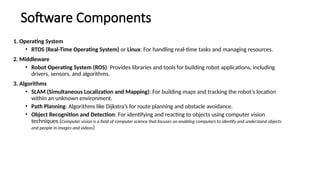

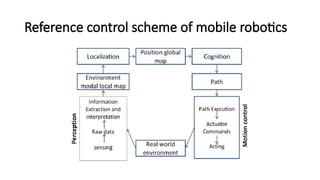

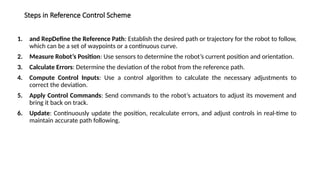

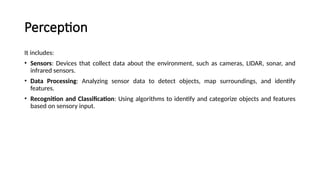

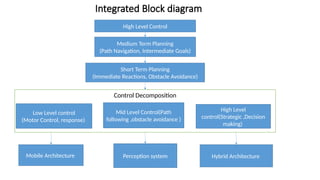

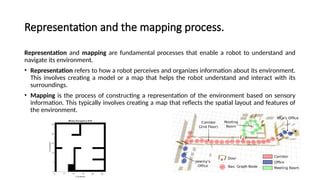

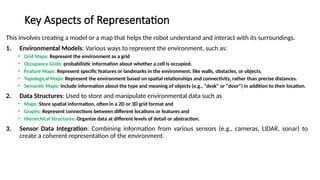

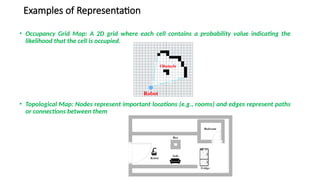

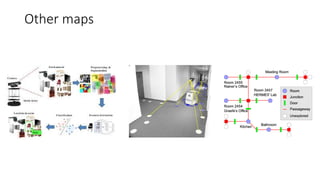

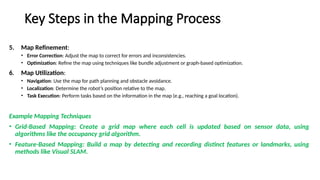

The document gives an overview of mobile robotics, covering its definition, classification, applications, and historical development. It details the components involved in system design, architecture, and navigation, emphasizing the integration of sensors, actuators, and algorithms needed for autonomous operation. It also surveys application fields such as healthcare, agriculture, and the military, and traces the field's development from early automata to modern robotics technologies.