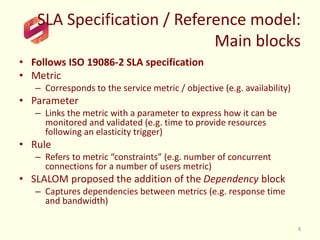

The document gives an overview of the SLALOM project, which aims to develop a core service level agreement (SLA) specification that can serve as a basis for interactions between cloud service providers and their customers. It describes SLALOM's contributions: an SLA specification model that extends existing standards, and an abstract definition of metrics that resolves ambiguities in how they are measured. As an example, the Amazon EC2 SLA is mapped onto the SLALOM framework to show how the framework handles definitions at the sample, period, and metric levels.
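To make the three levels concrete, here is a minimal Python sketch of how an availability figure could be built up from samples, through periods, to a metric. It is an illustration only: the function names and the rules chosen at each level (a sample is a single reachability check, a period counts as available if at least one of its samples succeeded, the metric is the percentage of available periods) are assumptions made for this example, not the definitions used by SLALOM or by the Amazon EC2 SLA.

```python
from typing import Sequence

# All names and rules here are hypothetical, chosen only to illustrate
# a sample -> period -> metric layering.


def sample_ok(reachable: bool) -> bool:
    """Sample level: interpret one measurement (assumed: a reachability check)."""
    return reachable


def period_available(samples: Sequence[bool]) -> bool:
    """Period level: assumed rule that a period is available if any sample succeeded.

    A period with no samples is treated as unavailable.
    """
    return any(sample_ok(s) for s in samples)


def availability_percent(periods: Sequence[Sequence[bool]]) -> float:
    """Metric level: percentage of periods that were available."""
    if not periods:
        raise ValueError("at least one period is required")
    available = sum(1 for p in periods if period_available(p))
    return 100.0 * available / len(periods)


# Four periods of two samples each; only the second period has no successful sample.
periods = [[True, True], [False, False], [True, False], [True, True]]
print(availability_percent(periods))  # 75.0
```

Separating the levels this way means each rule can be changed on its own. For instance, tightening the period rule to require that every sample succeed would change the result without touching the metric formula, which is the kind of measurement ambiguity an abstract metric definition is meant to pin down.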