The document outlines the Sicomoro-CM project, a collaboration of several Madrid universities on developing trustworthy systems, funded with 635,088.65€ over four years. It details work packages on methodologies for high-quality software development, covering model verification, validation, and cloud system applications. The project emphasizes formal methods, model-driven engineering, and tool integration to improve software reliability across domains.

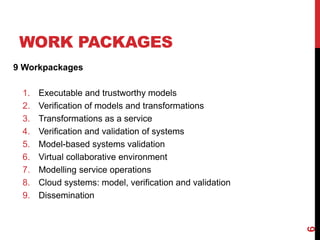

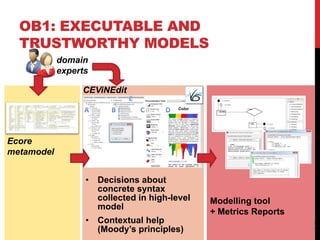

![OB1: EXECUTABLE AND TRUSTWORTHY MODELS

1. Specify DSLs in a cost-effective way

2. Analyse DSL semantics

• Operational

• Denotational

Results:

• Transformation reuse (in-place, out-place) [dLG17]

• Develop DSLs through examples [LGG17]

• Evaluate effectiveness of concrete syntax [GVB17]

[dLG17] de Lara, Guerra: A Posteriori Typing for Model-Driven Engineering: Concepts, Analysis, and Applications. ACM TOSEM. 25(4): 31:1-31:60 (2017)

[LGG17] López-Fernández, Garmendia, Guerra, de Lara: Example-Based Generation of Graphical Modelling Environments. ECMFA 2016: 101-117

[GVB17] Granada, Vara, Brambilla, Bollati, Marcos. Analysing the cognitive effectiveness of the WebML visual notation. Software and Systems Modeling, 16(1):195-227, 2017](https://image.slidesharecdn.com/sicomoro-170718154616/85/SICOMORO-7-320.jpg)

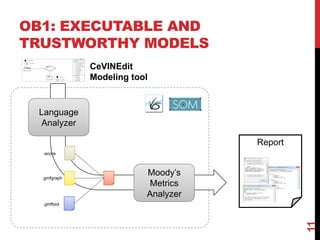

![OB2: VERIFICATION OF MODELS AND TRANSFORMATIONS

1. Analyse properties of (meta-)models

2. Analyse properties of transformations

• Model-to-model, Model-to-text, In-place

Results:

• Static analysis of ATL [SGdL17]

• Traceability analysis [JVBM15]

• DSLs for meta-model validation & verification [LGdL17]

[LGdL17] López-Fernández, Guerra, de Lara: Combining unit and specification-based testing for meta-model validation and verification. Inf. Syst. 62: 104-135 (2016)

[SGdL17] Sánchez-Cuadrado, Guerra, de Lara. Static analysis of model transformations. IEEE TSE, in press(1):1-32, 2017

[JVBM15] Jimenez, Vara, Bollati, Marcos. Metagem-trace: Improving trace generation in model transformation by leveraging the role of transformation models. SCICO, 98:3-27, 2015.](https://image.slidesharecdn.com/sicomoro-170718154616/85/SICOMORO-12-320.jpg)

![OB3: TRANSFORMATIONS AS A SERVICE

1. Optimize and execute transformations (aaS) in the cloud

2. Distributed, streaming transformations

Results:

• Distil [SGdL15]

• Collaborations with other groups:

• ATLANMOD [BTS16]

• L’Aquila – MDEForge [RRP16]

• Datalyzer (ongoing)

[SGdL15] Carrascal, Sánchez-Cuadrado, de Lara. Building MDE cloud services with Distil. In CloudMDE@MODELS, CEUR Workshop Proceedings 1563, pp. 19-24, 2015.

[BTS16] Benelallam, Tisi, Sánchez-Cuadrado, de Lara, Cabot. Efficient model partitioning for distributed model transformations. SLE’16, pp. 226-238.

[RRP16] Di Rocco, Di Ruscio, Pierantonio, Sánchez Cuadrado, de Lara, Guerra. Using ATL Transformation Services in the MDEForge Collaborative Modeling Platform. ICMT 2016: 70-78](https://image.slidesharecdn.com/sicomoro-170718154616/85/SICOMORO-16-320.jpg)

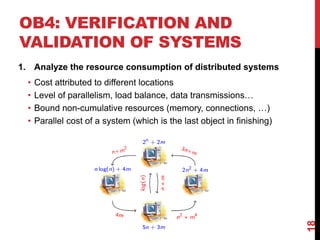

![OB4: VERIFICATION AND VALIDATION OF SYSTEMS

Results:

• Resource analysis with cost centers [AACGGPG15]

• Performance indicators [ACPR15]

• Peak cost analysis [ACR15]

• Parallel cost analysis [ACJR15]

• Resource analysis in Software Product Lines [ZAV16]

[AACGGPG15] Object-Sensitive Cost Analysis for Concurrent Objects, STVR, 25(3):218-271

[ACPR15] Quantified Abstract Configurations of Distributed, FAoC 27(4):665-699

[ACR15] Non-Cumulative Resource Analysis. TACAS’15, 9035 of LNCS, 85-100

[ACJR15] Parallel Cost Analysis of Distributed Systems, SAS’15, 9291 of LNCS, 275-292

[ZAV16] Resource-usage-aware configuration in software product lines, JLAMP 85(1), 2016, 173-199](https://image.slidesharecdn.com/sicomoro-170718154616/85/SICOMORO-19-320.jpg)