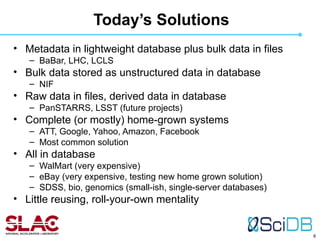

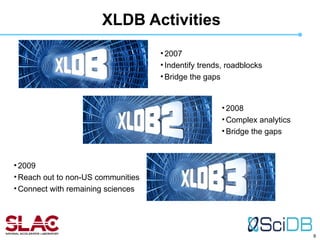

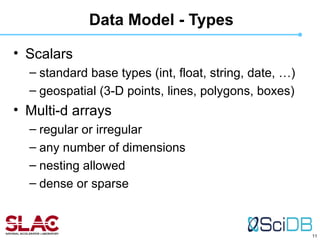

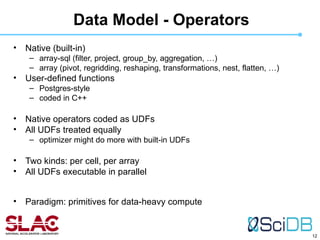

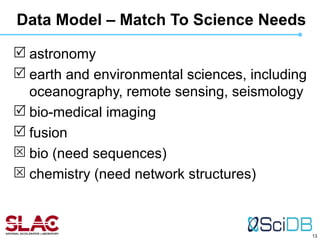

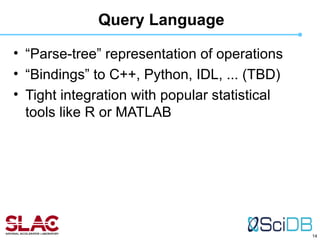

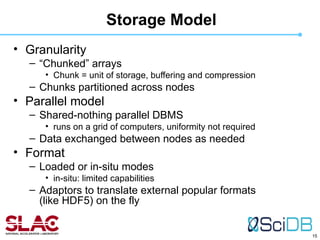

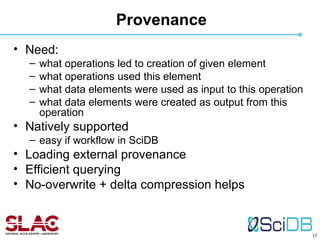

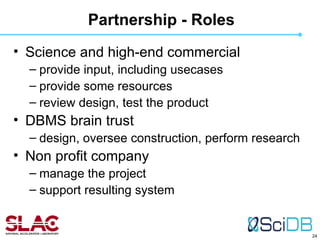

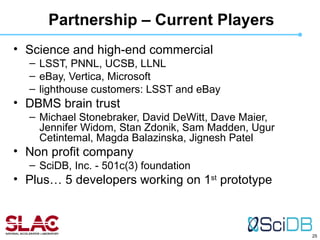

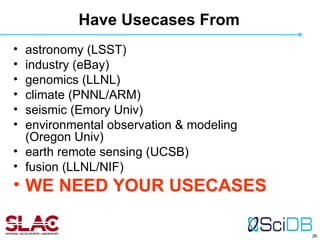

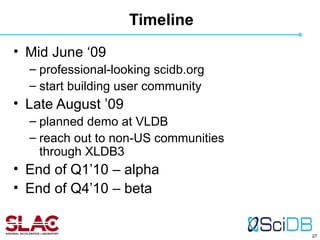

The document discusses the need for a new open source database management system called SciDB to address the challenges of storing and analyzing extremely large scientific datasets. SciDB is being designed to handle petabyte-scale multidimensional array data with native support for features important to science like provenance tracking, uncertainty handling, and integration with statistical tools. An international partnership involving scientists, database experts, and a nonprofit company is developing SciDB with initial funding and use cases coming from astronomy, industry, genomics and other domains.