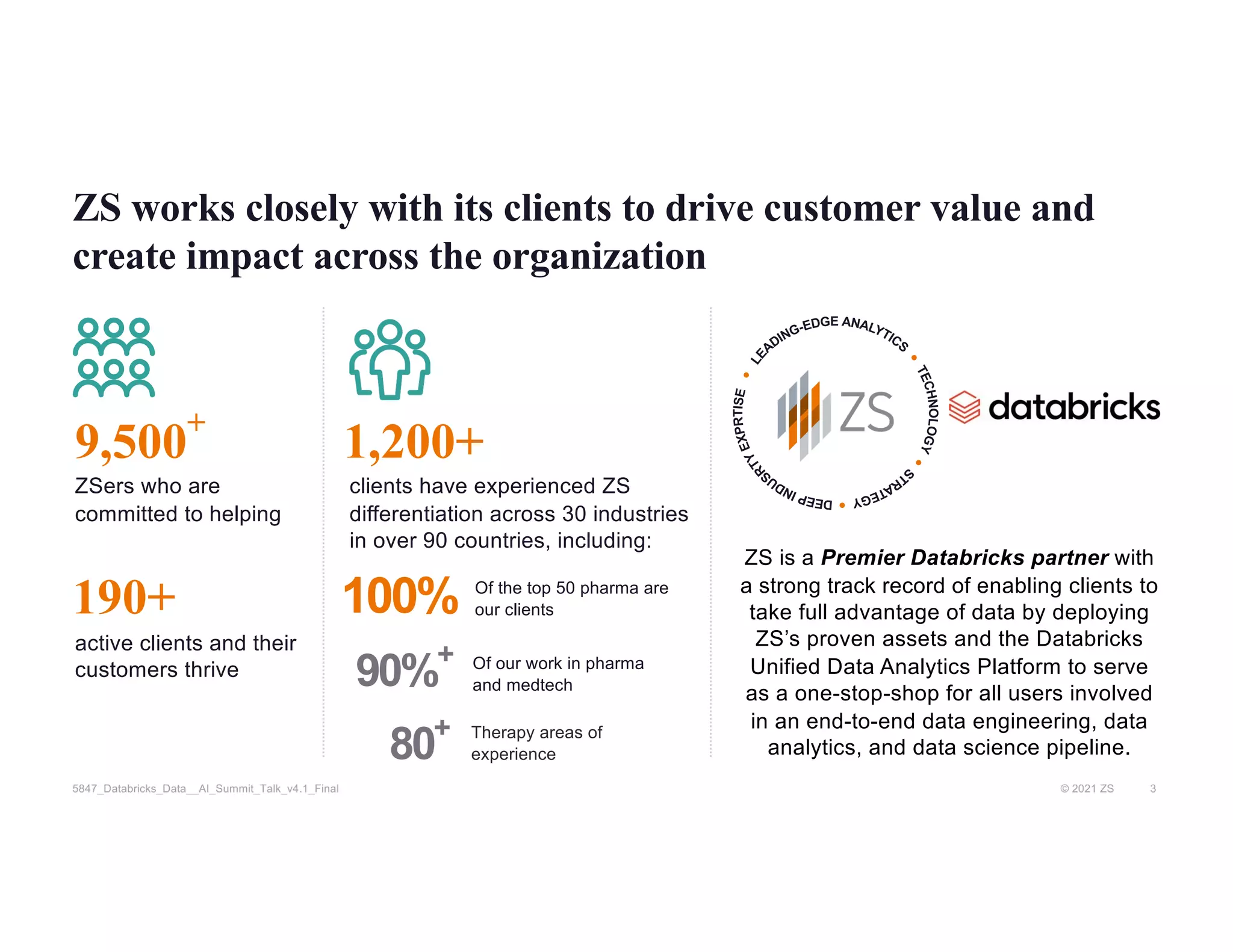

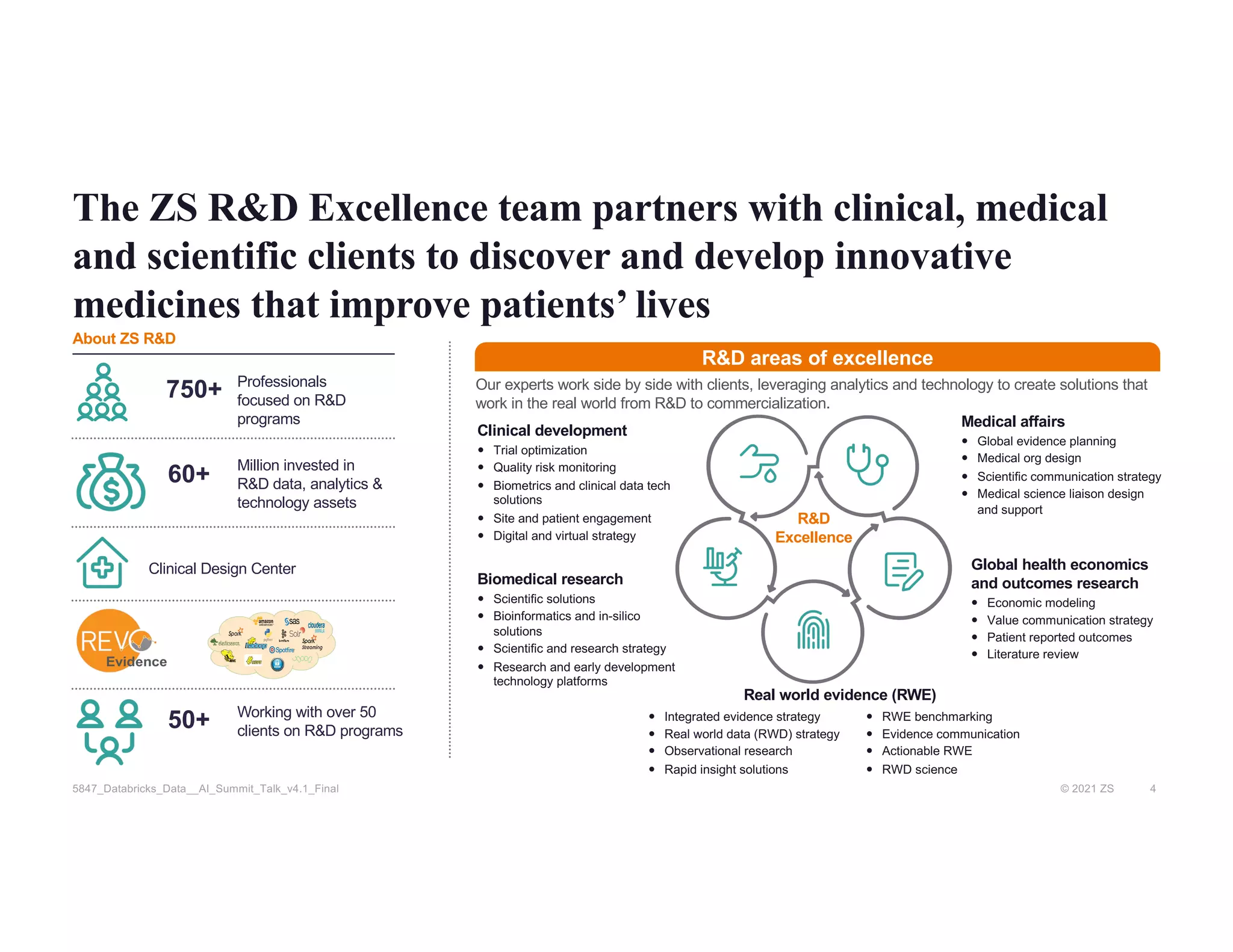

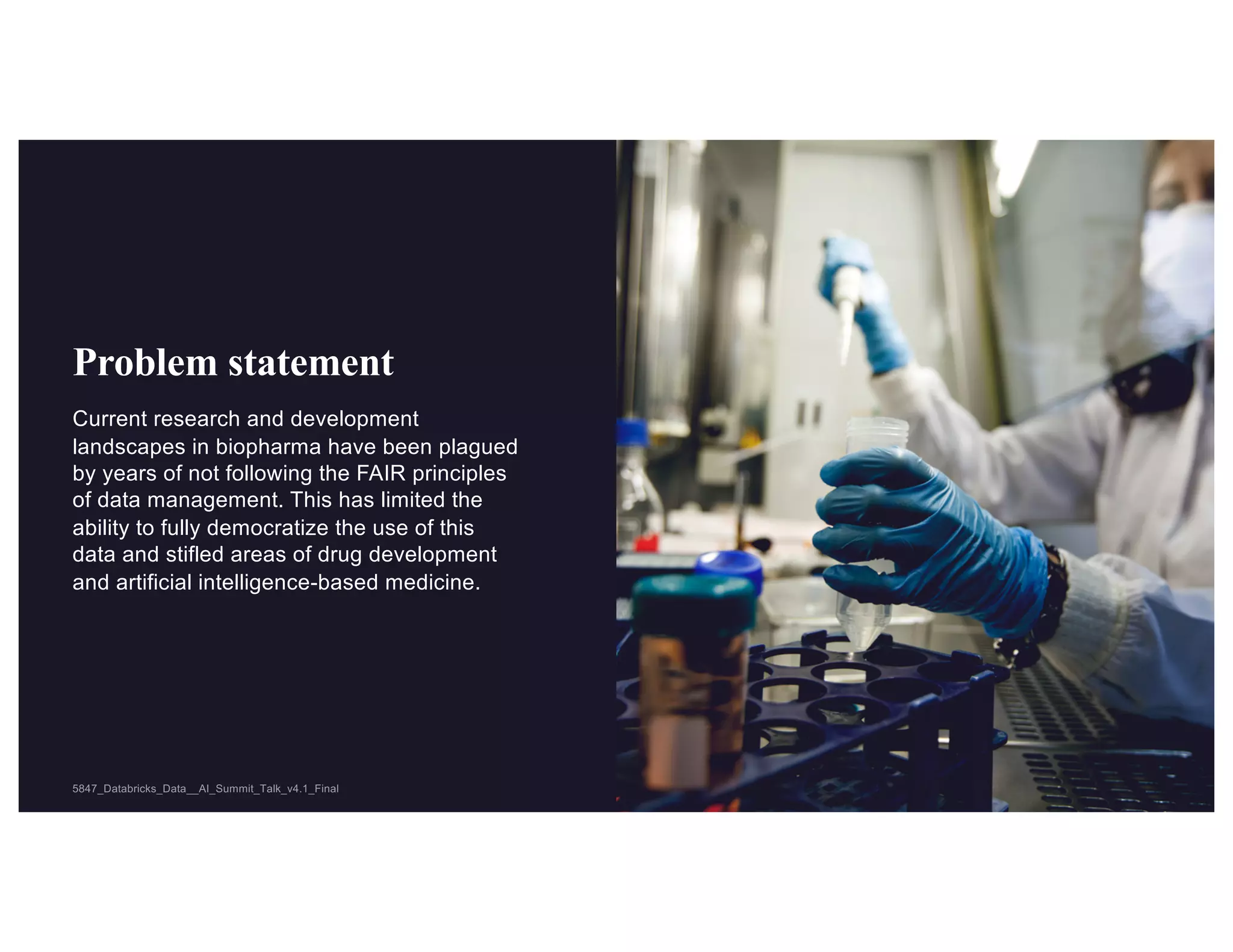

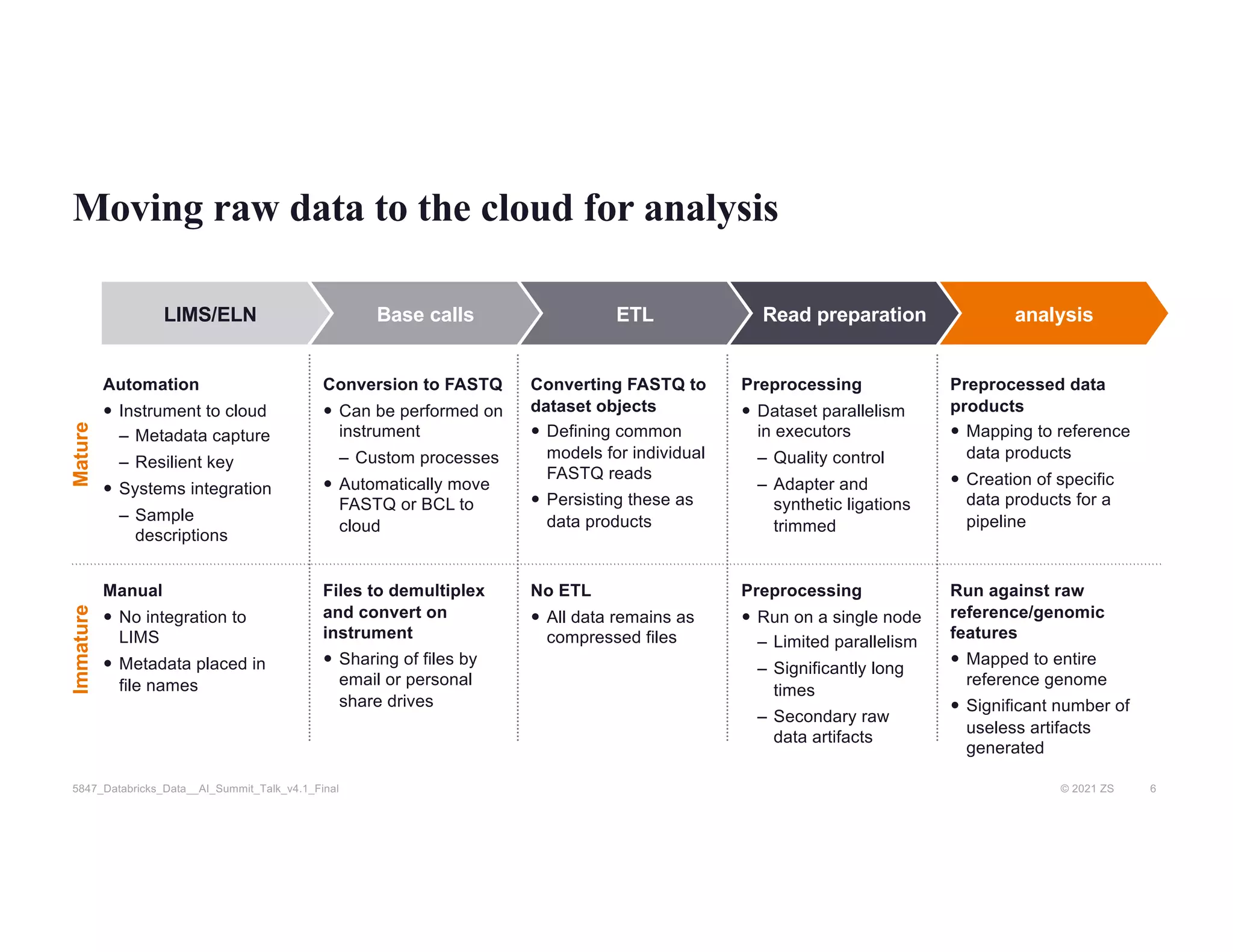

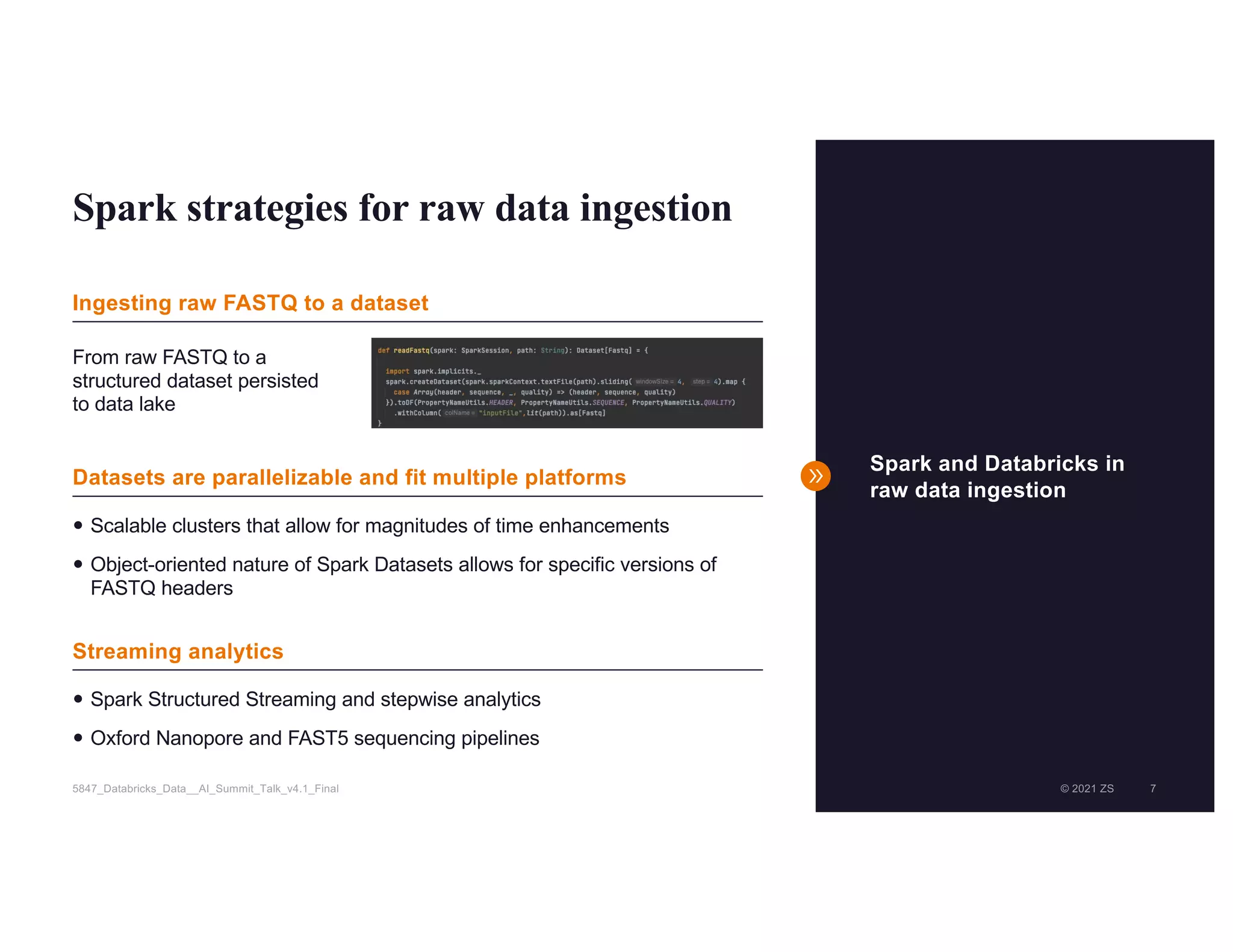

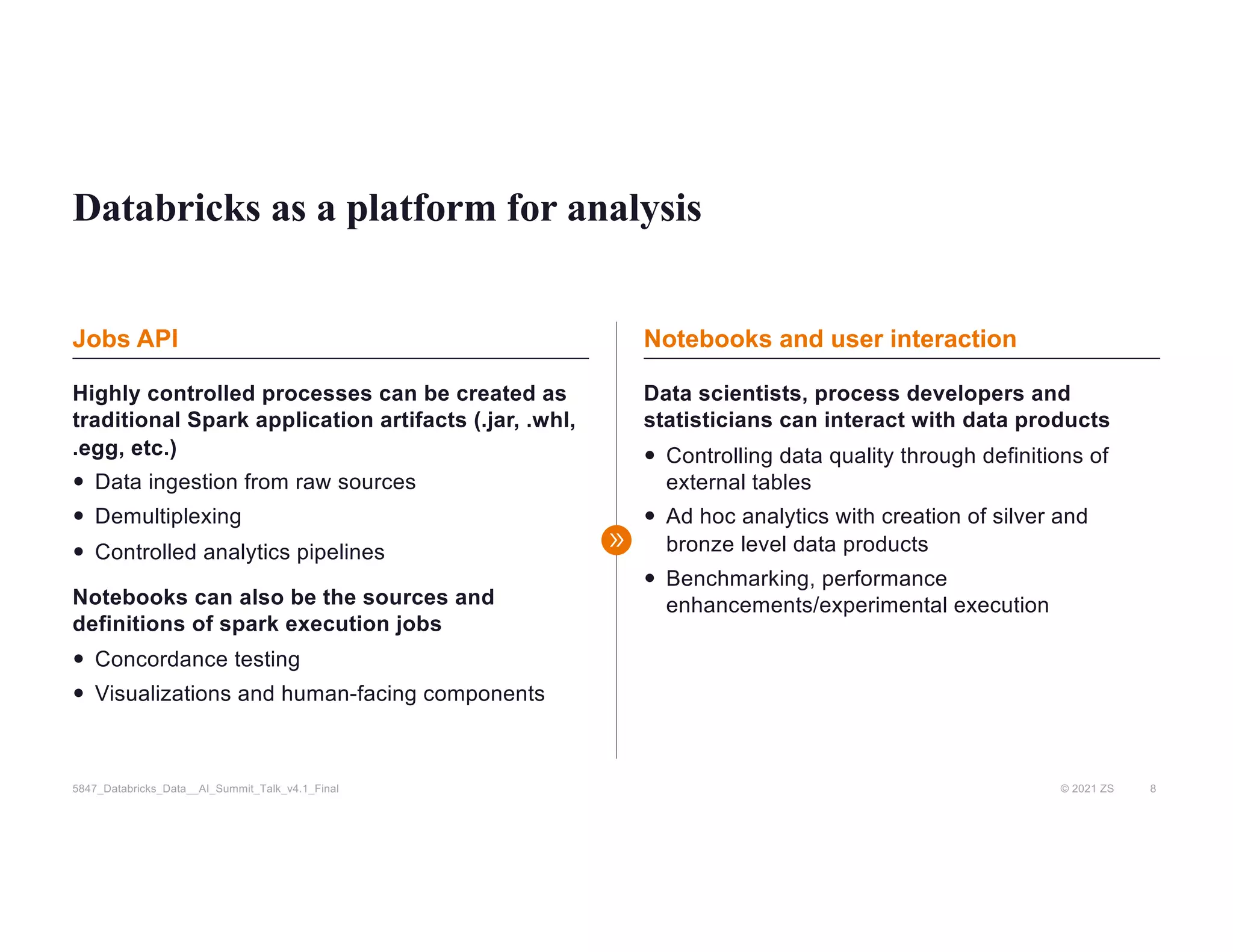

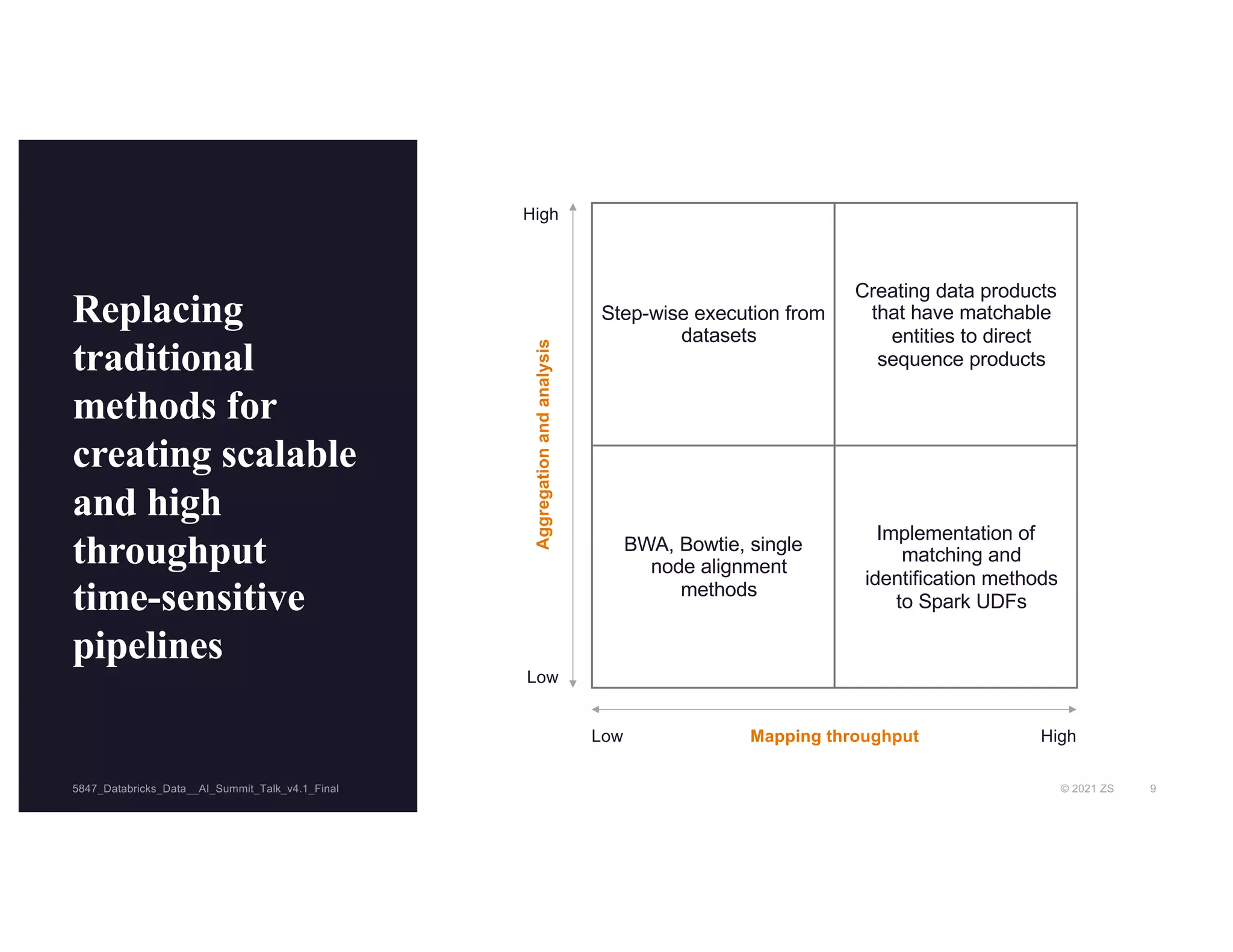

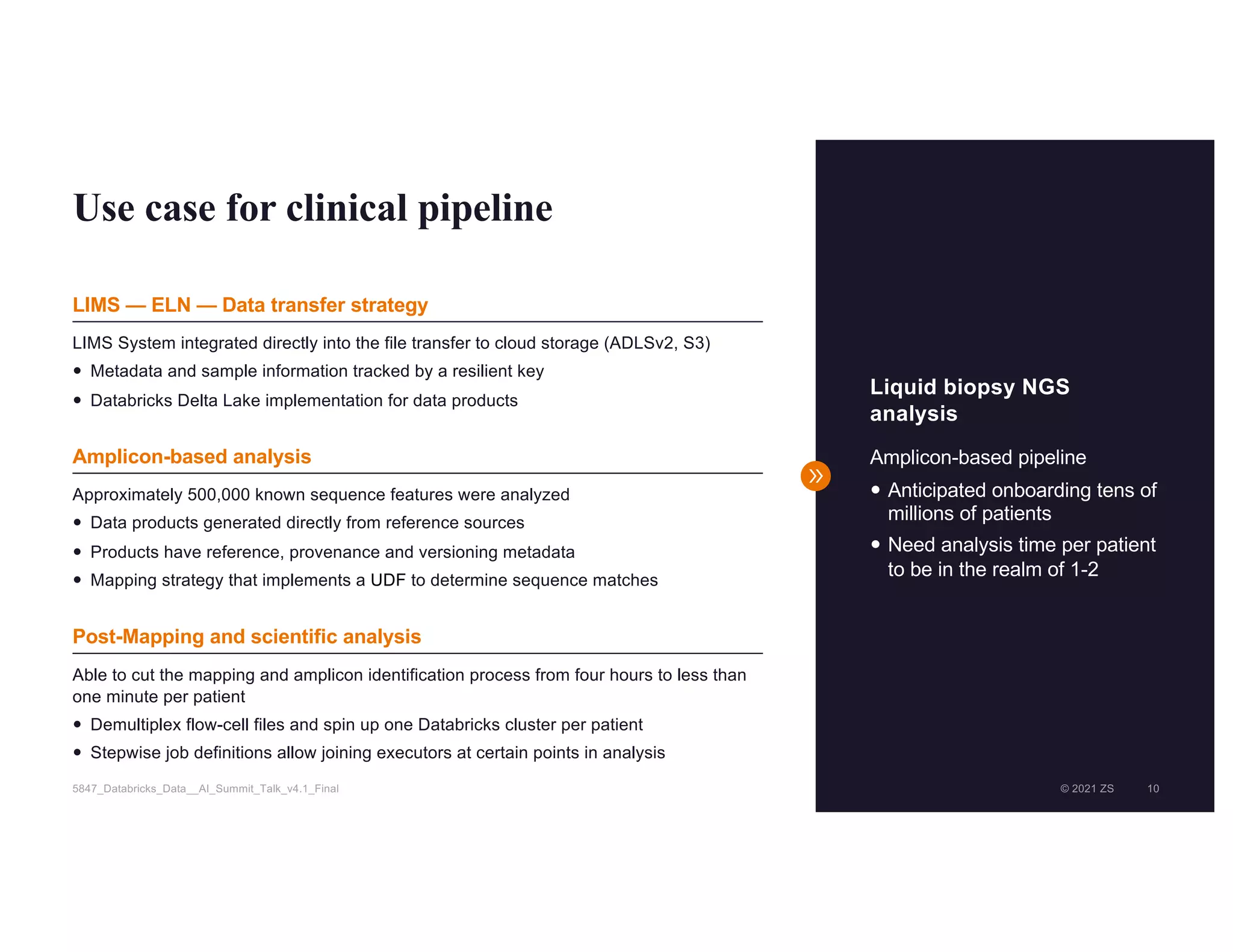

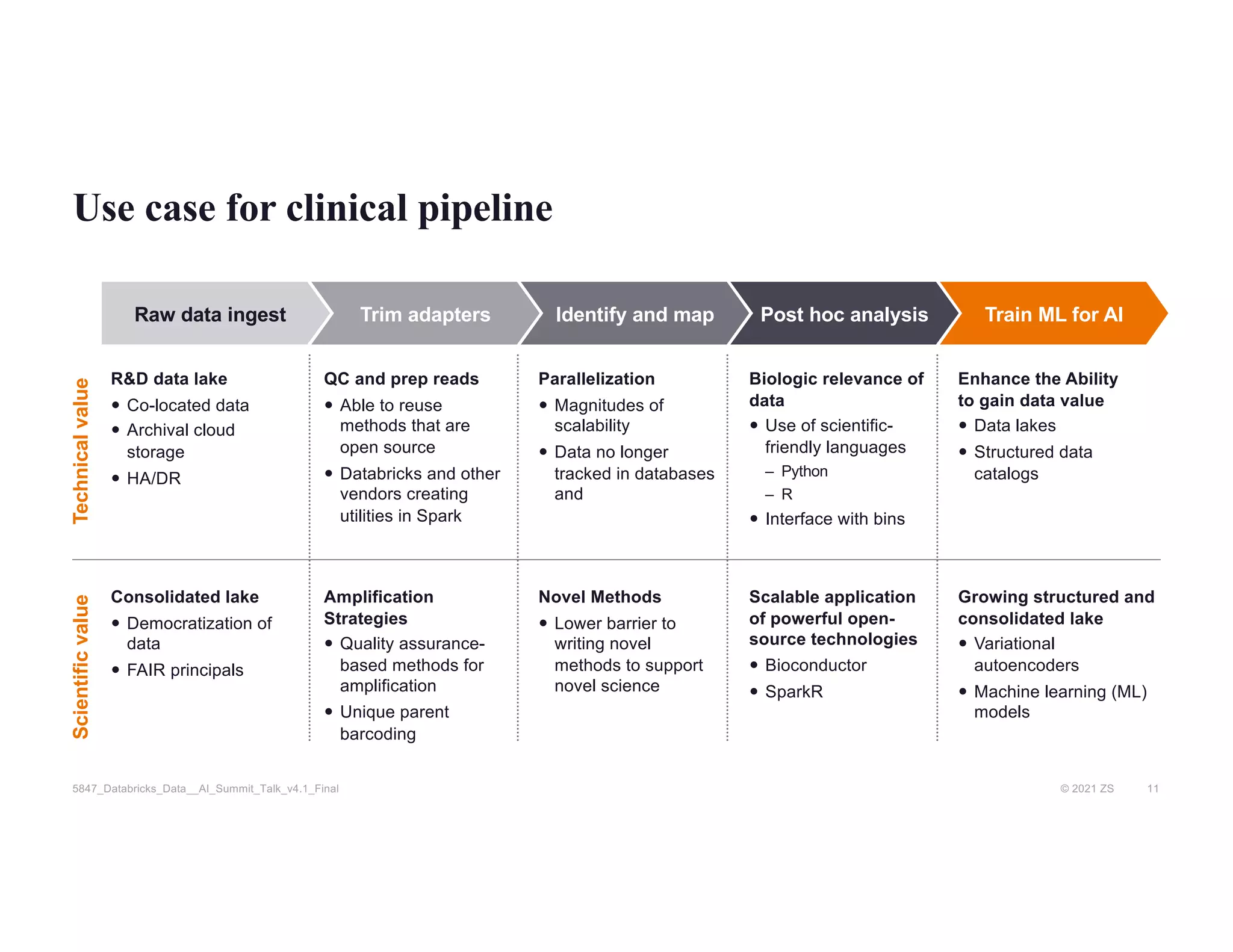

This presentation discusses the challenges of managing R&D data in biopharma and emphasizes the need for better data management practices to improve drug development and support the use of AI. It outlines the role of Databricks and the ZS R&D Excellence team in providing advanced data analytics and engineering for clinical and scientific clients. It also highlights solutions for processing and analyzing genomic data, with examples of efficient clinical pipeline implementations.