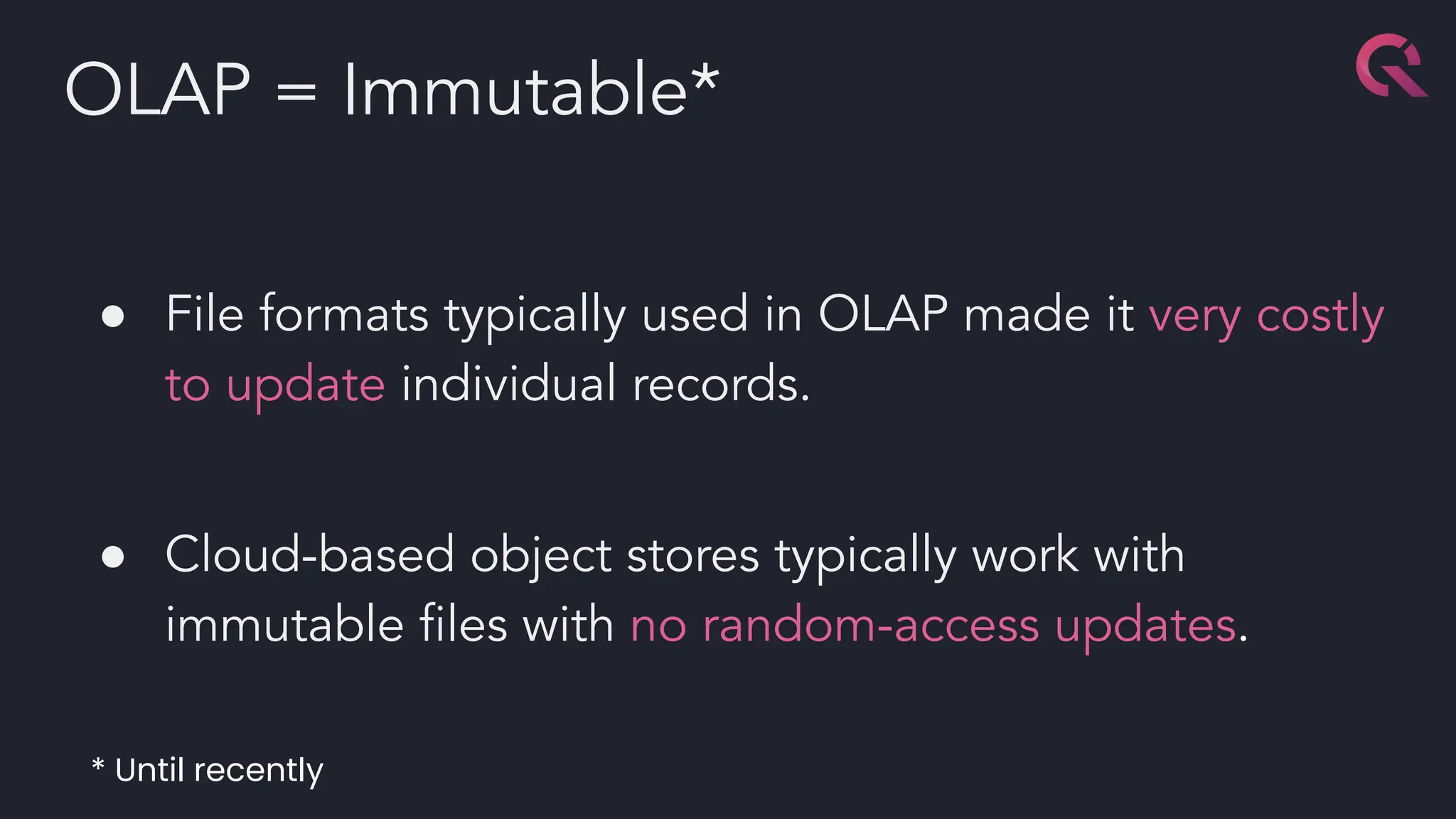

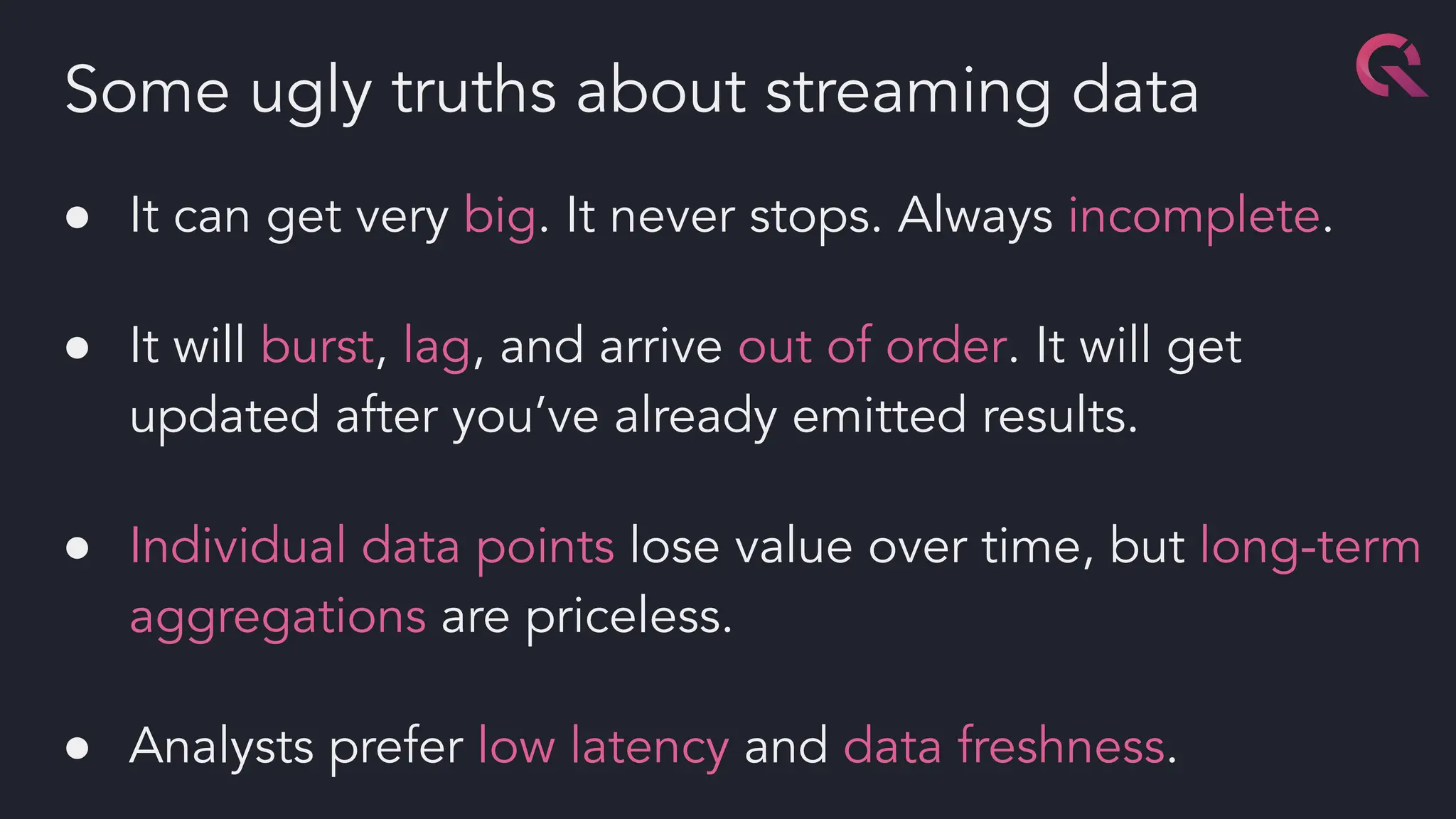

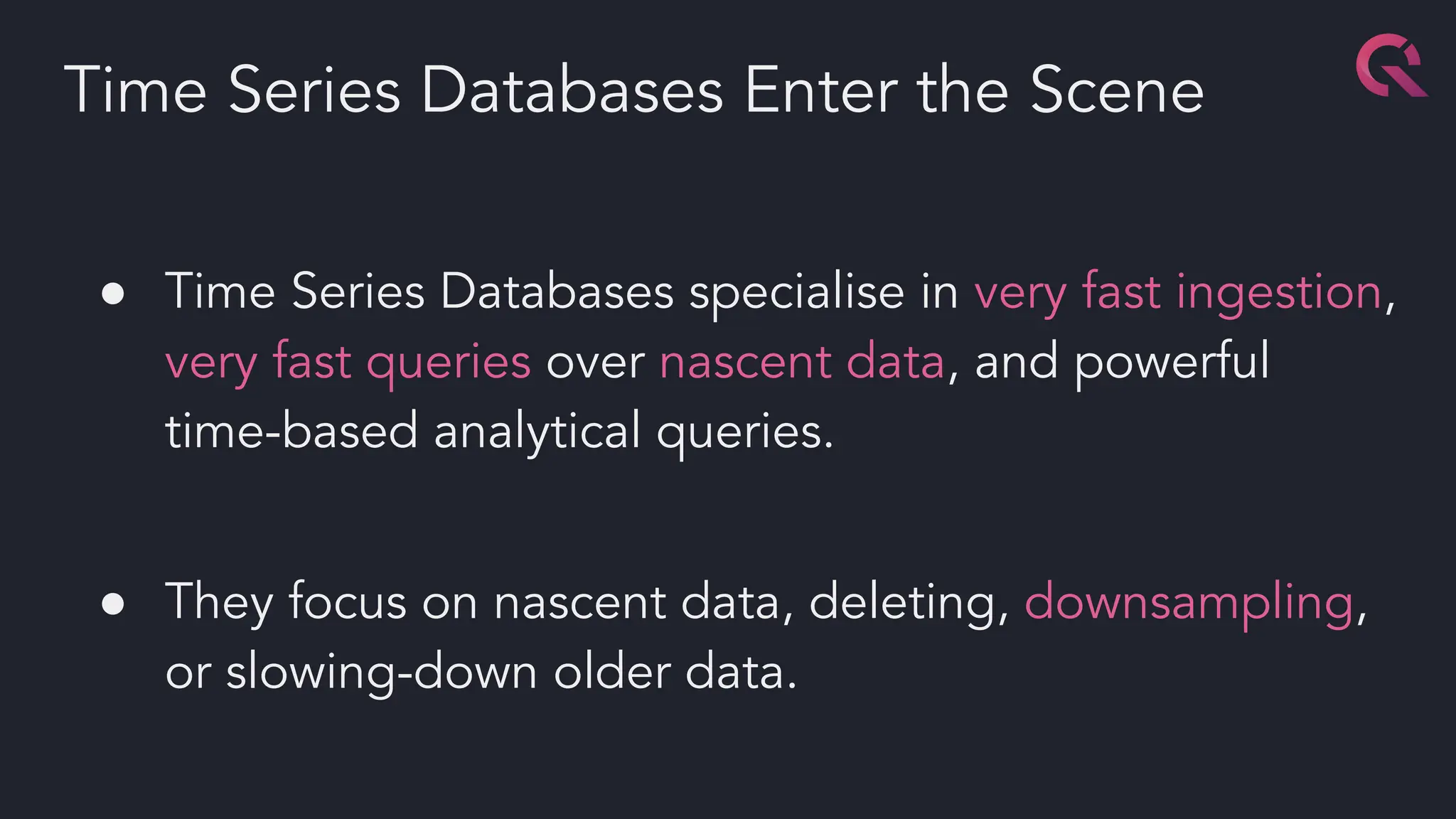

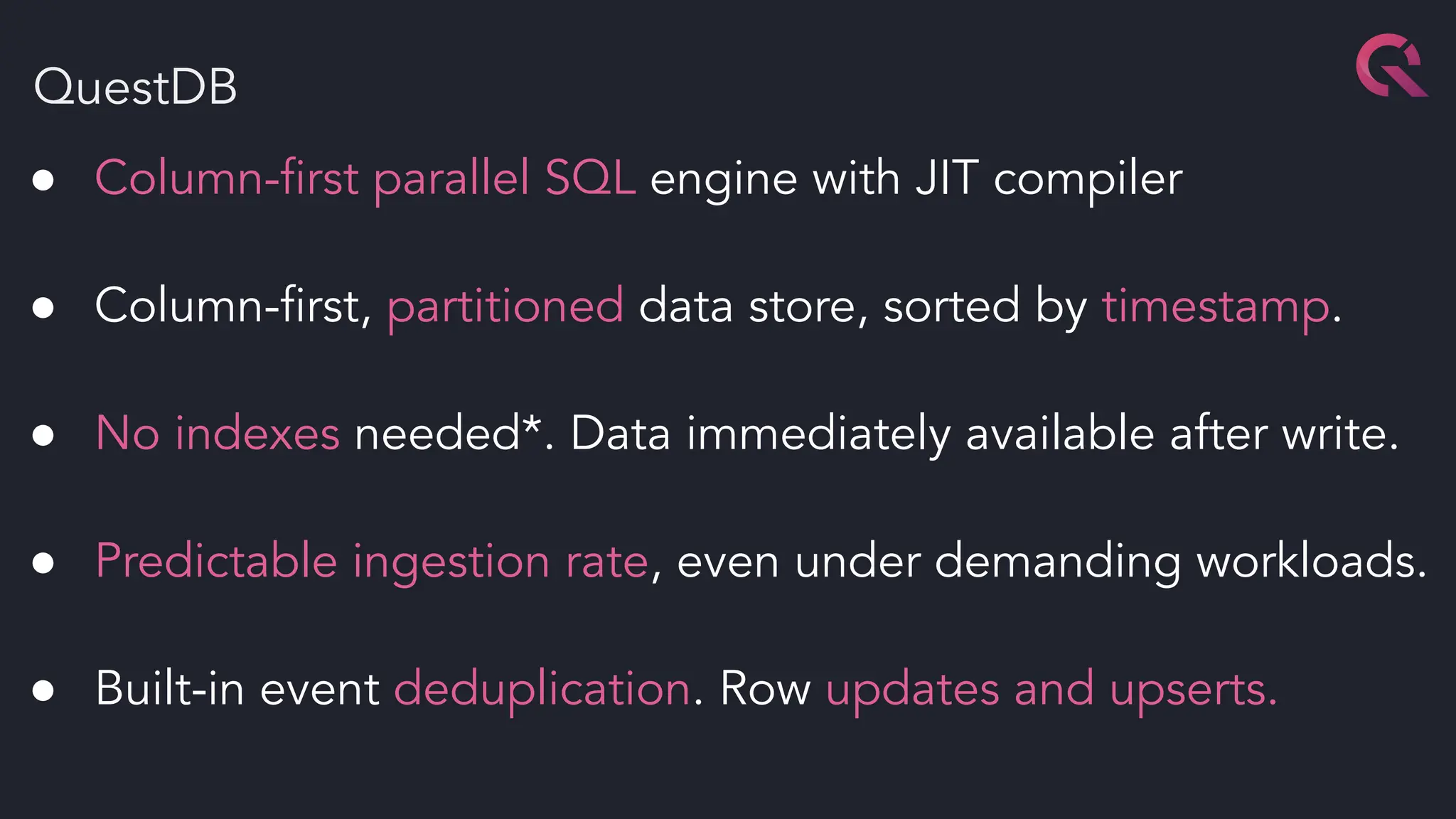

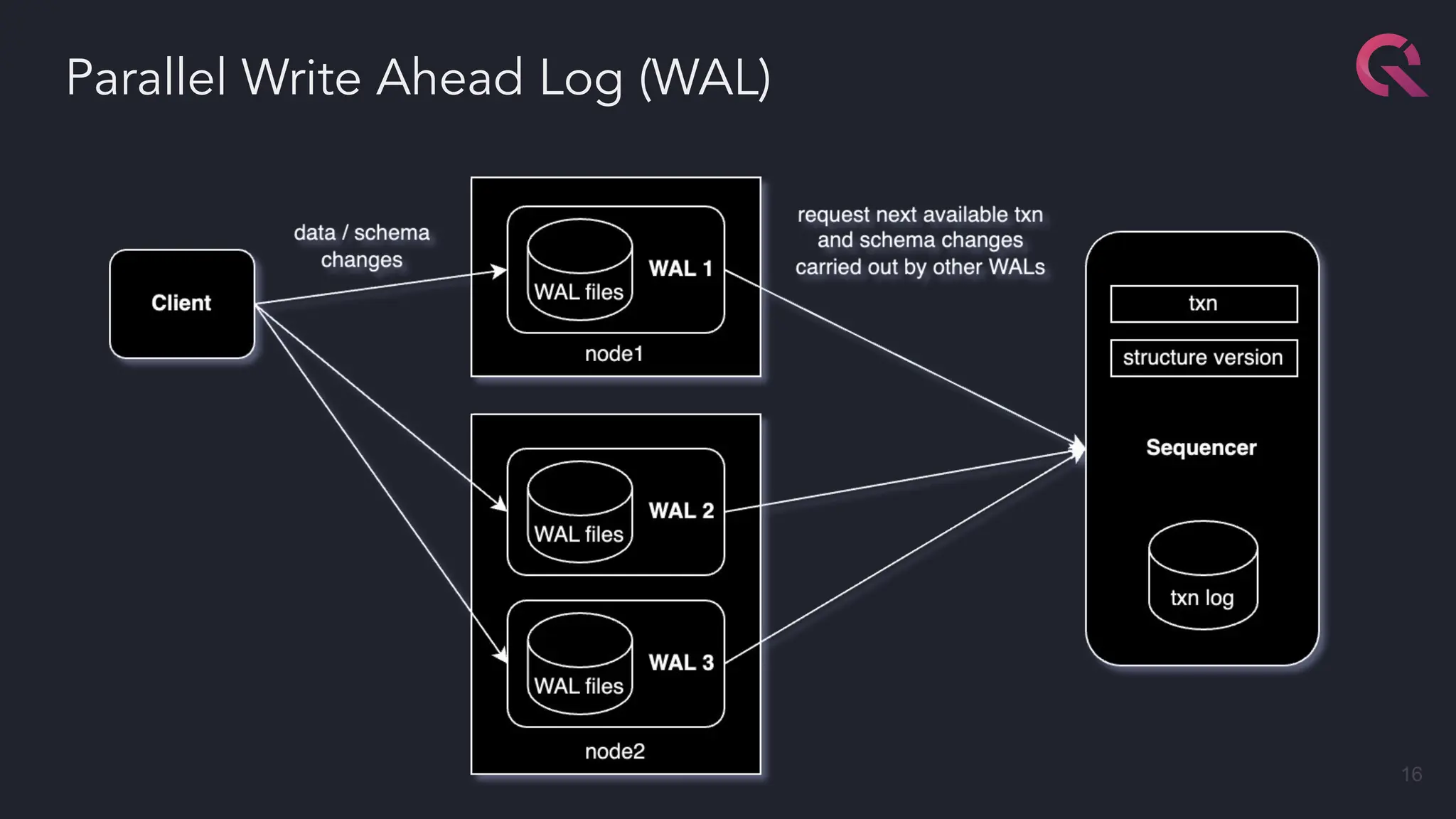

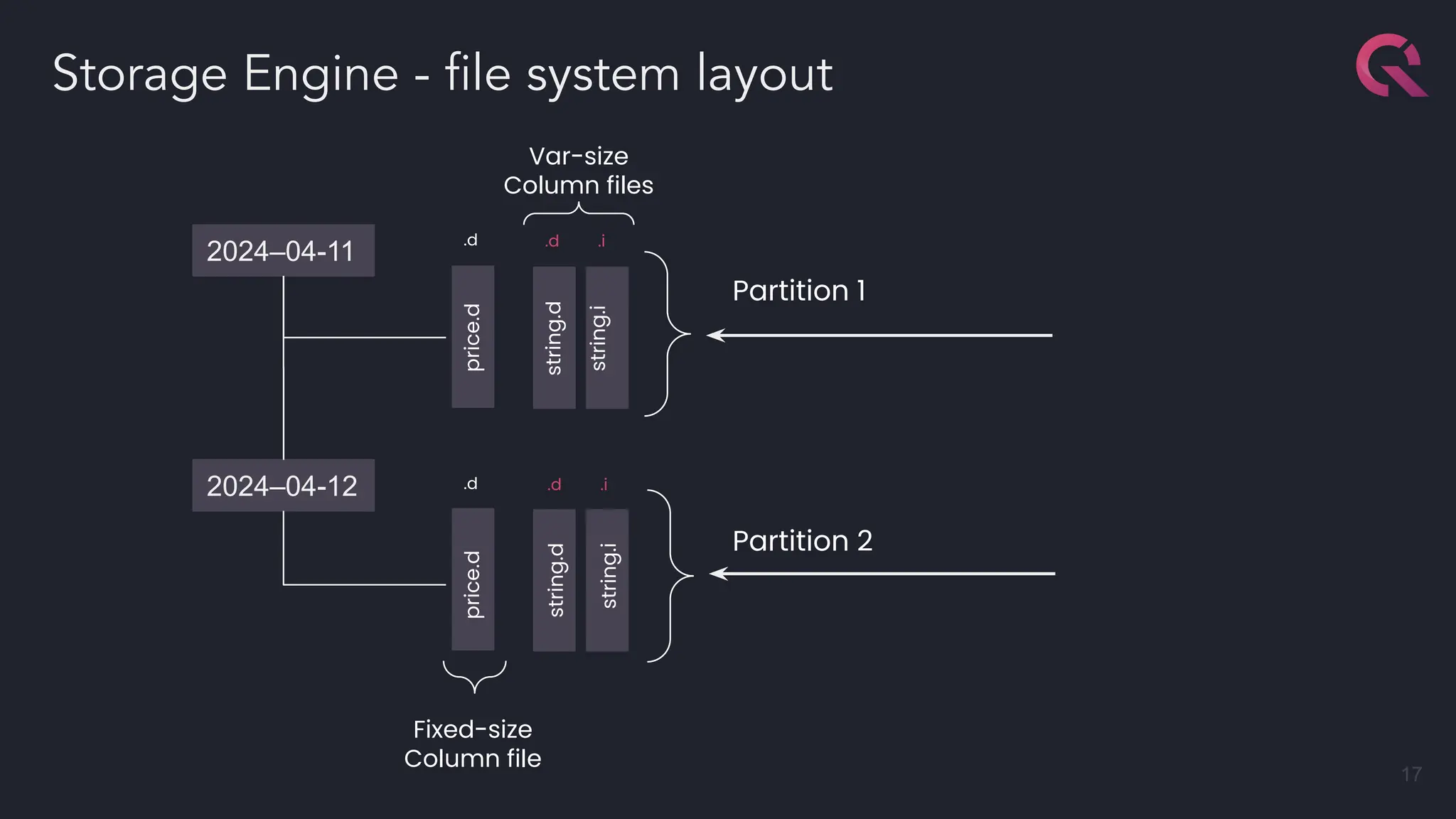

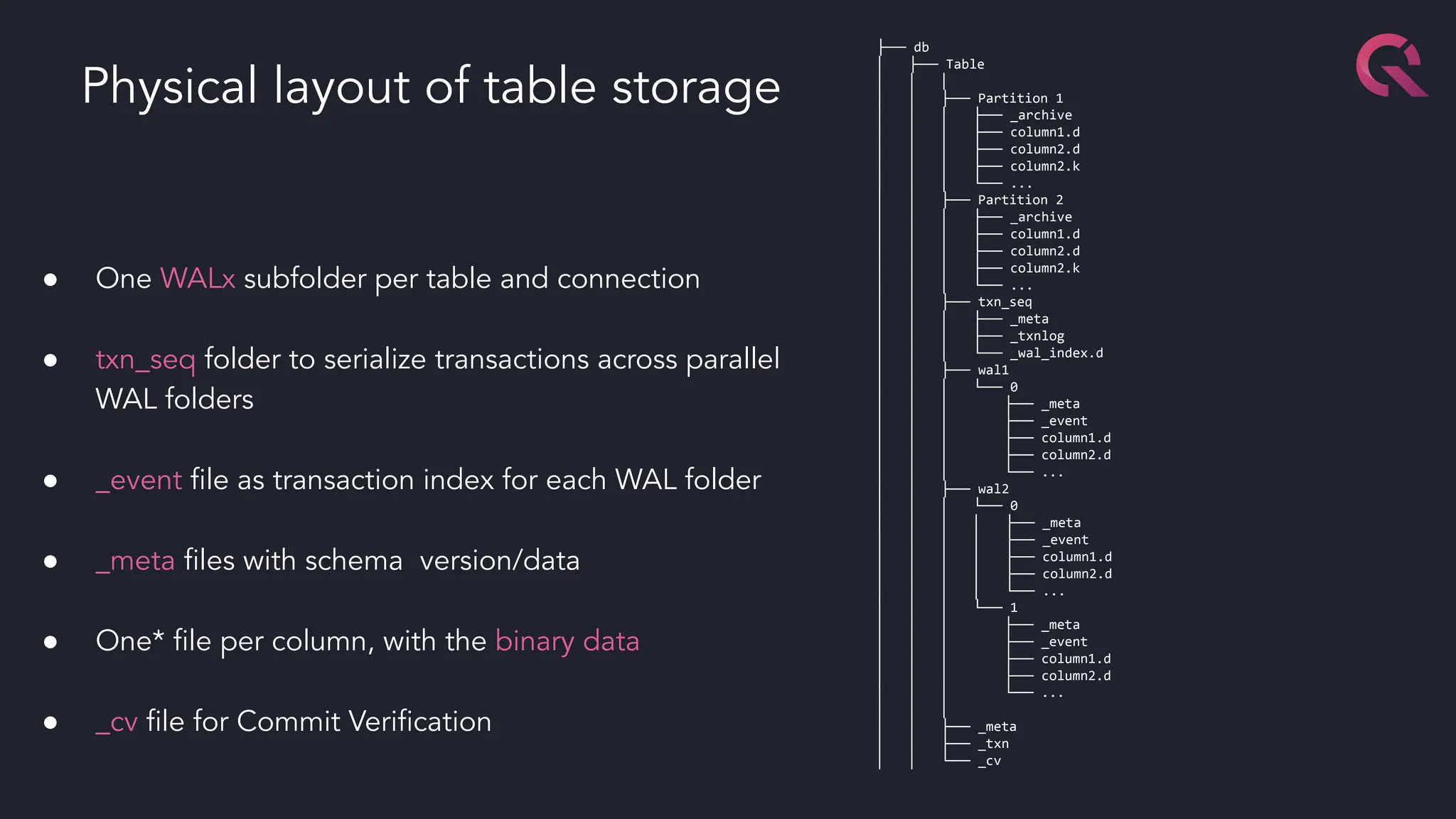

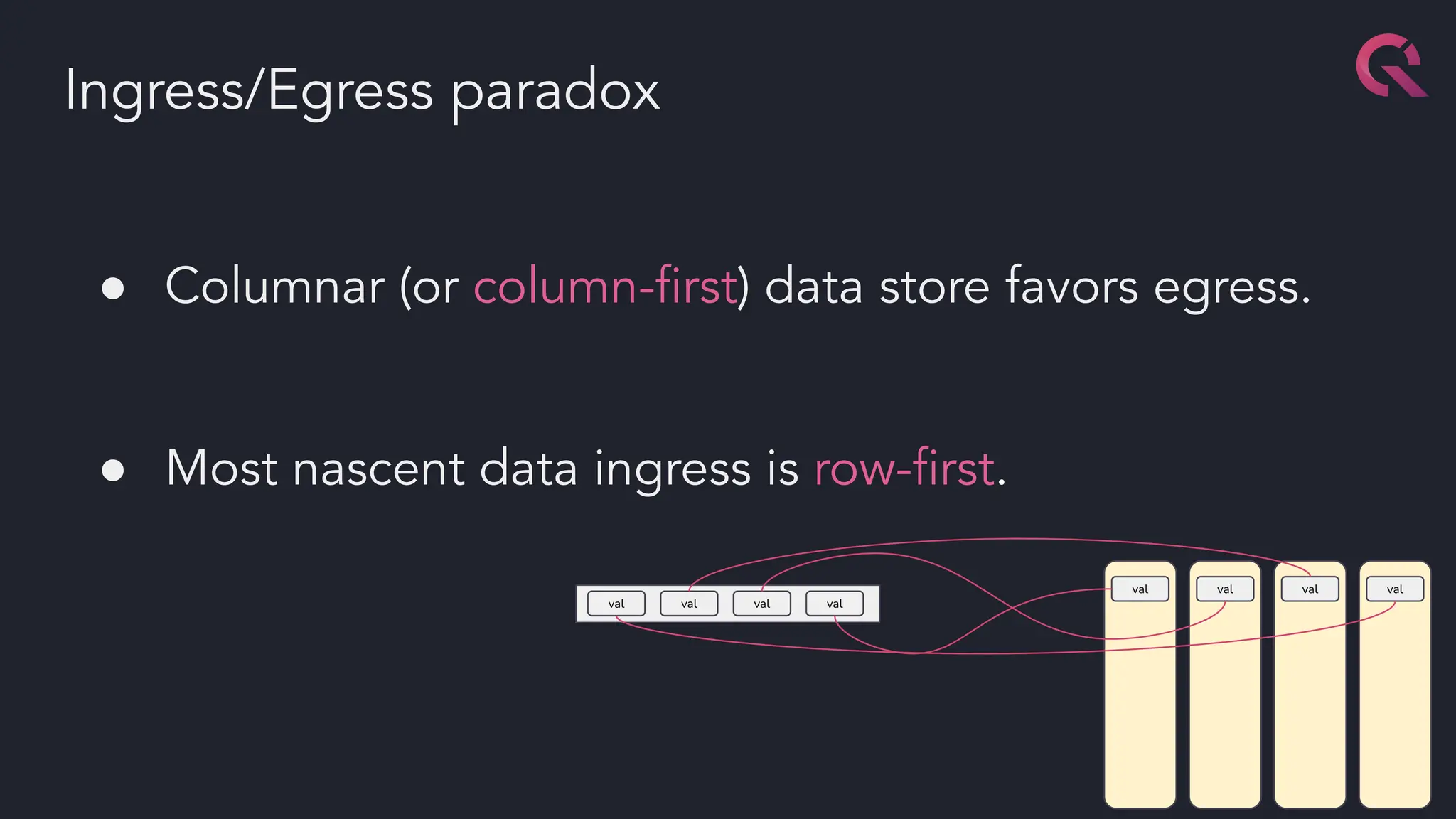

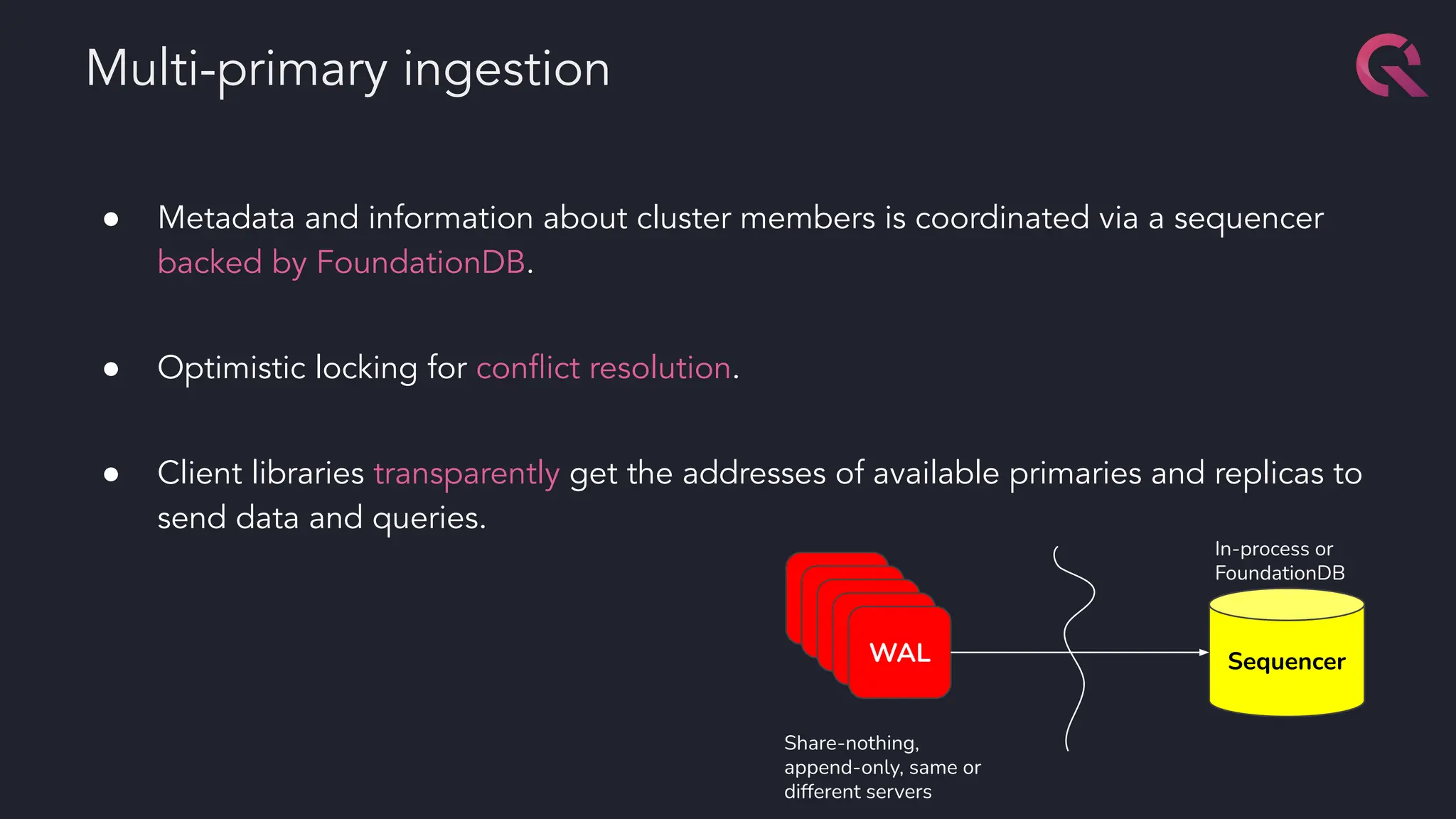

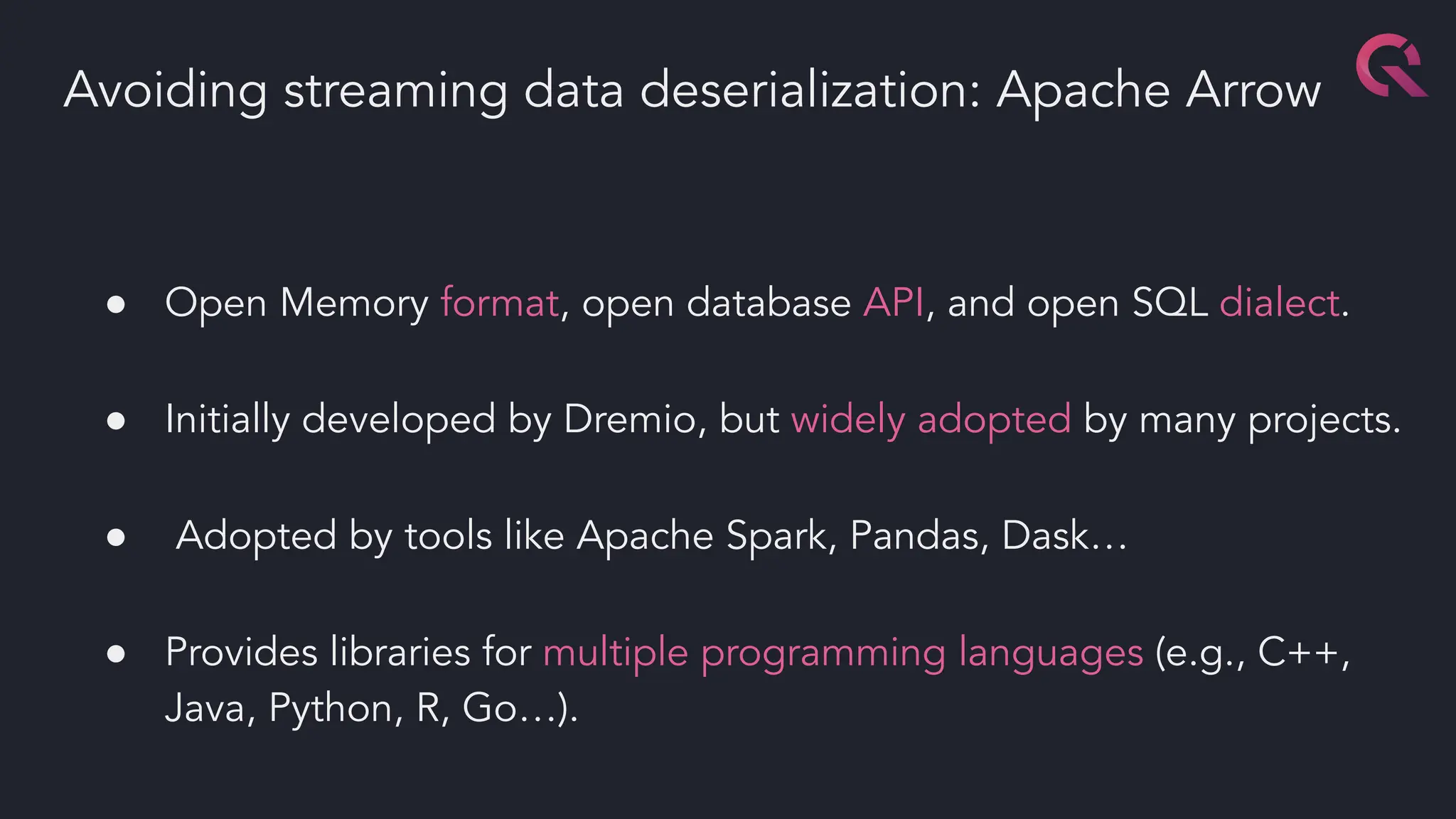

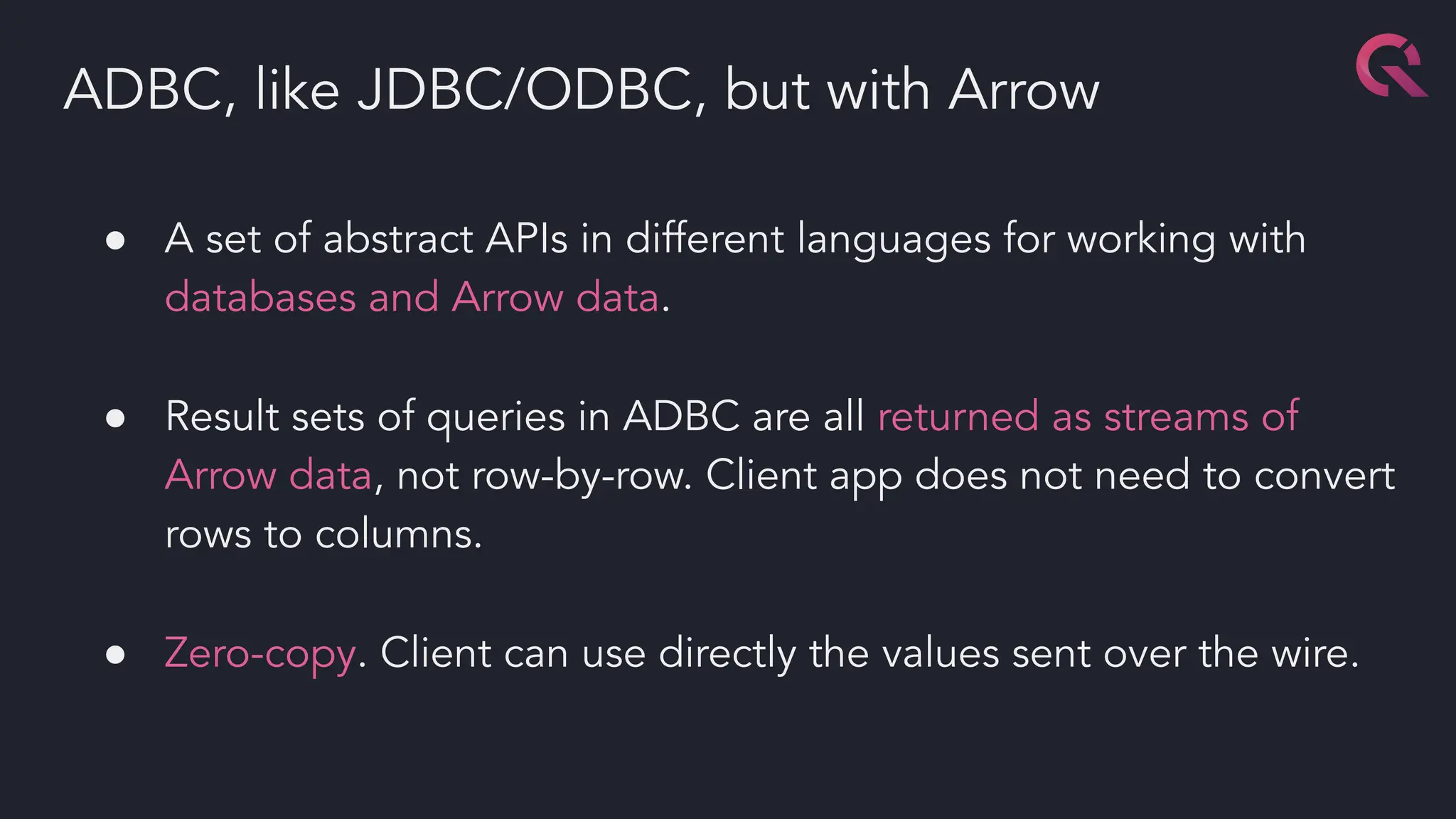

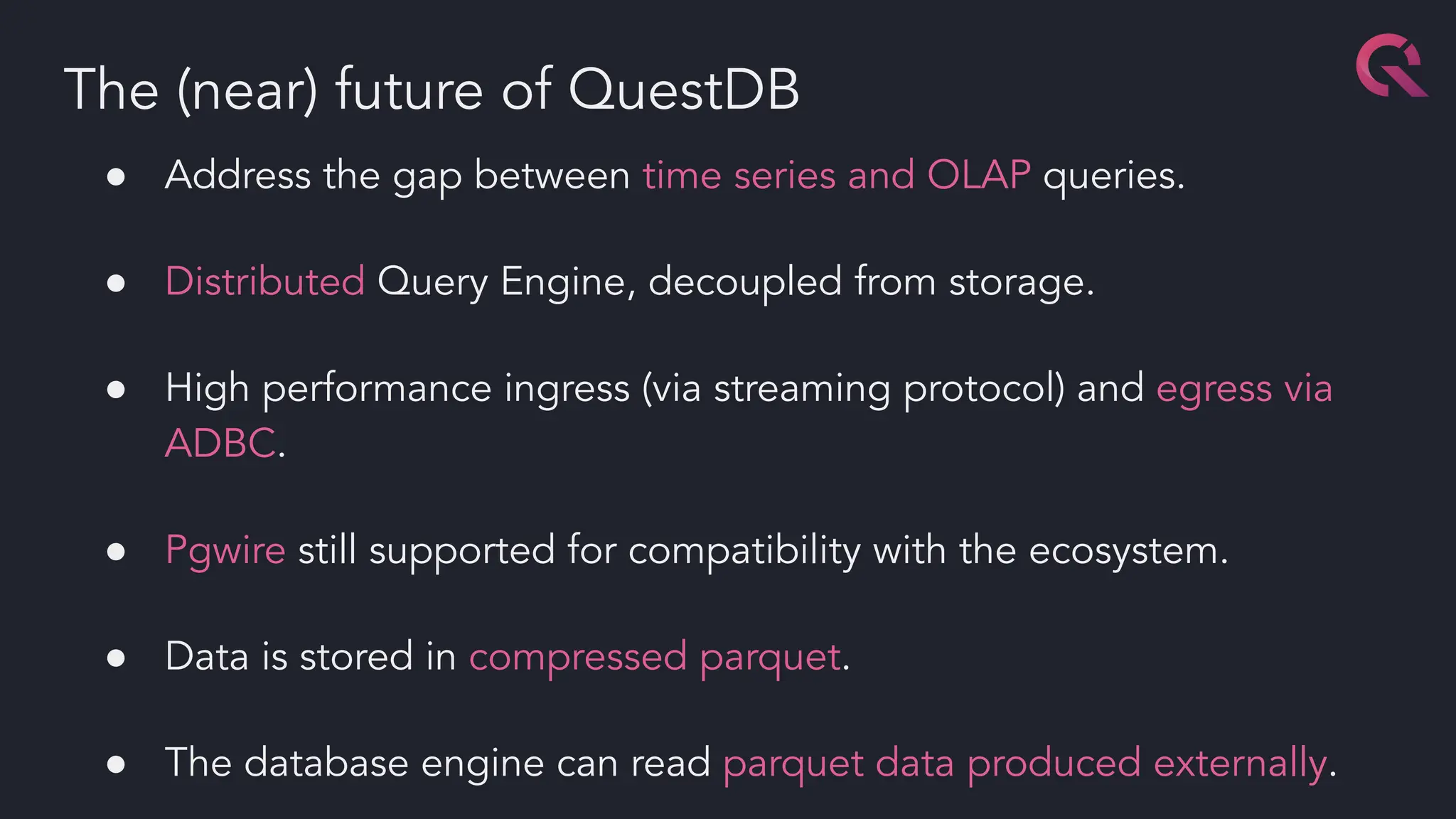

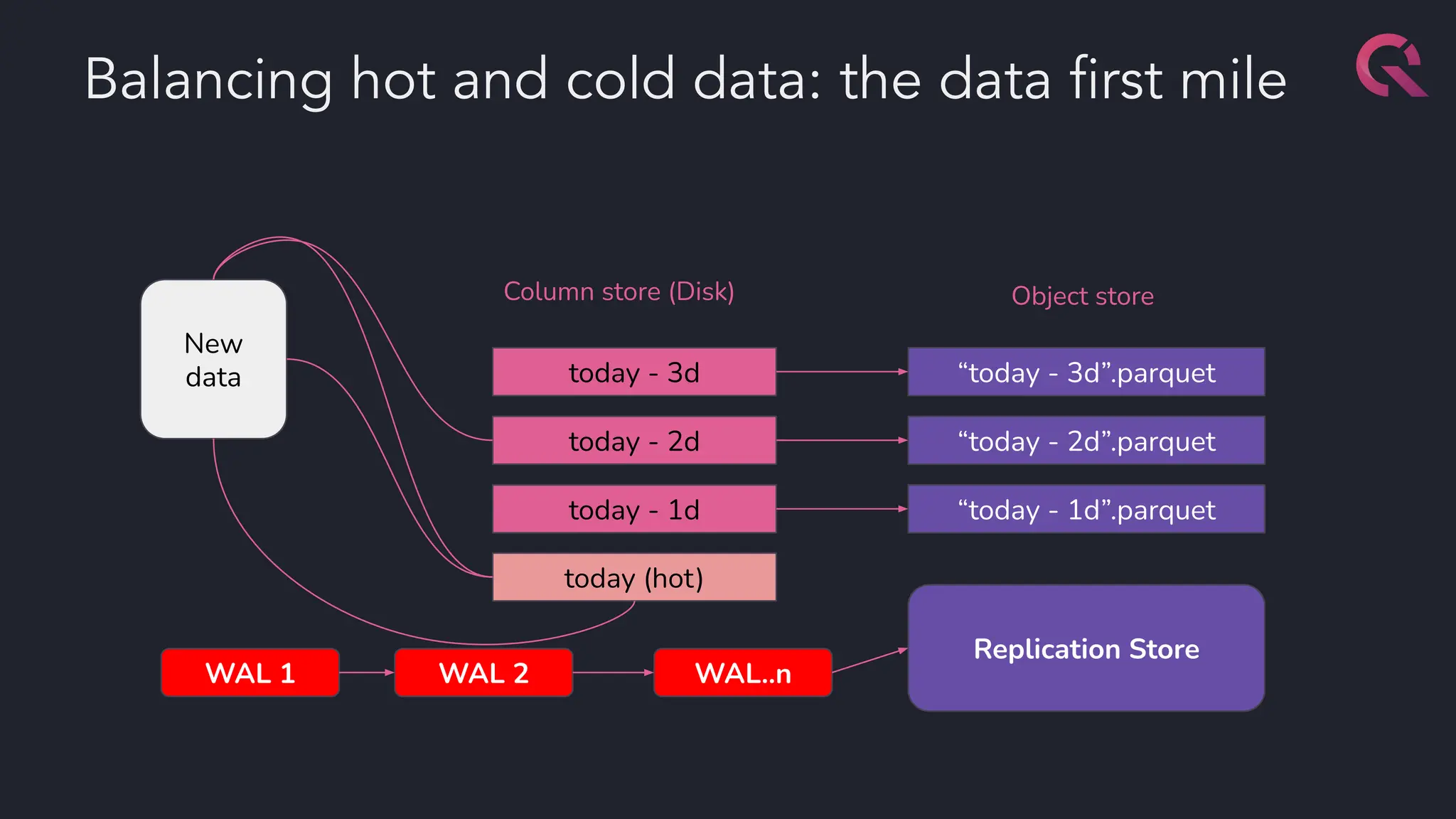

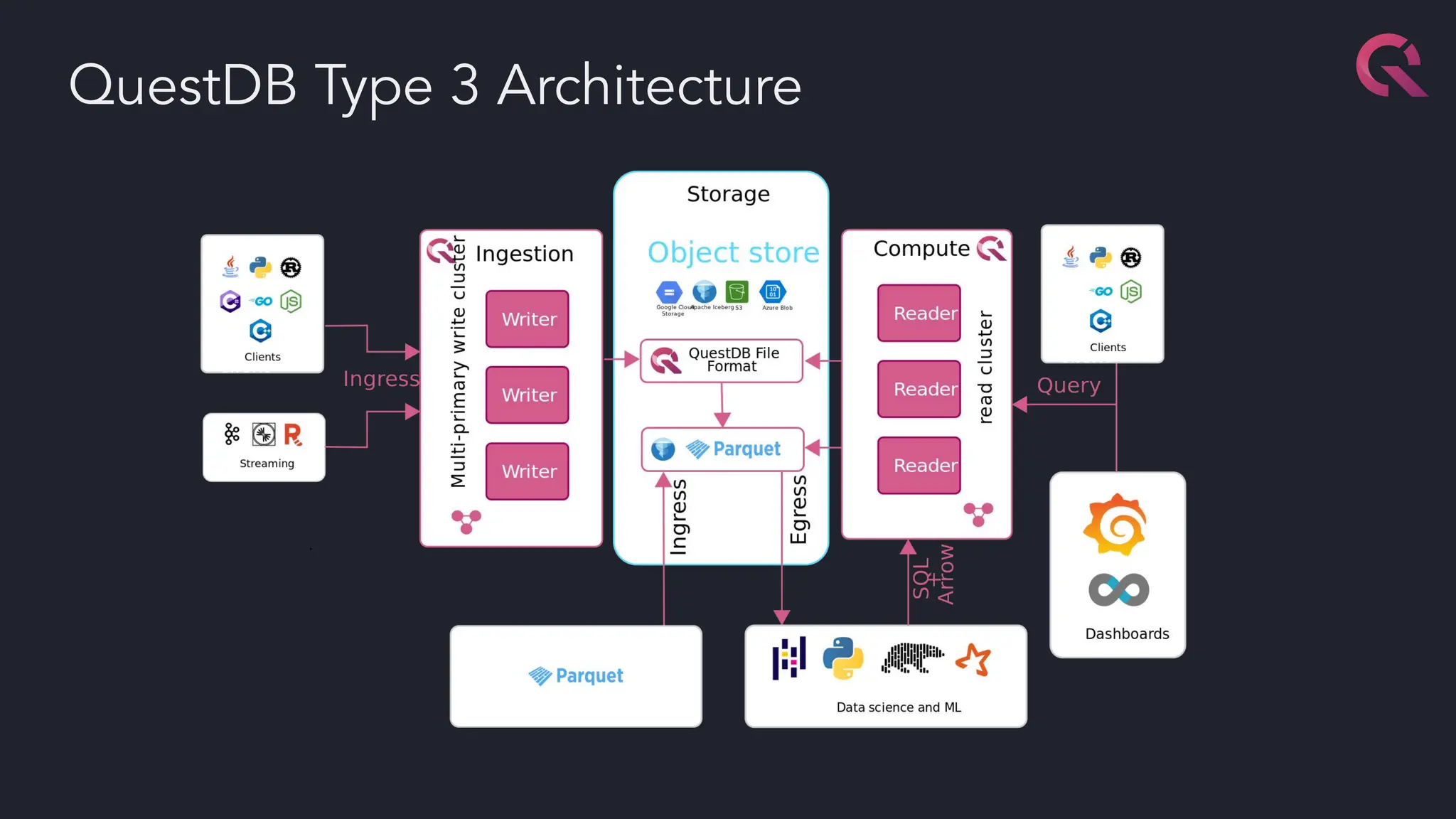

This document discusses advances in fast databases, focusing on QuestDB, a database designed for efficient ingestion and querying of large datasets. It traces the evolution of database technology from OLTP and OLAP models to the adoption of open formats such as Apache Arrow for better performance. The future vision is a convergence of time series databases and OLAP, with stronger capabilities for data handling and analysis.