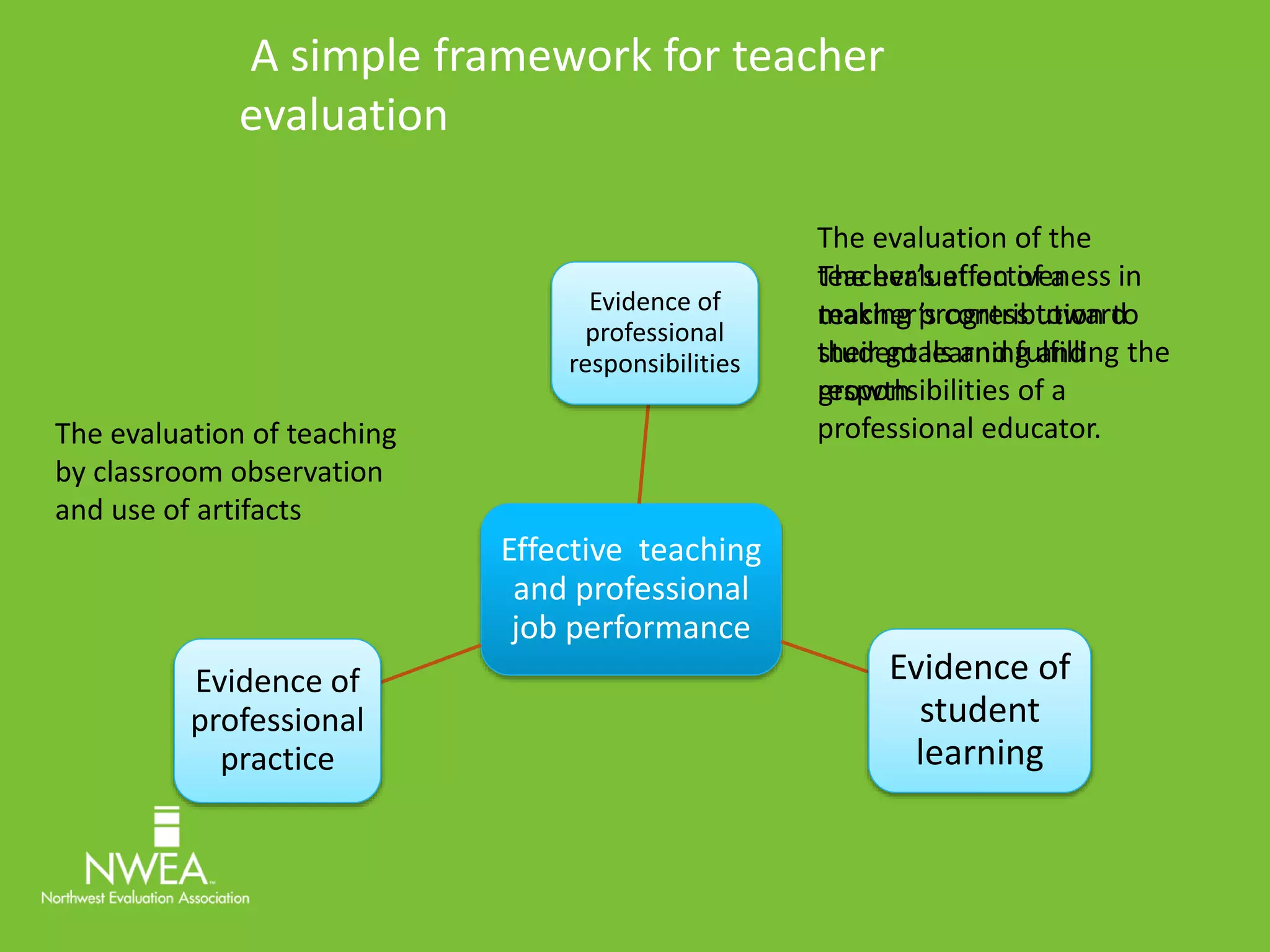

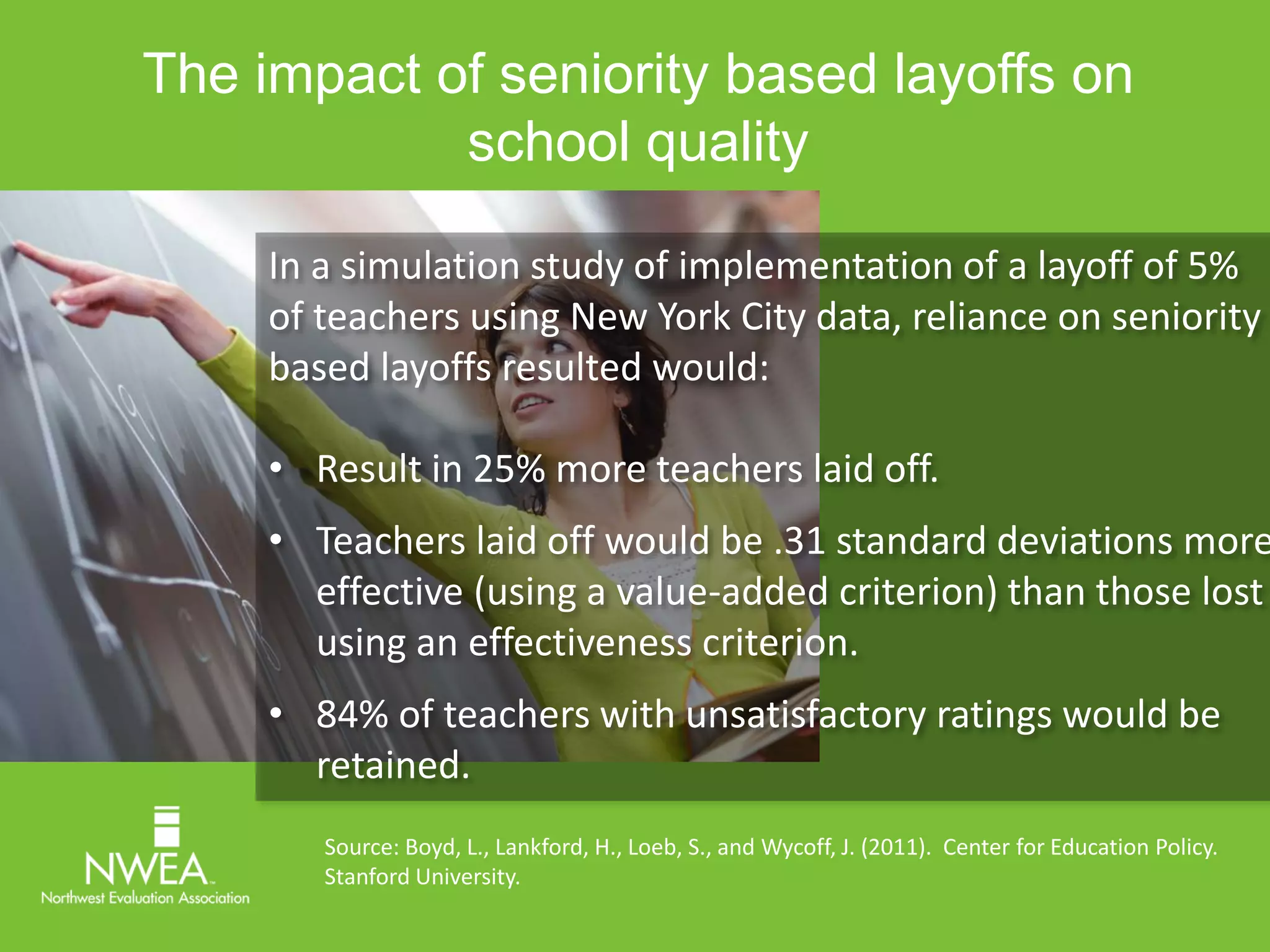

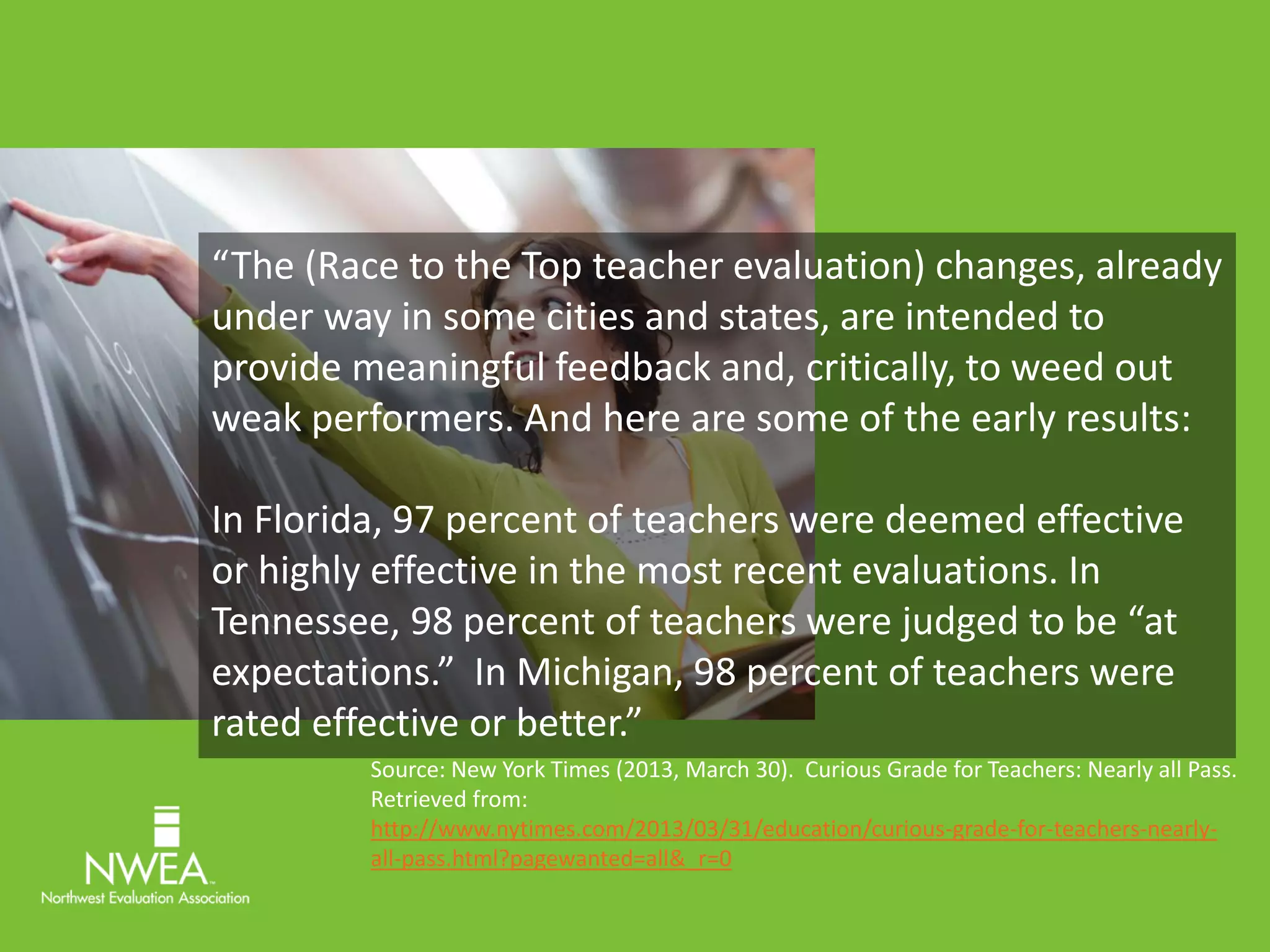

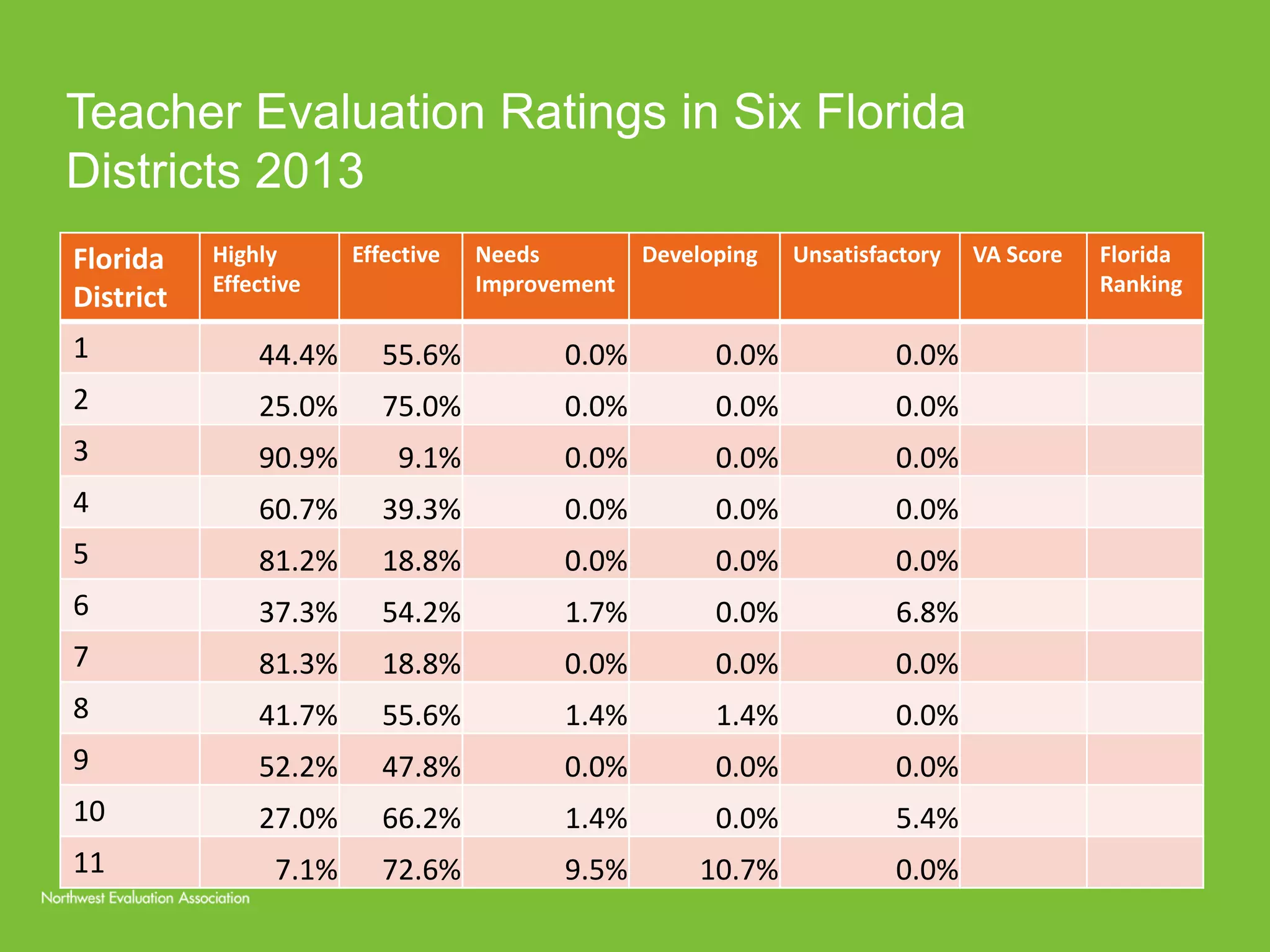

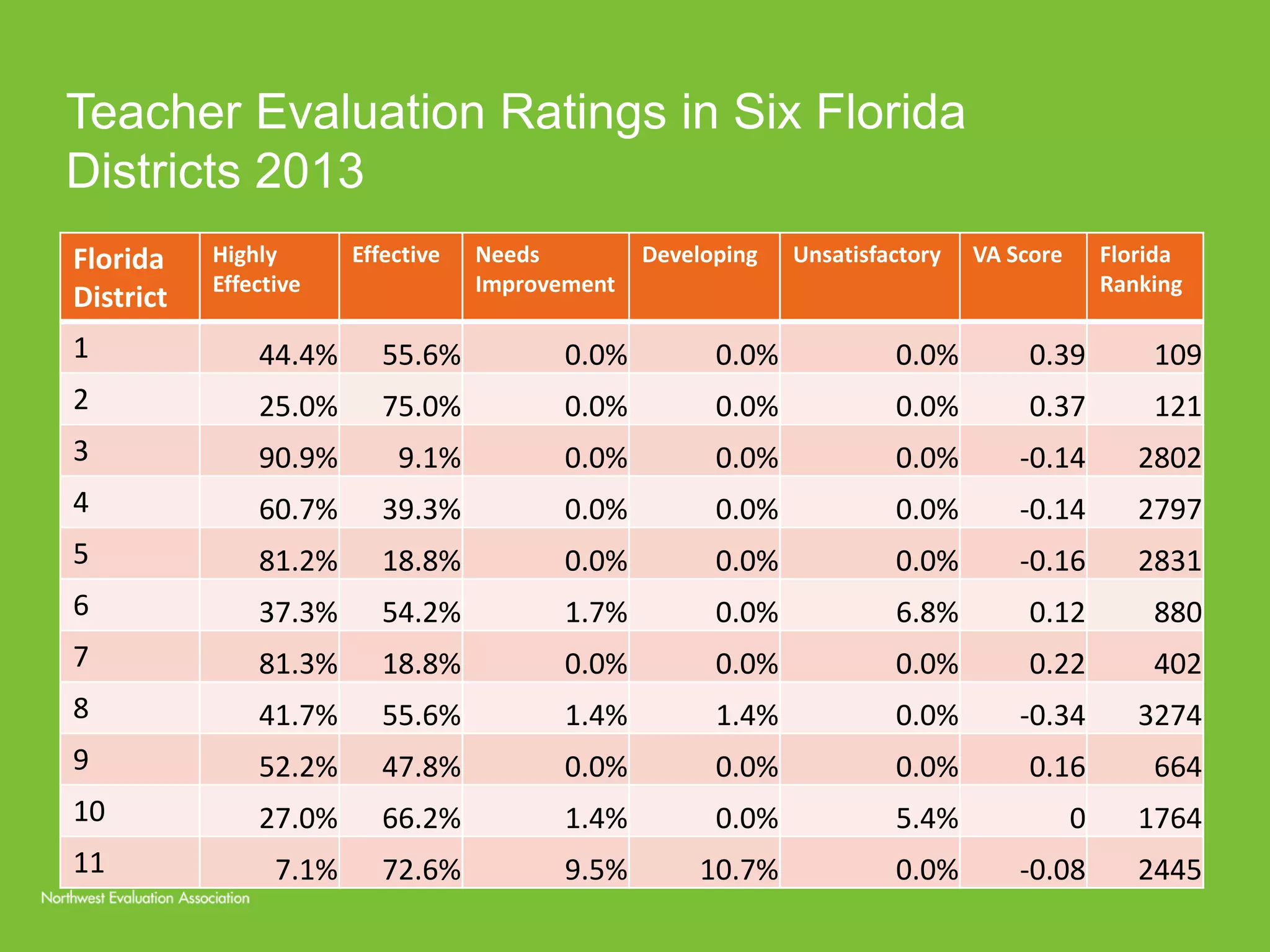

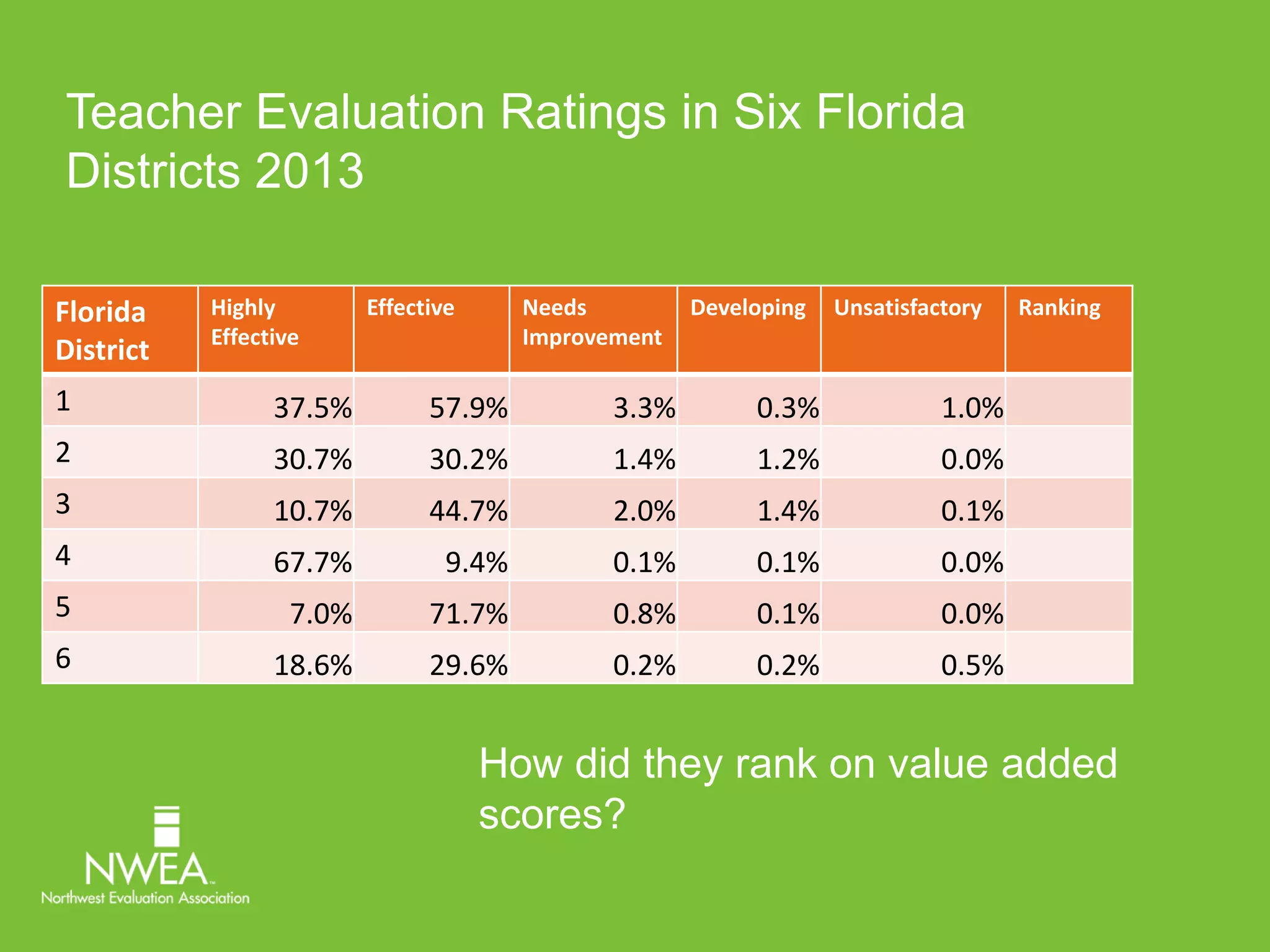

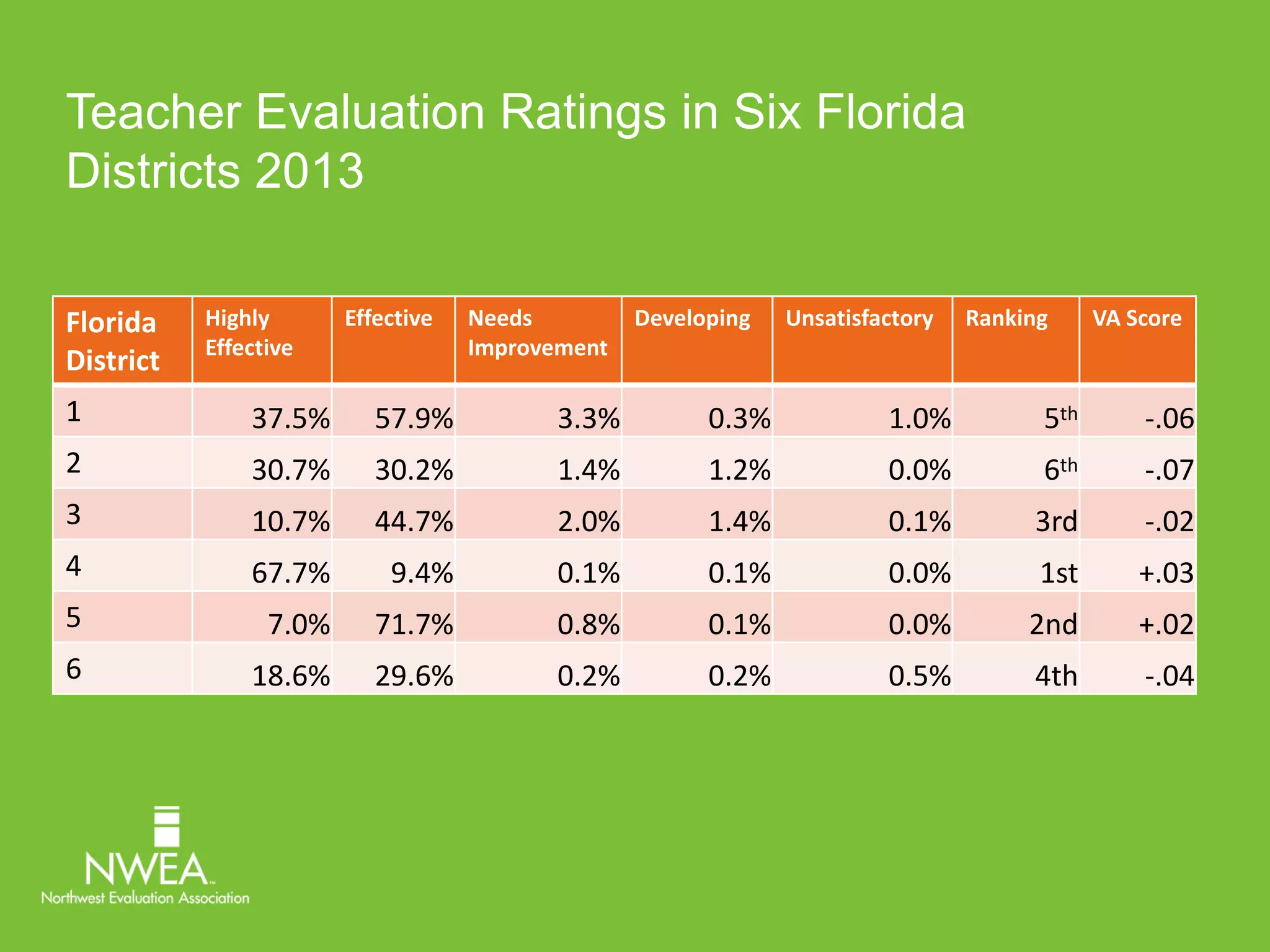

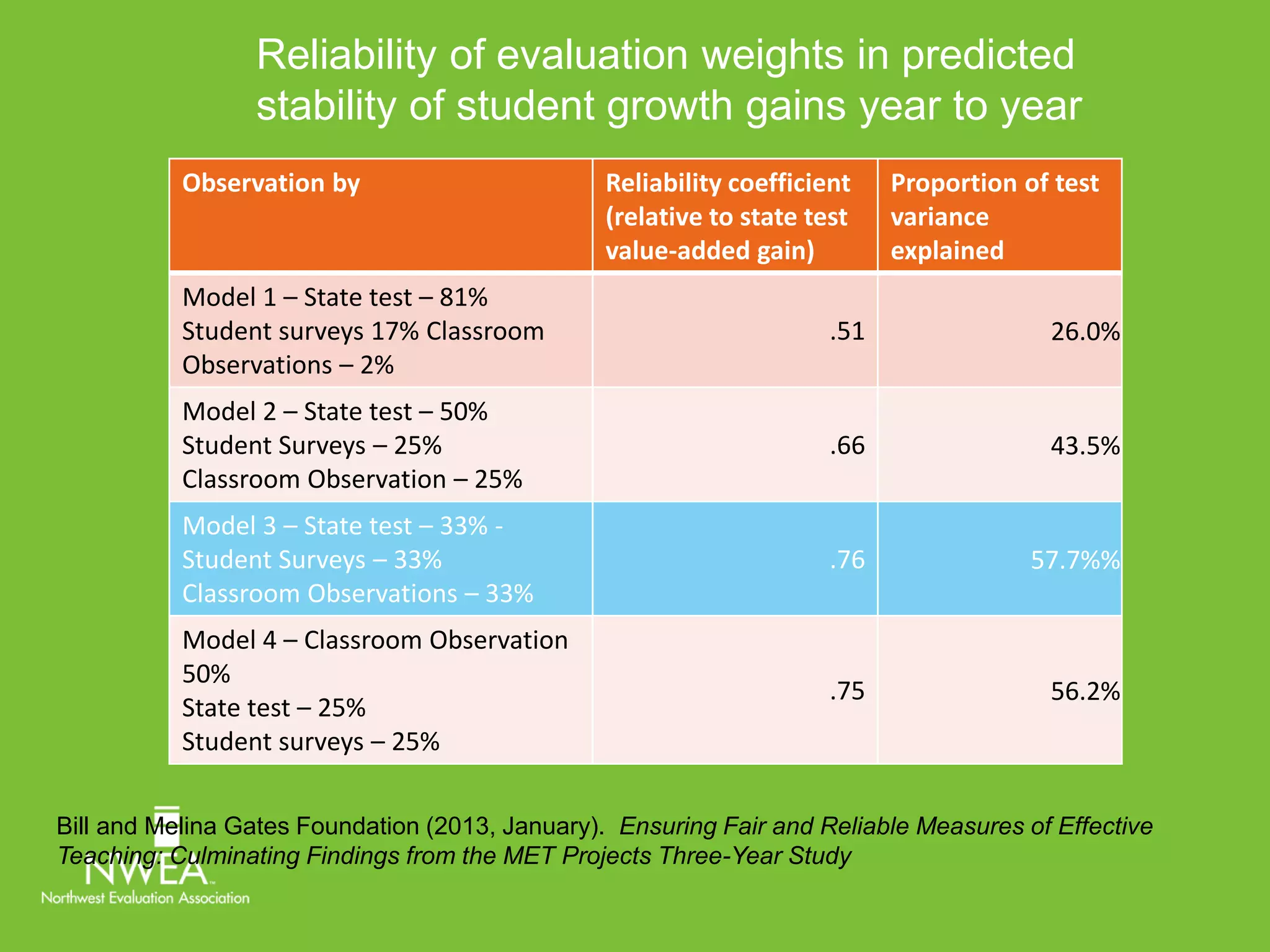

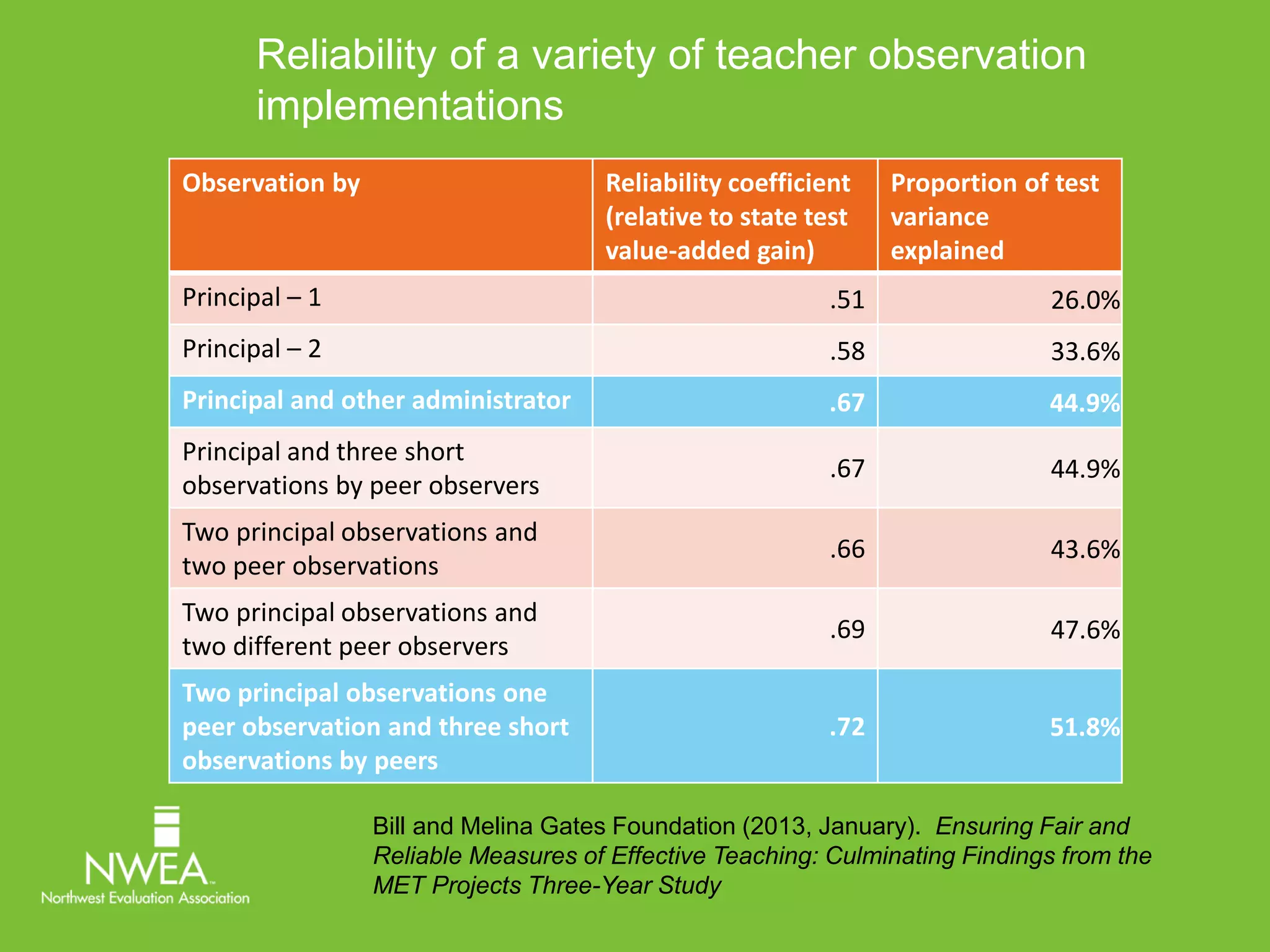

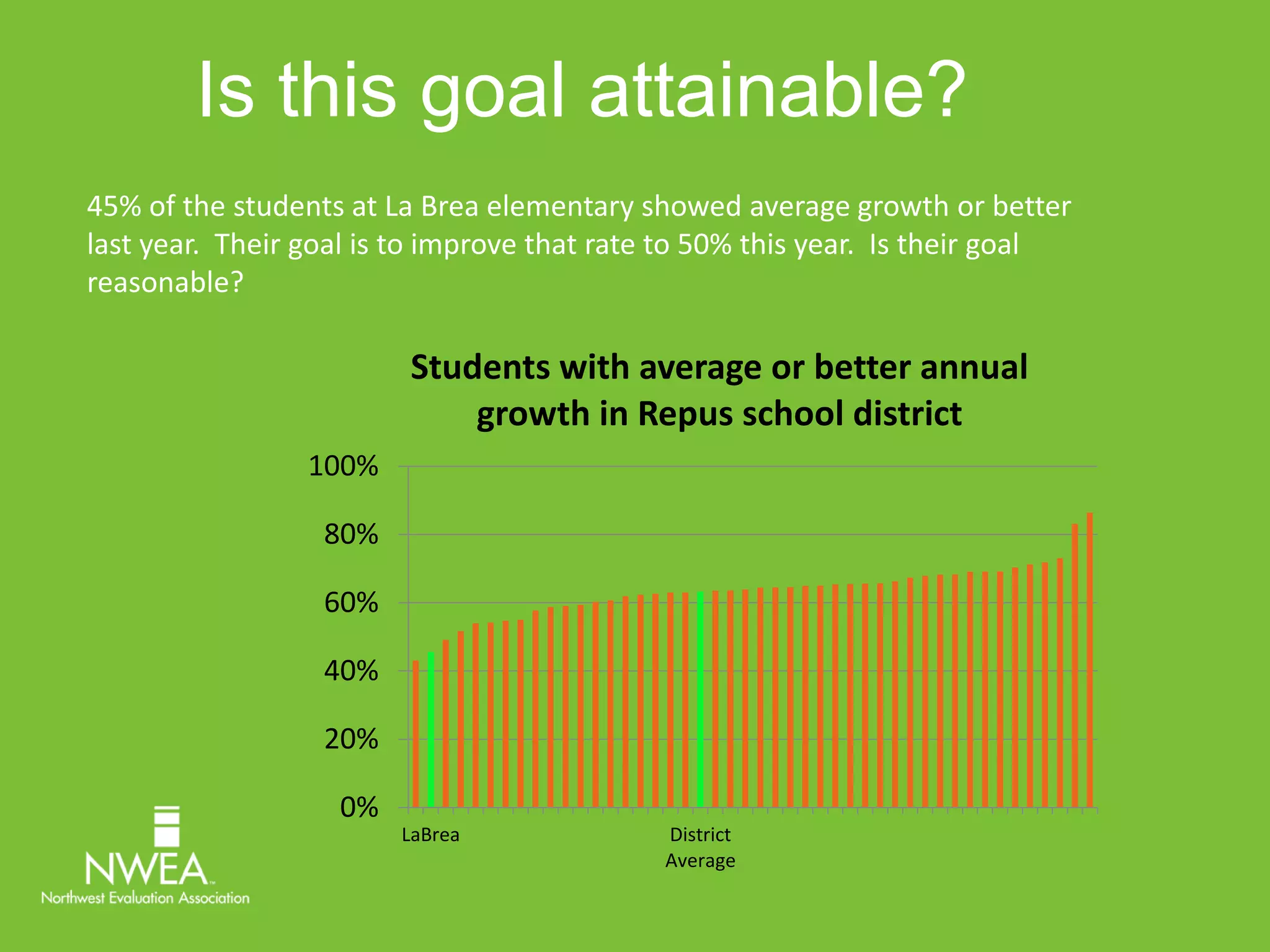

This document presents recommendations for improving teacher evaluation frameworks. It argues that evaluations should focus on helping teachers improve, be controlled by principals, and use multiple measures rather than relying solely on test scores. An effective framework draws on evidence of teaching practices, student learning, and professional responsibilities. Testing and observations are part of evaluations, but their results must be interpreted carefully. Overall, evaluations should differentiate performance meaningfully, helping schools retain top educators and dismiss ineffective ones.