Speech recognition, the process of converting spoken language into text, has advanced significantly thanks to deep learning. Where earlier systems relied on simpler algorithms to transcribe audio, today's models can approach human-level accuracy under favorable conditions.

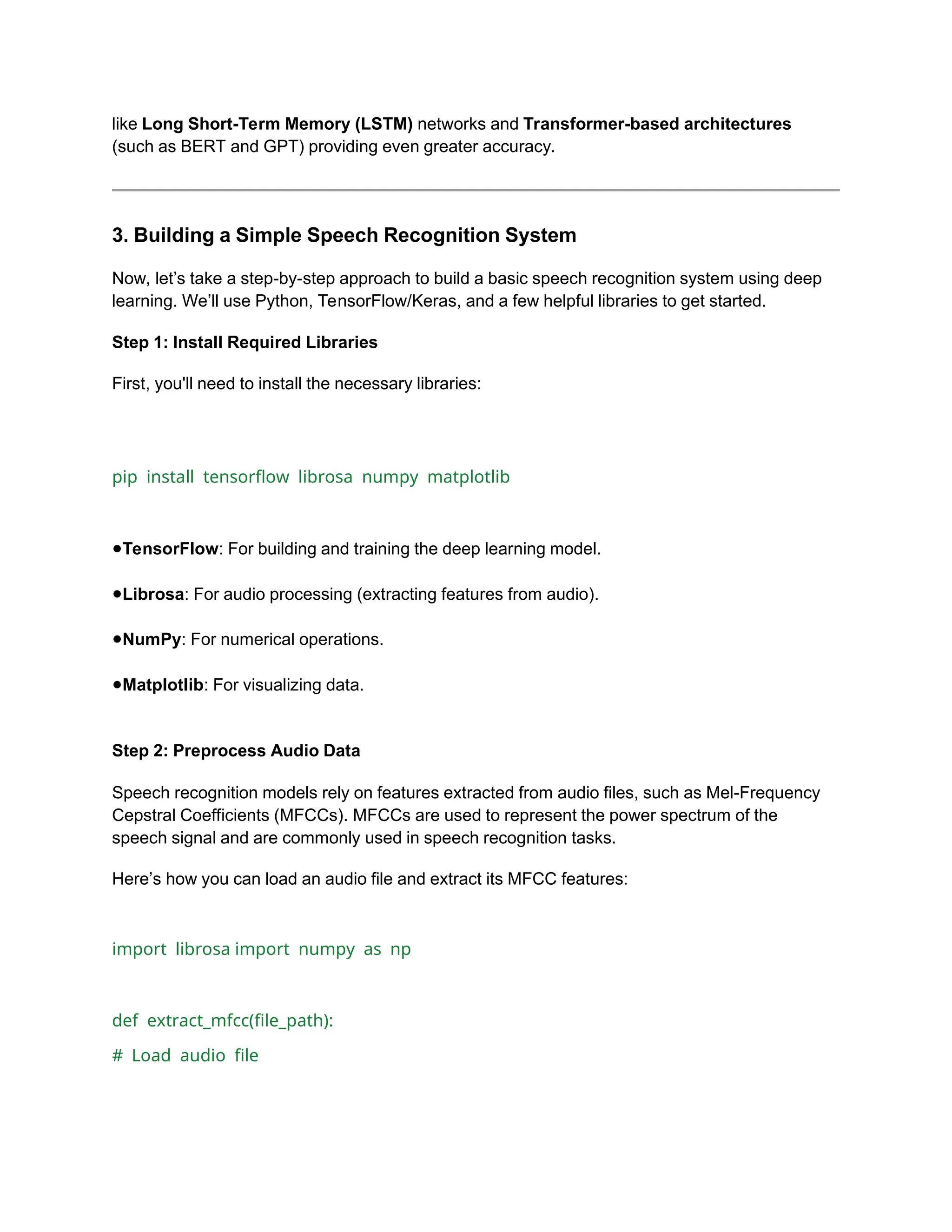

    # Continues the model built in the previous step; LSTM, Dense, and Adam
    # are assumed to be imported there (e.g. from tensorflow.keras).

    # Add another LSTM layer
    # (the LSTM layer before it must use return_sequences=True)
    model.add(LSTM(64))

    # Output layer for classification (adjust based on the number of classes)
    model.add(Dense(10, activation='softmax'))  # For example, 10 classes (words)

    # Compile the model
    model.compile(optimizer=Adam(), loss='categorical_crossentropy', metrics=['accuracy'])

    # Print the model summary
    model.summary()

Step 4: Train the Model

Now, you need labeled data (e.g., audio files of words or sentences with their corresponding text labels). The audio features (MFCCs) are the input, and the labels are the output. Because the model is compiled with categorical_crossentropy, the labels must be one-hot encoded to match the softmax output layer.

    # Assume we have X_train (MFCCs) and y_train (one-hot labels) ready

    # Train the model
    model.fit(X_train, y_train, epochs=10, batch_size=32)

Step 5: Evaluate the Model

Once the model is trained, you can evaluate its performance on a separate test set to see how accurately it classifies new audio inputs.

    # Evaluate the model on test data
    # With metrics=['accuracy'], evaluate() returns [loss, accuracy]
    loss, accuracy = model.evaluate(X_test, y_test)
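
The training and evaluation steps assume that the MFCC inputs and the labels are already in the shape the network expects. The following is a minimal sketch of one way to get there, using only NumPy. The helper names (`pad_mfccs`, `one_hot`, `decode_predictions`), the `WORDS` vocabulary, the 13 coefficients per frame, and the 60-frame limit are all illustrative assumptions, not part of the original walkthrough. It also assumes each clip's MFCCs are laid out as (frames, coefficients); features produced by a library such as librosa may need transposing first.

```python
import numpy as np

# Hypothetical vocabulary: stands in for the 10 word classes of the output layer.
WORDS = ["zero", "one", "two", "three", "four",
         "five", "six", "seven", "eight", "nine"]


def pad_mfccs(mfcc_list, max_frames):
    """Stack variable-length (frames, n_mfcc) arrays into one zero-padded batch."""
    n_mfcc = mfcc_list[0].shape[1]
    batch = np.zeros((len(mfcc_list), max_frames, n_mfcc), dtype=np.float32)
    for i, mfcc in enumerate(mfcc_list):
        frames = min(len(mfcc), max_frames)  # truncate clips longer than max_frames
        batch[i, :frames] = mfcc[:frames]
    return batch


def one_hot(label_ids, num_classes):
    """Encode integer class ids as one-hot rows, as categorical_crossentropy expects."""
    encoded = np.zeros((len(label_ids), num_classes), dtype=np.float32)
    encoded[np.arange(len(label_ids)), label_ids] = 1.0
    return encoded


def decode_predictions(probabilities, words):
    """Map each row of softmax probabilities to the most likely word."""
    return [words[i] for i in np.argmax(probabilities, axis=1)]


# Random arrays stand in for real MFCCs: three clips of different lengths,
# each with 13 coefficients per frame (an assumed, commonly used setting).
rng = np.random.default_rng(0)
clips = [rng.normal(size=(n, 13)) for n in (40, 55, 70)]

X_train = pad_mfccs(clips, max_frames=60)
y_train = one_hot([3, 7, 1], num_classes=len(WORDS))

print(X_train.shape)  # (3, 60, 13)
print(y_train.shape)  # (3, 10)
```

After training, `decode_predictions(model.predict(X_test), WORDS)` would turn the softmax outputs back into word labels, provided `WORDS` is in the same order as the class ids used for the one-hot encoding.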