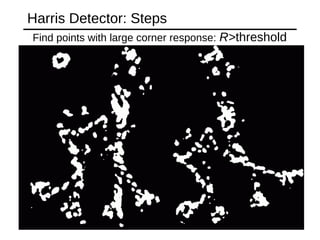

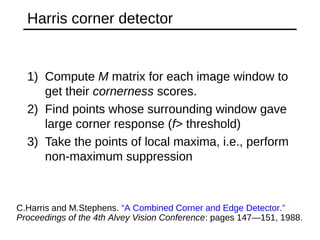

Here are the key steps in Harris corner detection:

1. Compute the autocorrelation matrix M for a window around each pixel using:

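$$M = \sum_{x,y} w(x,y) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$$

where $I_x$ and $I_y$ are the partial derivatives of the image $I$ in the x and y directions, and $w(x,y)$ is the window function.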

2. Compute the corner response function $R = \det(M) - k\,(\operatorname{trace}(M))^2$, where $k$ is an empirical constant (commonly about 0.04 to 0.06).

3. A large R value indicates a corner. Threshold R to find candidate corner points.

4. Refine the candidate locations using interpolation.
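The first three steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the names `box_filter` and `harris_response`, the value `k=0.05`, the window radius, and the 50% threshold are all assumptions chosen for the example, and the window function is a plain box (1 inside the window, 0 outside). Step 4 (refinement) is not shown.

```python
import numpy as np

def box_filter(a, radius):
    """Sum the values in a (2*radius+1)^2 window around each pixel (w = 1 in window)."""
    size = 2 * radius + 1
    h, w = a.shape
    padded = np.pad(a, radius, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def harris_response(image, k=0.05, radius=2):
    """Return R = det(M) - k * trace(M)^2 for every pixel (k and radius are assumed values)."""
    image = np.asarray(image, dtype=float)
    Iy, Ix = np.gradient(image)          # axis 0 is y (rows), axis 1 is x (columns)
    Sxx = box_filter(Ix * Ix, radius)    # windowed sum of Ix^2
    Syy = box_filter(Iy * Iy, radius)    # windowed sum of Iy^2
    Sxy = box_filter(Ix * Iy, radius)    # windowed sum of Ix*Iy
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2

# Synthetic test image: a bright square on a dark background.
img = np.zeros((40, 40))
img[10:30, 10:30] = 1.0

R = harris_response(img)
candidates = np.argwhere(R > 0.5 * R.max())   # step 3: threshold R (threshold is an assumption)
```

On this image the response is positive near the four corners of the square, negative along its straight edges, and zero in flat regions, which is the behaviour the thresholding step relies on.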

So in summary, Harris detection looks for pixels with

![11

of

65

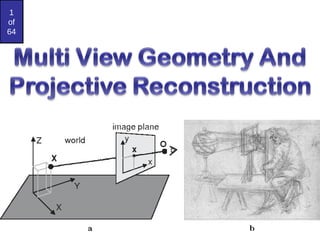

Extrinsic Parameters

• translation parameters

t = [tx ty tz]

• rotation matrix

r11 r12 r13 0

r21 r22 r23 0

r31 r32 r33 0

0 0 0 1

R = Are there really

nine parameters?

extrinsic parameters are where the camera sits in the world](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-11-320.jpg)
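One way to explore the question of how many parameters a rotation really has is to build the matrix from a three-component axis-angle vector using Rodrigues' formula. The sketch below is only illustrative: the function names, the axis-angle parameterisation, and the choice to place the translation in the last column of a single 4x4 matrix are assumptions made here, not something taken from the slide.

```python
import numpy as np

def rotation_from_axis_angle(rvec):
    """Rodrigues' formula: 3 numbers (axis * angle in radians) -> 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)                      # no rotation
    kx, ky, kz = rvec / theta                 # unit rotation axis
    K = np.array([[0.0, -kz,  ky],
                  [ kz, 0.0, -kx],
                  [-ky,  kx, 0.0]])           # cross-product matrix of the axis
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def extrinsic_matrix(rvec, t):
    """Hypothetical helper: pack the rotation and translation into one 4x4 matrix."""
    T = np.eye(4)
    T[:3, :3] = rotation_from_axis_angle(rvec)
    T[:3, 3] = np.asarray(t, dtype=float)
    return T

# Rotate 90 degrees about the z axis and translate by (1, 2, 3).
T = extrinsic_matrix([0.0, 0.0, np.pi / 2], [1.0, 2.0, 3.0])
Rm = T[:3, :3]
```

The nine entries of the resulting 3x3 block are all determined by the three input numbers, and the block is orthonormal with determinant 1.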

![Many Existing Detectors Available](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-70-320.jpg)

• Hessian & Harris [Beaudet '78], [Harris '88]

• Laplacian, DoG [Lindeberg '98], [Lowe 1999]

• Harris-/Hessian-Laplace [Mikolajczyk & Schmid '01]

• Harris-/Hessian-Affine [Mikolajczyk & Schmid '04]

• EBR and IBR [Tuytelaars & Van Gool '04]

• MSER [Matas '02]

• Salient Regions [Kadir & Brady '01]

• Others…

K. Grauman, B. Leibe

![Corner Detection: Mathematics](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-72-320.jpg)

Change in appearance of window $w(x,y)$ for the shift $[u,v]$:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

Figure labels: $I(x,y)$, $w(x,y)$, $E(u,v)$, $E(3,2)$
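The window change E(u, v), the weighted sum of squared differences between a window and its shifted copy, can be evaluated directly for a given shift. The sketch below assumes a square box window (weight 1 inside, 0 outside), integer shifts, and windows that stay inside the image; `window_change` and its parameters are names invented for this example.

```python
import numpy as np

def window_change(image, x0, y0, u, v, radius=2):
    """E(u, v) for a box window centred at column x0, row y0 (integer shifts only)."""
    image = np.asarray(image, dtype=float)
    rows = slice(y0 - radius, y0 + radius + 1)
    cols = slice(x0 - radius, x0 + radius + 1)
    rows_shifted = slice(y0 + v - radius, y0 + v + radius + 1)
    cols_shifted = slice(x0 + u - radius, x0 + u + radius + 1)
    diff = image[rows_shifted, cols_shifted] - image[rows, cols]
    return float(np.sum(diff ** 2))

# Synthetic image: a bright square on a dark background.
img = np.zeros((40, 40))
img[10:30, 10:30] = 1.0

e_corner = window_change(img, 10, 10, 3, 2)       # window on a corner of the square
e_zero = window_change(img, 10, 10, 0, 0)         # no shift
e_flat = window_change(img, 3, 3, 3, 2)           # window in a flat region
e_along_edge = window_change(img, 10, 20, 0, 3)   # shift along a vertical edge
e_across_edge = window_change(img, 10, 20, 3, 0)  # shift across the same edge
```

With this image, the unshifted window and the flat region give zero change, a shift along an edge also gives zero, and shifts at a corner or across an edge give a positive change.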

![Corner Detection: Mathematics](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-73-320.jpg)

Change in appearance of window $w(x,y)$ for the shift $[u,v]$:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

Figure labels: $I(x,y)$, $w(x,y)$, $E(u,v)$, $E(0,0)$

![Corner Detection: Mathematics](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-74-320.jpg)

Change in appearance of window $w(x,y)$ for the shift $[u,v]$:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

• Window function: $w(x,y)$

• Shifted intensity: $I(x+u,\,y+v)$

• Intensity: $I(x,y)$

Window function $w(x,y)$ = Gaussian, or 1 in window, 0 outside

Source: R. Szeliski

![Corner Detection: Mathematics](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-75-320.jpg)

Change in appearance of window $w(x,y)$ for the shift $[u,v]$:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

We want to find out how this function behaves for small shifts.

Figure label: $E(u,v)$

![Corner Detection: Mathematics](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-76-320.jpg)

Change in appearance of window $w(x,y)$ for the shift $[u,v]$:

$$E(u,v) = \sum_{x,y} w(x,y)\,\big[I(x+u,\,y+v) - I(x,y)\big]^2$$

We want to find out how this function behaves for small shifts.

Local quadratic approximation of $E(u,v)$ in the neighborhood of $(0,0)$ is given by the second-order Taylor expansion:

$$E(u,v) \approx E(0,0) + \begin{bmatrix} u & v \end{bmatrix} \begin{bmatrix} E_u(0,0) \\ E_v(0,0) \end{bmatrix} + \frac{1}{2} \begin{bmatrix} u & v \end{bmatrix} \begin{bmatrix} E_{uu}(0,0) & E_{uv}(0,0) \\ E_{uv}(0,0) & E_{vv}(0,0) \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix}$$
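As a worked step, the terms of this expansion can be evaluated from the definition $E(u,v) = \sum_{x,y} w(x,y)\,[I(x+u,y+v) - I(x,y)]^2$. The derivation below is a sketch under the usual assumption that $I$ is differentiable; it shows how the expansion collapses to a quadratic form in the matrix $M$ of image-gradient products.

```latex
\begin{aligned}
E(0,0) &= 0, \\
E_u(u,v) &= \sum_{x,y} 2\,w(x,y)\,\big[I(x+u,y+v) - I(x,y)\big]\,I_x(x+u,y+v)
  \;\Rightarrow\; E_u(0,0) = 0, \qquad E_v(0,0) = 0 \text{ likewise}, \\
E_{uu}(0,0) &= \sum_{x,y} 2\,w(x,y)\,I_x^2, \qquad
E_{uv}(0,0) = \sum_{x,y} 2\,w(x,y)\,I_x I_y, \qquad
E_{vv}(0,0) = \sum_{x,y} 2\,w(x,y)\,I_y^2, \\
E(u,v) &\approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix},
\qquad
M = \sum_{x,y} w(x,y) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}.
\end{aligned}
```

The constant and linear terms vanish at $(0,0)$, and the factor of 2 in the second derivatives cancels the $\tfrac{1}{2}$ in the expansion, so only the quadratic form in $M$ remains.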

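![Harris Detector [Harris88]](https://image.slidesharecdn.com/saadalsheekhmulti-view-200308060806/85/Saad-alsheekh-multi-view-80-320.jpg)

• Second moment matrix

$$\mu(\sigma_I, \sigma_D) = g(\sigma_I) * \begin{bmatrix} I_x^2(\sigma_D) & I_x I_y(\sigma_D) \\ I_x I_y(\sigma_D) & I_y^2(\sigma_D) \end{bmatrix}$$

1. Image derivatives (optionally, blur first): $I_x$, $I_y$

2. Square of derivatives: $I_x^2$, $I_y^2$, $I_x I_y$

3. Gaussian filter $g(\sigma_I)$: $g(I_x^2)$, $g(I_y^2)$, $g(I_x I_y)$

4. Cornerness function (both eigenvalues are strong):

$$har = \det[\mu(\sigma_I, \sigma_D)] - \alpha\,[\operatorname{trace}(\mu(\sigma_I, \sigma_D))]^2 = g(I_x^2)\,g(I_y^2) - [g(I_x I_y)]^2 - \alpha\,[g(I_x^2) + g(I_y^2)]^2$$

5. Non-maxima suppression

$$\det M = \lambda_1 \lambda_2, \qquad \operatorname{trace} M = \lambda_1 + \lambda_2$$

Here $\alpha$ is the same empirical constant written $k$ in the corner response function above.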
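The final non-maxima suppression step keeps only pixels whose cornerness is the largest in their neighbourhood. A minimal NumPy sketch follows; the function name, the square neighbourhood, and the `radius` and `threshold` parameters are assumptions for illustration, and ties on a flat plateau are all kept rather than reduced to one point.

```python
import numpy as np

def non_max_suppression(response, radius=1, threshold=0.0):
    """Return (row, col) positions that exceed threshold and equal their local maximum."""
    response = np.asarray(response, dtype=float)
    h, w = response.shape
    size = 2 * radius + 1
    # Pad with -inf so border pixels are compared only against real neighbours.
    padded = np.pad(response, radius, mode="constant", constant_values=-np.inf)
    local_max = np.full((h, w), -np.inf)
    for dy in range(size):
        for dx in range(size):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    keep = (response >= local_max) & (response > threshold)
    return np.argwhere(keep)

# Toy cornerness map: a strong peak, a weaker neighbour, and an isolated weak peak.
har = np.zeros((5, 5))
har[2, 2] = 5.0
har[2, 3] = 3.0
har[0, 0] = 1.0

peaks = non_max_suppression(har, radius=1, threshold=0.0)
```

In this toy map the value at (2, 3) is suppressed because its neighbour at (2, 2) is larger, while the two local maxima survive.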