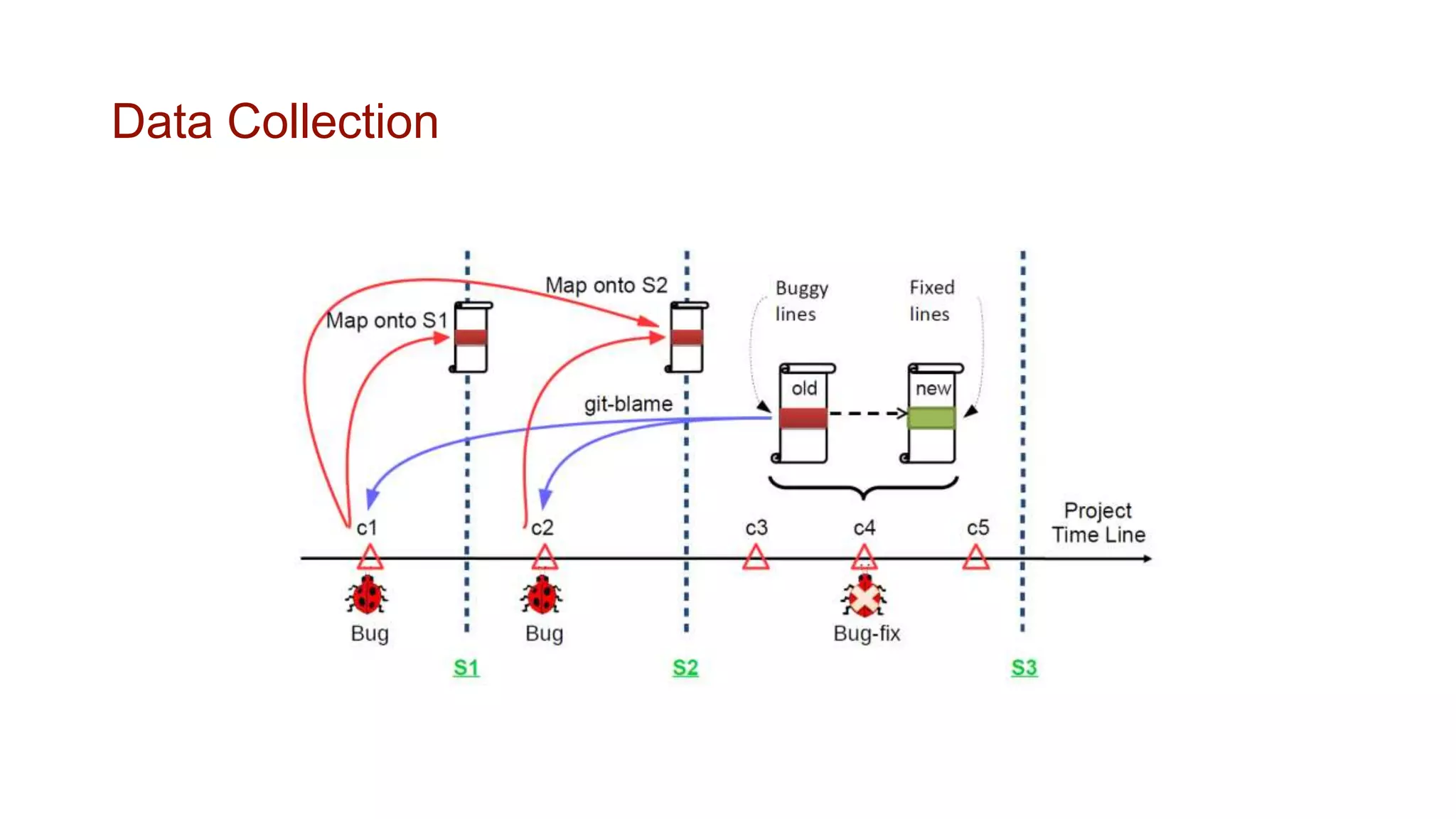

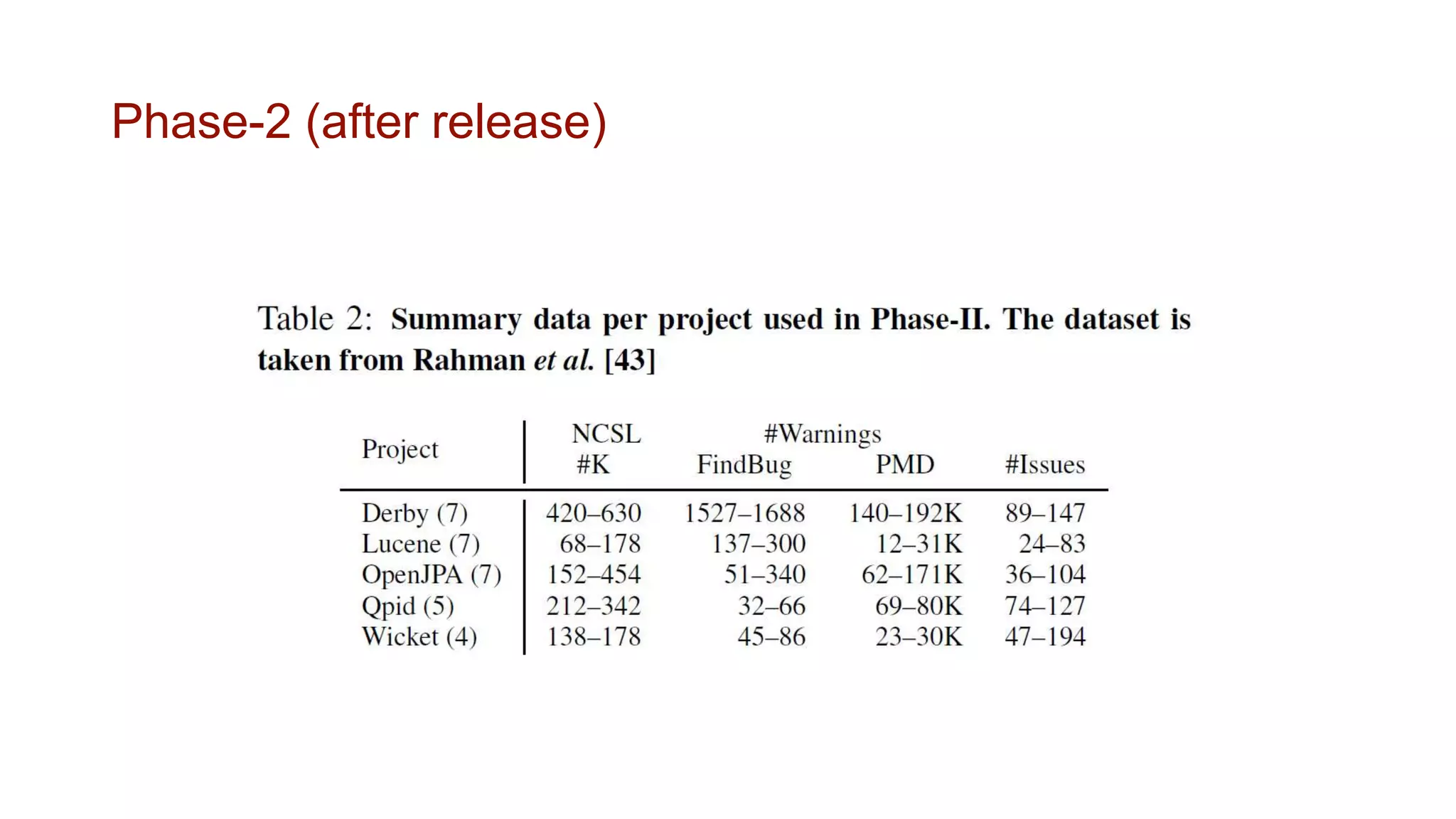

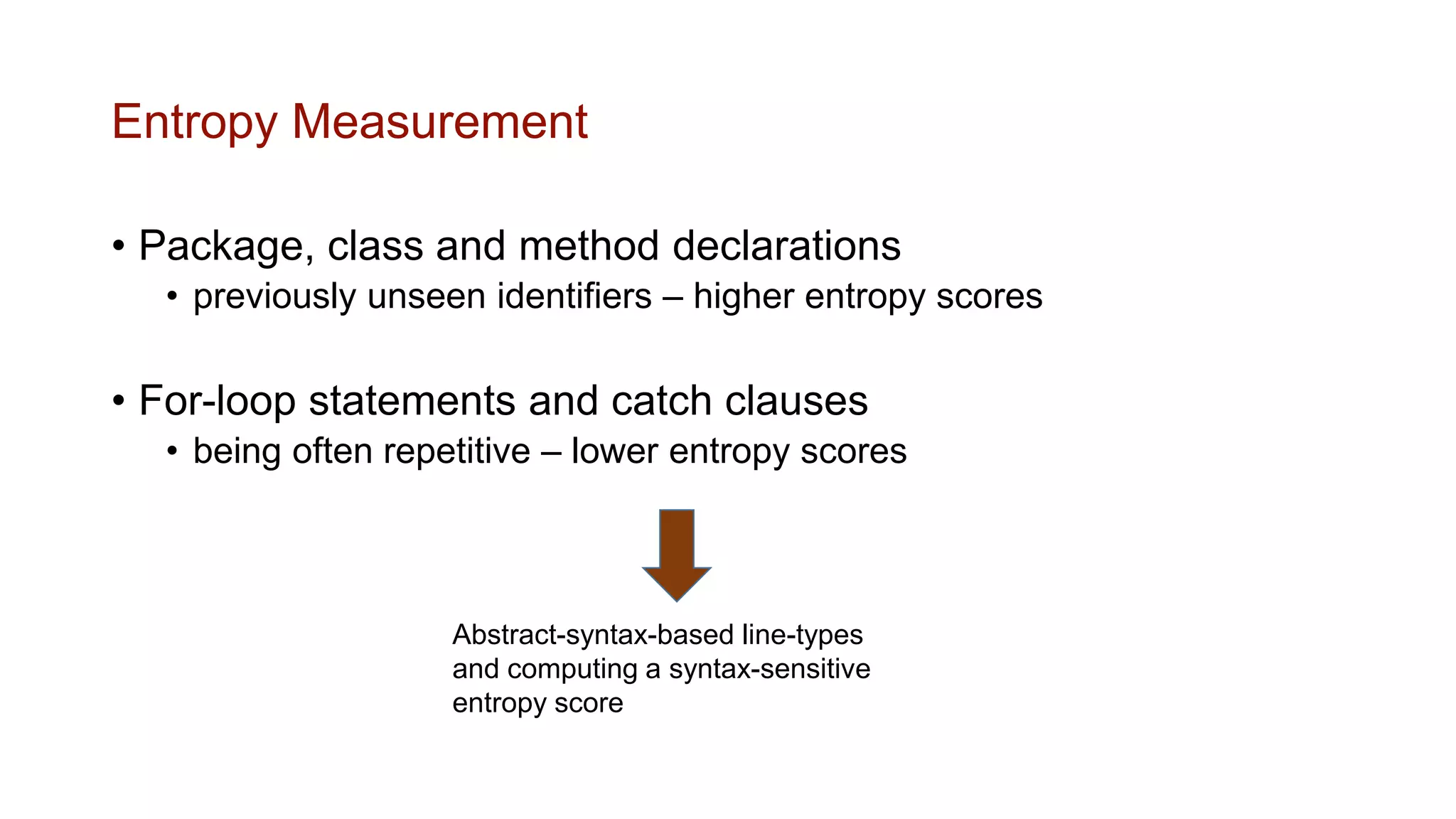

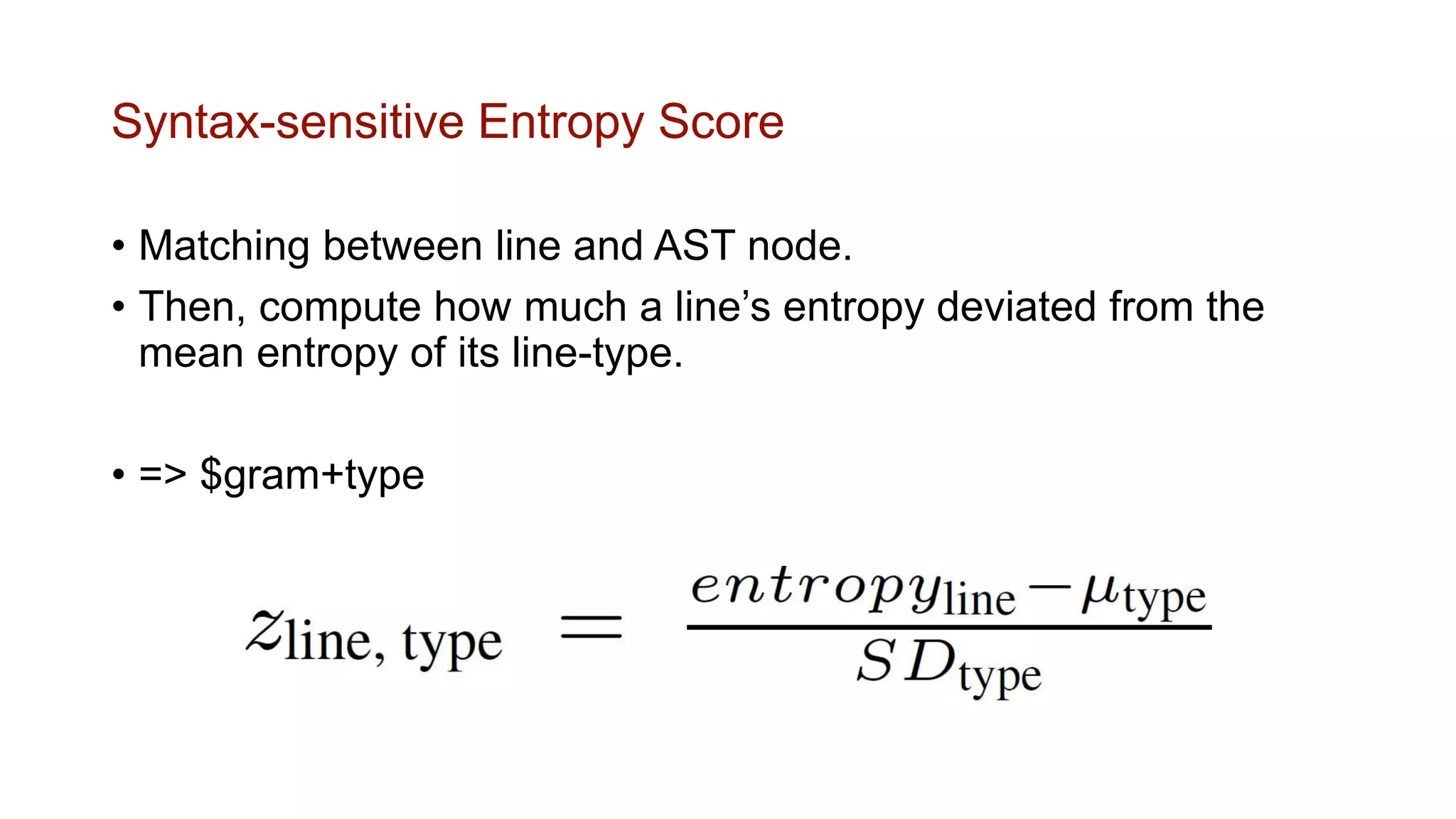

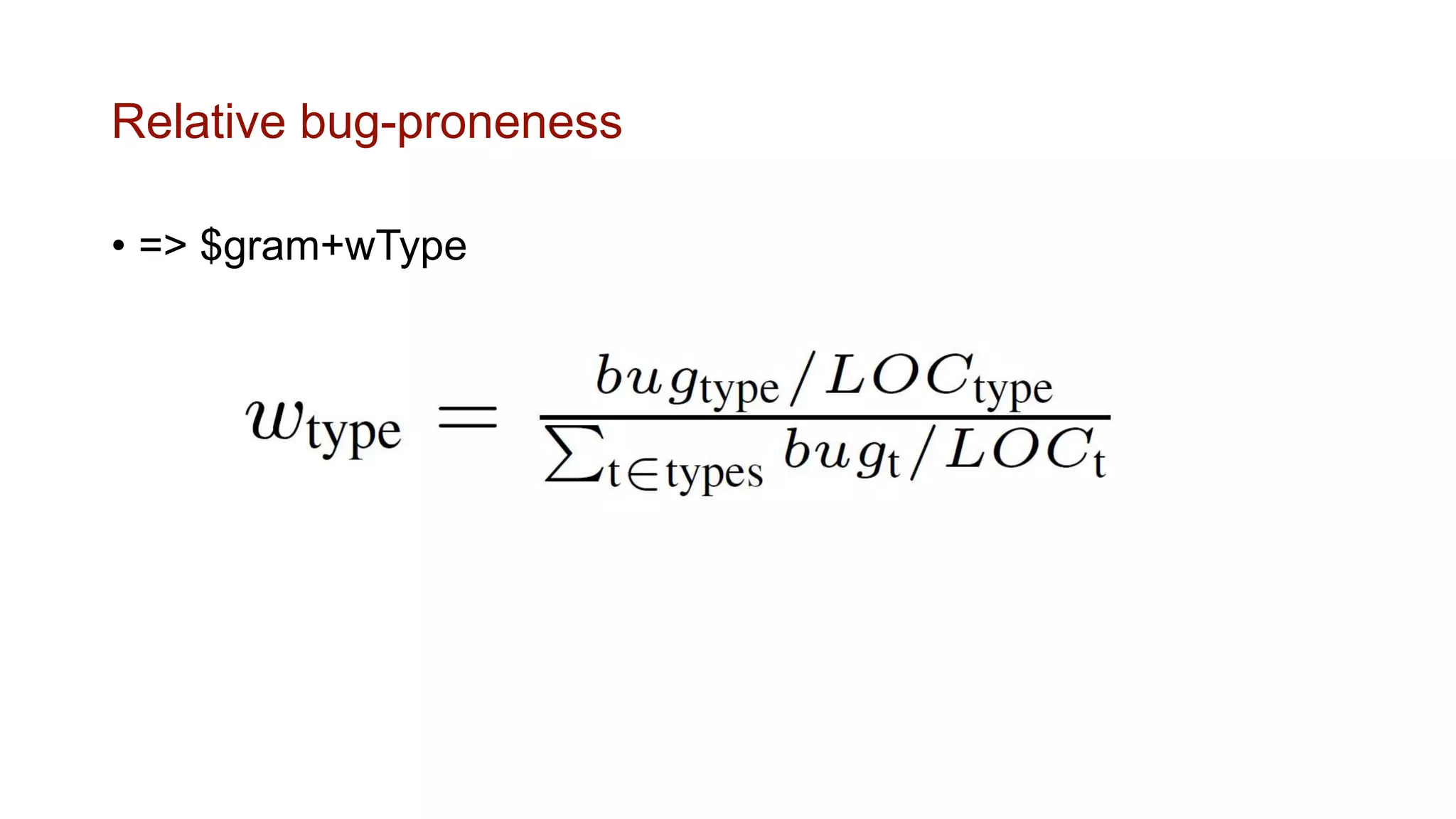

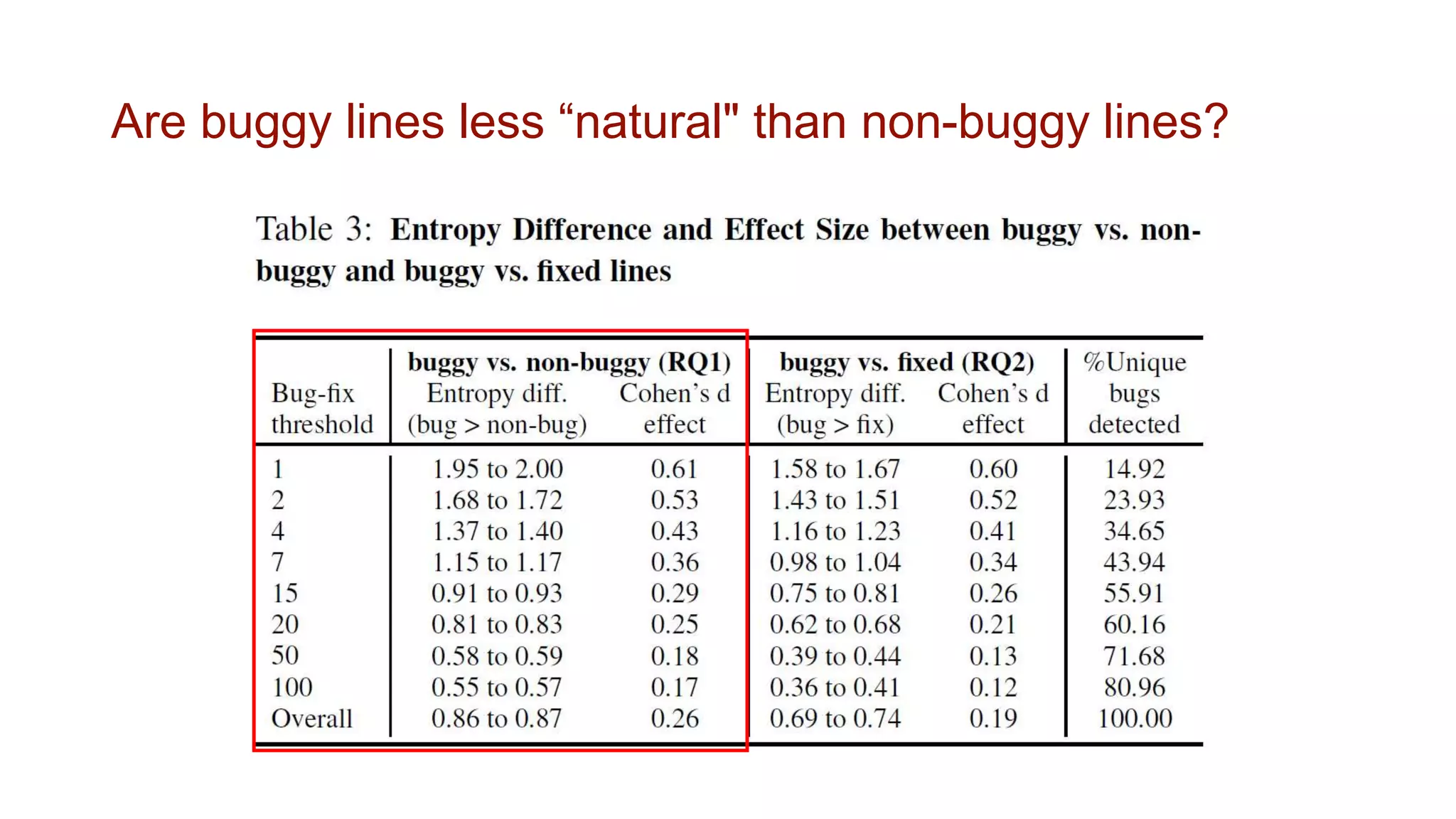

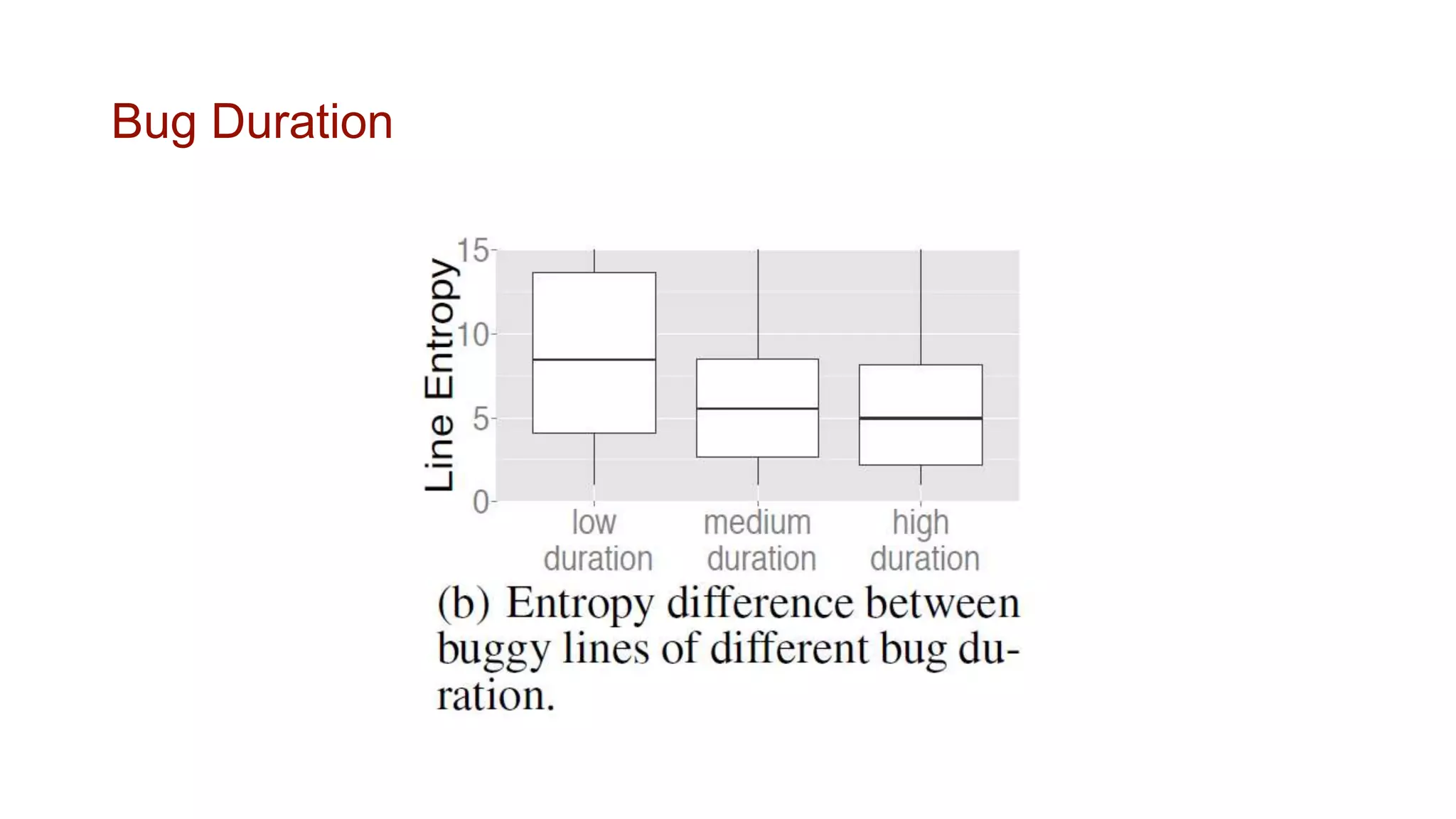

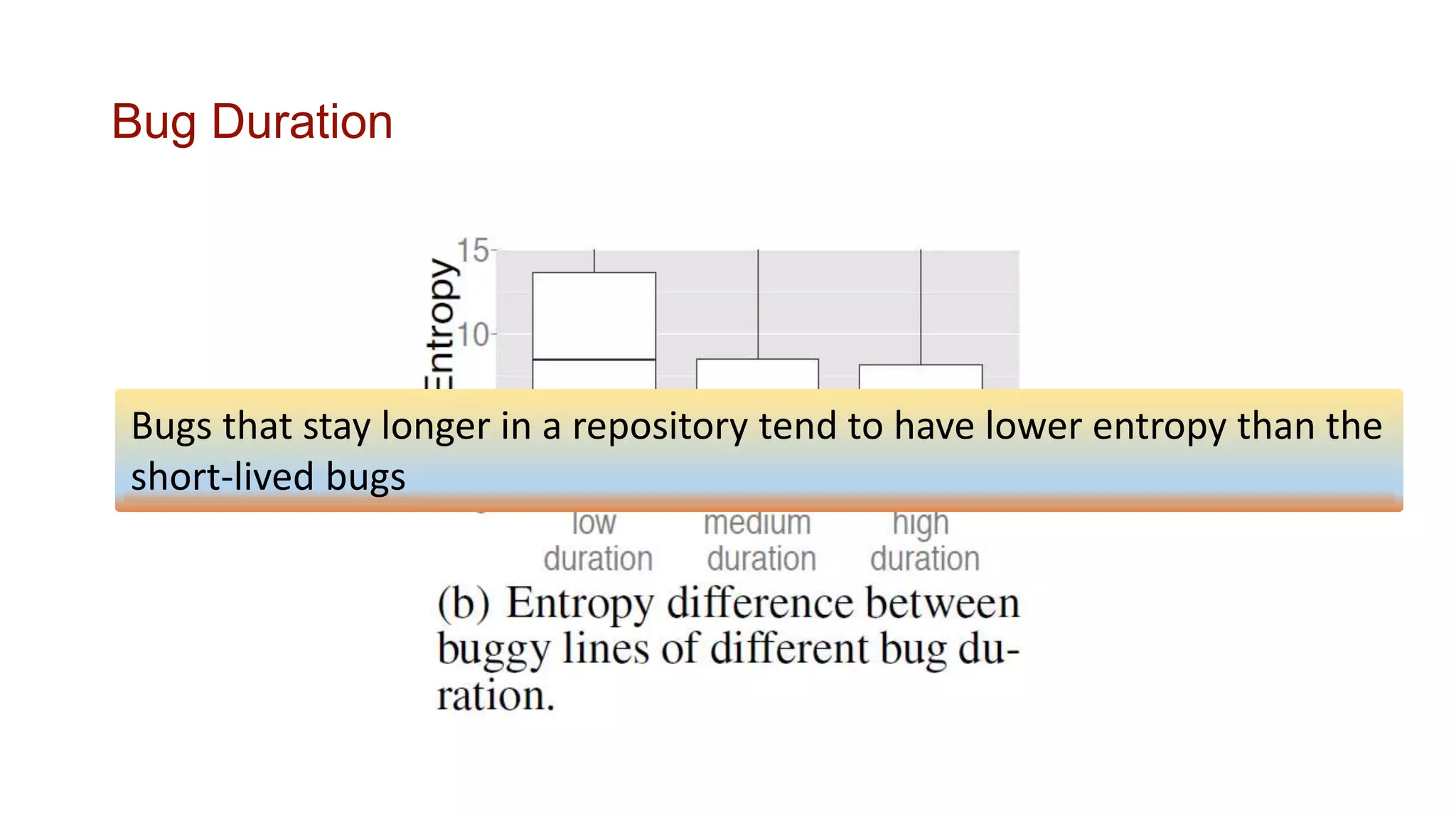

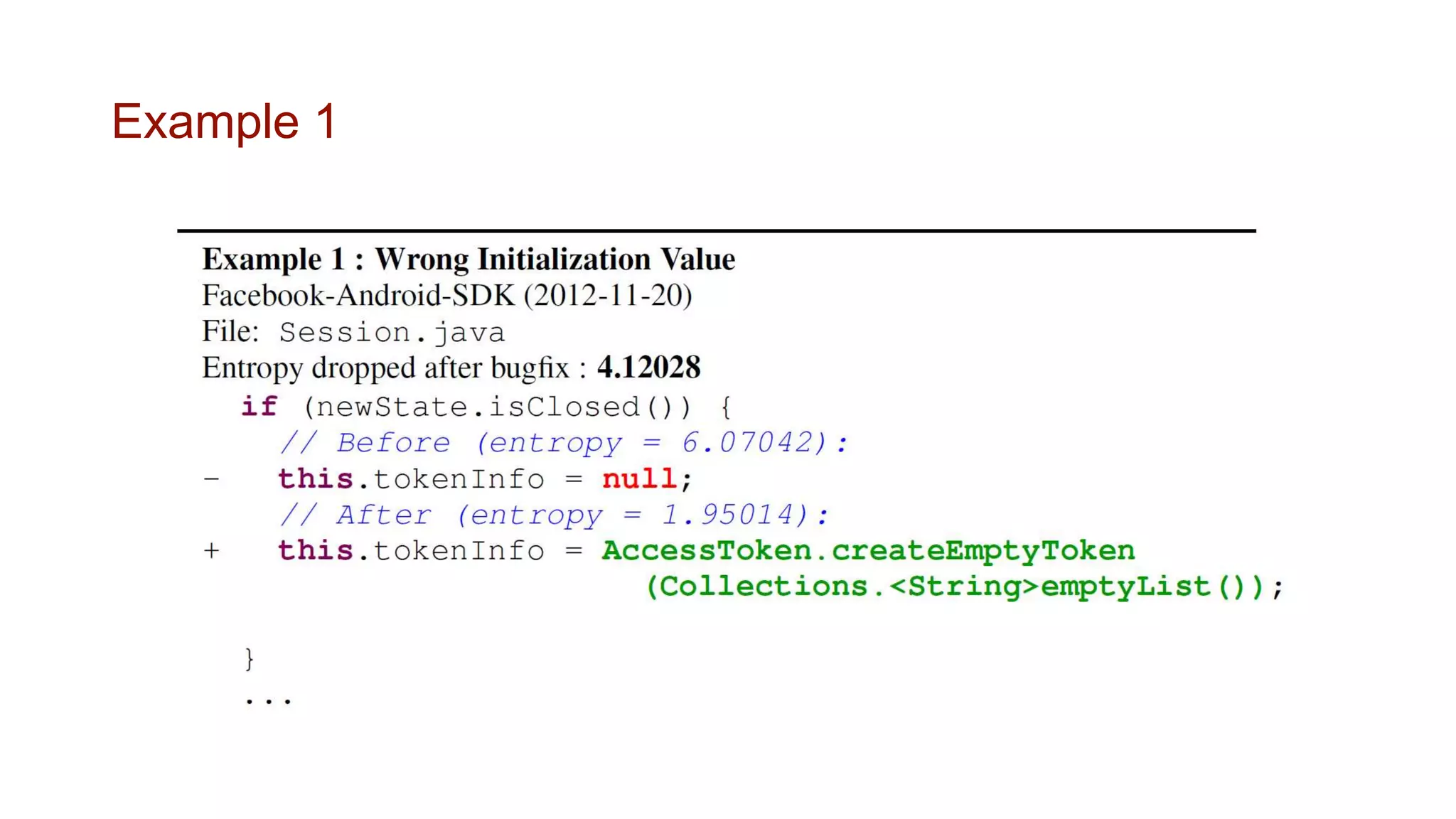

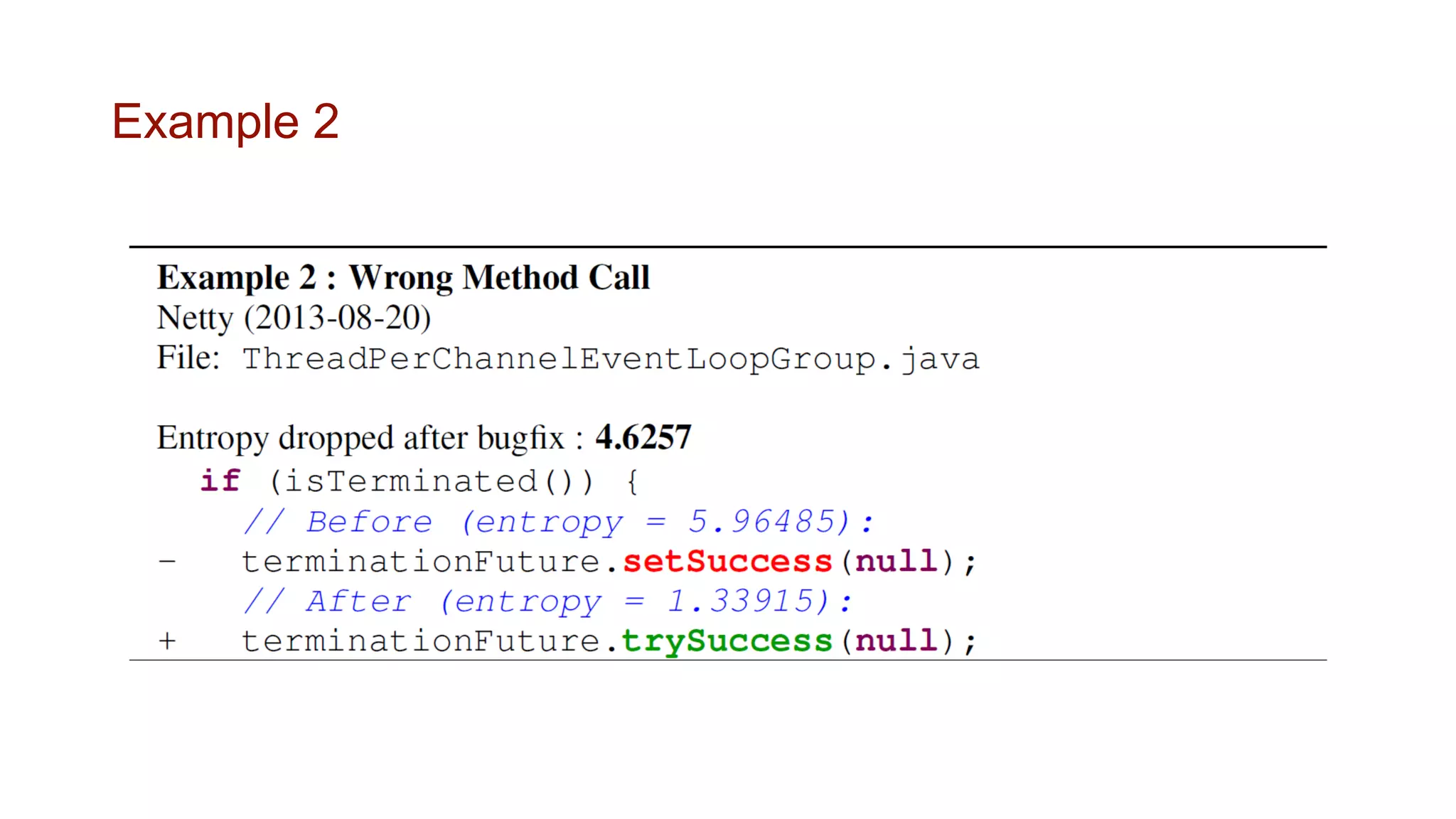

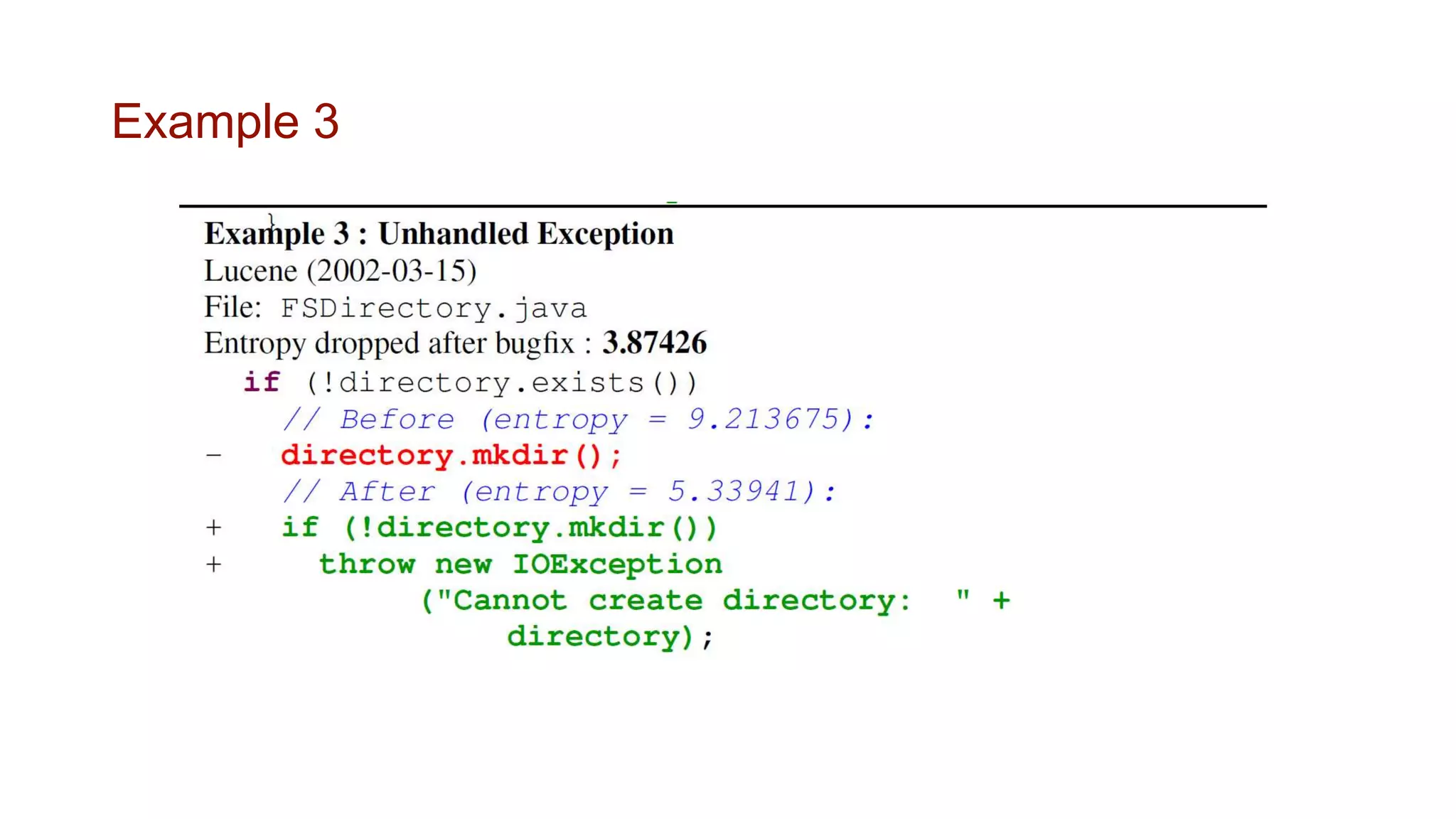

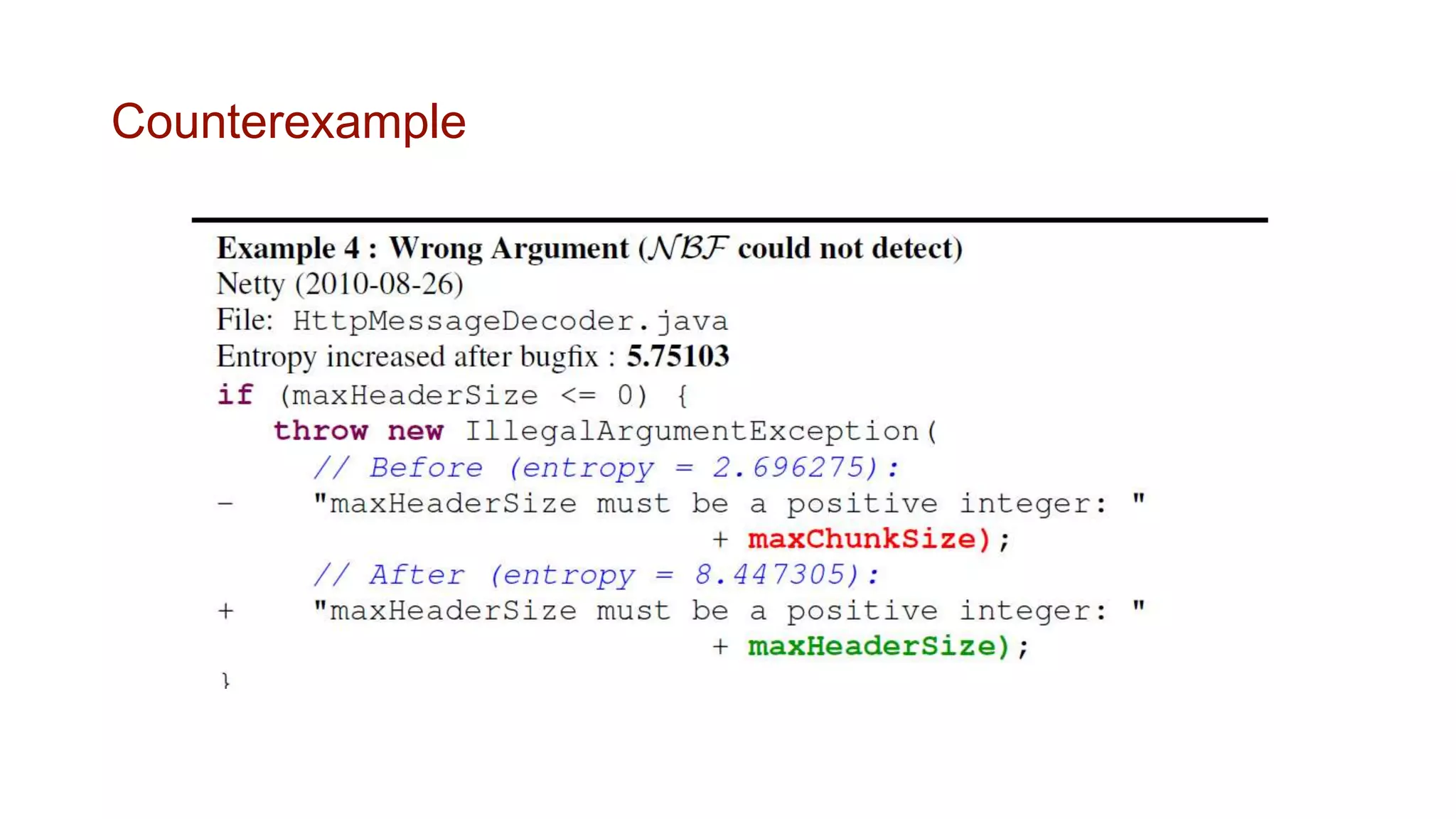

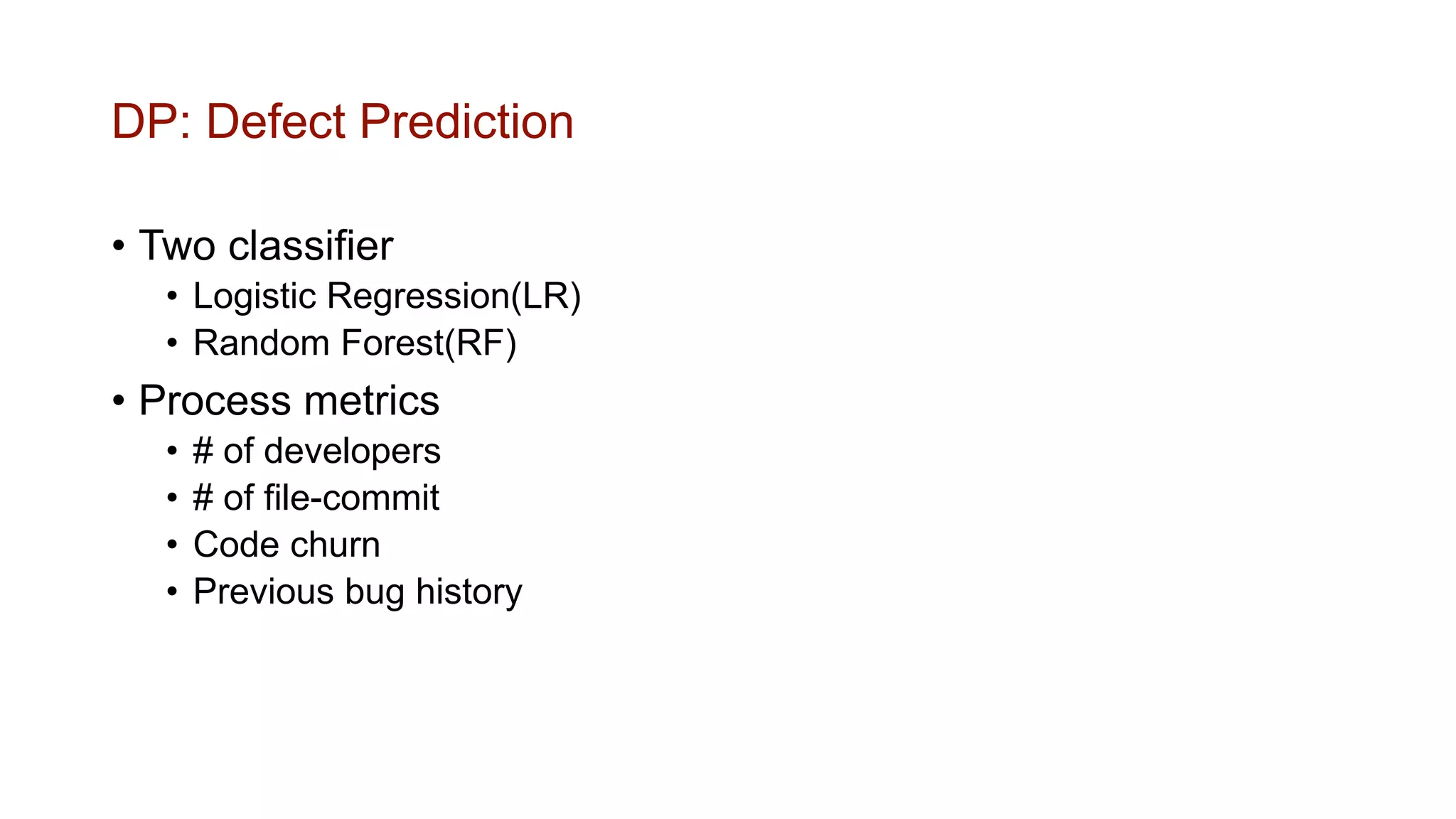

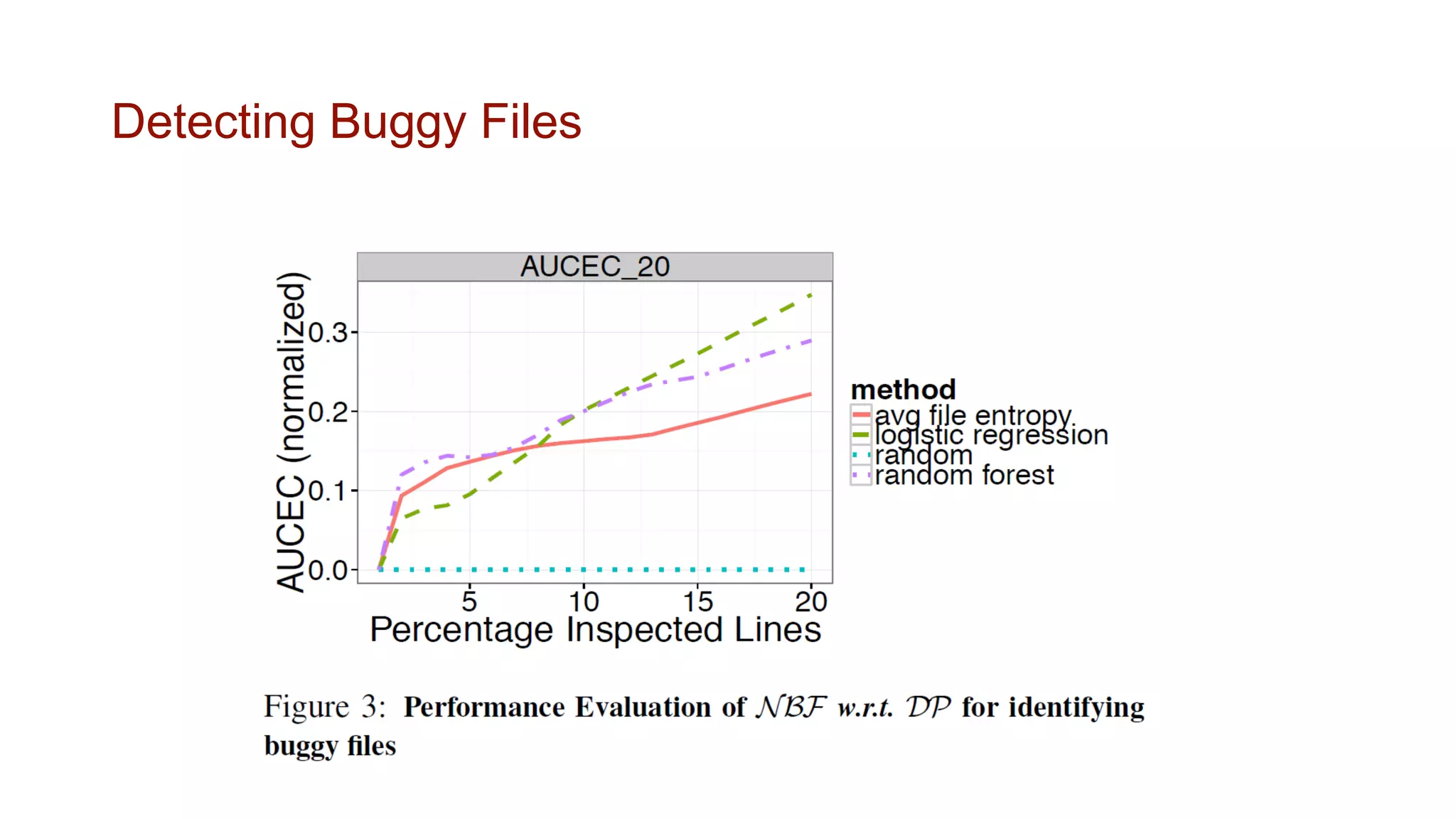

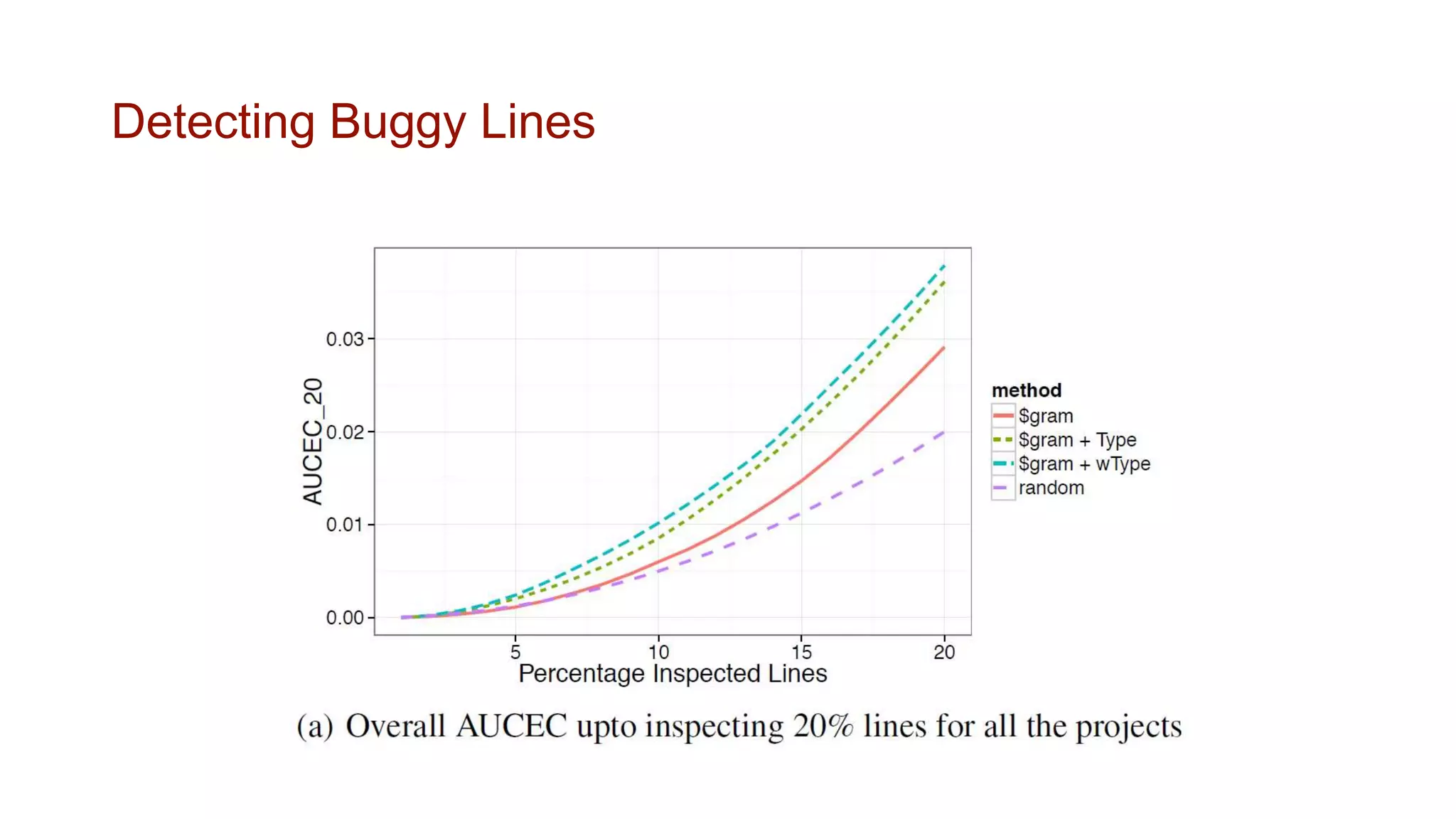

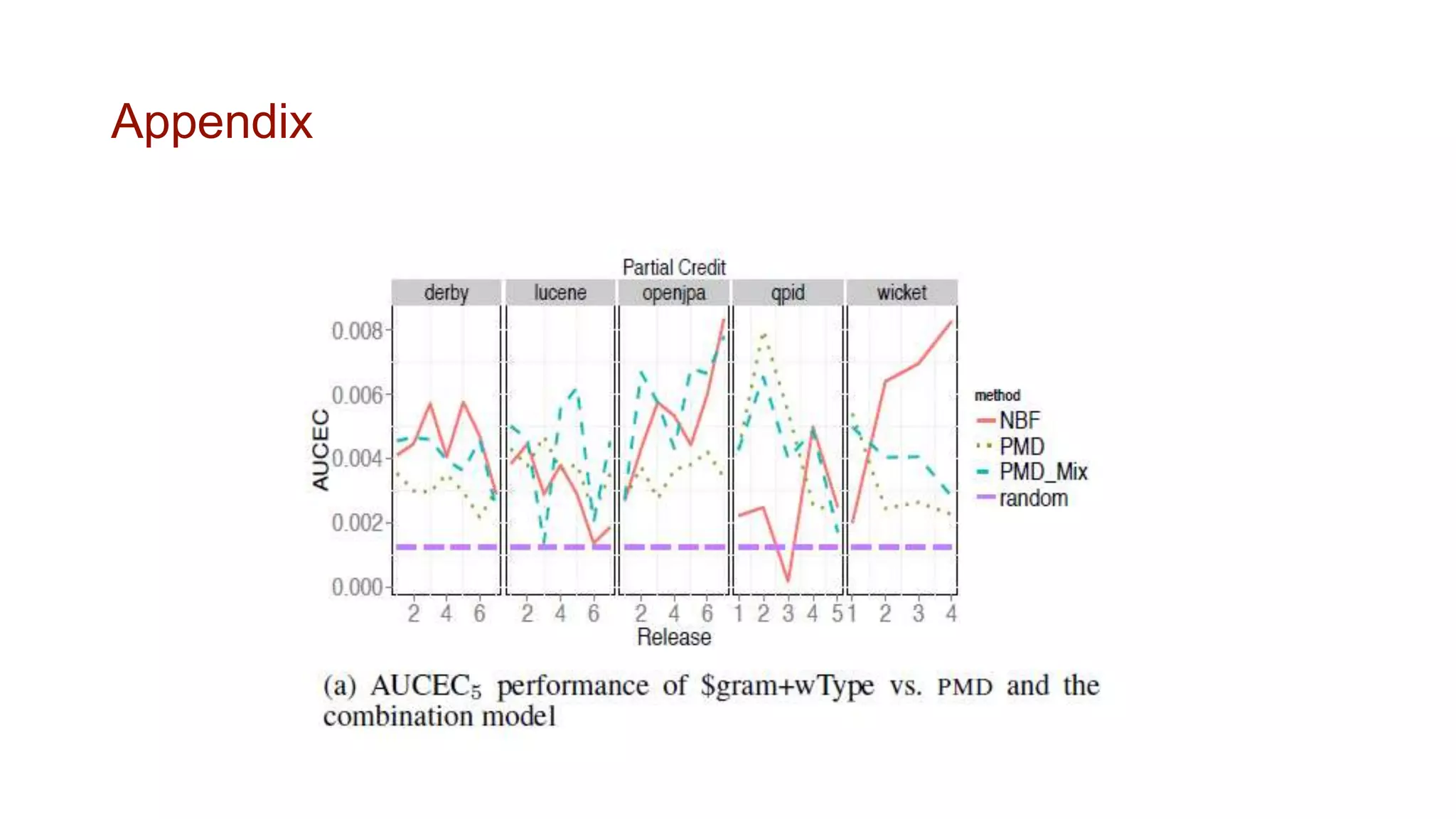

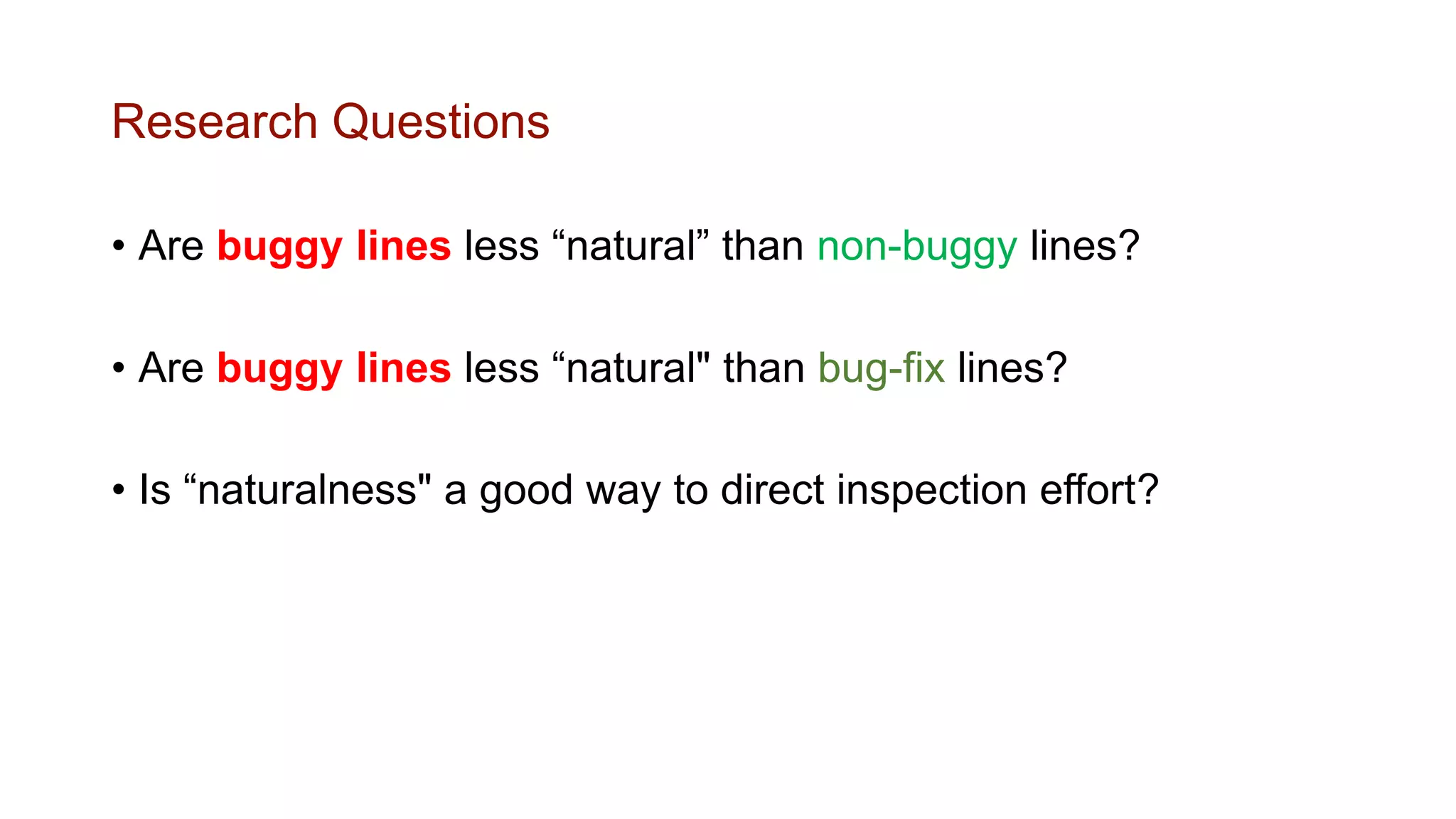

This document summarizes a research paper that studied the "naturalness" of buggy code compared with non-buggy code. The researchers investigated three research questions: (1) Are buggy lines less "natural" than non-buggy lines? (2) Are buggy lines less "natural" than bug-fix lines? (3) Is "naturalness" a good way to direct inspection effort? The study found that buggy lines, on average, had higher entropy (that is, were less natural) than non-buggy lines, and that the entropy of buggy lines dropped after the bugs were fixed. Finally, it concluded that naturalness could be used to guide bug-finding efforts at both the file and the line level.
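The idea of scoring lines by entropy and inspecting the least natural ones first (RQ3) can be illustrated with a toy model. This is a minimal sketch, not the study's implementation: it assumes a whitespace tokenizer, a bigram model with add-one smoothing, and the hypothetical names `BigramModel`, `line_entropy`, and `rank_for_inspection`. The study's own language model is more sophisticated.

```python
import math
from collections import Counter


def tokenize(line):
    # Hypothetical tokenizer: splits on whitespace. A real study would use
    # a language-aware lexer.
    return line.split()


class BigramModel:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, corpus_lines):
        self.bigrams = Counter()   # counts of (previous, next) token pairs
        self.contexts = Counter()  # counts of tokens appearing as a context
        vocab = set()
        for line in corpus_lines:
            tokens = ["<s>"] + tokenize(line)  # <s> marks the start of a line
            vocab.update(tokens[1:])
            self.contexts.update(tokens[:-1])
            self.bigrams.update(zip(tokens, tokens[1:]))
        # One extra slot reserves probability mass for unseen tokens.
        self.vocab_size = len(vocab) + 1

    def prob(self, prev, tok):
        return (self.bigrams[(prev, tok)] + 1) / (self.contexts[prev] + self.vocab_size)

    def line_entropy(self, line):
        """Average -log2 probability per token; higher means less natural."""
        tokens = ["<s>"] + tokenize(line)
        pairs = list(zip(tokens, tokens[1:]))
        if not pairs:
            return 0.0
        return sum(-math.log2(self.prob(p, t)) for p, t in pairs) / len(pairs)


def rank_for_inspection(model, lines):
    """Sort lines from highest to lowest entropy (least natural first)."""
    return sorted(((model.line_entropy(line), line) for line in lines), reverse=True)
```

Trained on a few loop headers, a line such as `for k in range ( n ) :` scores a lower entropy than an unfamiliar line such as `while True : pass`, so the latter is ranked first for inspection.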

![Naturalness

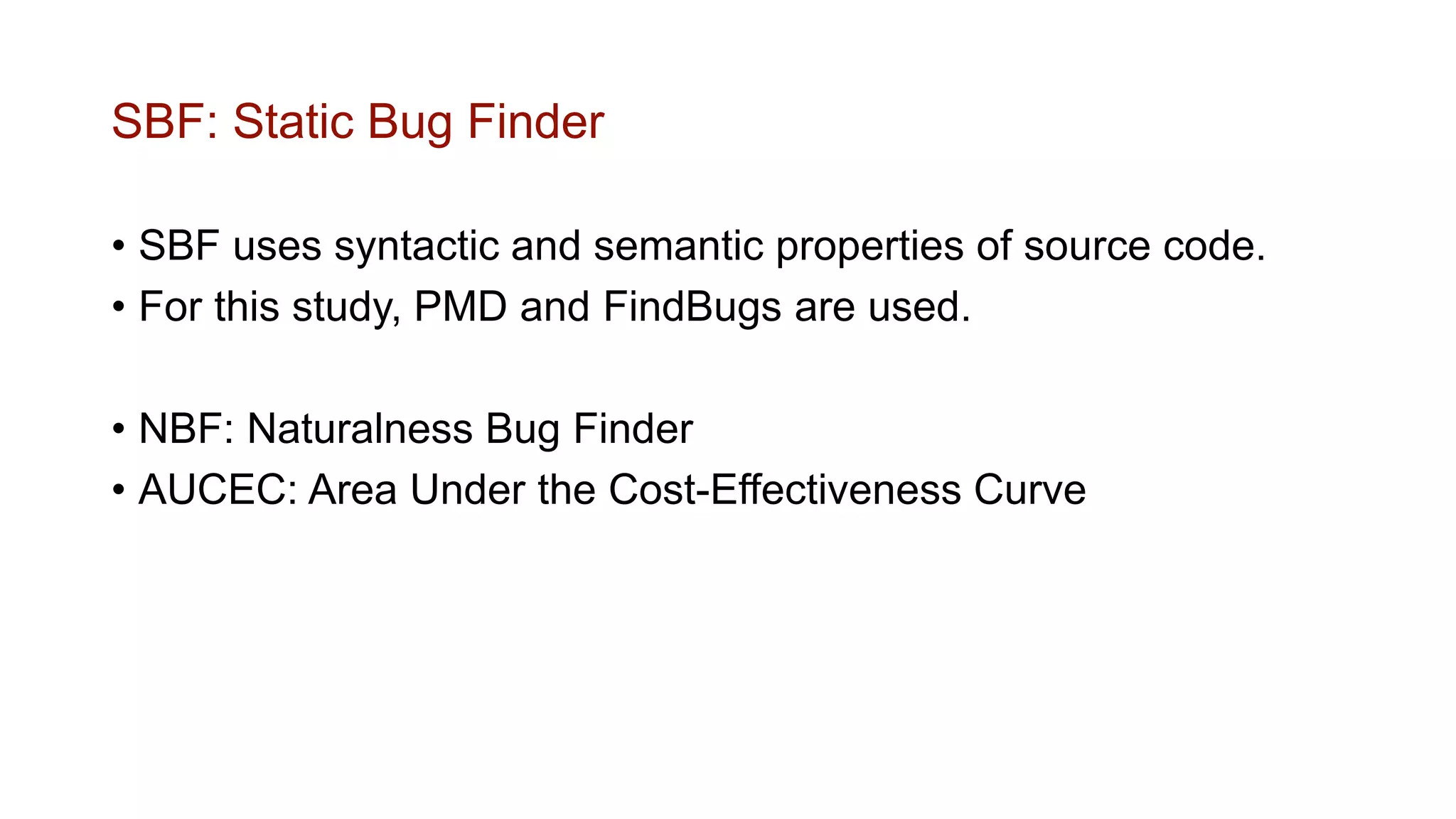

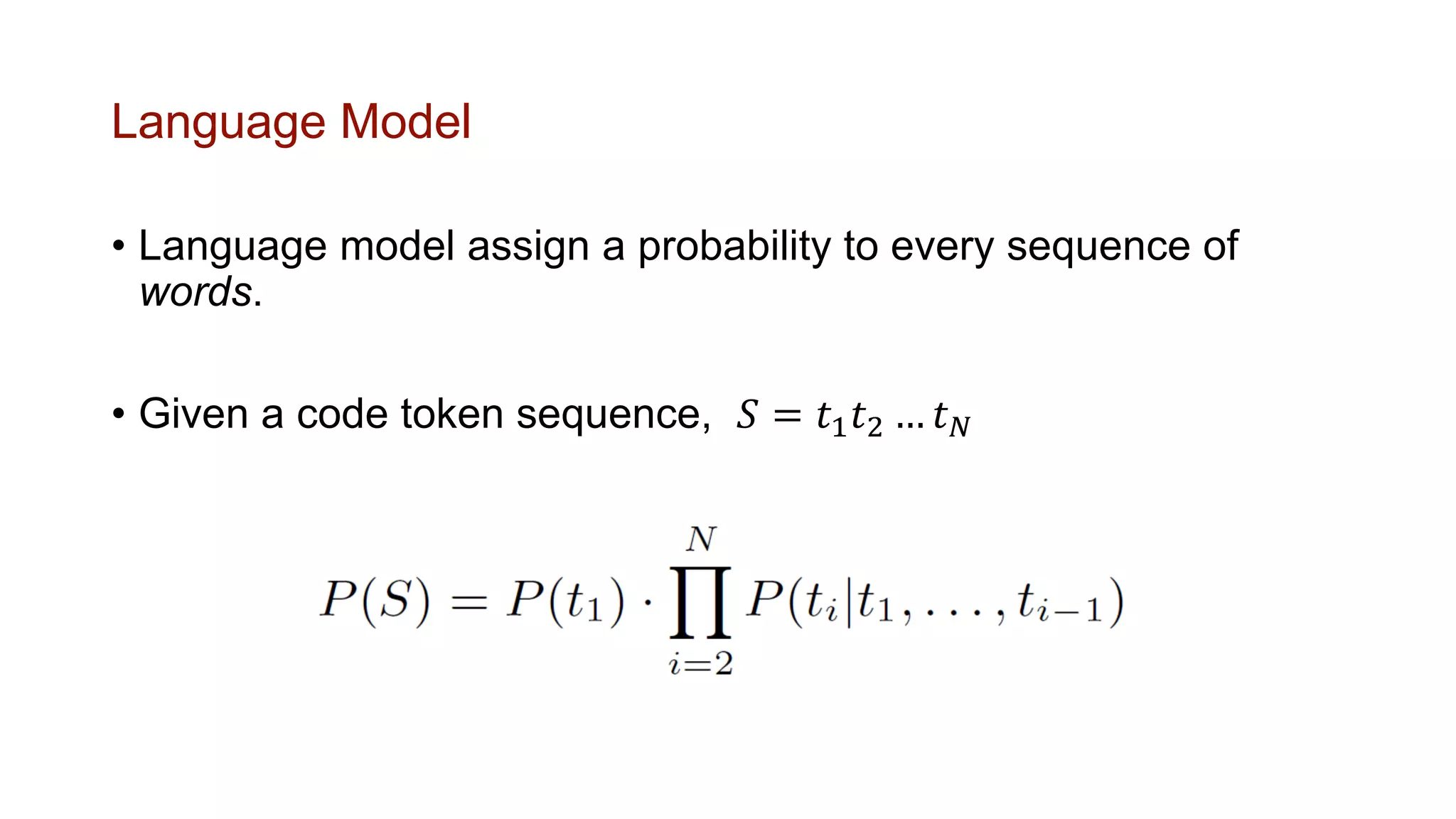

• Real software tends to be natural, like speech or natural

language.

• It tends to be highly repetitive and predictable.

Naturalness of Software1

[1] A. Hindle, E. Barr, M. Gabel, Z. Su, and P. Devanbu. On the naturalness of software. In ICSE, pages 837–847,

2012.](https://image.slidesharecdn.com/onthenaturalnessofbuggycode-181109070142/75/Review-On-the-Naturalness-of-Buggy-Code-2-2048.jpg)
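"Predictable" is usually made precise as cross-entropy. For a token sequence $s = a_1 \ldots a_n$ and a language model $M$, the cross-entropy in the sense of Hindle et al. [1] is shown below; an n-gram model of order $N$ approximates each conditional probability using only the previous $N-1$ tokens. Lower values mean the code is more predictable, i.e., more natural.

$$
H_M(s) = -\frac{1}{n} \sum_{i=1}^{n} \log_2 p_M(a_i \mid a_1 \ldots a_{i-1}),
\qquad
p_M(a_i \mid a_1 \ldots a_{i-1}) \approx p_M(a_i \mid a_{i-N+1} \ldots a_{i-1})
$$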

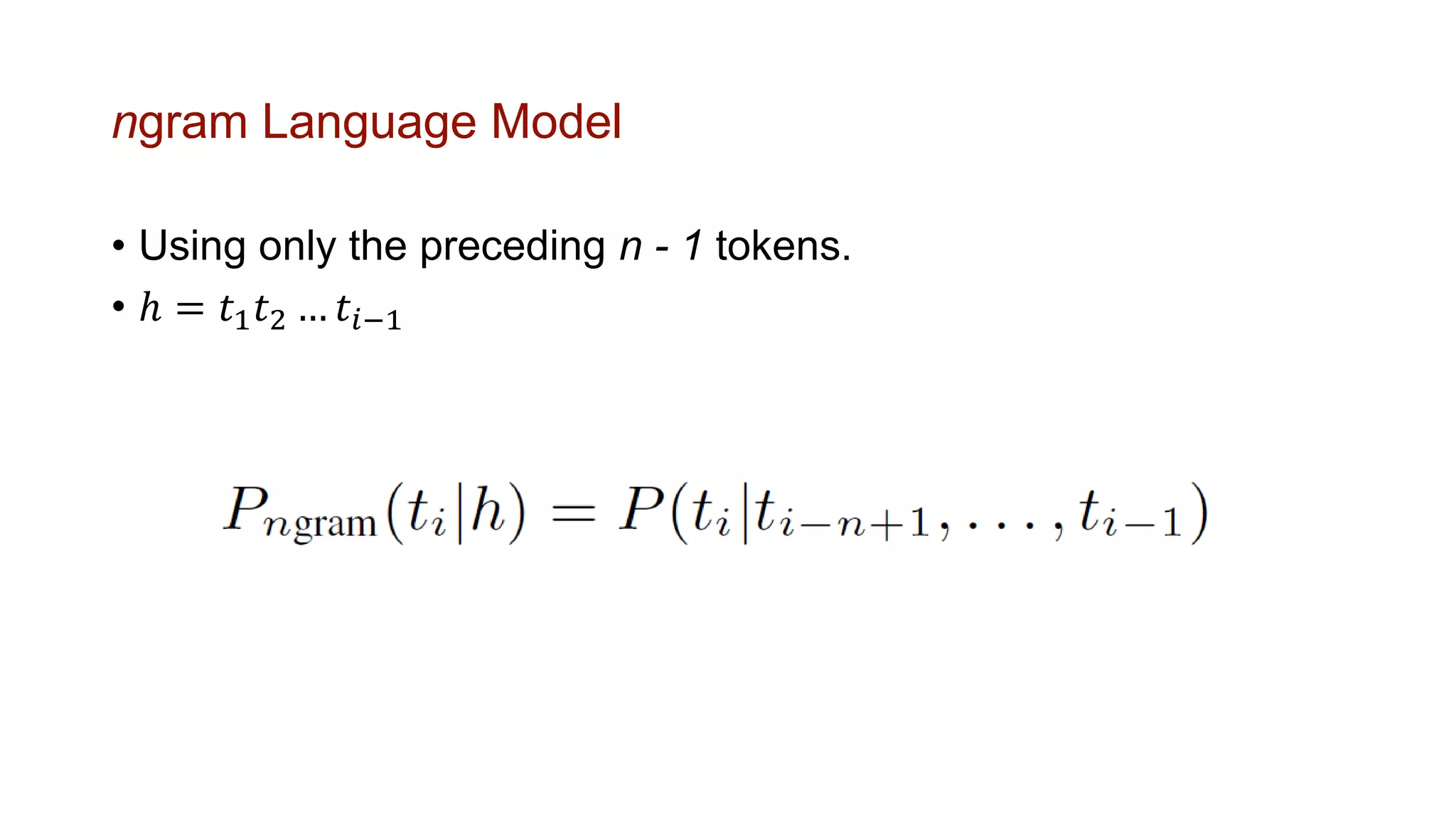

$gram Language Model [2]

• Improves the n-gram language model by adding a cache list of n-grams extracted from the local context, to capture local regularities.

[2] Z. Tu, Z. Su, and P. Devanbu. On the localness of software. In SIGSOFT FSE, pages 269–280, 2014.

![$gram Language Model](https://image.slidesharecdn.com/onthenaturalnessofbuggycode-181109070142/75/Review-On-the-Naturalness-of-Buggy-Code-8-2048.jpg)
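The cache idea can be sketched by mixing a global model with a small cache of bigrams seen recently in the file being scored. This is an illustrative sketch only: the class name `CachedBigramModel`, the fixed interpolation weight `lam`, the `cache_size`, and the use of bigrams are all assumptions, and the actual $gram model in [2] uses its own interpolation scheme.

```python
import math
from collections import Counter, deque


class CachedBigramModel:
    """Global bigram model linearly interpolated with a local bigram cache."""

    def __init__(self, corpus_tokens, lam=0.5, cache_size=500):
        # Global component: add-one smoothed bigram counts over the corpus.
        self.global_bigrams = Counter(zip(corpus_tokens, corpus_tokens[1:]))
        self.global_ctx = Counter(corpus_tokens[:-1])
        self.vocab_size = len(set(corpus_tokens)) + 1  # +1 for unseen tokens
        self.lam = lam  # assumed fixed weight on the global model
        # Local component: the most recent bigrams from the file being scored.
        self.cache = deque(maxlen=cache_size)

    def _global_prob(self, prev, tok):
        return (self.global_bigrams[(prev, tok)] + 1) / (self.global_ctx[prev] + self.vocab_size)

    def _cache_prob(self, prev, tok):
        # Maximum-likelihood estimate from the cache; None if prev is not cached.
        followers = [t for p, t in self.cache if p == prev]
        if not followers:
            return None
        return followers.count(tok) / len(followers)

    def prob(self, prev, tok):
        p_global = self._global_prob(prev, tok)
        p_cache = self._cache_prob(prev, tok)
        if p_cache is None:
            return p_global  # no local evidence: fall back to the global model
        return self.lam * p_global + (1 - self.lam) * p_cache

    def score_and_update(self, tokens):
        """Return average bits per token, then add the tokens' bigrams to the cache."""
        pairs = list(zip(tokens, tokens[1:]))
        if not pairs:
            return 0.0
        bits = sum(-math.log2(self.prob(p, t)) for p, t in pairs)
        self.cache.extend(pairs)
        return bits / len(pairs)
```

Scoring the same locally repeated idiom twice shows the effect: the second occurrence gets a lower entropy because the cache has already seen it, even though the global corpus never contained it.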