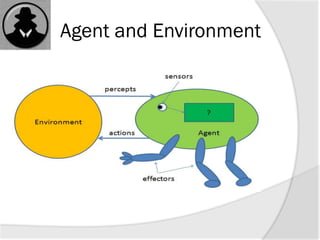

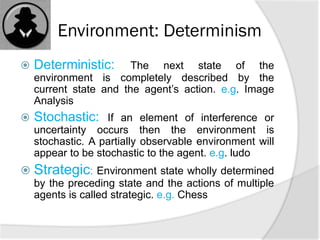

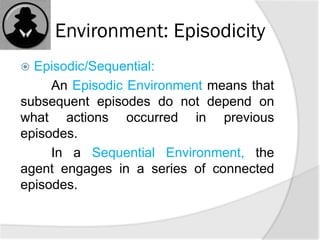

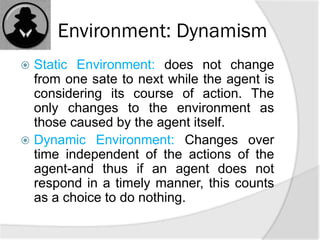

An agent is anything that can perceive its environment and act upon it. An intelligent agent must sense its environment, act autonomously, and behave rationally. Environments come in different types, and agents fall into different classes. One application is the automated online assistant: an intelligent agent that perceives customer needs and provides individualized customer service.
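
The perceive-and-act cycle described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and does not come from the text: the two-cell `VacuumWorld` environment, the `reflex_agent` function, and the action names `suck`, `left`, and `right` are all hypothetical. It shows only the simplest kind of agent, one that maps its current percept directly to an action.

```python
from dataclasses import dataclass, field


@dataclass
class VacuumWorld:
    """Hypothetical two-cell environment; each cell is dirty or clean."""
    dirty: dict = field(default_factory=lambda: {"A": True, "B": True})
    location: str = "A"

    def percept(self):
        # What the agent can sense: its location and whether that cell is dirty.
        return self.location, self.dirty[self.location]

    def apply(self, action):
        # How an action changes the environment.
        if action == "suck":
            self.dirty[self.location] = False
        elif action == "right":
            self.location = "B"
        elif action == "left":
            self.location = "A"


def reflex_agent(percept):
    """Map the current percept directly to an action (no memory, no model)."""
    location, is_dirty = percept
    if is_dirty:
        return "suck"
    return "right" if location == "A" else "left"


def run(env, agent, steps):
    """Perceive-act loop: sense, choose an action, act, repeat."""
    history = []
    for _ in range(steps):
        action = agent(env.percept())
        env.apply(action)
        history.append(action)
    return history
```

Calling `run(VacuumWorld(), reflex_agent, 3)` drives the agent through three sense-act steps without outside intervention. The rule "clean a dirty cell, otherwise move on" is a simple stand-in for rational behavior in this toy setting.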