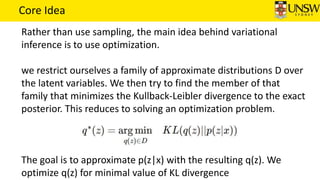

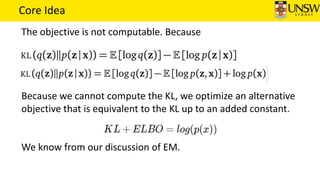

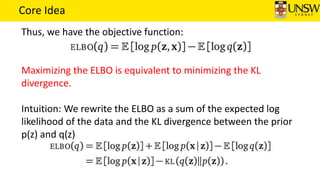

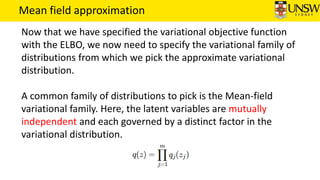

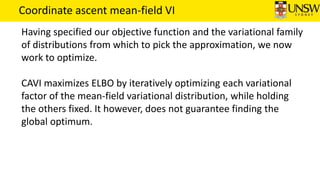

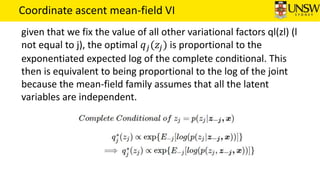

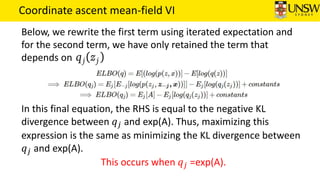

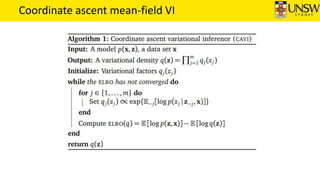

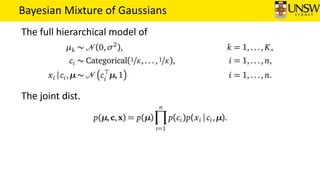

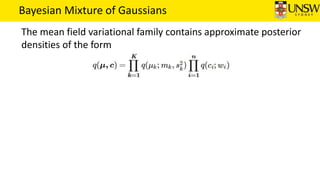

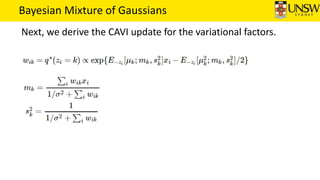

Variational inference is a family of techniques for approximating intractable integrals arising in Bayesian inference and machine learning. It approximates posterior densities for Bayesian models as an alternative to Markov chain Monte Carlo that is faster and easier to scale to large data. The core idea of variational inference is to restrict the approximate posterior to a family of distributions and optimize it to minimize its Kullback-Leibler divergence from the true posterior. This results in an optimization problem of maximizing the evidence lower bound. Mean field variational inference uses a mean field approximation that assumes independent factors and optimizes each factor in turn using coordinate ascent. Variational inference was applied to a Bayesian mixture of Gaussians model as an example.

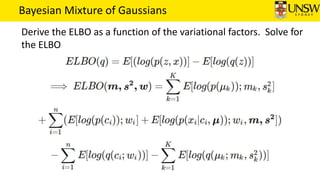

![What is Variational Inference?

Variational Bayesian methods are a family of techniques for

approximating intractable integrals arising in Bayesian inference

and machine learning [Wiki].

It is widely used to approximate posterior densities for Bayesian

models, an alternative strategy to Markov Chain Monte Carlo, but

it tends to be faster and easier to scale to large data.

It has been applied to problems such as document analysis,

computational neuroscience and computer vision.](https://image.slidesharecdn.com/learninggroup-variationalinference-20180525-180606045516/85/Learning-group-variational-inference-3-320.jpg)