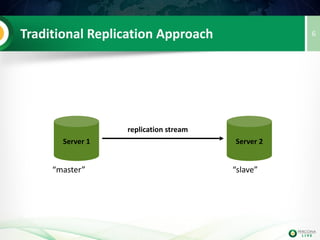

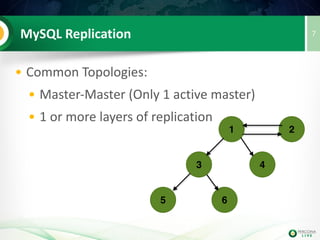

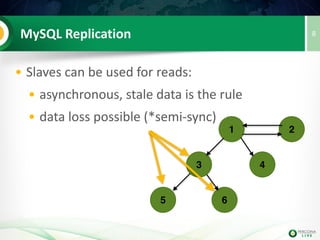

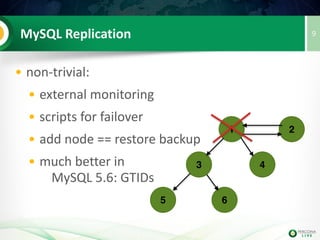

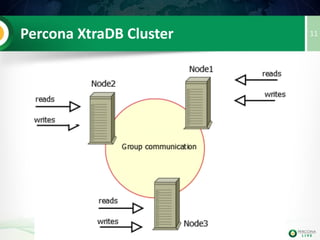

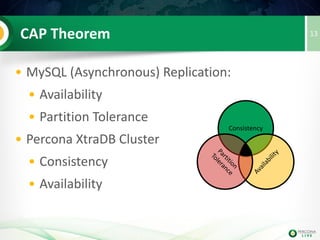

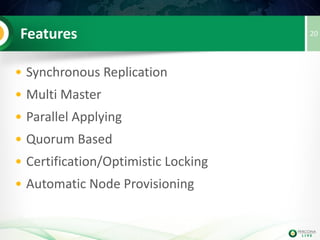

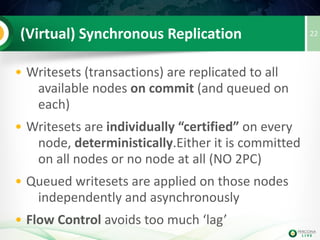

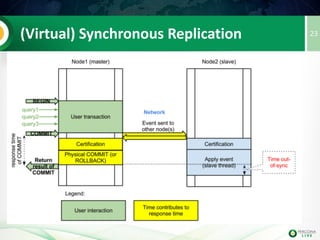

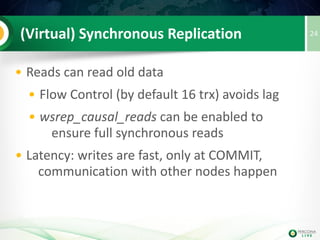

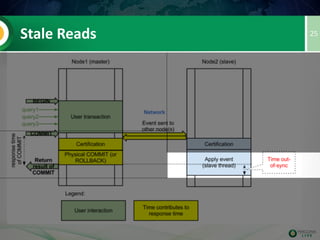

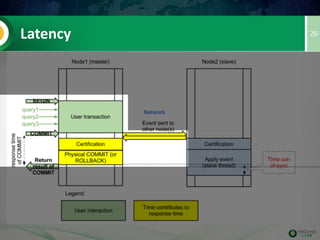

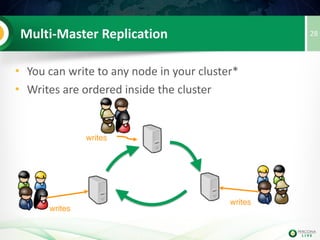

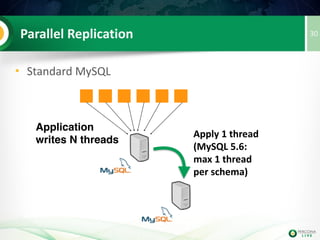

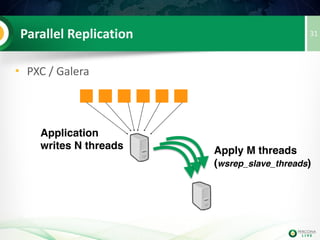

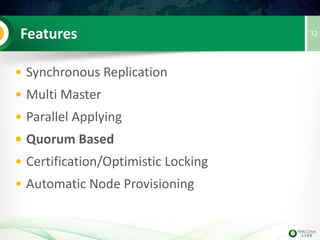

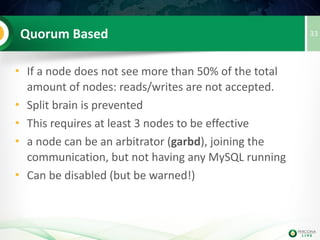

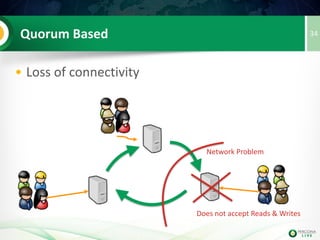

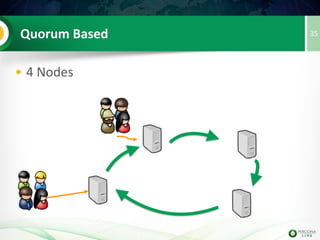

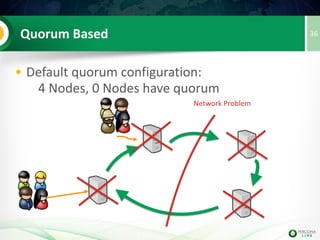

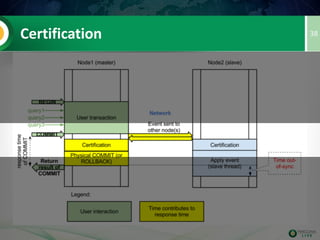

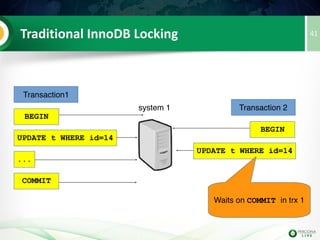

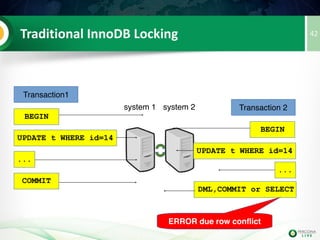

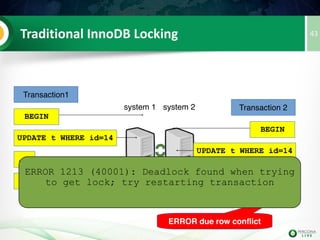

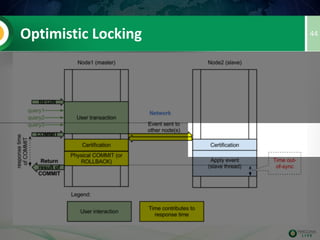

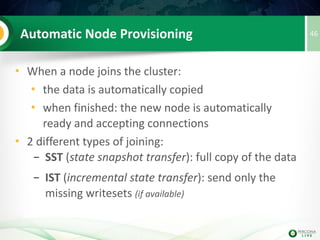

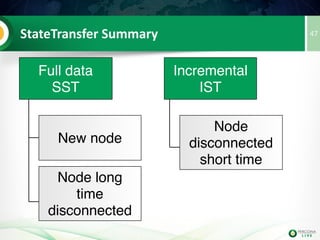

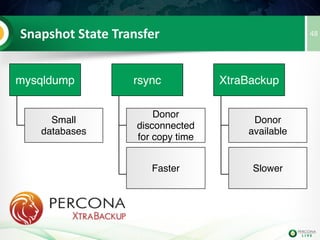

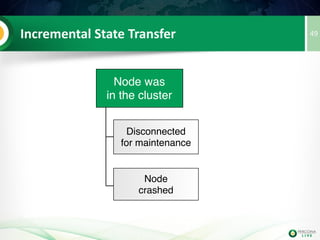

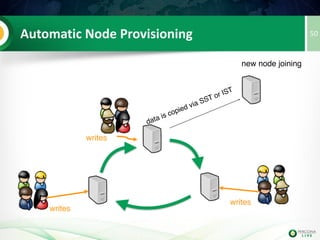

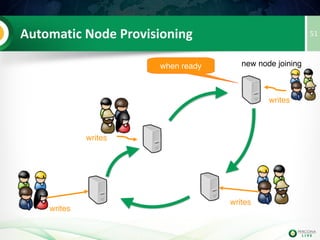

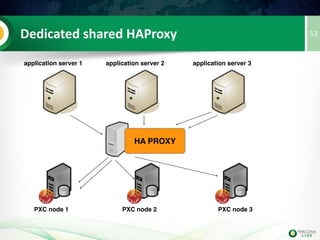

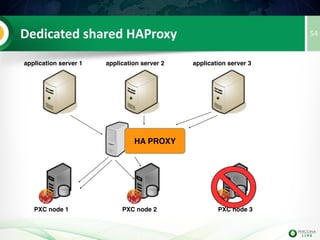

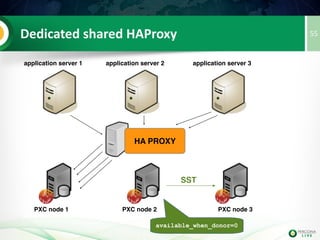

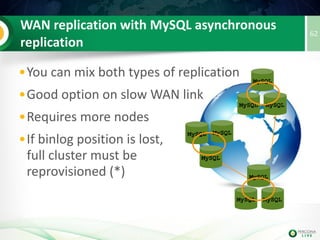

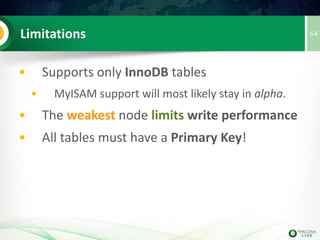

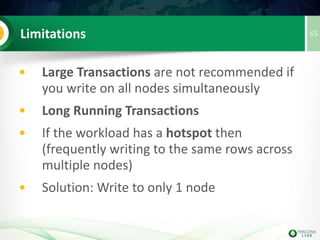

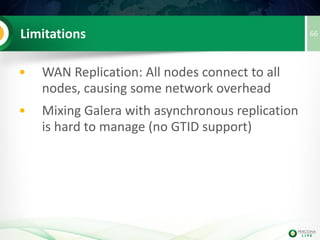

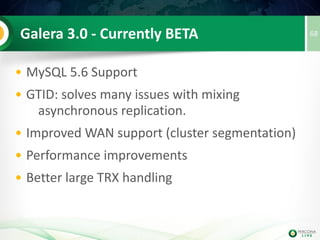

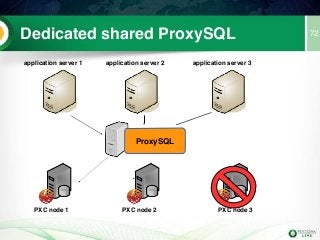

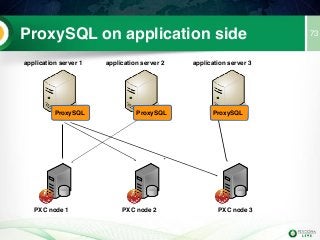

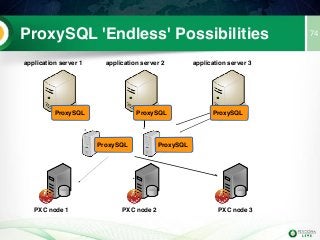

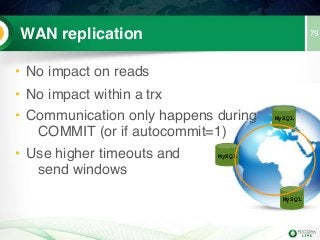

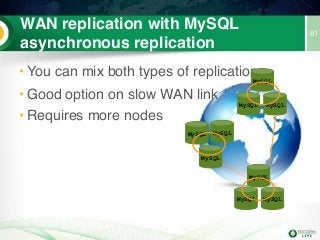

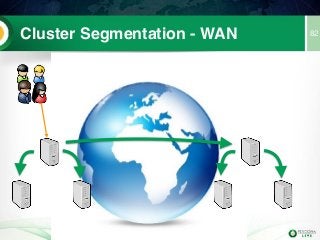

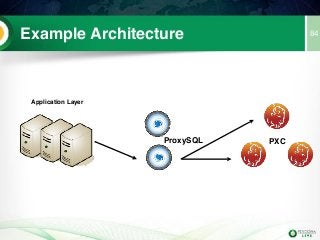

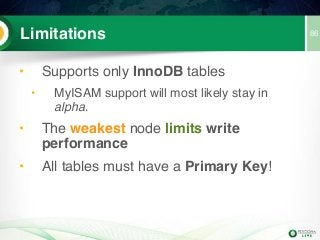

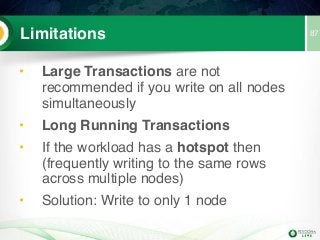

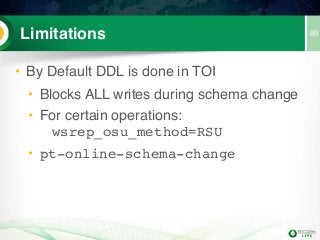

This document gives an overview of Percona XtraDB Cluster and its main features: multi-master replication, automatic node provisioning, and load balancing. It covers use cases such as high availability, WAN replication, and read scaling, and it notes two limitations: replication is supported only for InnoDB tables, and cluster performance is constrained by the weakest node. It also highlights Percona's contributions to the MySQL community and points to resources for further information.
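
The read-scaling use case and the weakest-node limitation can be illustrated with a toy model. This is a minimal sketch, not a benchmark or anything taken from the slides: the function name `cluster_capacity` and the per-node figures are hypothetical, and it assumes that every write must be applied on every node (so the slowest node bounds write throughput) while reads can be spread across nodes (so read capacity adds up).

```python
def cluster_capacity(node_write_tps, node_read_qps):
    """Toy estimate of cluster capacity (hypothetical model, not measured data).

    Assumption: each write is applied on all nodes, so sustained write
    throughput cannot exceed what the slowest node can apply.
    Assumption: reads are served locally and can be balanced across nodes,
    so read capacity is roughly the sum over nodes.
    """
    if not node_write_tps or len(node_write_tps) != len(node_read_qps):
        raise ValueError("need one write and one read figure per node")
    return {
        "write_tps": min(node_write_tps),  # bounded by the weakest node
        "read_qps": sum(node_read_qps),    # scales with the number of nodes
    }


# Hypothetical three-node cluster in which the third node is under-provisioned.
nodes_write = [1200, 1150, 400]
nodes_read = [5000, 5000, 2000]
capacity = cluster_capacity(nodes_write, nodes_read)
print(capacity)
```

In this model, adding a node raises read capacity, but it can only lower or preserve write capacity, which is why the nodes of a cluster are usually given comparable hardware.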