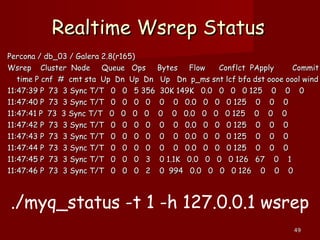

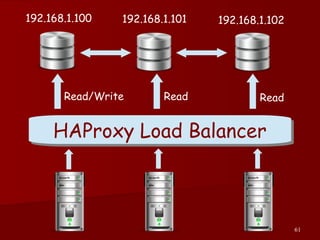

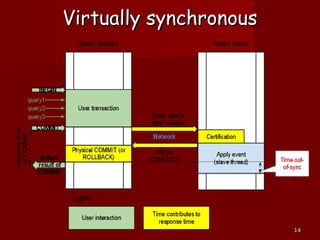

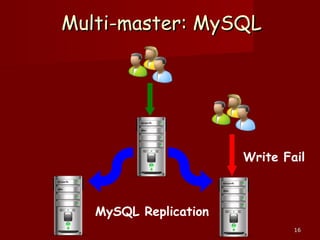

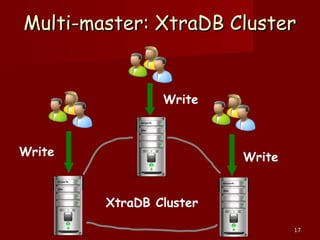

The document introduces Percona XtraDB Cluster and HAProxy and outlines the steps for installing and configuring them. It discusses the benefits of synchronous, multi-master replication, and details server setup and best practices for handling reads and writes in a clustered environment. It also covers considerations for monitoring cluster status and performance.

![Slide 43](https://image.slidesharecdn.com/perconaxtradbcluster-140411221615-phpapp02/85/2014-OSDC-Talk-Introduction-to-Percona-XtraDB-Cluster-and-HAProxy-43-320.jpg)

Configuring the first node

[mysqld]

wsrep_provider=/usr/lib64/libgalera_smm.so

wsrep_cluster_address = "gcomm://"

wsrep_sst_auth=username:password

wsrep_provider_options="gcache.size=4G"

wsrep_cluster_name=Percona

wsrep_sst_method=xtrabackup

wsrep_node_name=db_01

wsrep_slave_threads=4

log_slave_updates

innodb_locks_unsafe_for_binlog=1

innodb_autoinc_lock_mode=2
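The empty `gcomm://` address is what marks the first node's configuration: it lists no peers to join. As a rough illustration, the sketch below reads a fragment like the one on the slide with Python's `configparser` and reports which peers are listed. The helper names `cluster_peers` and `is_bootstrap_config` are hypothetical, and MySQL option files are only approximately INI, so this should be read as a sanity check under that assumption rather than a full parser.

```python
import configparser

# Abbreviated copy of the first-node settings from the slide.
FIRST_NODE_CNF = """
[mysqld]
wsrep_provider=/usr/lib64/libgalera_smm.so
wsrep_cluster_address = "gcomm://"
wsrep_sst_auth=username:password
wsrep_provider_options="gcache.size=4G"
wsrep_cluster_name=Percona
wsrep_node_name=db_01
log_slave_updates
innodb_autoinc_lock_mode=2
"""


def cluster_peers(cnf_text):
    """Return the peer addresses listed in wsrep_cluster_address (hypothetical helper)."""
    # allow_no_value accepts bare flags such as log_slave_updates;
    # interpolation=None keeps any '%' characters literal.
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(cnf_text)
    raw = parser.get("mysqld", "wsrep_cluster_address")
    address = raw.strip().strip('"')
    prefix = "gcomm://"
    if not address.startswith(prefix):
        raise ValueError(f"unexpected cluster address: {address!r}")
    hosts = address[len(prefix):]
    return [host.strip() for host in hosts.split(",") if host.strip()]


def is_bootstrap_config(cnf_text):
    """True when no peers are listed, as in the first-node slide (hypothetical helper)."""
    return cluster_peers(cnf_text) == []


if __name__ == "__main__":
    print("bootstrap config:", is_bootstrap_config(FIRST_NODE_CNF))
```

Running the same check against a node that lists peers would return their addresses instead of an empty list.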

![Slide 44](https://image.slidesharecdn.com/perconaxtradbcluster-140411221615-phpapp02/85/2014-OSDC-Talk-Introduction-to-Percona-XtraDB-Cluster-and-HAProxy-44-320.jpg)

Configuring subsequent nodes

[mysqld]

wsrep_provider=/usr/lib64/libgalera_smm.so

wsrep_cluster_address = "gcomm://xxxx,xxxx"

wsrep_sst_auth=username:password

wsrep_provider_options="gcache.size=4G"

wsrep_cluster_name=Percona

wsrep_sst_method=xtrabackup

wsrep_node_name=db_01

wsrep_slave_threads=4

log_slave_updates

innodb_locks_unsafe_for_binlog=1

innodb_autoinc_lock_mode=2
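The two slides differ only in `wsrep_cluster_address`: empty for the first node, a comma-separated list of existing members for later ones. A minimal sketch of rendering both variants from one template follows. The function name `render_mysqld_section`, the node name `db_02`, and the `192.0.2.x` addresses are assumptions made for illustration. The slide itself elides the addresses as `xxxx` and reuses `db_01`, whereas this sketch gives each node its own name.

```python
# Settings that are identical on every node, copied from the slides.
SHARED_SETTINGS = [
    "wsrep_provider=/usr/lib64/libgalera_smm.so",
    "wsrep_sst_auth=username:password",
    'wsrep_provider_options="gcache.size=4G"',
    "wsrep_cluster_name=Percona",
    "wsrep_sst_method=xtrabackup",
    "wsrep_slave_threads=4",
    "log_slave_updates",
    "innodb_locks_unsafe_for_binlog=1",
    "innodb_autoinc_lock_mode=2",
]


def render_mysqld_section(node_name, peers):
    """Render a [mysqld] section for one node (hypothetical helper).

    An empty peers list yields "gcomm://", matching the first-node slide;
    otherwise the peers are joined with commas after the scheme.
    """
    address = "gcomm://" + ",".join(peers)
    lines = [
        "[mysqld]",
        f'wsrep_cluster_address="{address}"',
        f"wsrep_node_name={node_name}",
    ]
    lines.extend(SHARED_SETTINGS)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # First node: no peers to join.
    print(render_mysqld_section("db_01", []))
    # Subsequent node: placeholder addresses stand in for the elided "xxxx" values.
    print(render_mysqld_section("db_02", ["192.0.2.11", "192.0.2.12"]))
```

Keeping the shared settings in one list makes it harder for nodes to drift apart on values such as `wsrep_cluster_name`, which has to match across the cluster.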