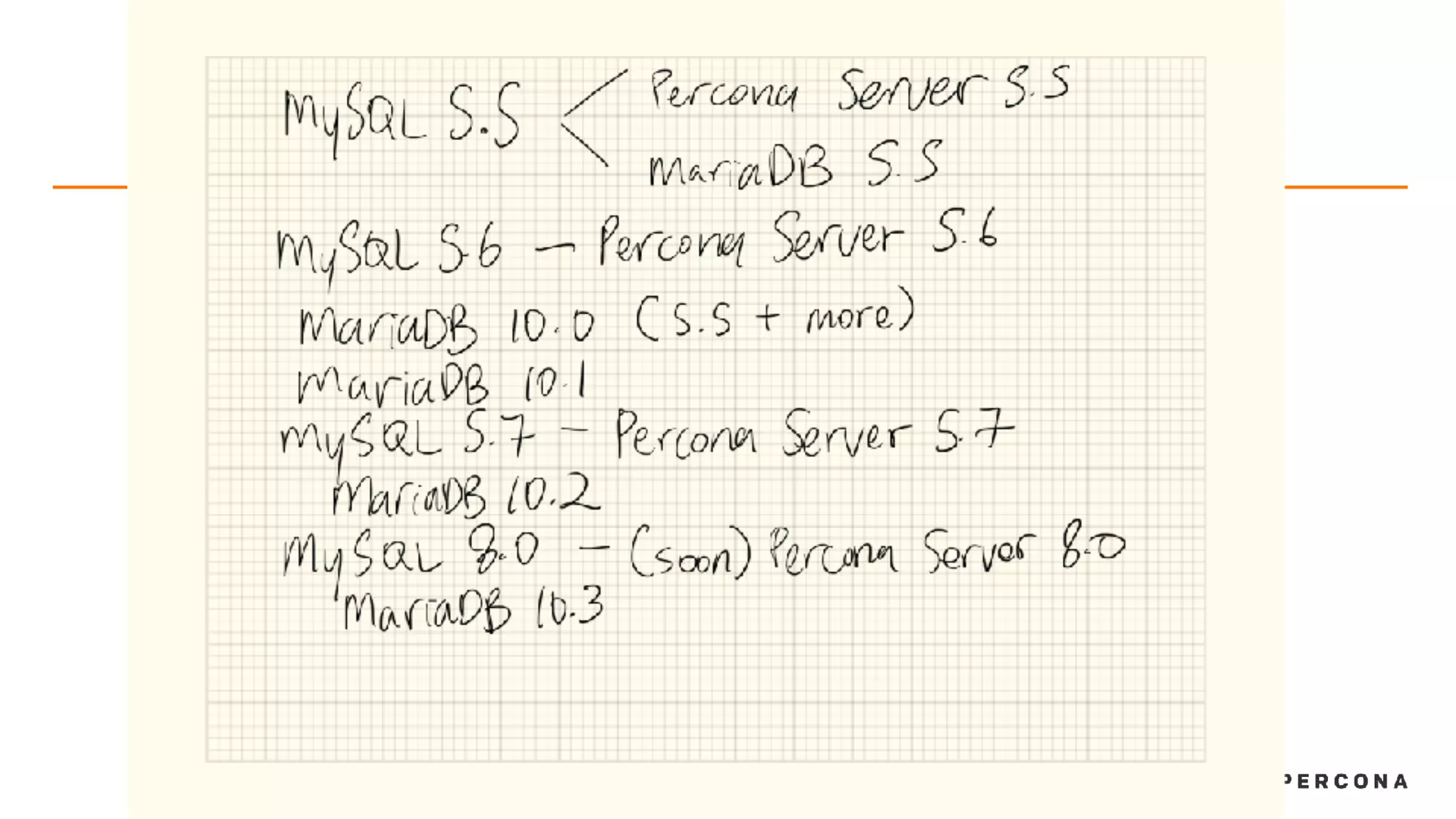

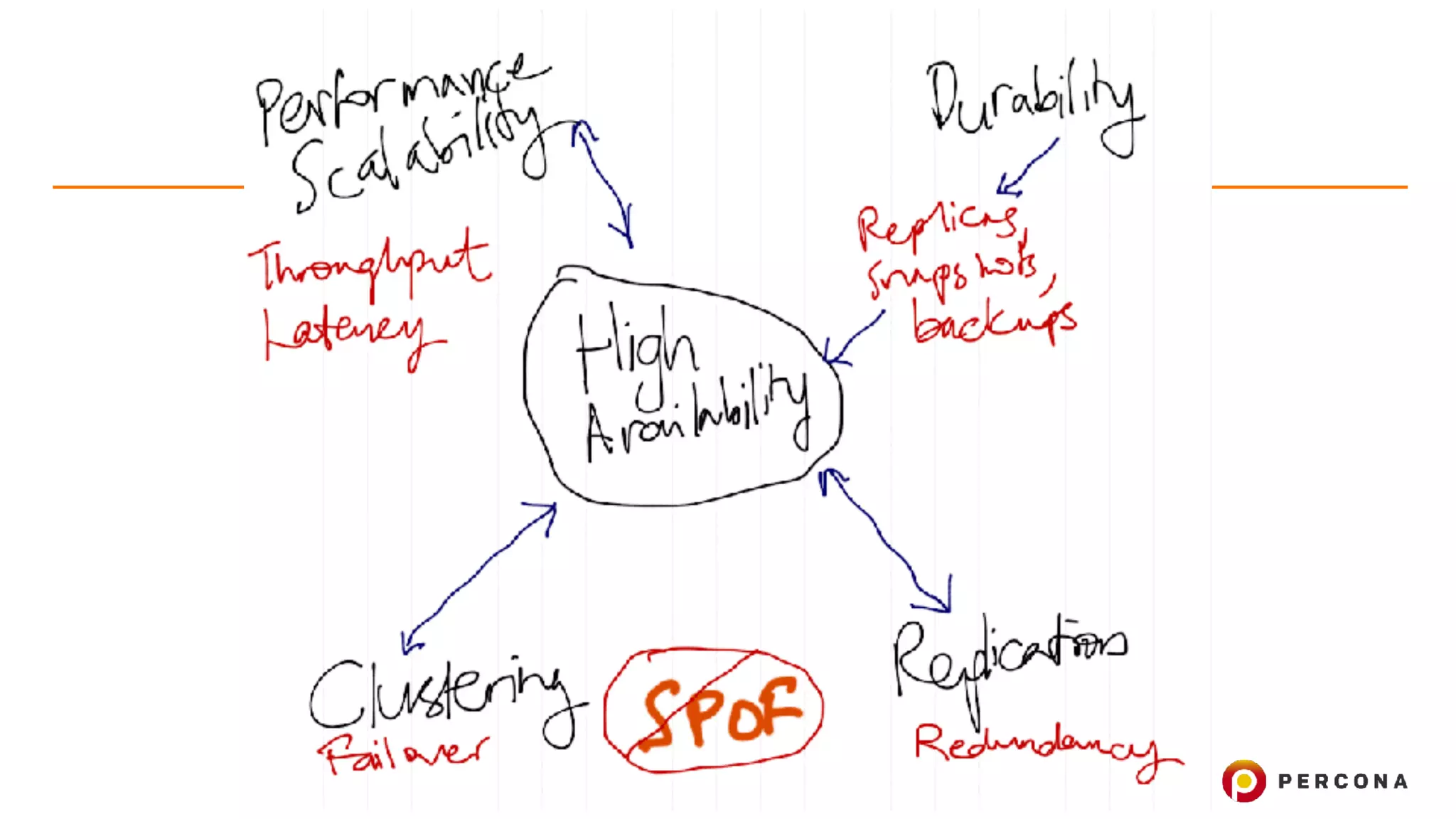

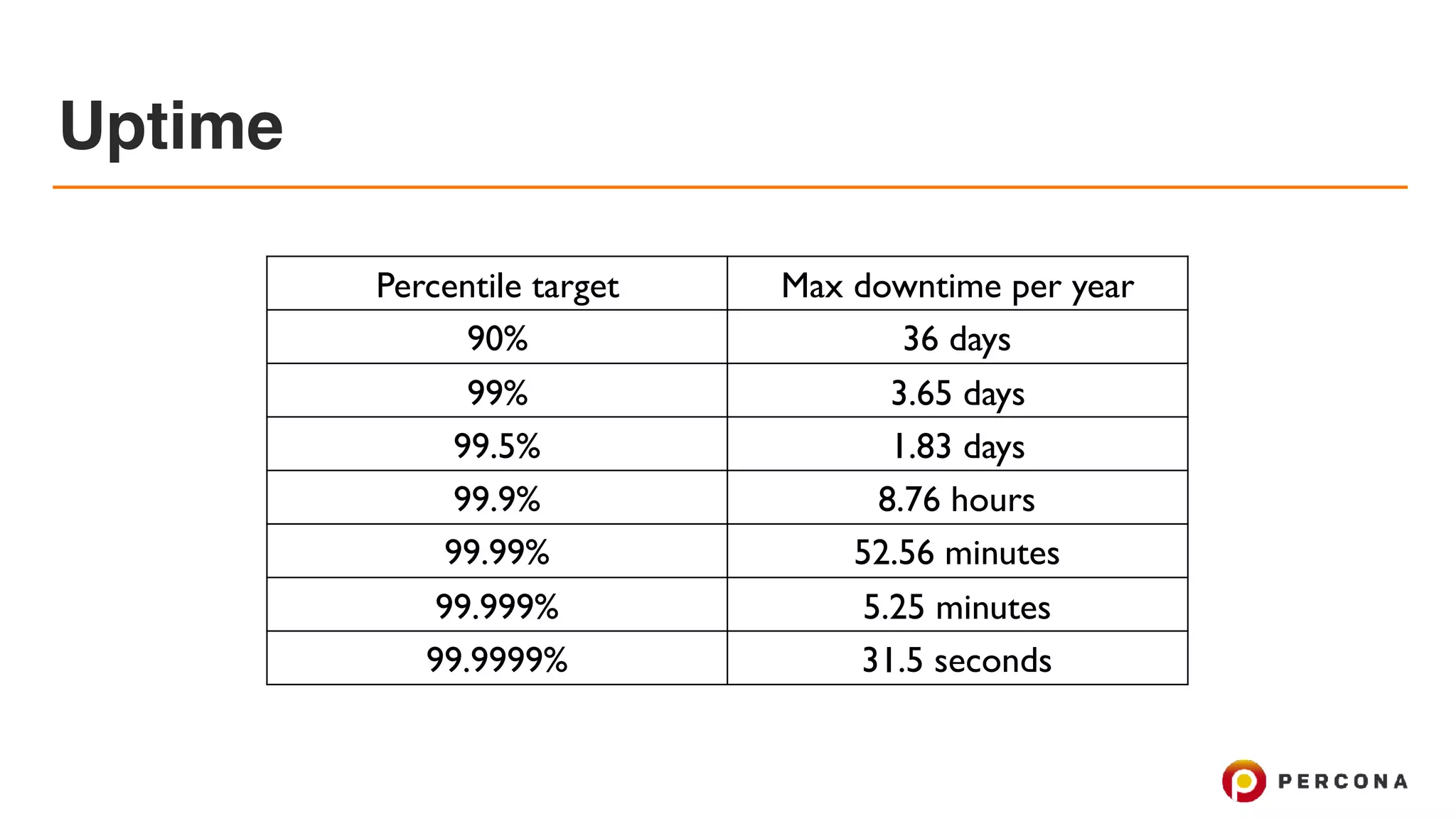

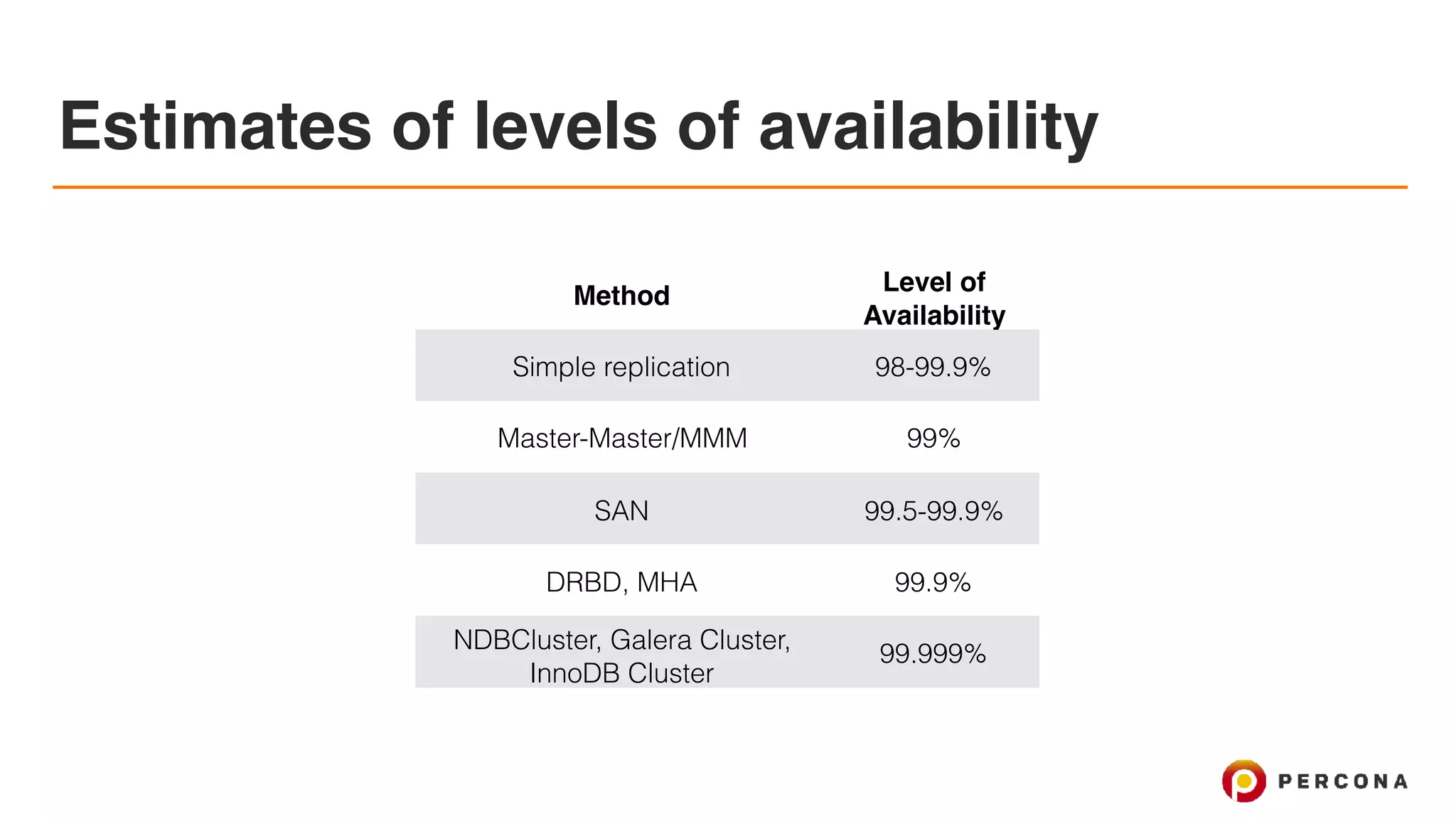

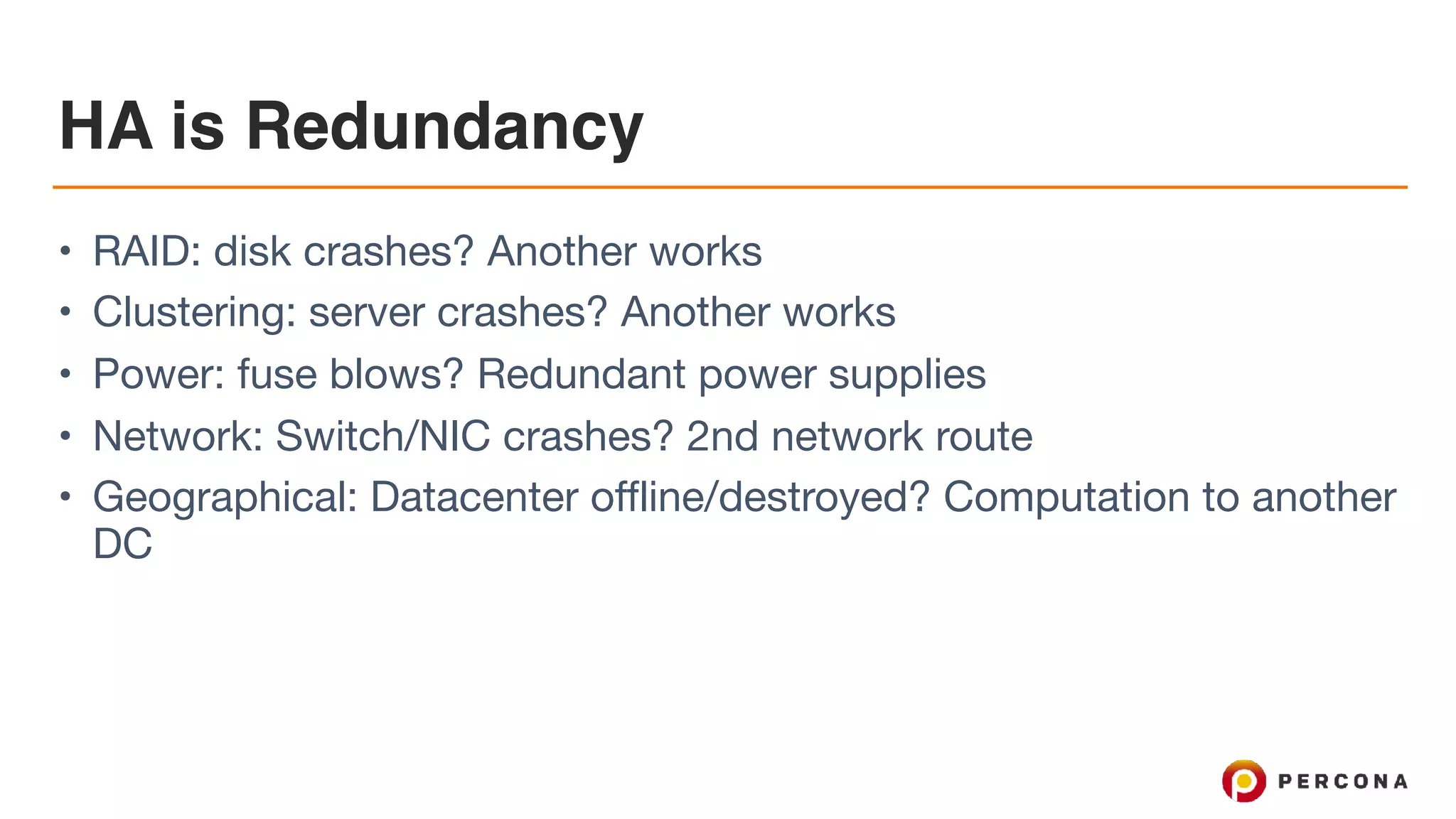

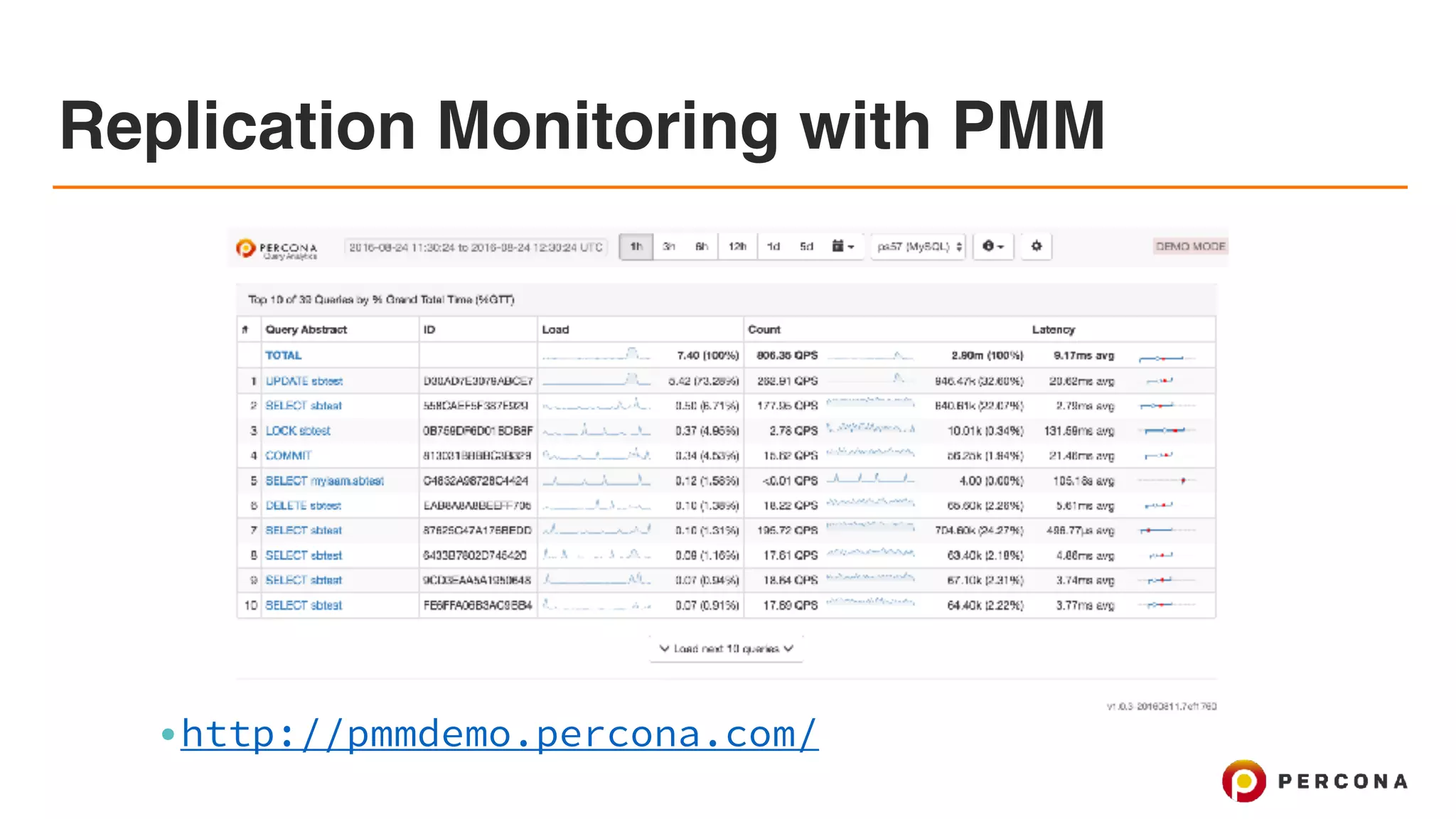

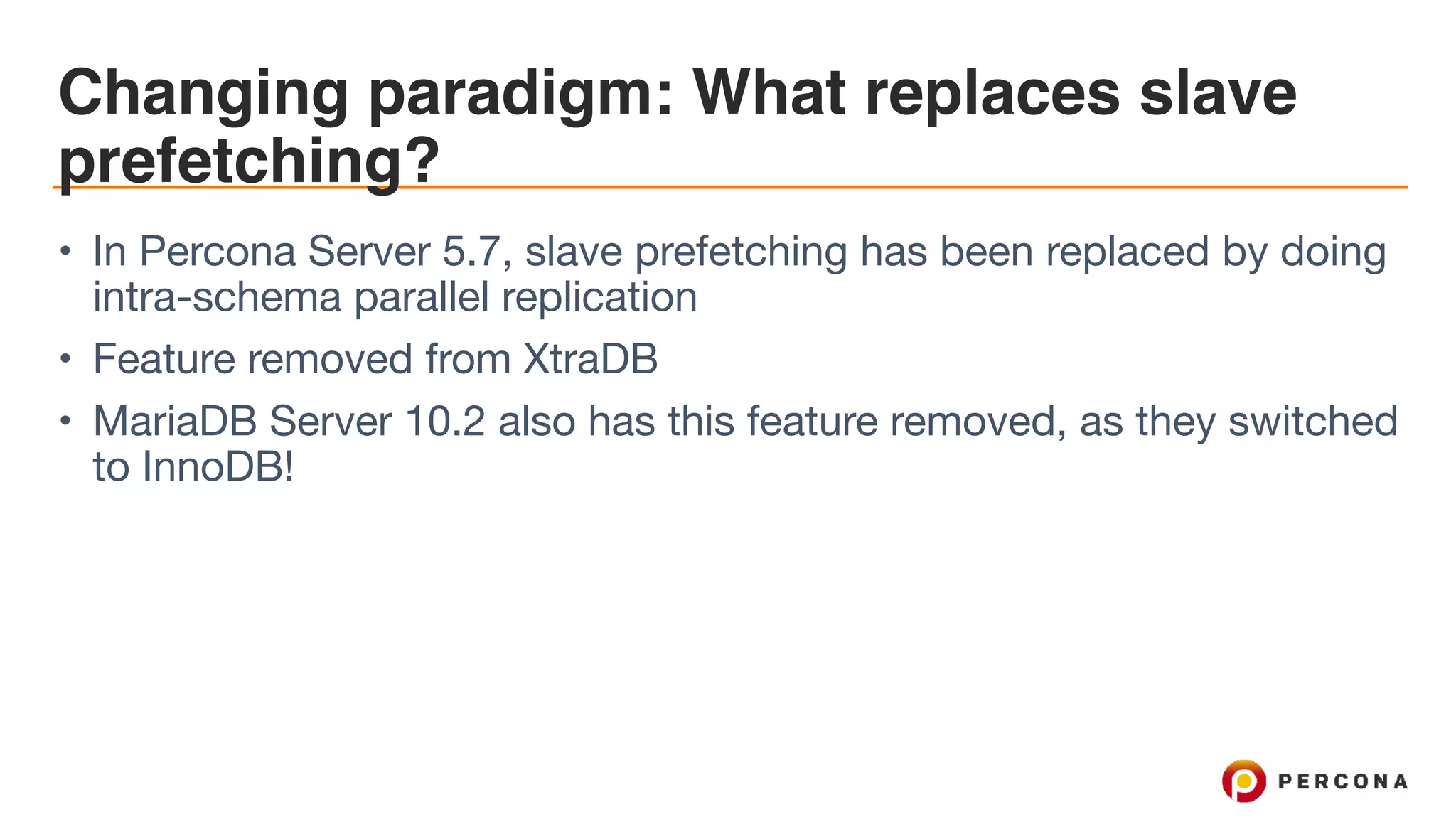

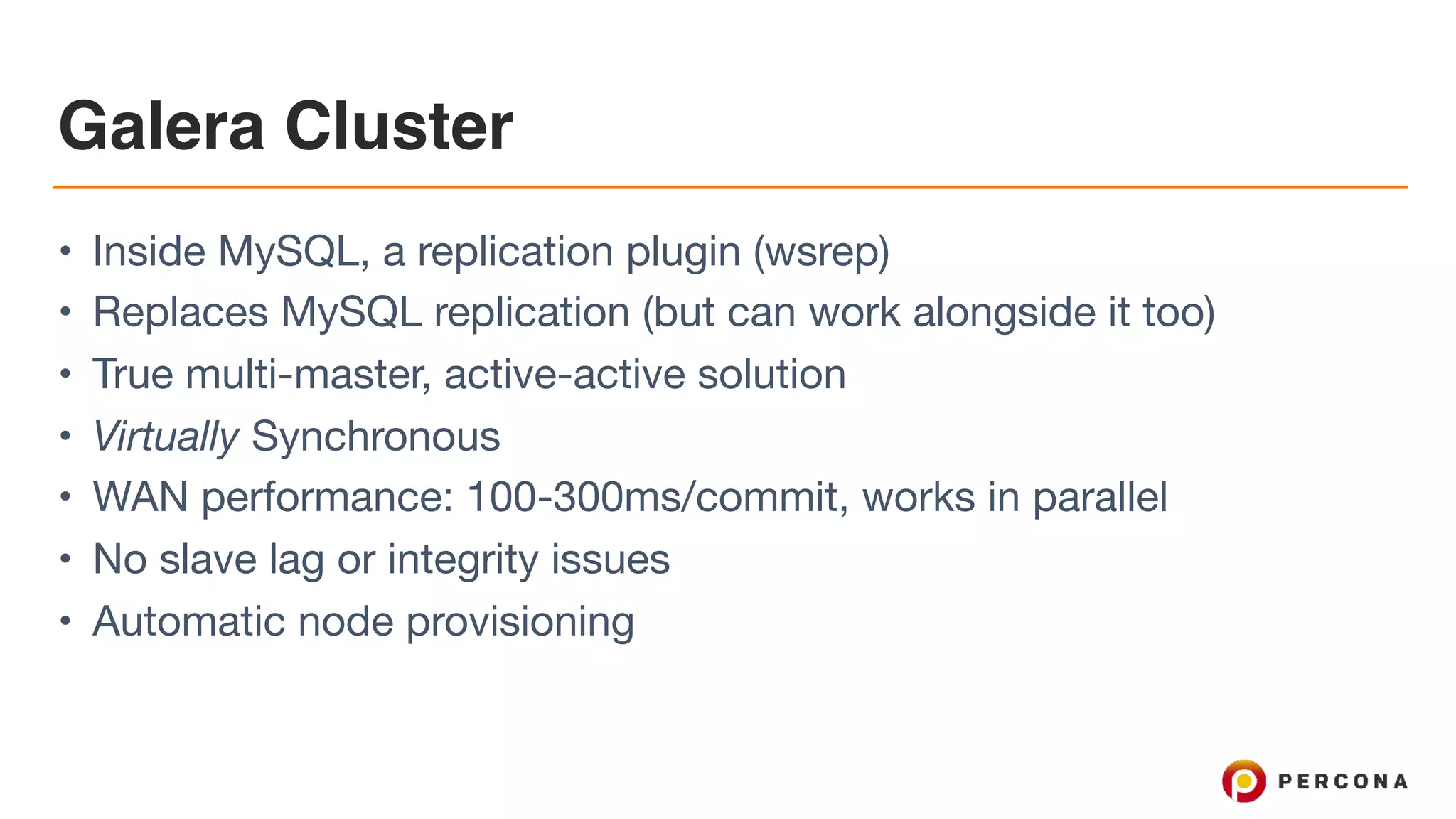

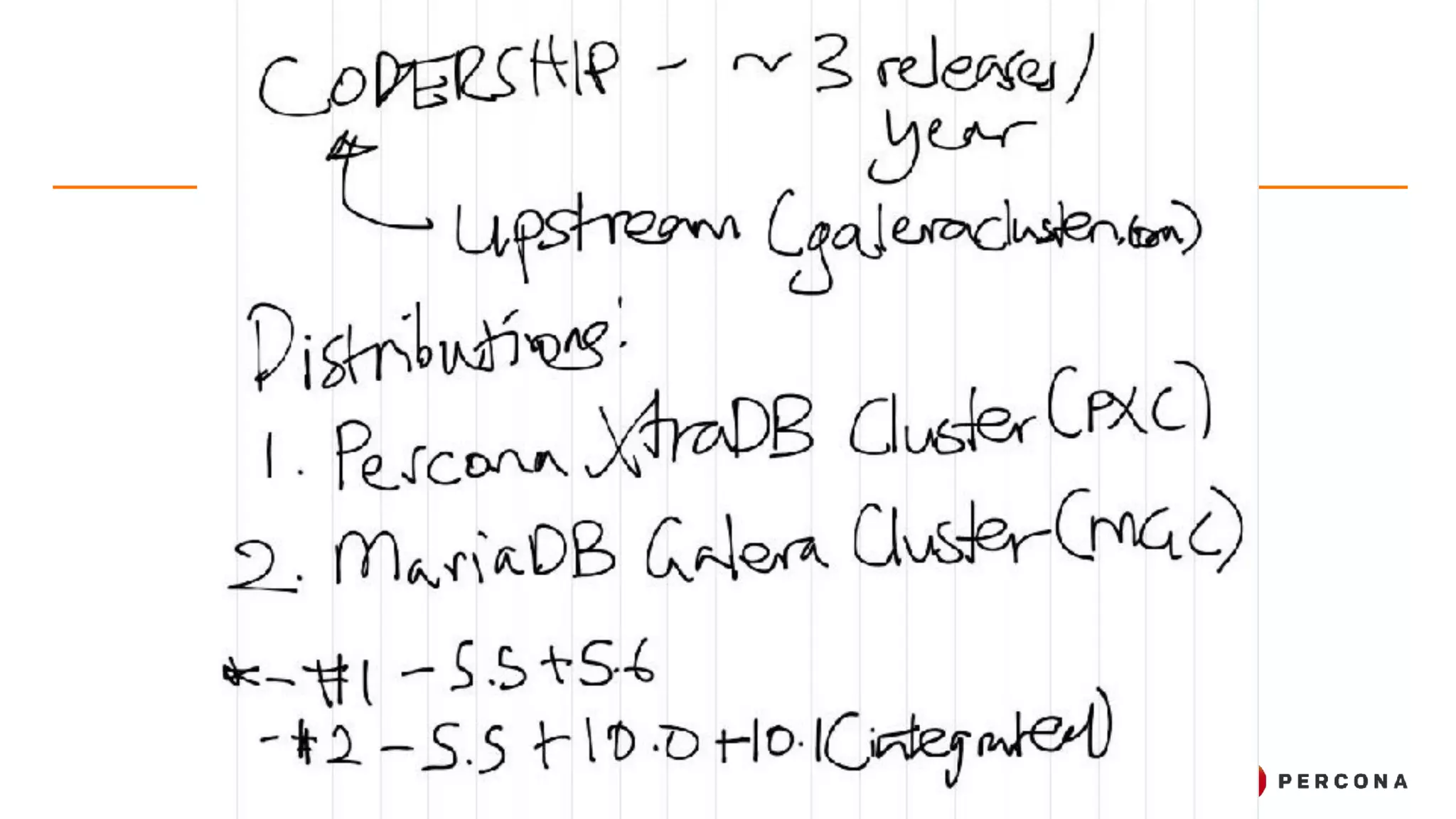

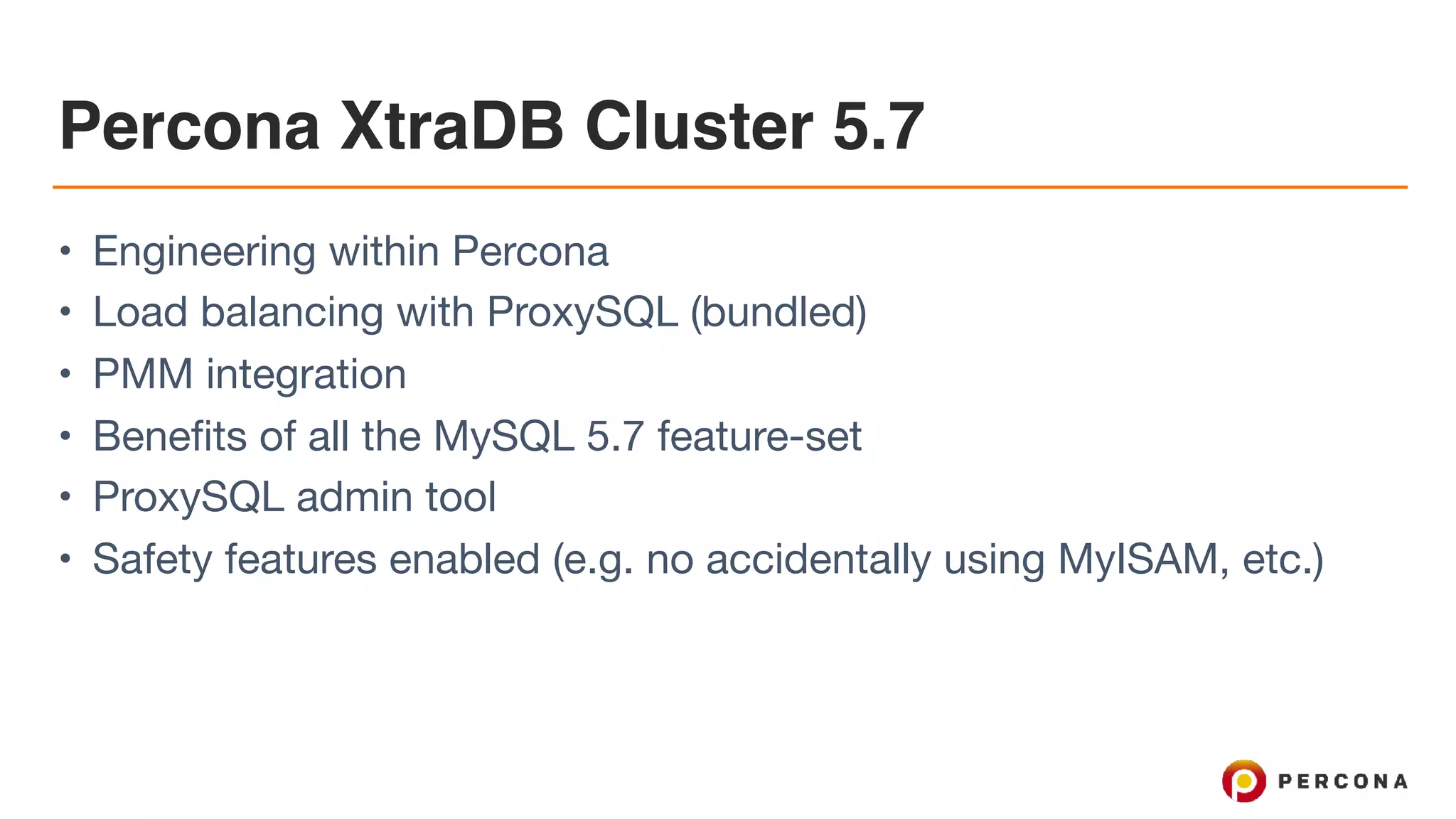

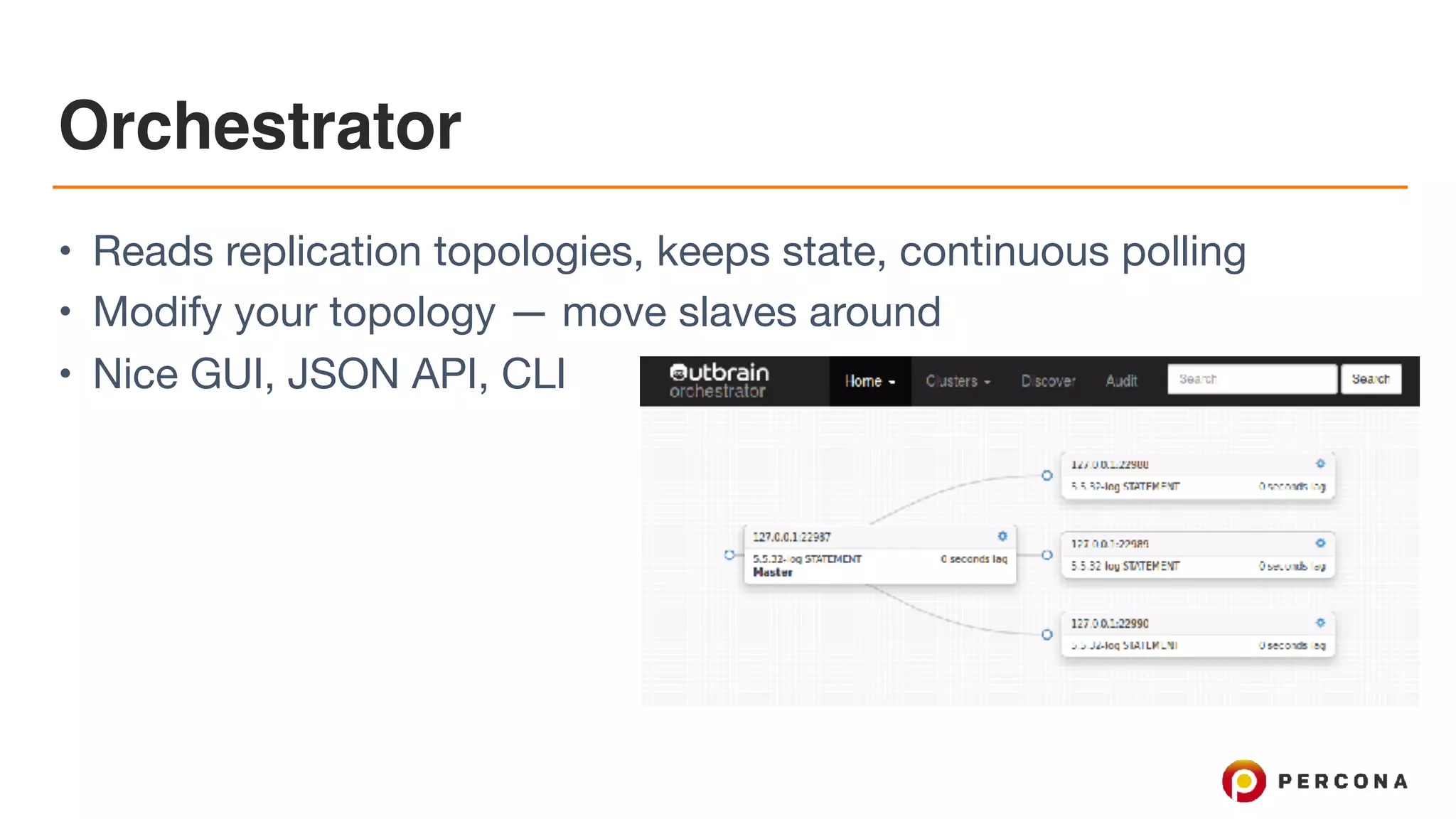

The document discusses advances and best practices from the past decade for achieving high availability (HA) and scalability in MySQL databases, emphasizing redundancy techniques such as replication and clustering. It highlights tools and frameworks, including Percona's offerings, that improve MySQL performance and reliability while addressing common challenges such as node failure and data integrity. It concludes that effective monitoring and automation are crucial for managing multi-node systems and maintaining high availability in modern cloud environments.