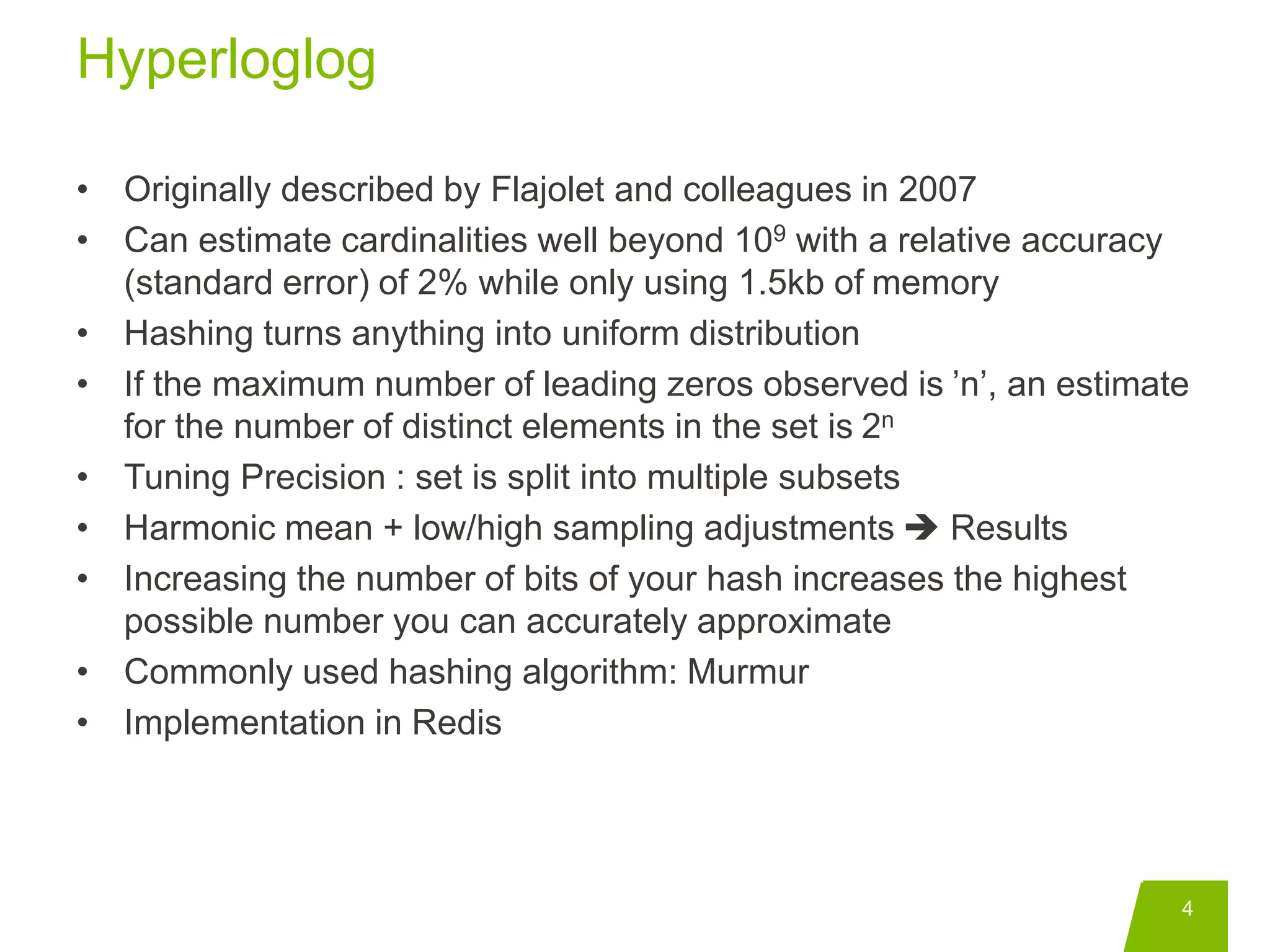

The document discusses probabilistic data structures including HyperLogLog, Bloom filters, and count-min sketches, which offer efficient approximate answers when analyzing large data sets. HyperLogLog estimates cardinalities with high accuracy using minimal memory, Bloom filters test set membership with a tunable false-positive rate, and count-min sketches estimate the frequency of items in a collection. It also provides references for further reading on these topics.

![Bloom filters

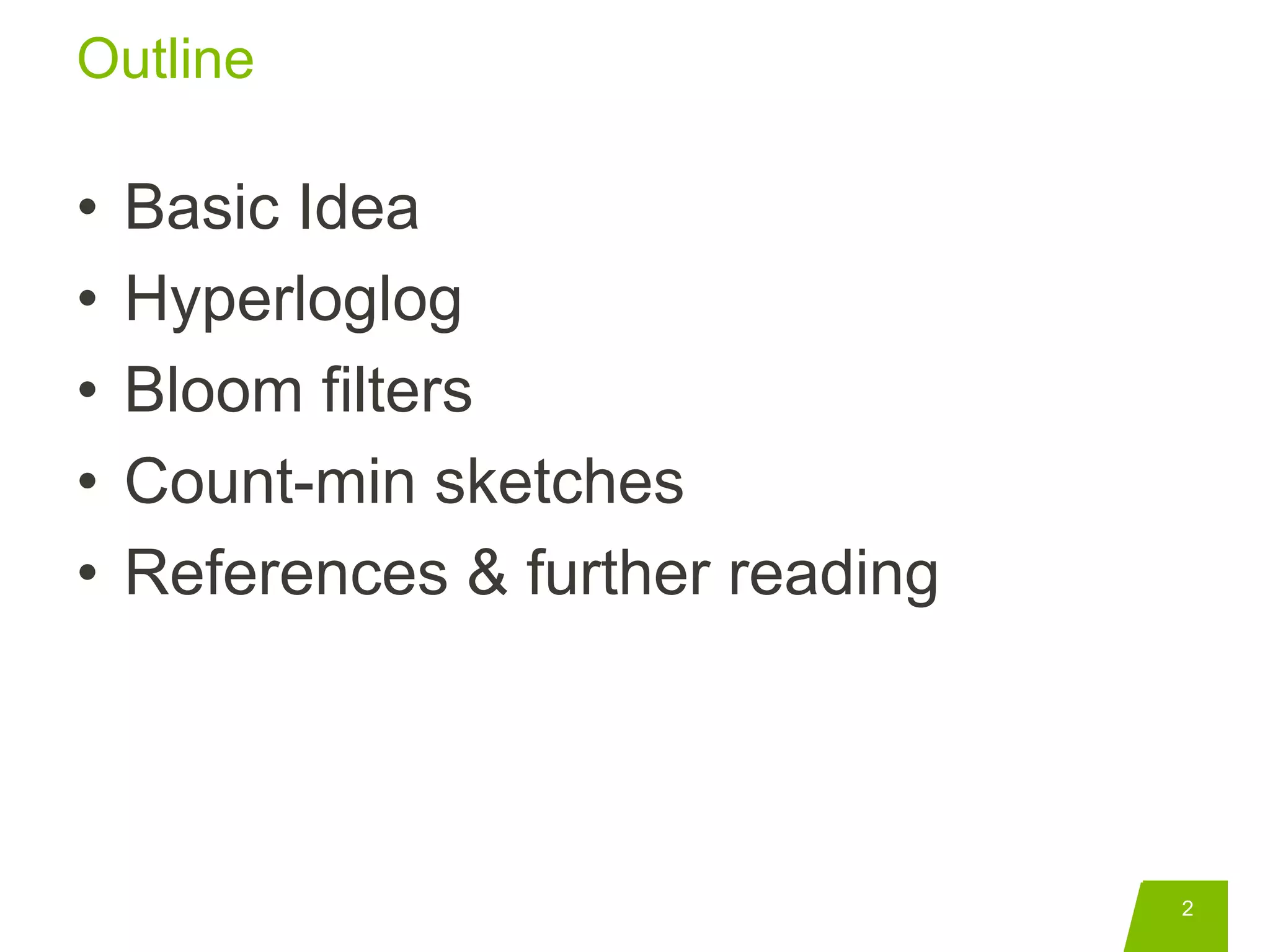

• Conceived by Burton Howard Bloom in 1970

• Used to test whether an element is a member of a set

• Query returns either "possibly in set" or "definitely not in set"

• An empty Bloom filter is a bit array of ‘m’ bits, all set to 0

• Each of ‘k’ hash functions maps a set element to one of the m array positions with a uniform random distribution

• The bits at all these positions are set to 1

• To query, hash the element with the same hash functions and check whether all positions are set; if any is not, the element is definitely not in the set, otherwise it may be present

• For an optimal value of k with a 1% error rate, each element requires only about 9.6 bits, regardless of the size of the elements

1. Choose a ballpark value for ‘n’, the number of elements in the set

2. Choose a value for m

3. Calculate the optimal value of k [ $k = (m/n) \ln 2$ ]

4. Calculate the error rate ‘p’ [ $p = (1 - e^{-kn/m})^k$ ]

If p is unacceptable, return to step 2 and change m;

otherwise we're done.](https://image.slidesharecdn.com/probabilisticdatastructures-160808070851/75/Probabilistic-data-structures-5-2048.jpg)
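The sizing steps and the add/query behaviour described on this slide can be sketched in a few lines of Python. This is a minimal illustration, not a production filter: the names `optimal_k`, `false_positive_rate`, `BloomFilter`, and `might_contain` are hypothetical, and deriving the k positions from one SHA-256 digest by double hashing is an assumption standing in for k independent uniform hash functions.

```python
import hashlib
import math


def optimal_k(m: int, n: int) -> int:
    """Step 3: k = (m/n) ln 2, rounded to the nearest integer (at least 1)."""
    return max(1, round((m / n) * math.log(2)))


def false_positive_rate(m: int, n: int, k: int) -> float:
    """Step 4: approximate error rate p = (1 - e^(-kn/m))^k."""
    return (1.0 - math.exp(-k * n / m)) ** k


class BloomFilter:
    def __init__(self, m: int, k: int) -> None:
        self.m = m
        self.k = k
        # One byte per bit keeps the sketch readable; a real filter packs bits.
        self.bits = bytearray(m)

    def _positions(self, item: str) -> list[int]:
        # Assumption: double hashing over a single SHA-256 digest
        # simulates k hash functions.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd stride
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item: str) -> None:
        # Set the bit at every position the hash functions select.
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        # False means "definitely not in set"; True means "possibly in set".
        return all(self.bits[pos] for pos in self._positions(item))


# Usage: n = 1000 expected elements at about 9.6 bits per element.
n, m = 1000, 9600
k = optimal_k(m, n)
p = false_positive_rate(m, n, k)  # close to 0.01 for these values

bf = BloomFilter(m, k)
for i in range(n):
    bf.add(f"item-{i}")
```

Every added element is reported as possibly present, since adding only ever sets bits; elements that were never added are usually rejected, with false positives occurring at roughly the rate p.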

![Count-min sketches

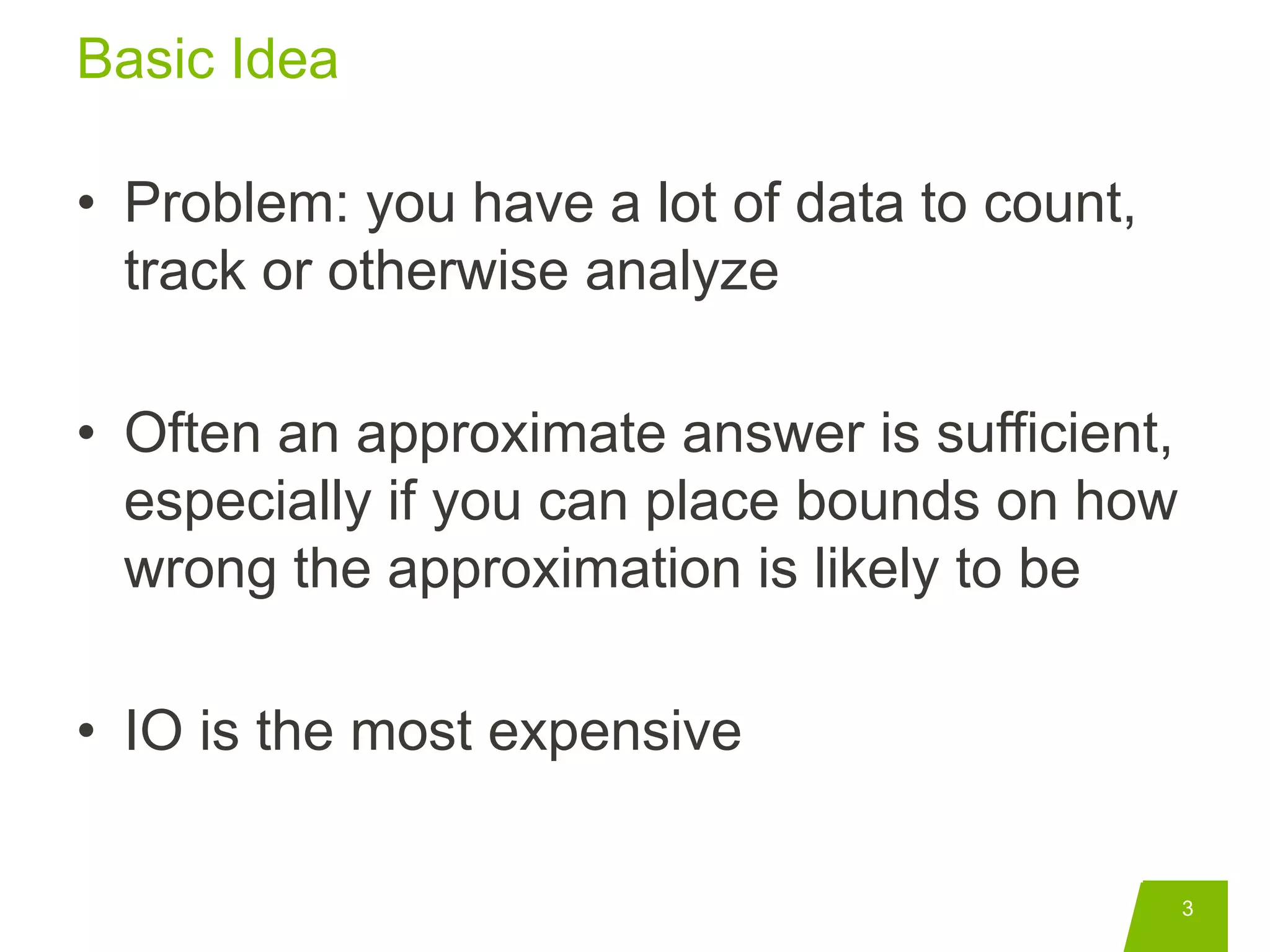

• Invented by Graham Cormode and S. Muthu Muthukrishnan in 2003

• Estimates how many times each item occurs in a collection

• The sketch is a compact summary of a large amount of data, stored as a 2D array of w columns and d rows

• Each box is a counter

• Each row is indexed by a corresponding hash function

• Estimated frequency for ‘Something’ is min(a,b,c,d)

• ‘w’ limits the magnitude of the error [ error <= 2 * n/w, where n is the total count of all items ]

• ‘d’ controls the probability that the estimate stays within that error [ probability the error bound holds >= 1 - (1/2) ** d ]

• Works best on Skewed data](https://image.slidesharecdn.com/probabilisticdatastructures-160808070851/75/Probabilistic-data-structures-6-2048.jpg)
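The structure on this slide, d rows of w counters with one hash function per row and a minimum taken at query time, might look like the following in Python. It is a rough sketch under stated assumptions: the class and method names are hypothetical, and salting SHA-256 with the row index is an assumption used here to stand in for d independent hash functions.

```python
import hashlib


class CountMinSketch:
    def __init__(self, w: int, d: int) -> None:
        self.w = w  # columns: more columns means smaller overcount
        self.d = d  # rows: more rows means the bound fails less often
        self.table = [[0] * w for _ in range(d)]
        self.n = 0  # total count of everything added so far

    def _column(self, row: int, item: str) -> int:
        # Assumption: prefixing the row index gives each row its own hash.
        digest = hashlib.sha256(f"{row}:{item}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % self.w

    def add(self, item: str, count: int = 1) -> None:
        # Increment one counter per row.
        self.n += count
        for row in range(self.d):
            self.table[row][self._column(row, item)] += count

    def estimate(self, item: str) -> int:
        # Collisions only ever inflate a counter, so the smallest of the
        # d counters is the tightest available estimate.
        return min(
            self.table[row][self._column(row, item)] for row in range(self.d)
        )


# Usage: a skewed stream where one item dominates.
cms = CountMinSketch(w=200, d=4)
for _ in range(500):
    cms.add("popular")
for i in range(100):
    cms.add(f"rare-{i}")
```

Because counters are only ever incremented, `estimate` never returns less than the true count; the overcount comes from other items that hash to the same columns, which is why heavy hitters in skewed data are estimated most reliably.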