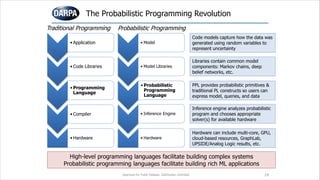

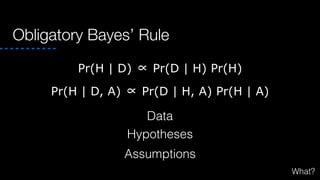

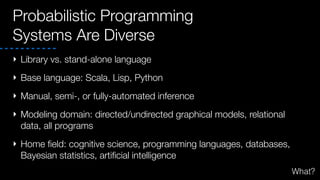

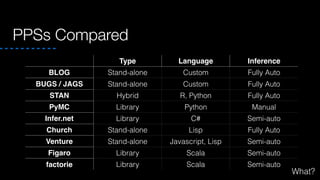

This presentation covers probabilistic programming: why it matters given the limitations of traditional machine learning, and how it lets users model uncertainty directly in programs. It explains what probabilistic programming is, walks through example applications, and surveys the systems and libraries available for implementation. It emphasizes the potential of probabilistic programming to speed up model development, empower domain experts, and make machine learning applications more sophisticated and accurate.

![Business Data Is Heterogeneous and Structured](https://image.slidesharecdn.com/strata2014probabilisticprogramming-140627155634-phpapp02/85/Probabilistic-Programming-Why-What-How-When-8-320.jpg)

Profile:

    id: “abcdef”
    gender: “Male”
    dob: 1978-12-09
    twitter_id: 9458201

Page Views:

    2014-01-21 18:41:04, “https://devcenter.heroku.com/articles/quickstart”, …
    2014-01-20 12:35:56, “https://devcenter.heroku.com/categories/java”, …
    2014-01-20 09:12:52, “https://devcenter.heroku.com/articles/ssl-endpoint”, …

Transactions:

| Order Date | Order ID | Title | Category | ASIN/ISBN | Release Date | Condition | Seller | Per Unit Price |
|---|---|---|---|---|---|---|---|---|
| 1/5/13 | 002-1139353-0278652 | Under Armour Men's Resistor No Show Socks, pack of 6 Socks | Apparel | B003RYQJJW | | new | The Sock Company, Inc. | $21.99 |
| 1/5/13 | 002-1139353-0278652 | Under Armour Men's Resistor No Show Socks, pack of 6 Socks | Apparel | B004UONNXI | | new | The Sock Company, Inc. | $21.99 |
| 1/8/13 | 002-2593752-8837806 | CivilWarLand in Bad Decline | Paperback | 1573225797 | 1/31/97 | new | Amazon.com LLC | $8.4 |
| 1/8/13 | 109-0985451-2187421 | Nothing to Envy: Ordinary Lives in North Korea | Paperback | 385523912 | 9/20/10 | new | Amazon.com LLC | $10.88 |
| 1/12/13 | 109-8581642-2322617 | Excession | Mass Market Paperback | 553575376 | 2/1/98 | new | Amazon.com LLC | $7.99 |

Social Posts:

    [
      {
        text: “key to compelling VR is…”,
        retweet_count: 3,
        favorites_count: 5,
        urls: [ ],
        hashtags: [ ],
        in_reply_to: 39823792801012
        …
      },
      {
        text: “@John4man really liked your piece”,
        retweets: 0,
        favorites: 0,
        …
      }
    ]

Followers:

    [ 657693, 7588892, 9019482, …]

Relationship:

    blocked: False
    want_retweets: True
    marked_spam: False
    since: 2013-09-13

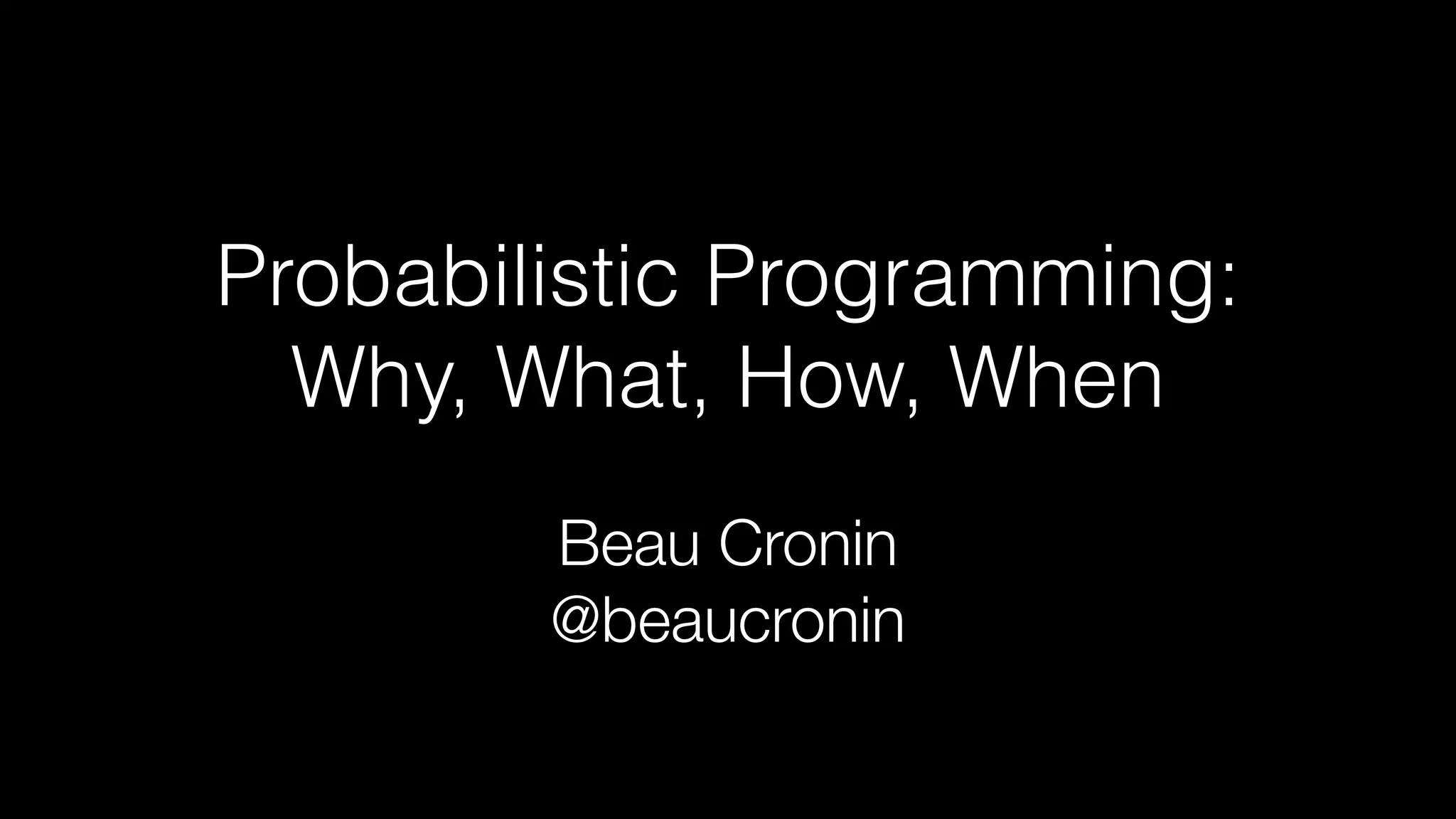

![First example: Deciding if a coin is fair based on flips](https://image.slidesharecdn.com/strata2014probabilisticprogramming-140627155634-phpapp02/85/Probabilistic-Programming-Why-What-How-When-15-320.jpg)

First example: Deciding if a coin is fair based on flips

    fair-prior = .999

    fair-coin? = flip(fair-prior)

    if fair-coin?:
        weight = 0.5
    else:
        weight = 0.9

    observe(repeat(flip(weight), 10),
            [H, H, H, H, H, H, H, H, H, H])

    query(fair-coin?)

Slide annotations: Assumptions, Unknowns, Observables.
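For a model this small, the query can be answered exactly by applying Bayes' rule over the two possible values of `fair-coin?`. The Python sketch below is not how any particular probabilistic programming system performs inference; the function name `posterior_fair` and its parameters are illustrative assumptions, and only the numbers (a 0.999 prior, weights of 0.5 and 0.9, ten heads) come from the slide.

```python
def posterior_fair(fair_prior, fair_weight, biased_weight, flips):
    """Return P(coin is fair | flips) by enumerating both hypotheses.

    `flips` is a string of 'H' and 'T' characters (hypothetical encoding).
    """
    heads = flips.count("H")
    tails = flips.count("T")

    # Likelihood of the exact observed sequence under each hypothesis.
    like_fair = fair_weight ** heads * (1 - fair_weight) ** tails
    like_biased = biased_weight ** heads * (1 - biased_weight) ** tails

    # Bayes' rule: weight each likelihood by its prior, then normalize.
    joint_fair = fair_prior * like_fair
    joint_biased = (1 - fair_prior) * like_biased
    return joint_fair / (joint_fair + joint_biased)


posterior = posterior_fair(0.999, 0.5, 0.9, "H" * 10)
print(f"P(fair | 10 heads) = {posterior:.3f}")
```

Under these assumptions, ten heads in a row lower the belief that the coin is fair from 0.999 to roughly 0.74.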

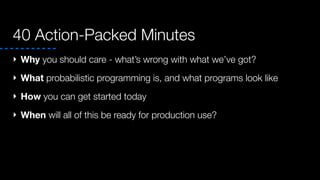

![Infer.NET example: Is a new treatment effective?](https://image.slidesharecdn.com/strata2014probabilisticprogramming-140627155634-phpapp02/85/Probabilistic-Programming-Why-What-How-When-19-320.jpg)

Infer.NET example: Is a new treatment effective?

    VariableArray<bool> controlGroup =
        Variable.Observed(new bool[] { false, false, true, false, false });
    VariableArray<bool> treatedGroup =
        Variable.Observed(new bool[] { true, false, true, true, true });
    Range i = controlGroup.Range;
    Range j = treatedGroup.Range;

    Variable<bool> isEffective = Variable.Bernoulli(0.5);

    Variable<double> probIfTreated, probIfControl;
    using (Variable.If(isEffective))
    {
        // Model if treatment is effective
        probIfControl = Variable.Beta(1, 1);
        controlGroup[i] = Variable.Bernoulli(probIfControl).ForEach(i);
        probIfTreated = Variable.Beta(1, 1);
        treatedGroup[j] = Variable.Bernoulli(probIfTreated).ForEach(j);
    }

    using (Variable.IfNot(isEffective))
    {
        // Model if treatment is not effective
        Variable<double> probAll = Variable.Beta(1, 1);
        controlGroup[i] = Variable.Bernoulli(probAll).ForEach(i);
        treatedGroup[j] = Variable.Bernoulli(probAll).ForEach(j);
    }

    InferenceEngine ie = new InferenceEngine();
    Console.WriteLine("Probability treatment has an effect = " + ie.Infer(isEffective));

http://research.microsoft.com/en-us/um/cambridge/projects/infernet/docs/Clinical%20trial%20tutorial.aspx

Slide annotations: Observations, Unknown, Assumptions & Unknowns, Query.
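The same comparison can be worked out by hand, because a Beta(1, 1) prior over a Bernoulli probability has a closed-form marginal likelihood. The Python sketch below is a plain exact calculation under that assumption, not a description of the inference algorithm Infer.NET runs; the helper names `beta_bernoulli_evidence` and `prob_effective` are hypothetical, and only the observed data and the 0.5 prior come from the slide.

```python
from fractions import Fraction
from math import factorial


def beta_bernoulli_evidence(outcomes):
    """Marginal likelihood of one specific boolean sequence under a Beta(1, 1) prior.

    Integrating p^k * (1 - p)^(n - k) over [0, 1] gives k! (n - k)! / (n + 1)!.
    """
    n = len(outcomes)
    k = sum(outcomes)  # True counts as 1
    return Fraction(factorial(k) * factorial(n - k), factorial(n + 1))


def prob_effective(control, treated, prior=Fraction(1, 2)):
    """Posterior probability that the two groups have different success rates."""
    # "Effective": each group has its own independent success probability.
    separate = beta_bernoulli_evidence(control) * beta_bernoulli_evidence(treated)
    # "Not effective": both groups share a single success probability.
    shared = beta_bernoulli_evidence(control + treated)
    return prior * separate / (prior * separate + (1 - prior) * shared)


control_group = [False, False, True, False, False]
treated_group = [True, False, True, True, True]
posterior = prob_effective(control_group, treated_group)
print(f"Probability treatment has an effect = {float(posterior):.4f}")
```

With the slide's data this evaluates to 77/102, or about 0.755, so the ten observations favor the "effective" model but do not settle the question.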