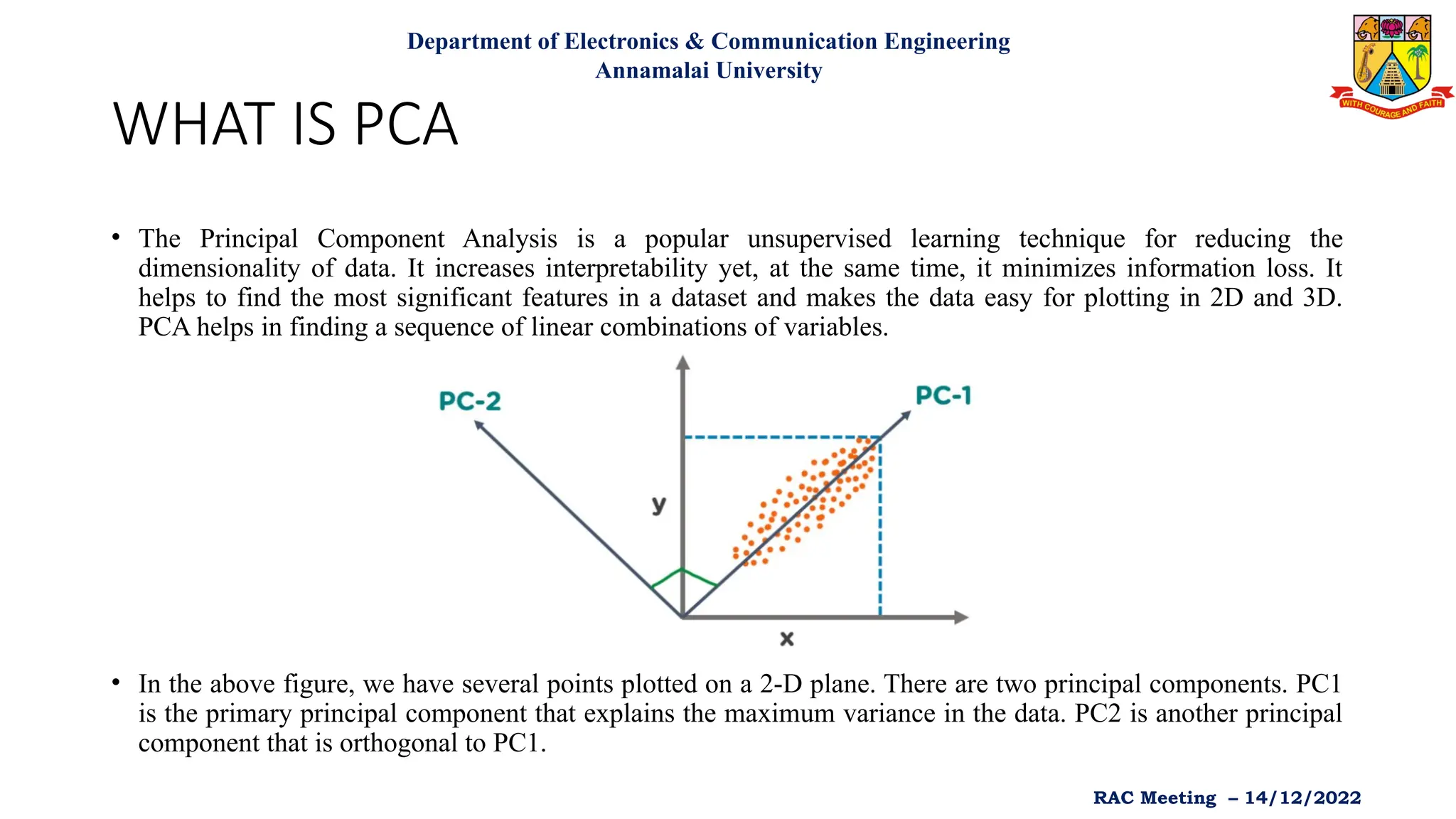

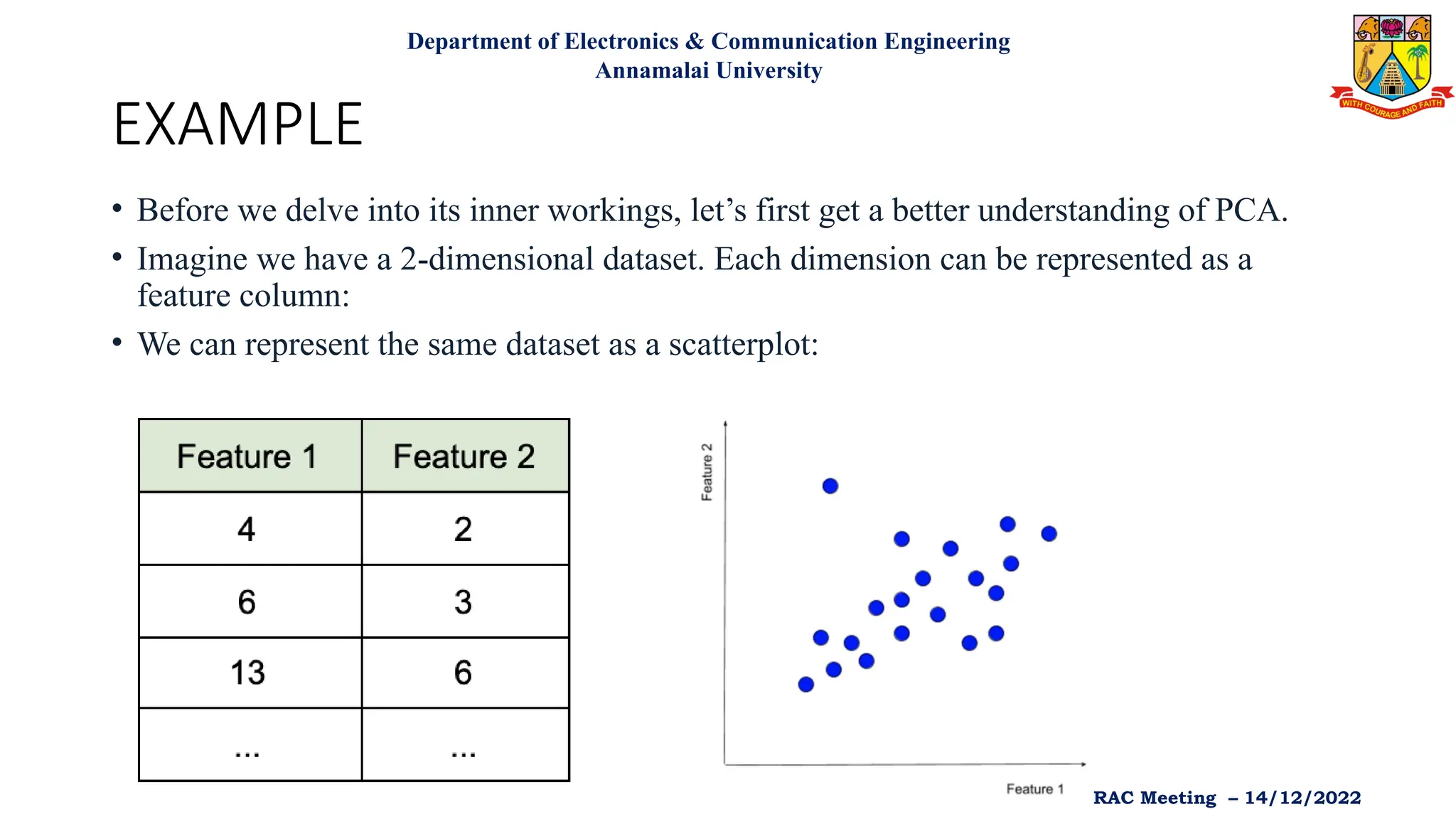

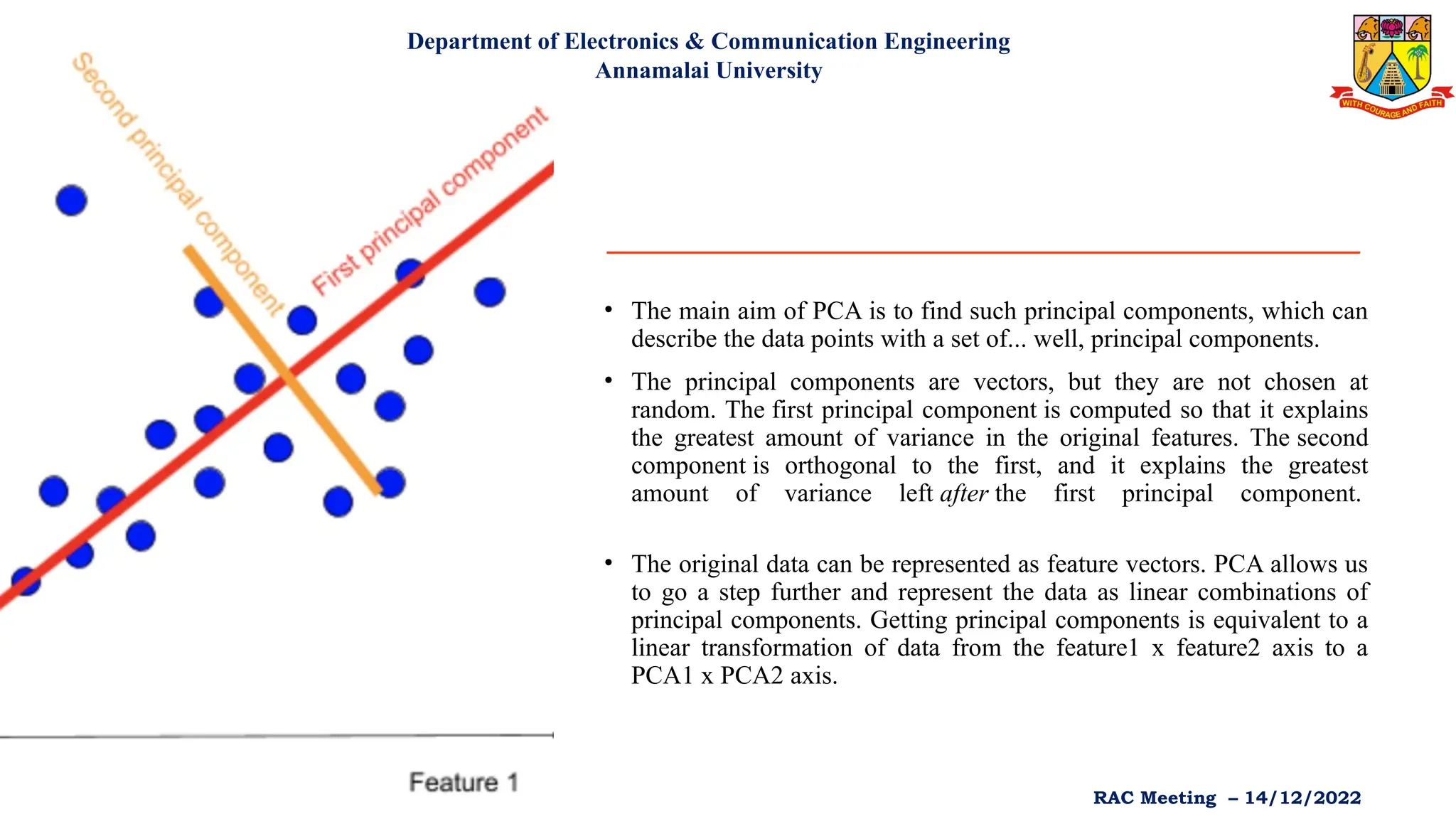

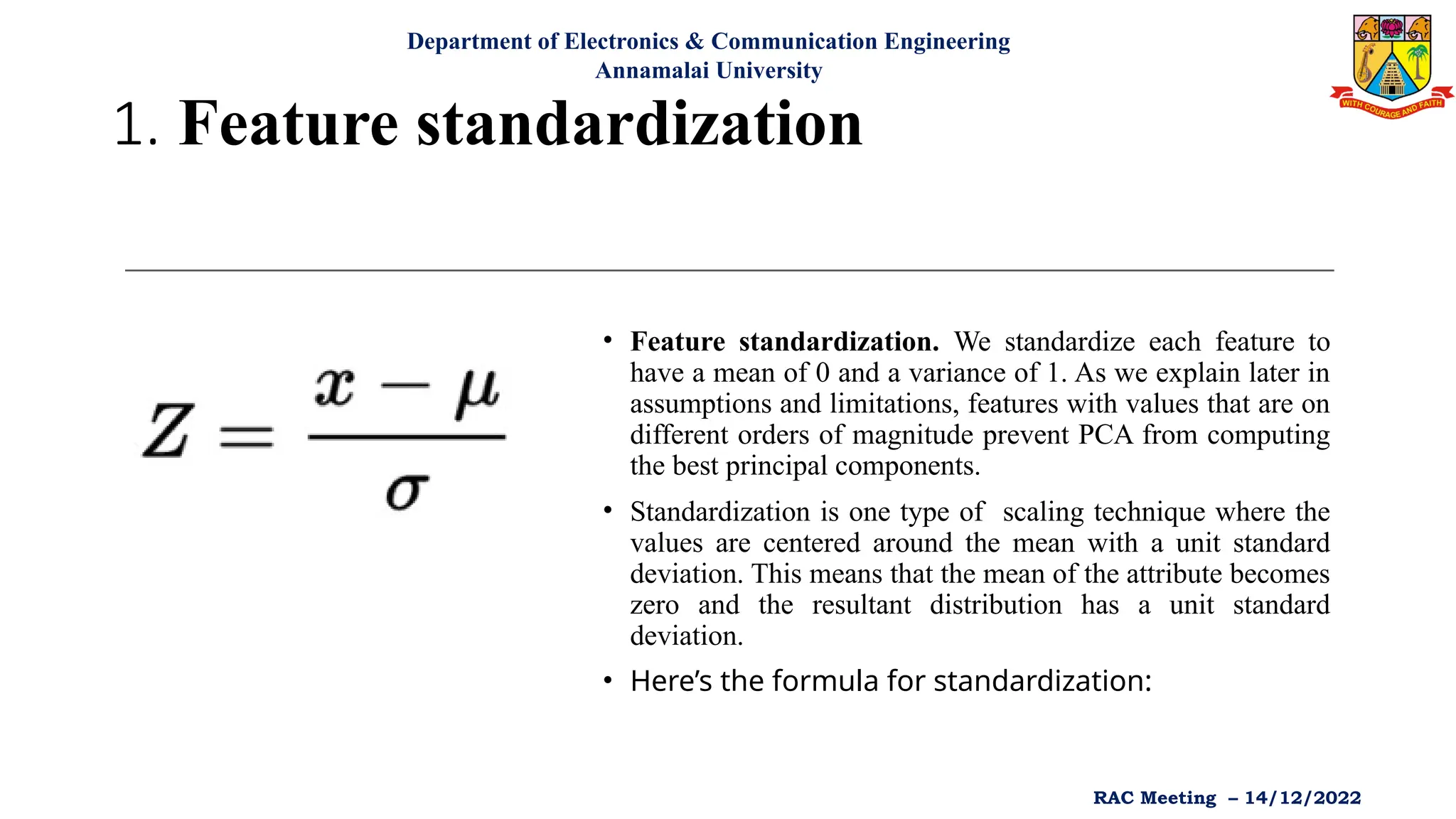

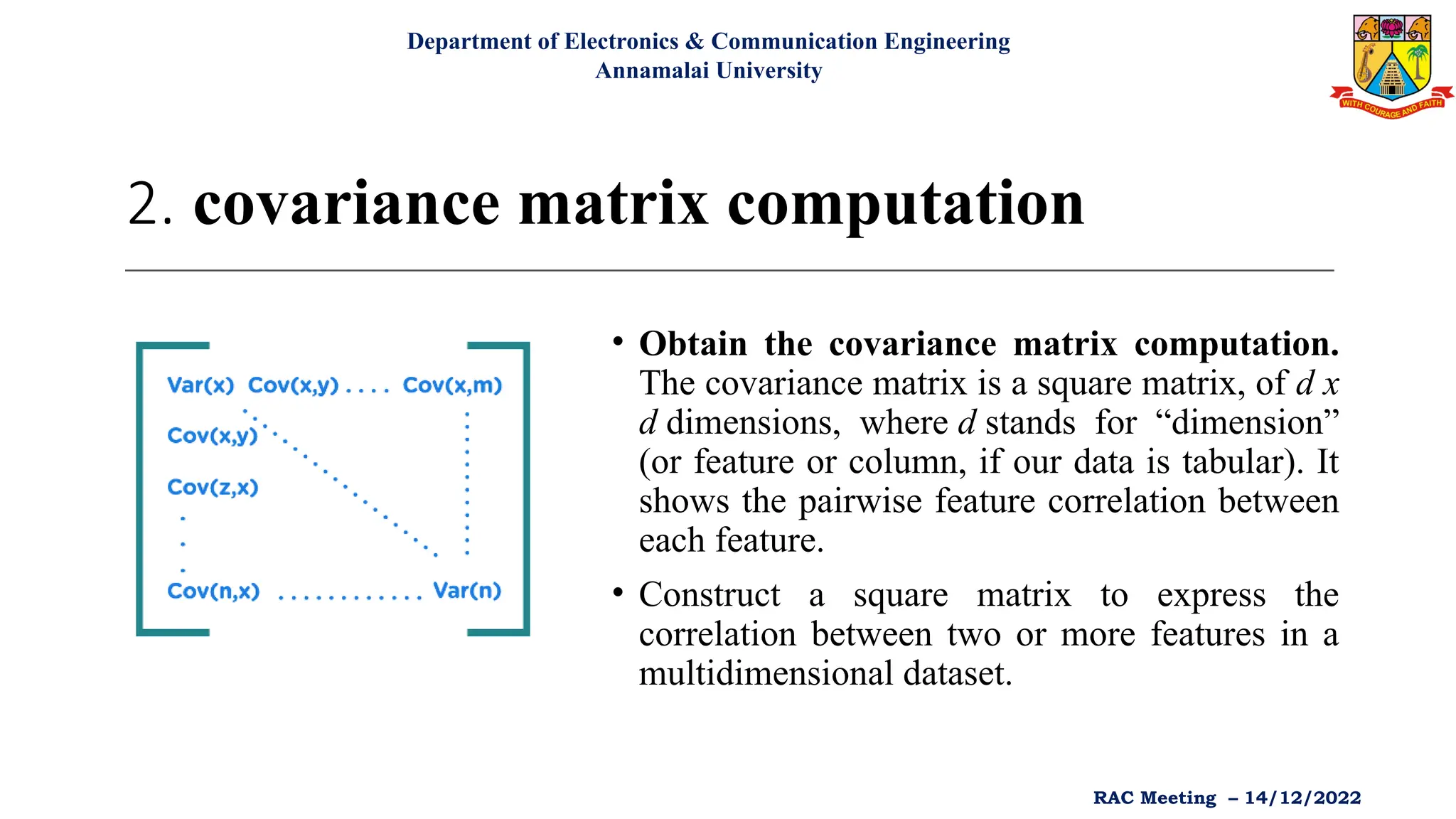

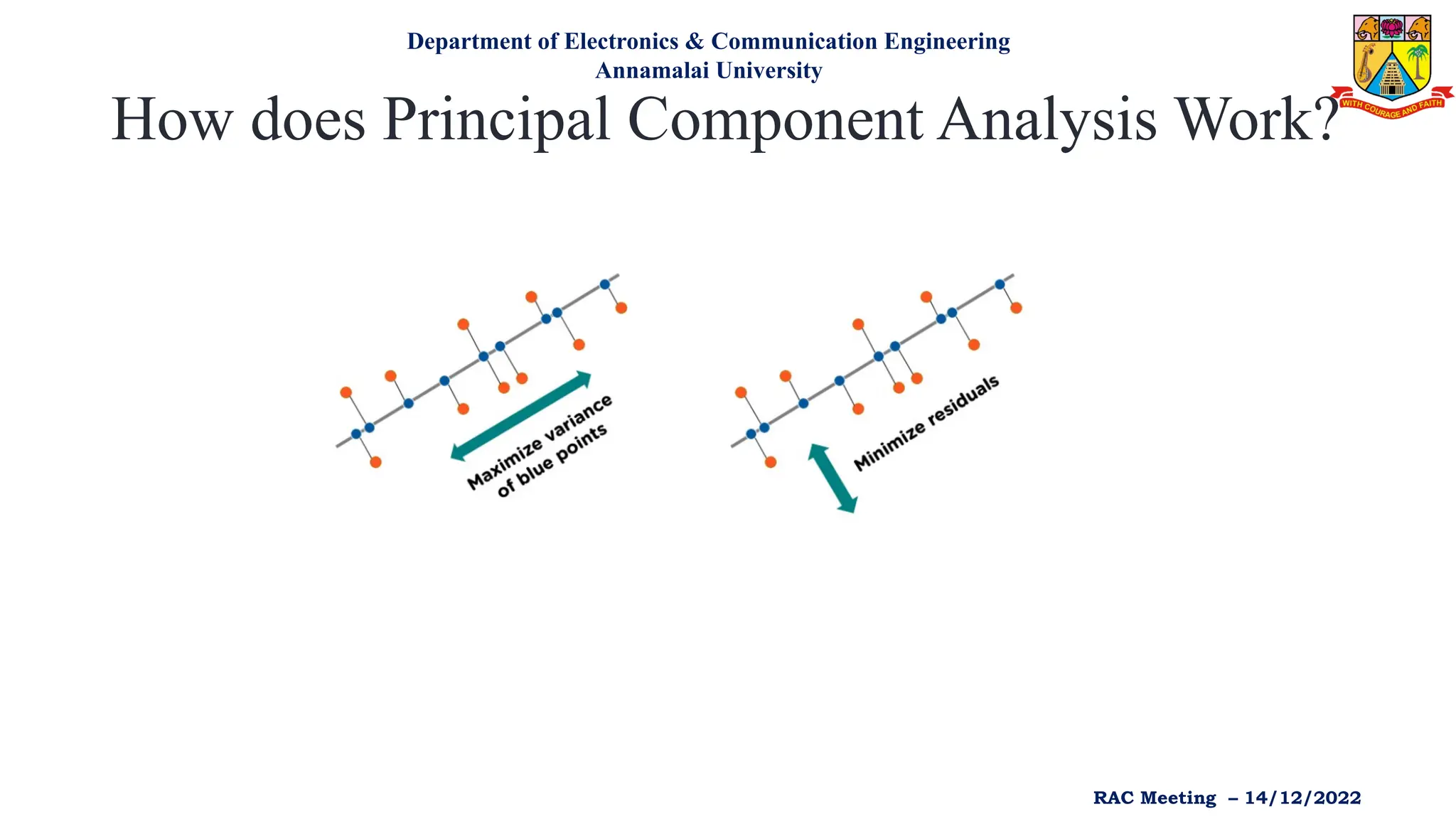

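Principal Component Analysis (PCA) is an unsupervised machine learning technique for dimensionality reduction. It finds a set of orthogonal directions, the principal components, ordered by how much of the dataset's variance each one explains, and projects the data onto the first few of them so that as little information as possible is lost. The resulting lower-dimensional representation is easier to visualize and explore, and PCA is widely applied in fields such as healthcare, finance, and image processing. PCA is computationally efficient and, by reducing the number of input features, can help reduce overfitting. Its main drawbacks are that each principal component is a linear combination of the original features and is therefore hard to interpret on its own, and that discarding the low-variance components inevitably loses some information.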
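To make the idea of "components that explain the maximum variance" concrete, here is a minimal sketch of PCA using NumPy's singular value decomposition. The function name `pca`, the synthetic dataset, and the choice of two components are assumptions made for illustration and do not come from the original text. A production workflow would more likely use an established library implementation.

```python
import numpy as np


def pca(X, n_components):
    """Project X (n_samples x n_features) onto its top principal components.

    Illustrative helper (hypothetical name), not a library API.
    """
    # Center each feature so that variance is measured around the mean.
    X_centered = X - X.mean(axis=0)

    # SVD of the centered data: the rows of Vt are the principal directions,
    # already sorted by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]

    # Variance along each direction, and its share of the total variance.
    explained_variance = S**2 / (X.shape[0] - 1)
    explained_ratio = explained_variance / explained_variance.sum()

    # Coordinates of each sample in the reduced space.
    Z = X_centered @ components.T
    return Z, components, explained_ratio[:n_components]


# Synthetic data (assumed for this example): 200 samples, 3 features,
# where the third feature is almost the sum of the first two.
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 2))
noise = 0.01 * rng.normal(size=200)
X = np.column_stack([base[:, 0], base[:, 1], base[:, 0] + base[:, 1] + noise])

Z, components, explained_ratio = pca(X, n_components=2)
print("reduced shape:", Z.shape)
print("variance explained by each component:", explained_ratio)
```

Because the third feature is nearly redundant here, two components capture almost all of the variance, which is the situation in which PCA loses the least information. The rows of `components` also illustrate the interpretability problem: each one mixes all three original features.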