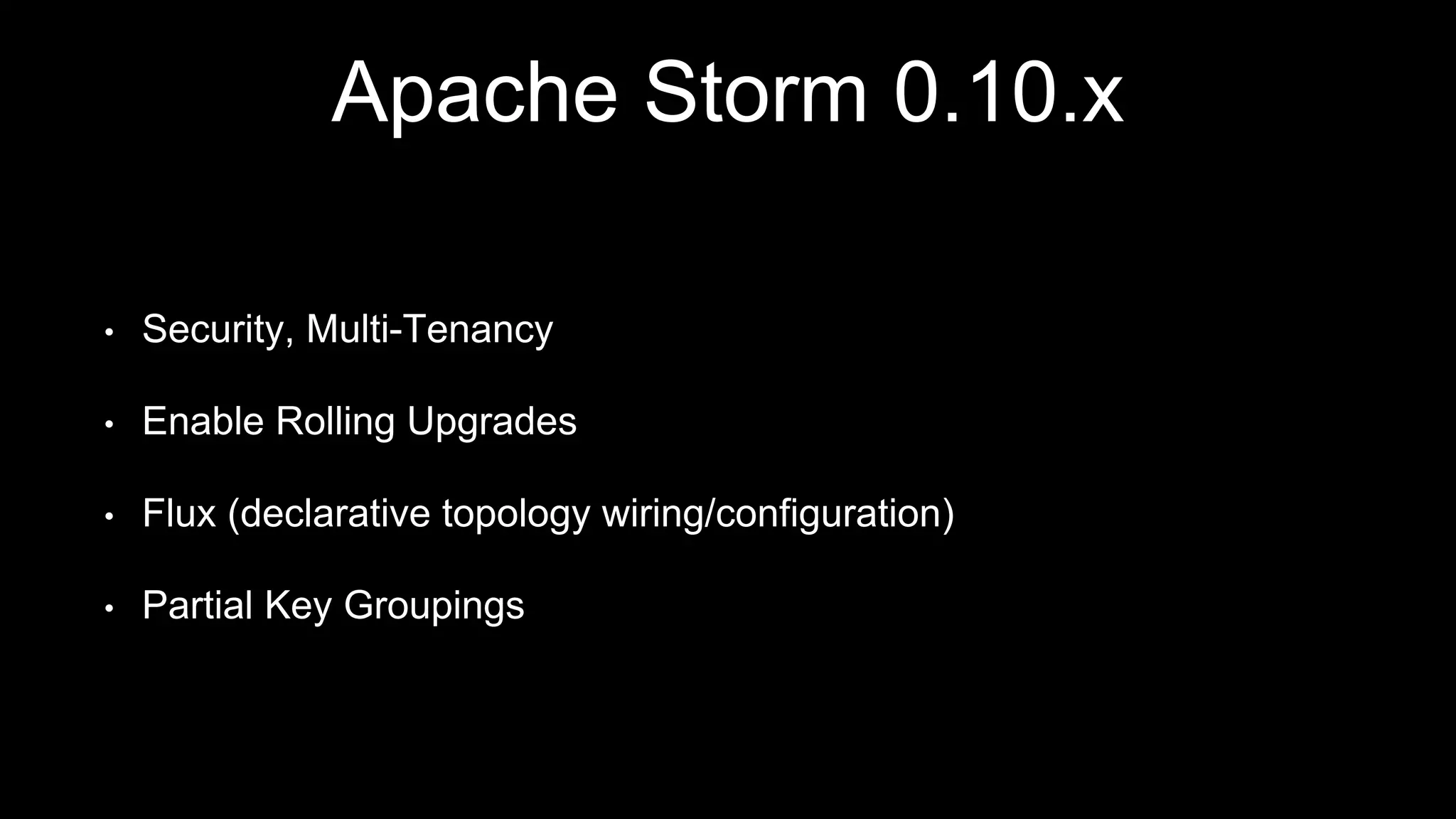

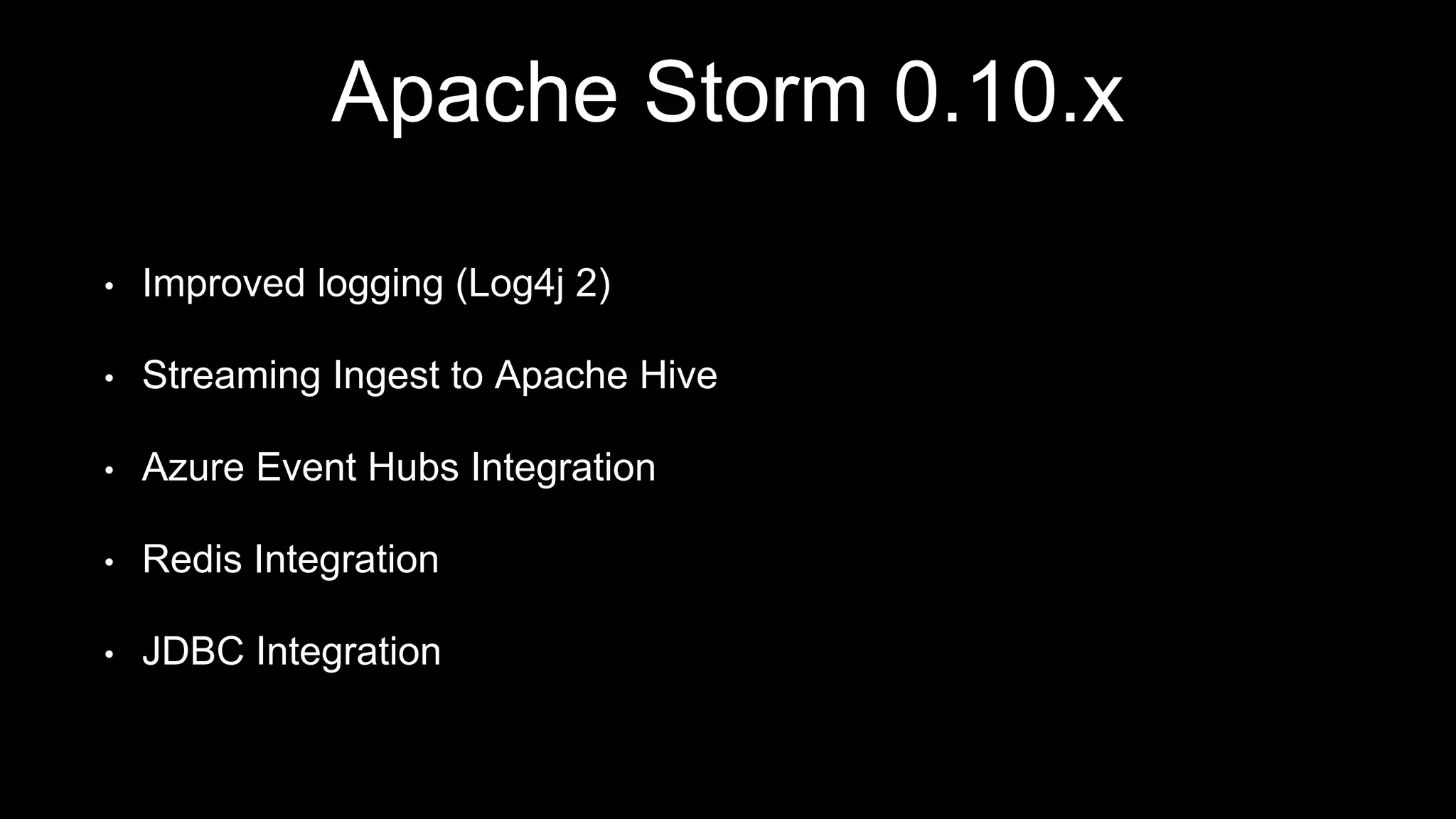

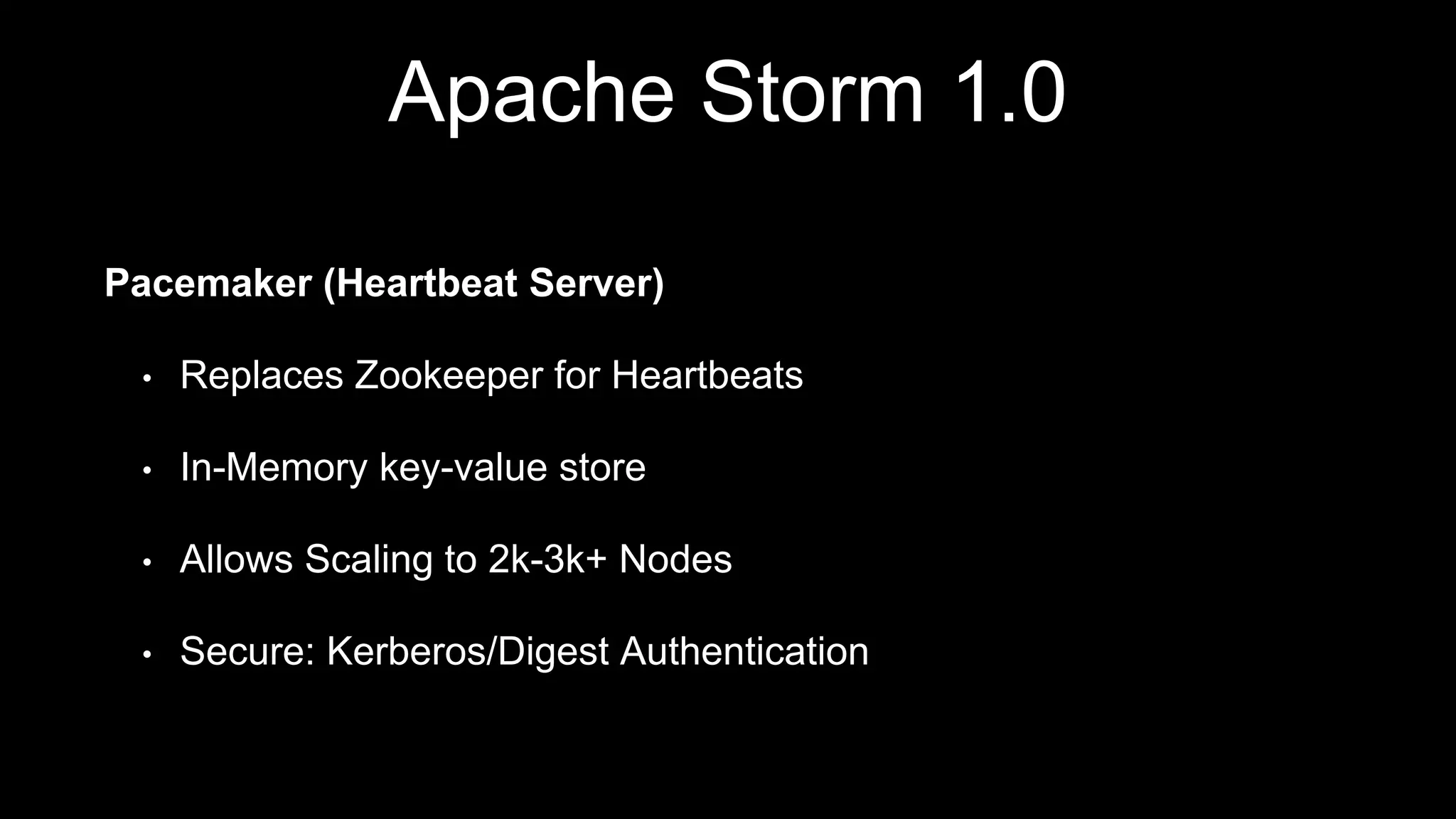

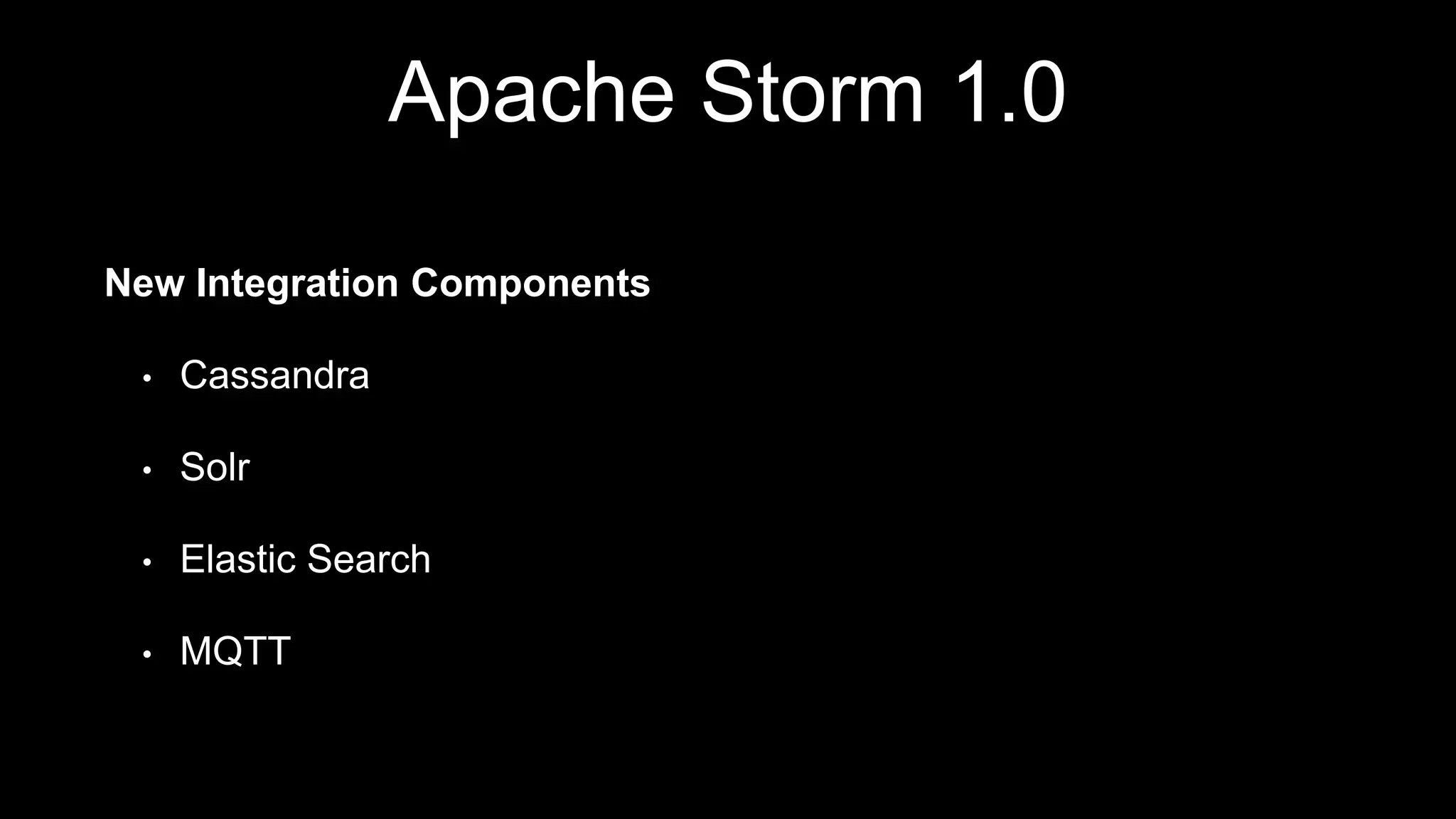

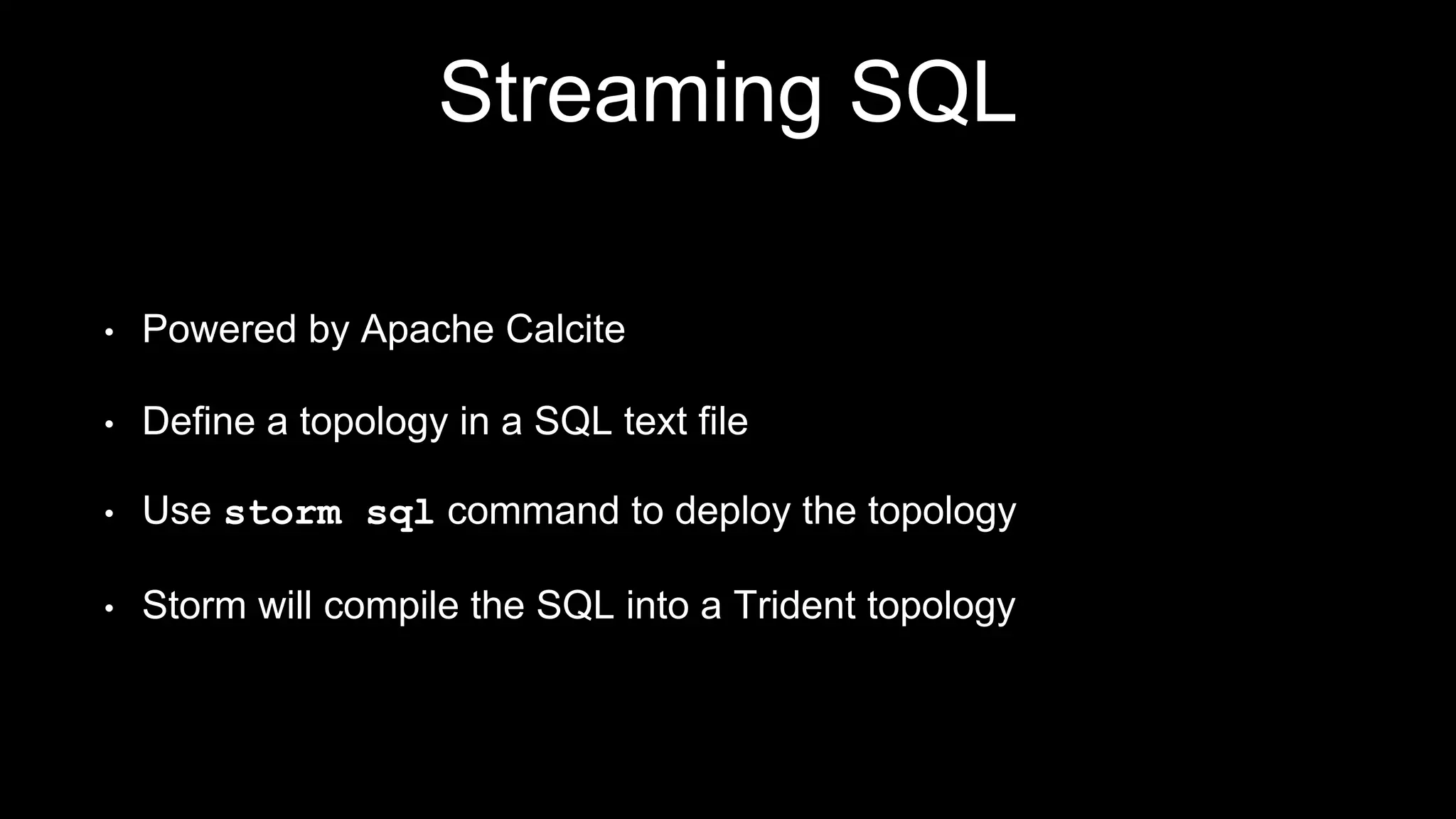

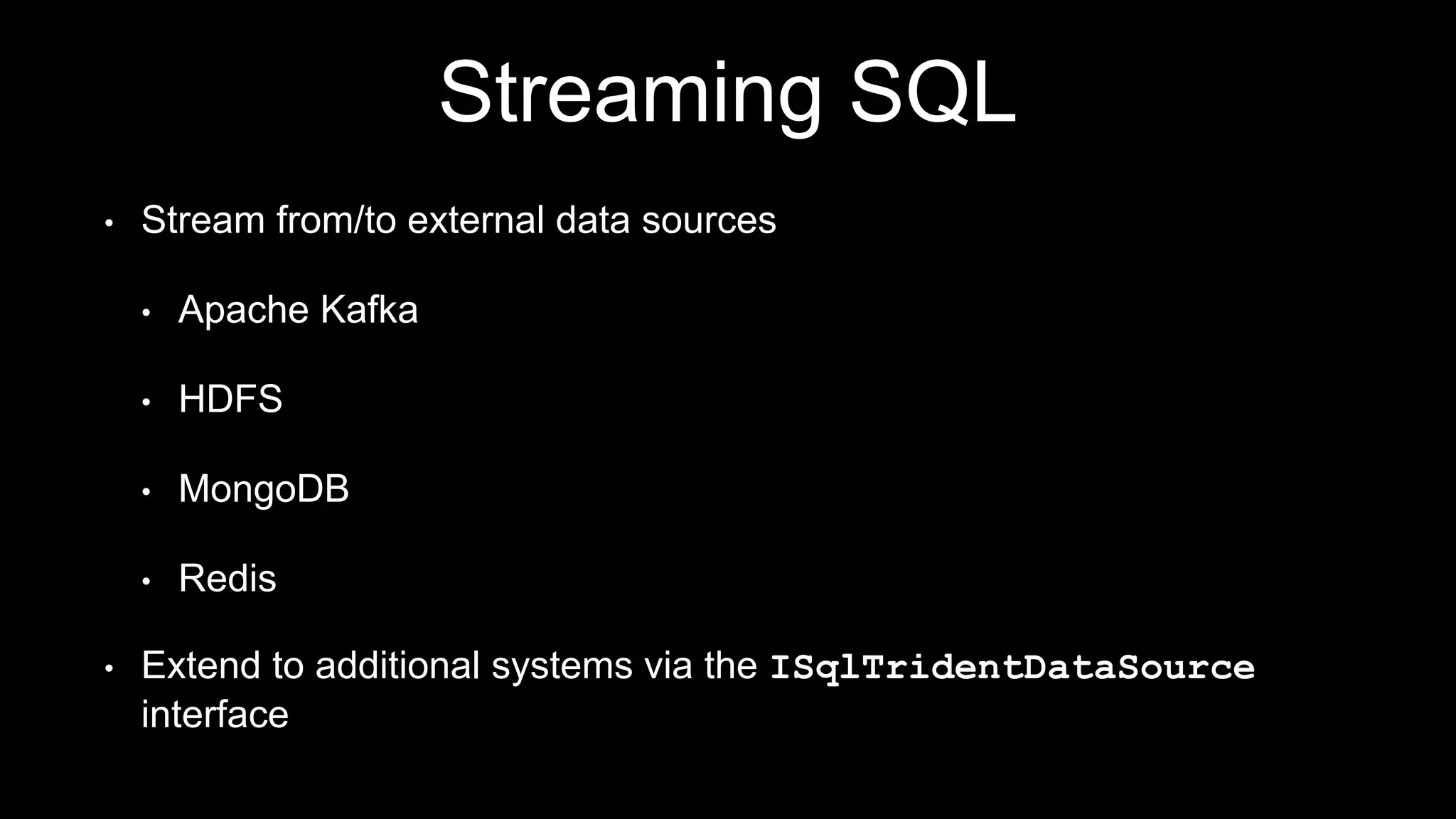

This document discusses the past, present, and future of Apache Storm. It summarizes key releases of Storm including 0.9.x which moved Storm to Apache, 0.10.x which focused on enterprise readiness, 1.0 which improved performance and maturity, and 1.1.0 which added features like streaming SQL. It outlines current features such as the Resource Aware Scheduler, Kafka integration improvements, and new integrations. It concludes by discussing Storm 2.0 and beyond, including transitions to Java from Clojure and improved performance, as well as integrating Apache Beam and allowing bounded spouts and dynamic topology updates.

![Apache Storm 1.0

State Management - Stateful Bolts

• Automatic checkpoints/snapshots

• Query/Update state

• Asynchronous Barrier Snapshotting (ABS) algorithm [1]

• Chandy-Lamport Algorithm [2]

[1] http://arxiv.org/pdf/1506.08603v1.pdf

[2] http://research.microsoft.com/en-us/um/people/lamport/pubs/chandy.pdf](https://image.slidesharecdn.com/futureofapachestorm-hs17munich-180917164116/75/Past-Present-and-Future-of-Apache-Storm-12-2048.jpg)

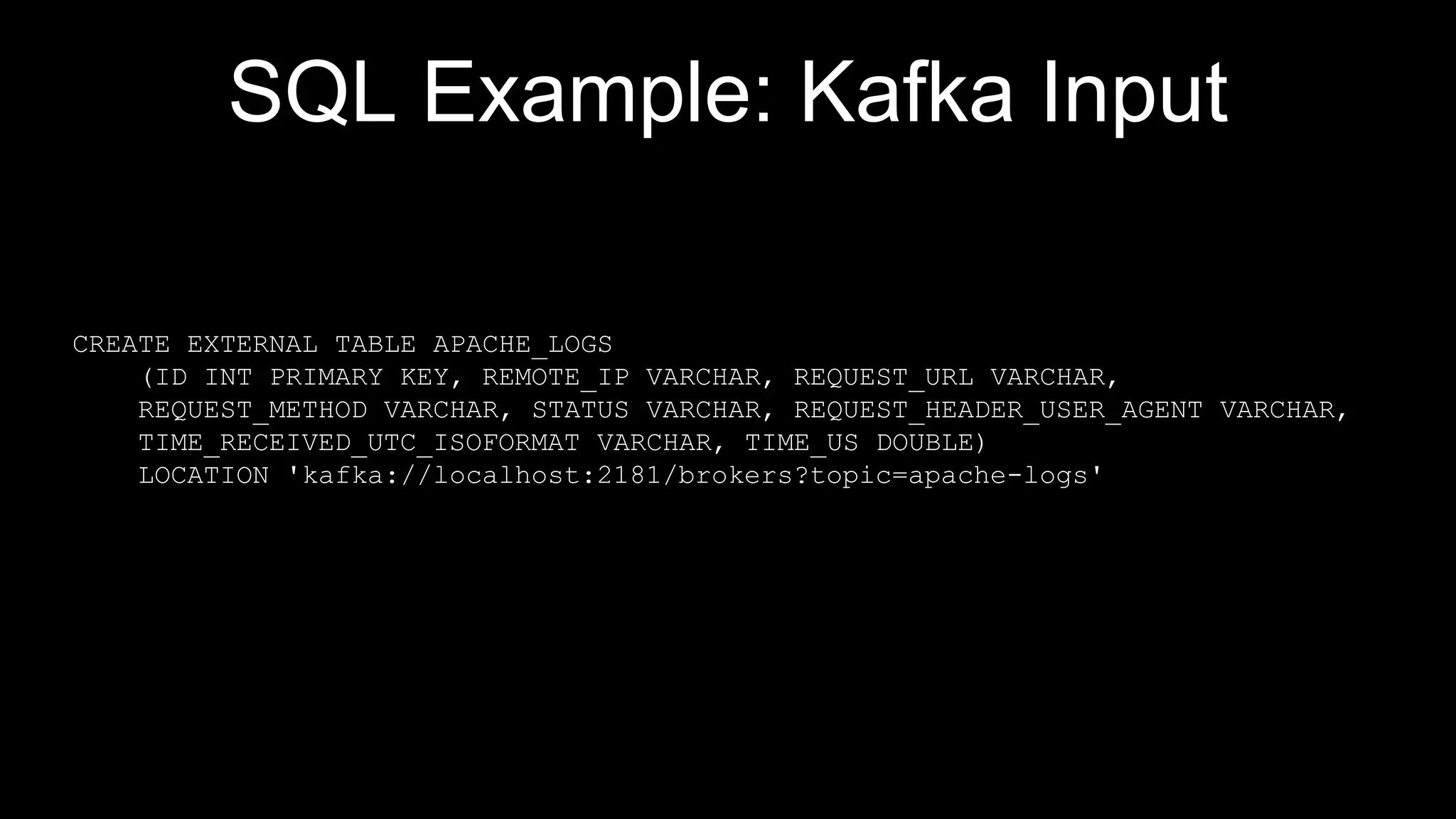

![Streaming SQL - External Data

Sources

CREATE EXTERNAL TABLE table_name field_list

[ STORED AS

INPUTFORMAT input_format_classname

OUTPUTFORMAT output_format_classname

]

LOCATION location

[ PARALLELISM parallelism ]

[ TBLPROPERTIES tbl_properties ]

[ AS select_stmt ]](https://image.slidesharecdn.com/futureofapachestorm-hs17munich-180917164116/75/Past-Present-and-Future-of-Apache-Storm-29-2048.jpg)