1) Bayesian inference in hidden Markov models aims to compute the posterior distribution p(x1:n|y1:n) and marginal likelihoods p(y1:n) given observed data y1:n. This can be done using filtering recursions to calculate the marginal distributions p(xn|y1:n) and likelihoods p(y1:n).

2) Sequential Monte Carlo (SMC) methods, also known as particle filters, provide a way to approximate the filtering distributions and likelihoods using a set of random samples or "particles". Importance sampling is used to assign importance weights to the particles to represent the target distributions.

3) Sequential importance sampling (SIS) recursively propag

![§1.3 Sequential Monte Carlo Methods 9

1.3.1. X =R

n n

πn (x1:n ) = πn (xk ) = N (xk ; 0, 1), (1.3.12)

k=1 k=1

n

x2

k

γn (x1:n ) = exp − ,

k=1

2

Zn = (2π)n/2 .

n n

qn (x1:n ) = qk (xk ) = N (xk ; 0, σ 2 ).

k=1 k=1

1

σ2 > 2

VIS Zn < ∞ relative variance

VIS Zn 1 σ4

n/2

= −1 .

2

Zn N 2σ 2 − 1

1 σ4

σ 2

< σ2 = 1 2σ 2 −1

>1 relative variance

n . σ = 1.2

VIS [Zn ] VIS [Zn ]

qk (xk ) ≈ πn (xk ) N 2

Zn

≈ (1.103)n/2 . n = 1000 N 2

Zn

≈

21 23

1.9 × 10 N ≈ 2 × 10 relative variance

VIS [Zn ]

Z2

= 0.01 .

n

§1.3.4 Resampling

IS SIS n

SMC .

. πn (x1:n ) IS

πn (x1:n ) qn (x1:n )

πn (x1:n ) . πn (x1:n )

i i

IS πn (x1:n ) Wn X1:n

resampling πn (x1:n )

. πn (x1:n ) N

i i

πn (x1:n ) N X1:n Nn

1:N 1 N 1:N

Nn = (Nn , . . . , Nn ) (N, Wn )

1/N . resampled empirical measure

πn (x1:n )

N i

Nn

π n (x1:n ) = δX i (x1:n ) (1.3.13)

i=1

N 1:n](https://image.slidesharecdn.com/particlefilter-100428092908-phpapp02/85/Particle-filter-9-320.jpg)

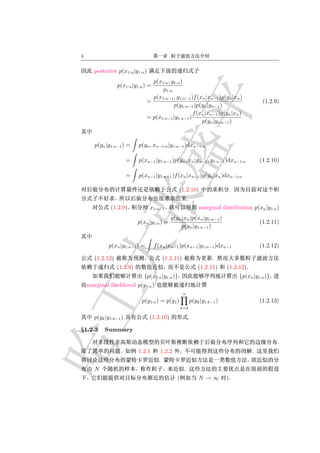

![10

i 1:N i

E [Nn |Wn ] = N Wn . π n (x1:n ) πn (x1:n ) .

1

• Systematic Resampling U1 ∼ U 0, N i = 2, . . . , N

i−1 i i−1 k i k

Ui = U1 + N

Nn = Uj : k=1 Wn ≤ Uj ≤ k=1 Wn

0

k=1 = 0.

i i 1:N

• Residual Resampling Nn = N W n N, W n

1:N i i

Nn W n ∝ Wn − N −1 Nn

i i i i

Nn = Nn + N n .

1:N 1:N

• Multinomial Resampling (N, Wn ) Nn .

O(N ) . systematic

resampling

.

πn (x1:n )

In (ϕn ) πn (x1:n )

π n (x1:n ) .

.

.

n n+1

.

. .

§1.3.5 A Generic Sequential Monte Carlo Algorithm

SMC SIS . 1

i i

π1 (x1 ) IS π1 (x1 ) {W1 , X1 }.

.

1 i i

{ N , X 1} . X1

i i j1 j2

N1 N1 j1 = j2 = · · · = jN1

i X1 = X1 =

jN i i

i i

· · · = X1 1

= X1 . SIS X2 ∼ q2 (x2 |X 1 ).

i i

(X 1 , X2 ) π1 (x1 )q2 (x2 |x1 ).

incremental weights α2 (x1:2 ).](https://image.slidesharecdn.com/particlefilter-100428092908-phpapp02/85/Particle-filter-10-320.jpg)

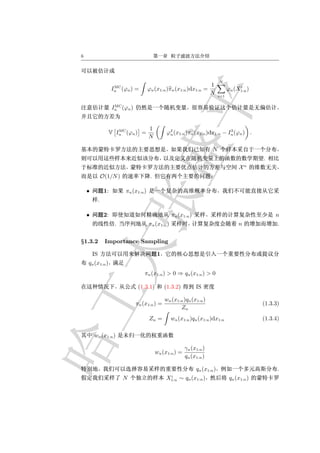

![§1.4 Particle Filter 13

1

1.3.1 σ2 > 2

asymptotic variance

VSMC Zn n σ4

1/2

= −1

2

Zn N 2σ 2 − 1

VIS Zn 1 σ4

n/2

= −1 .

2

Zn N 2σ 2 − 1

SMC n IS n

2

. σ = 1.2 qk (xk ) ≈ πn (xk ).

n = 1000 IS N ≈ 2 × 1023

VIS [Zn ] VSMC [Zn ]

Zn 2 = 10−2 . 2

Zn

= 10−2 SMC

N ≈ 104 19 .

§1.3.6 Summary

SMC {πn (x1:n )} {Zn }.

• n qn (xn |x1:n−1 )

αn (x1:n ) n .

• k n > k πn (x1:k )

SMC . n

{πn (x1:n )} SMC . , πn (x1 )

.

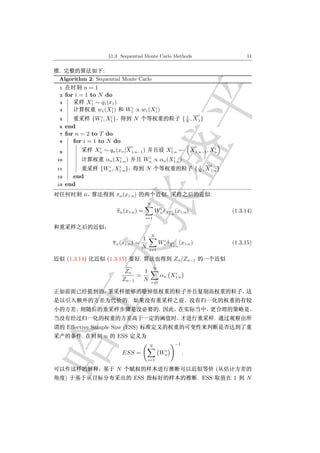

§1.4 Particle Filter

SMC SIS

.

{p(x1:n |y1:n )}n≥1 .

ESS .

§1.4.1 SMC for Filtering

SMC {p(x1:n |y1:n )}n≥1](https://image.slidesharecdn.com/particlefilter-100428092908-phpapp02/85/Particle-filter-13-320.jpg)

![§1.4 Particle Filter 15

Algorithm 4: SMC for Filtering

1 n=1

2 for i = 1 to N do

i

3 X1 ∼ q1 (x1 |y1 )

i µ(xi )g(y1 |X1 )

i

i i

4 w1 (X1 ) = 1

i

q(Xi |y1 )

W1 ∝ w1 (X1 )

i i 1 i

5 {W1 , X1 } N { N , X 1}

6 end

7 for n = 2 to T do

8 for i = 1 to N do

i i i i i

9 Xn ∼ qn (xn |yn , X n−1 ) X1:n ← (X 1:n−1 , Xn )

i i i

i g(yn |Xn )f (Xn |Xn−1 ) i i

10 αn (Xn−1:n ) = i i

q(Xn |yn ,Xn−1 )

Wn ∝ αn (Xn−1:n )

i i 1 i

11 {Wn , X1:n } N { N , X 1:n }

12 end

13 end

[1] A.D. and A. Johansen, Particle filtering and smoothing: Fifteen years later, in Hand-

book of Nonlinear Filtering (eds. D. Crisan et B. Rozovsky), Oxford University Press,

2009. See http://www.cs.ubc.ca/~arnaud](https://image.slidesharecdn.com/particlefilter-100428092908-phpapp02/85/Particle-filter-15-320.jpg)