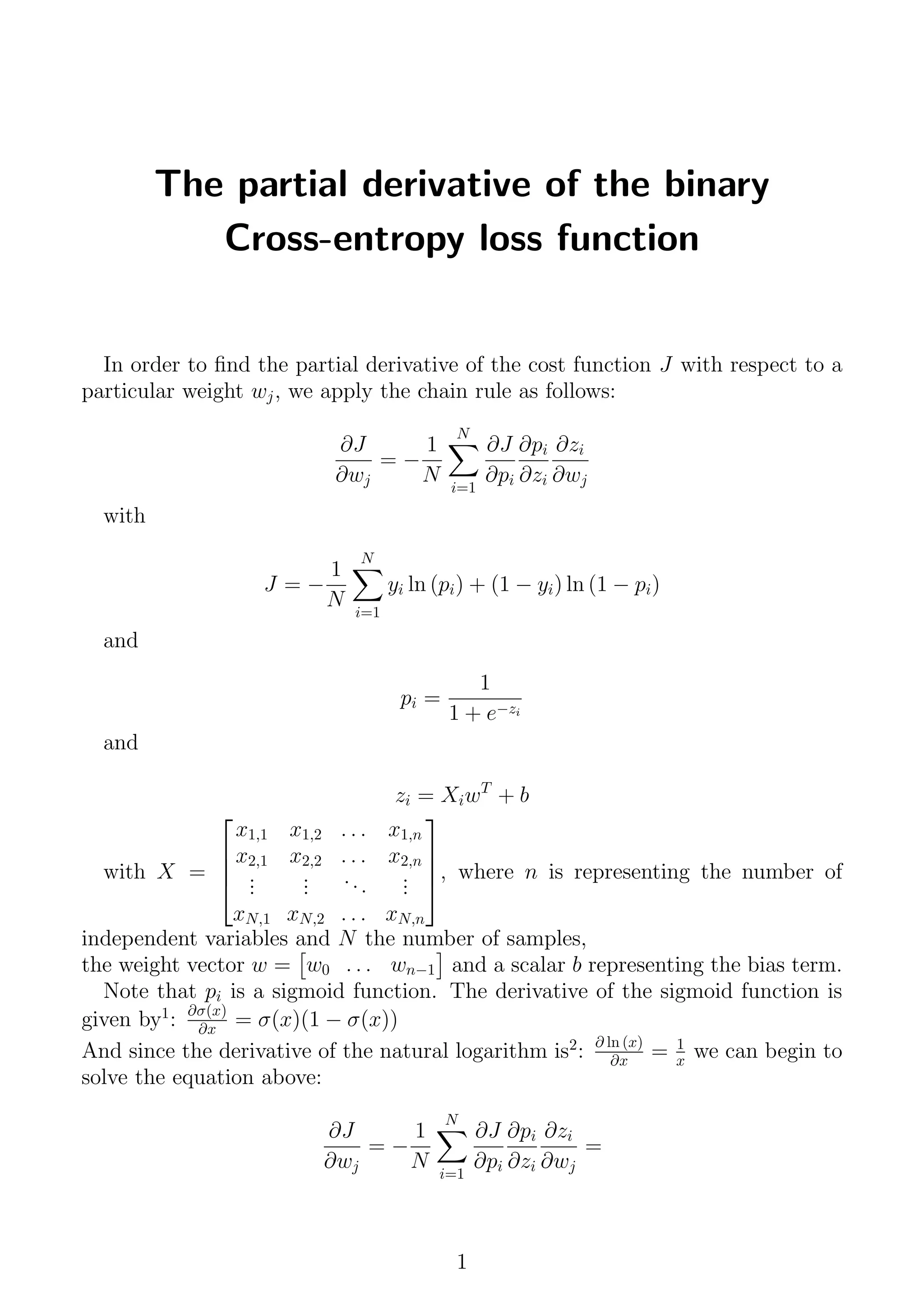

This document discusses the computation of the partial derivative of the binary cross-entropy loss function relative to weights and biases in a logistic regression model. It uses the chain rule to express the derivative in terms of the output probabilities and the input features, highlighting the roles of the sigmoid function and the natural logarithm in the calculations. The derived formulas allow for the adjustment of weights and biases during model training to minimize the loss.

![= −

1

N

N

i=1

[

yi

pi

+

1 − yi

1 − pi

(−1)] [pi(1 − pi)] xj =

= −

1

N

N

i=1

[yi(1 − pi) − (1 − yi)pi] xj =

= −

1

N

N

i=1

(yi − pi) xj =

=

1

N

N

i=1

(pi − yi) xj

The partial derivative of the cost function J with respect to the bias b can

be calculated accordingly. Considering that the mathematical deriviation of the

formula is very similar, except that ∂zi

∂b = 1, we can simply write:

∂J

∂b

=

1

N

N

i=1

(pi − yi)

References:

1) http://www.ai.mit.edu/courses/6.892/lecture8-html/sld015.htm

2) https://www.onlinemathlearning.com/derivative-ln.html

November 10, 2020 T. Roeschl

2](https://image.slidesharecdn.com/derivativeofcrossentropyloss3-201110111909/85/The-partial-derivative-of-the-binary-Cross-entropy-loss-function-2-320.jpg)