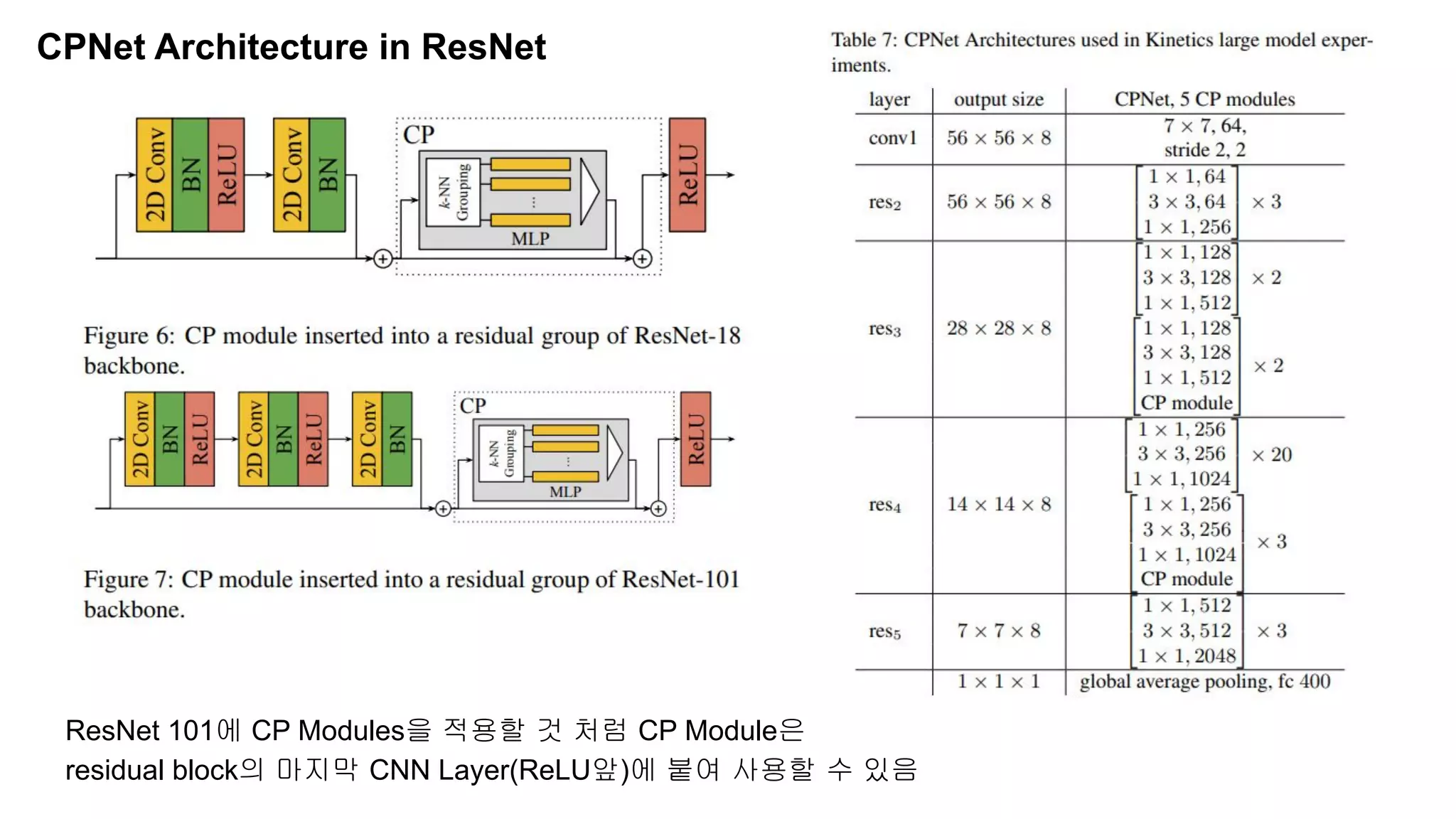

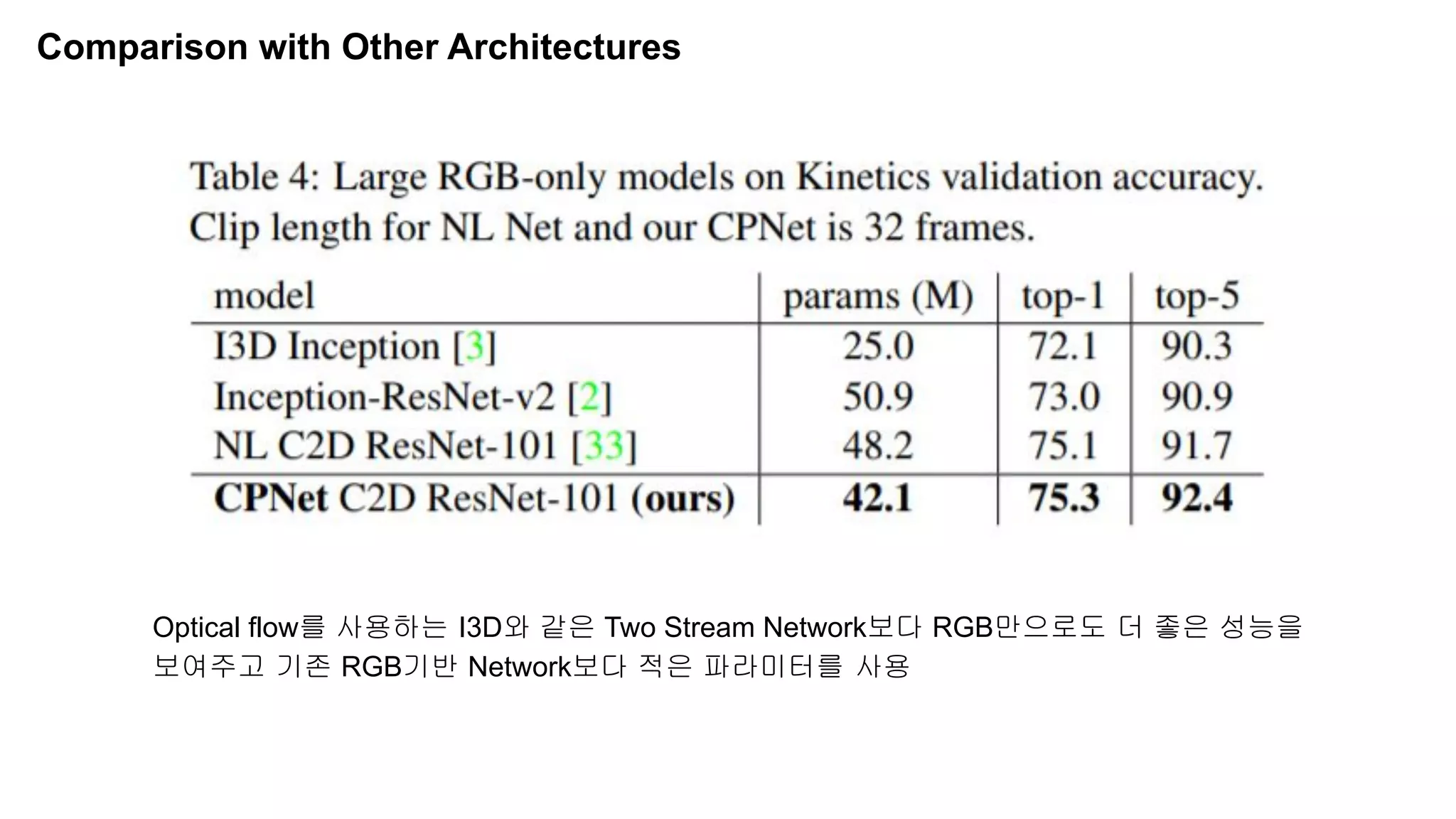

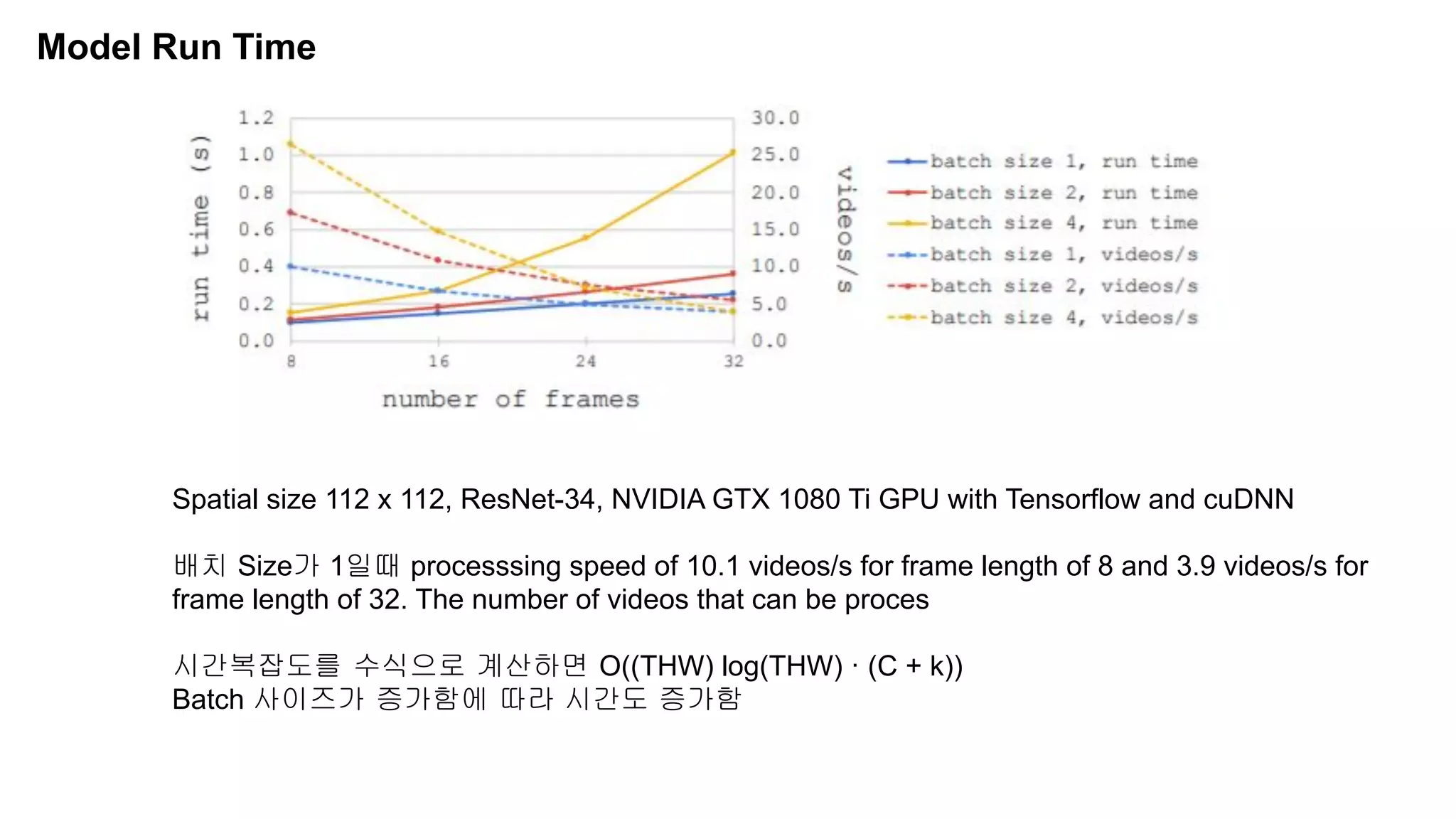

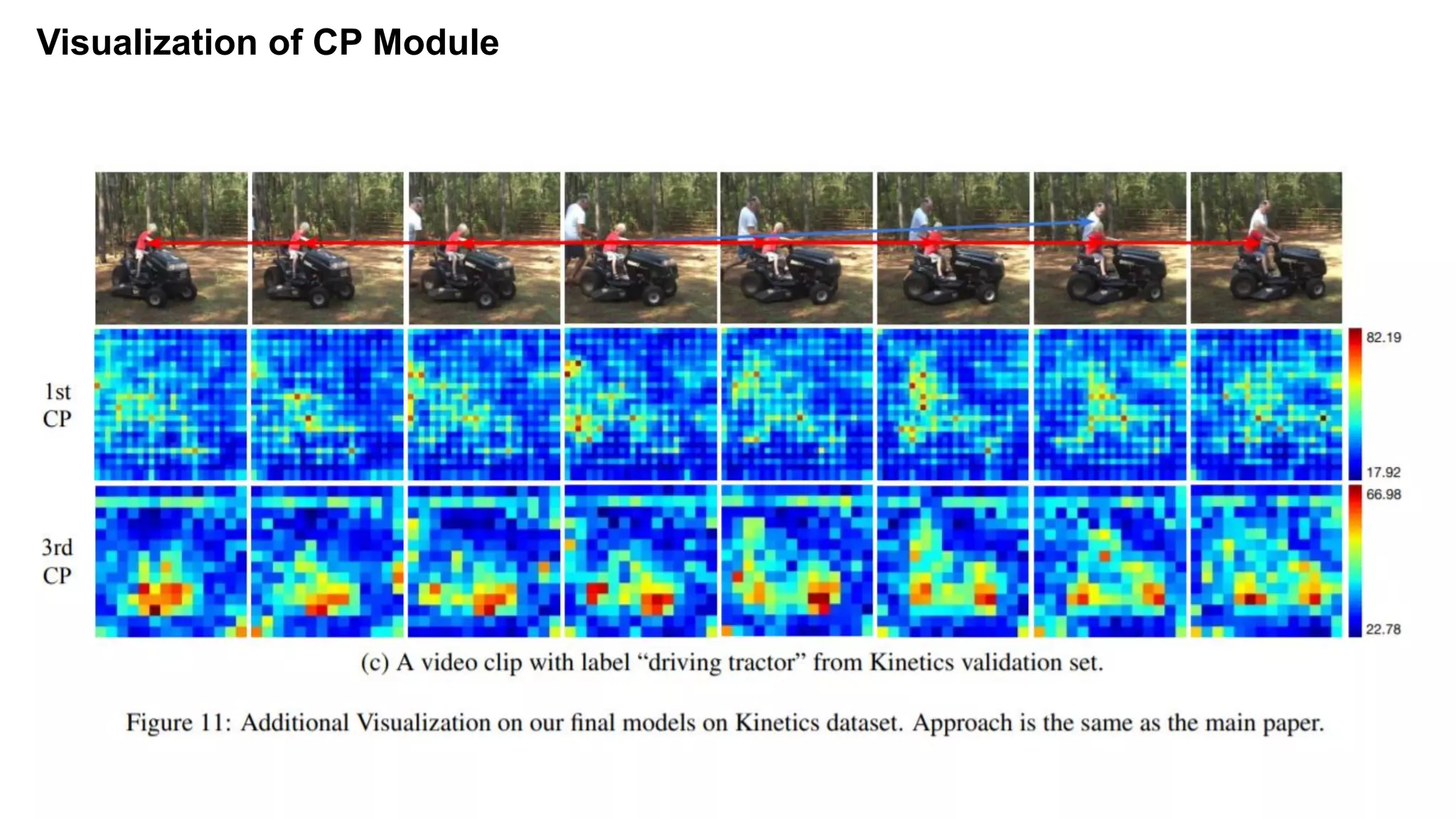

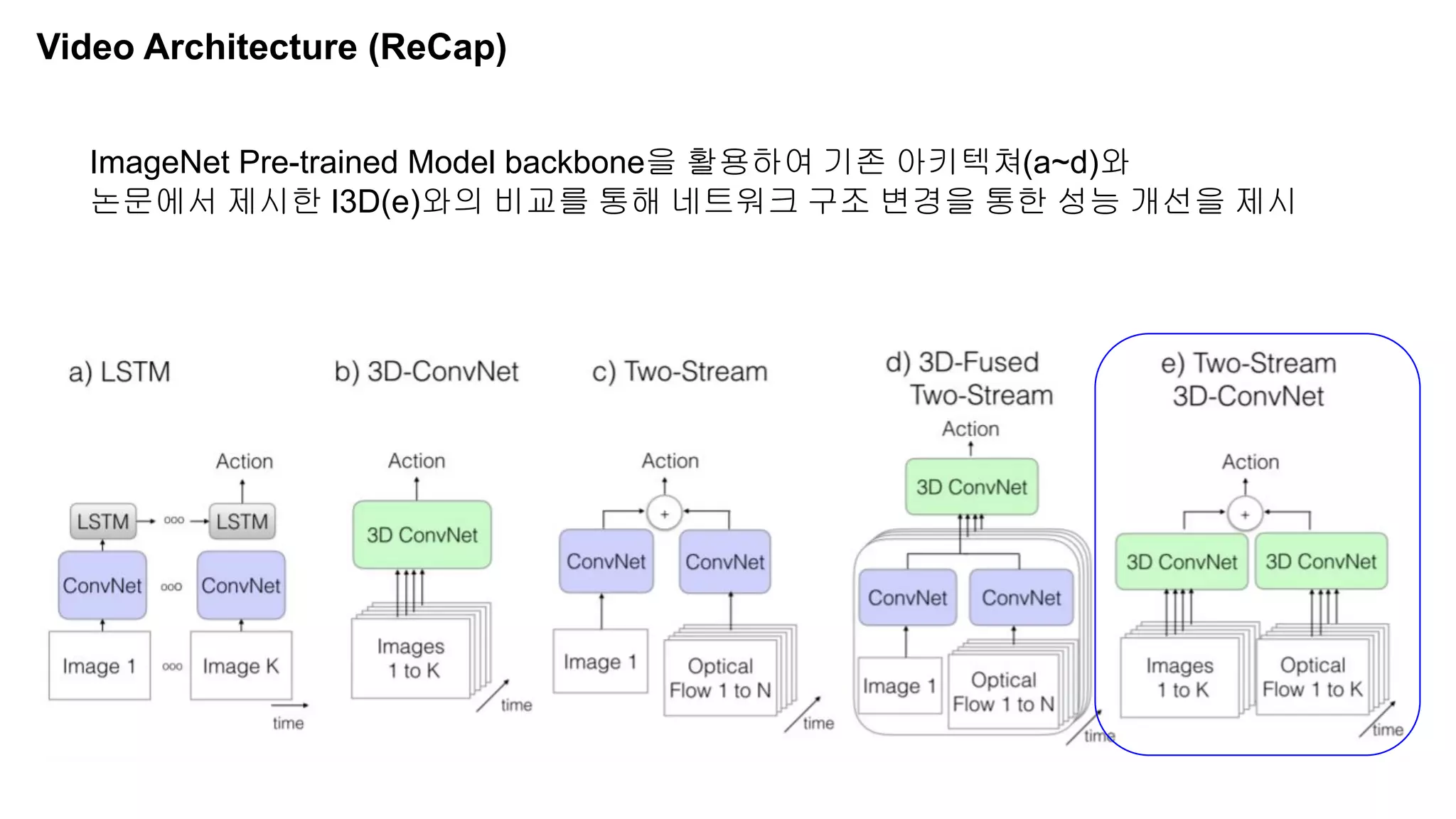

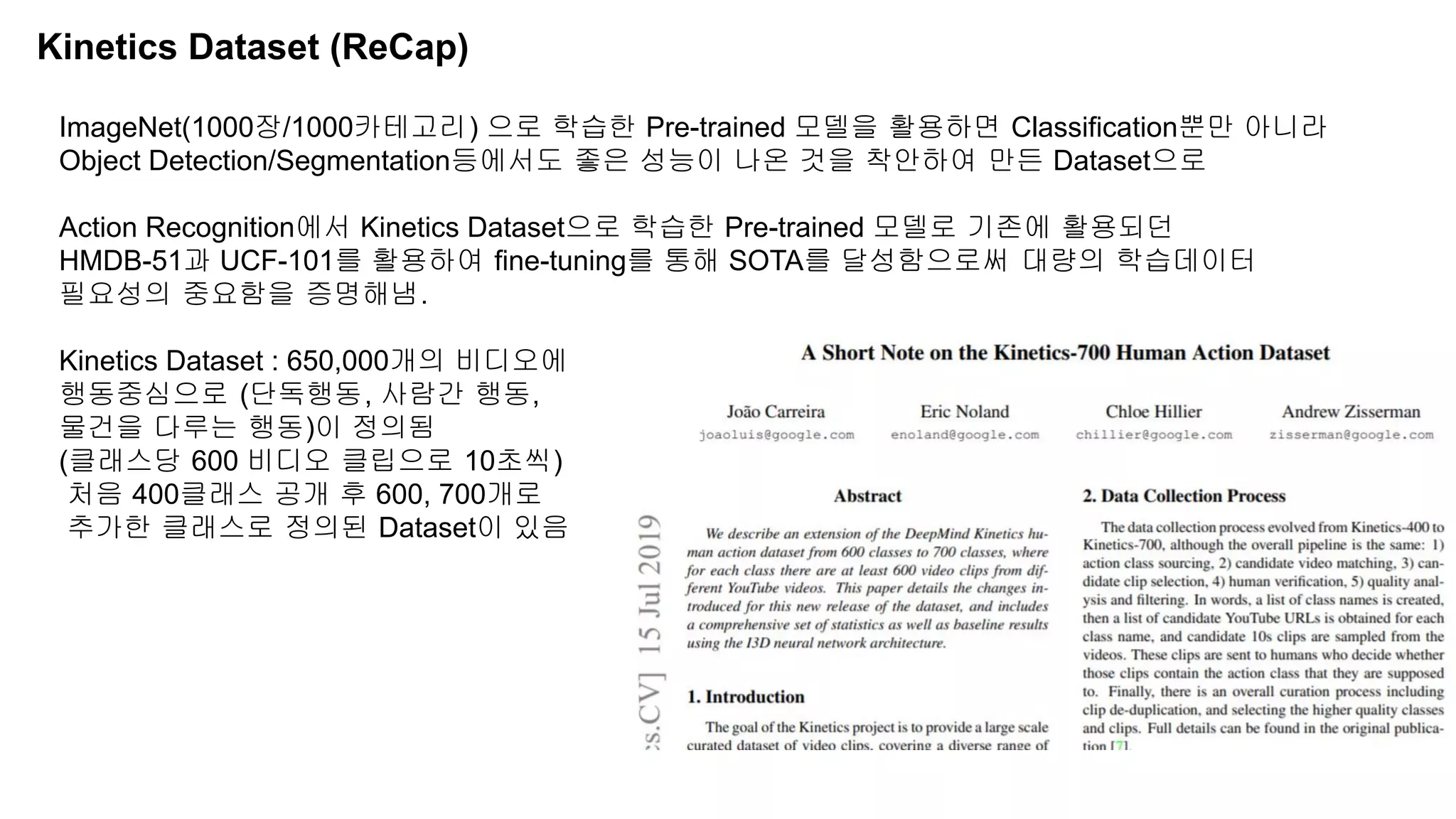

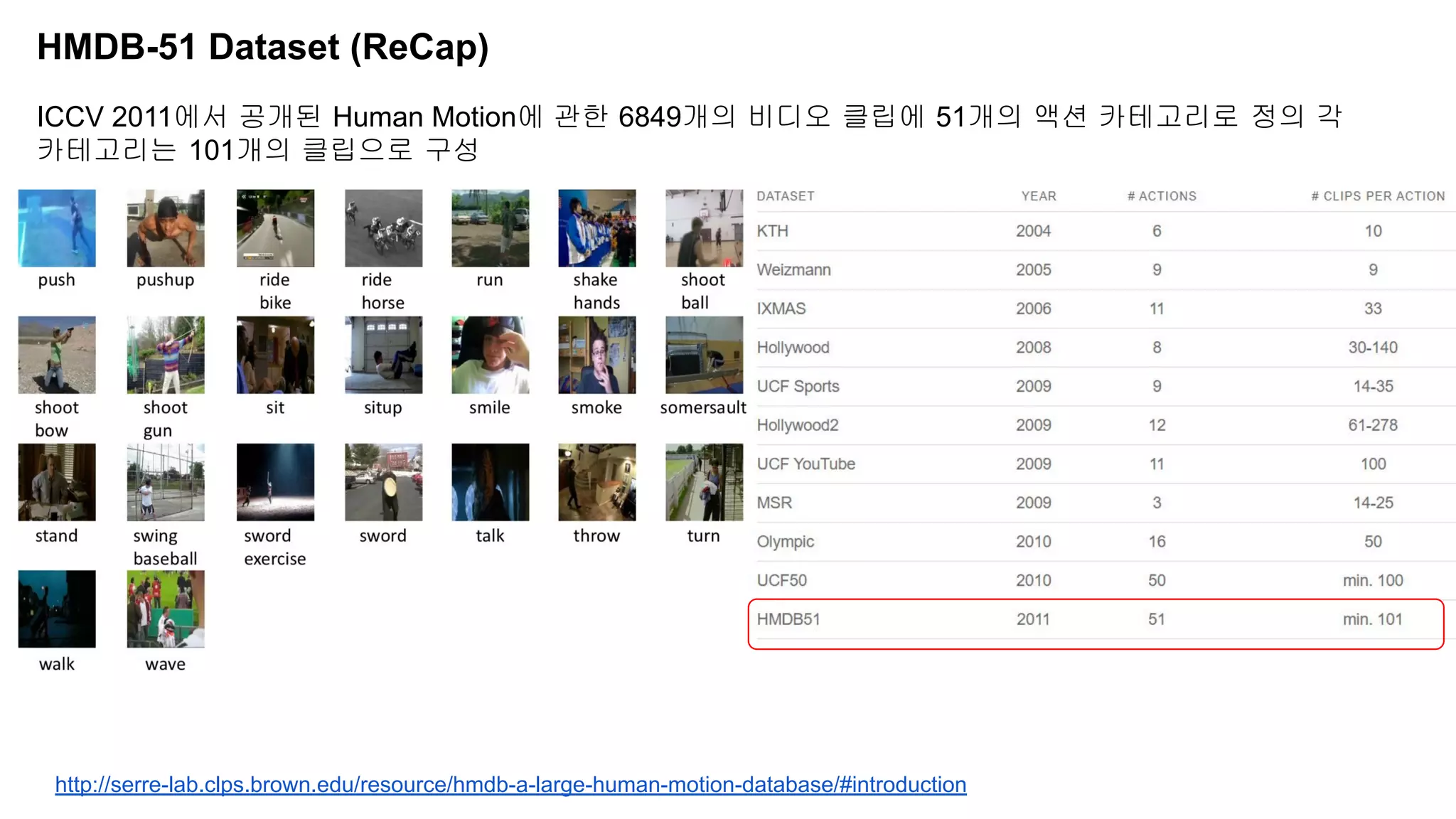

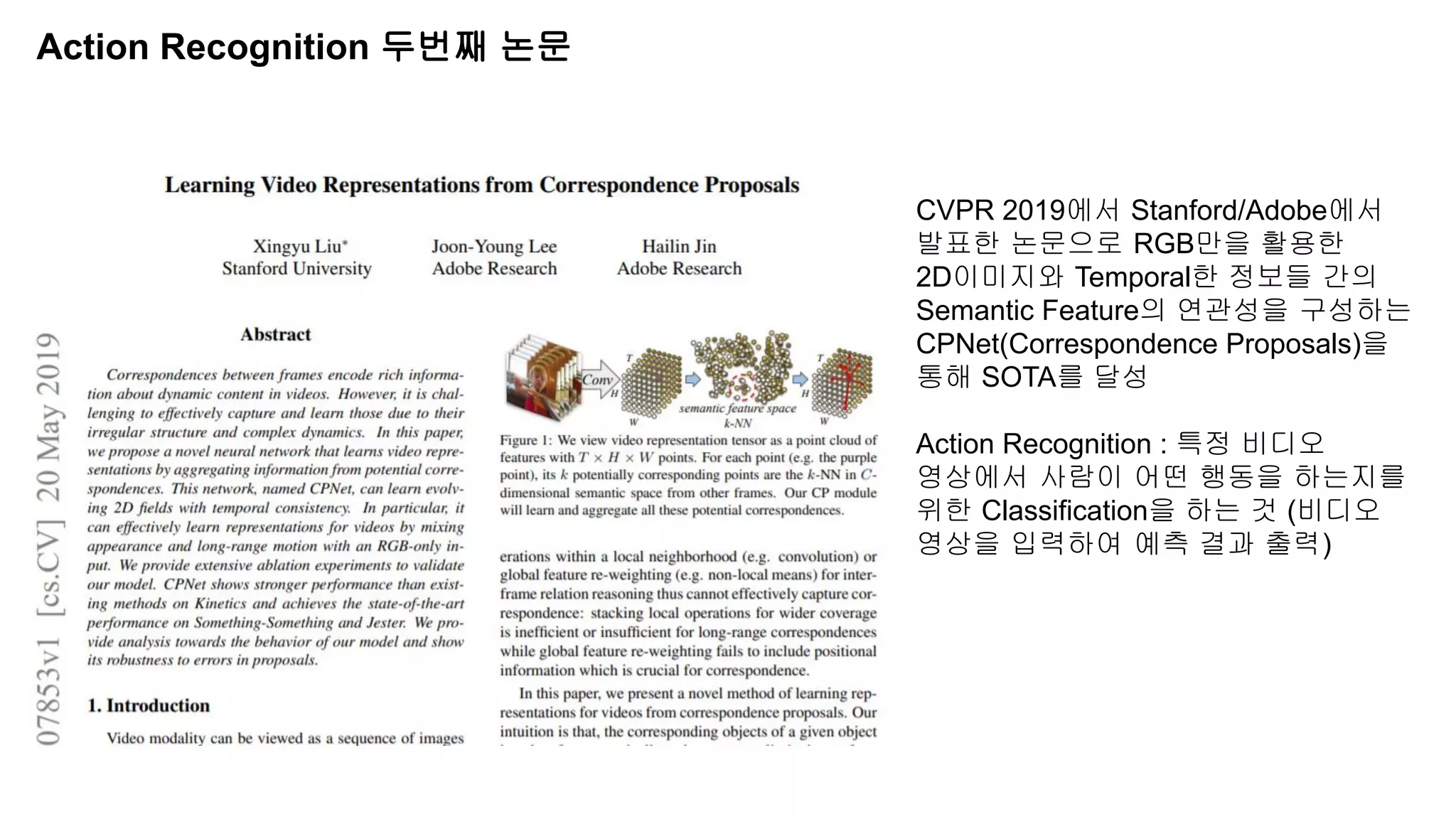

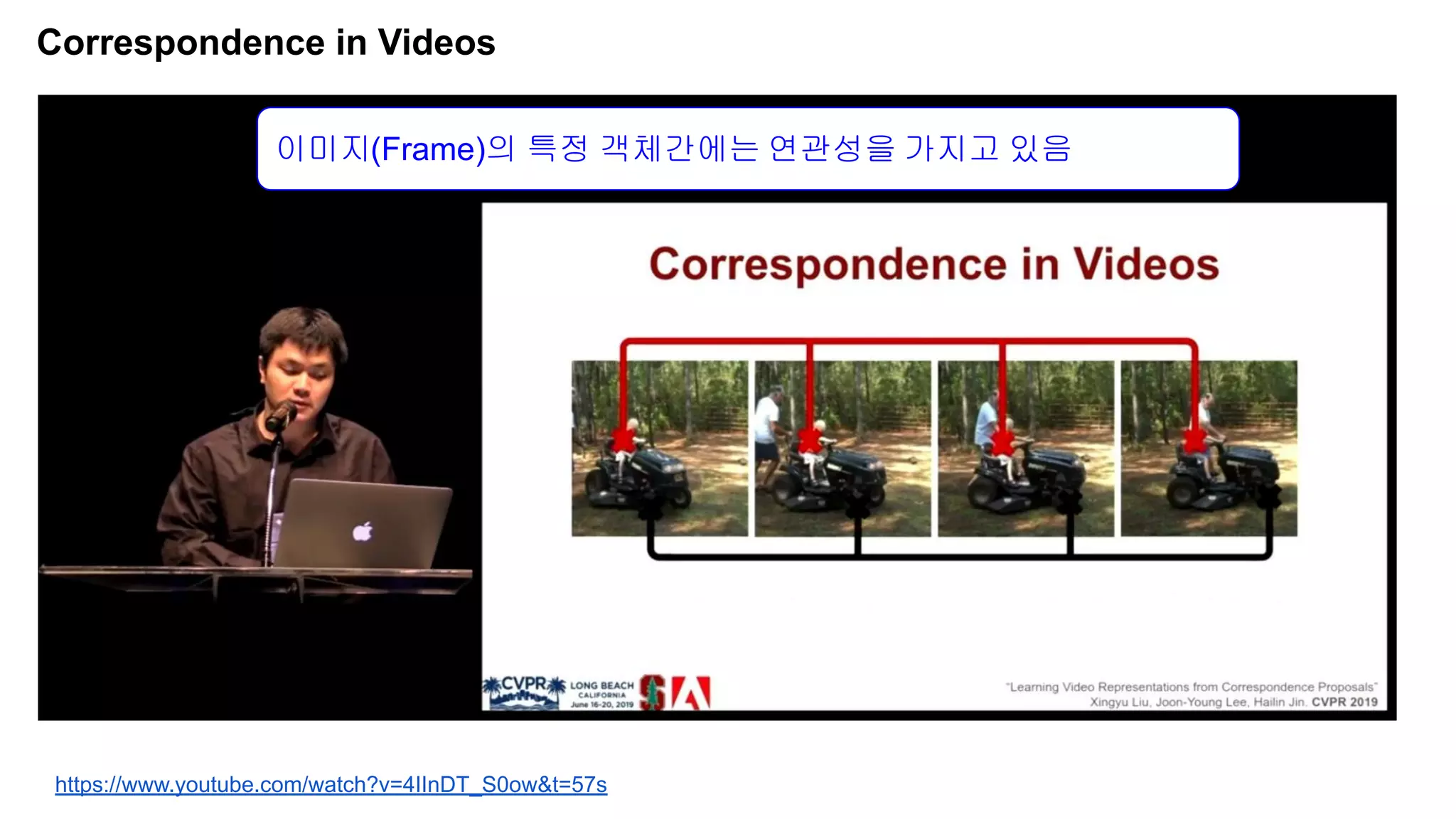

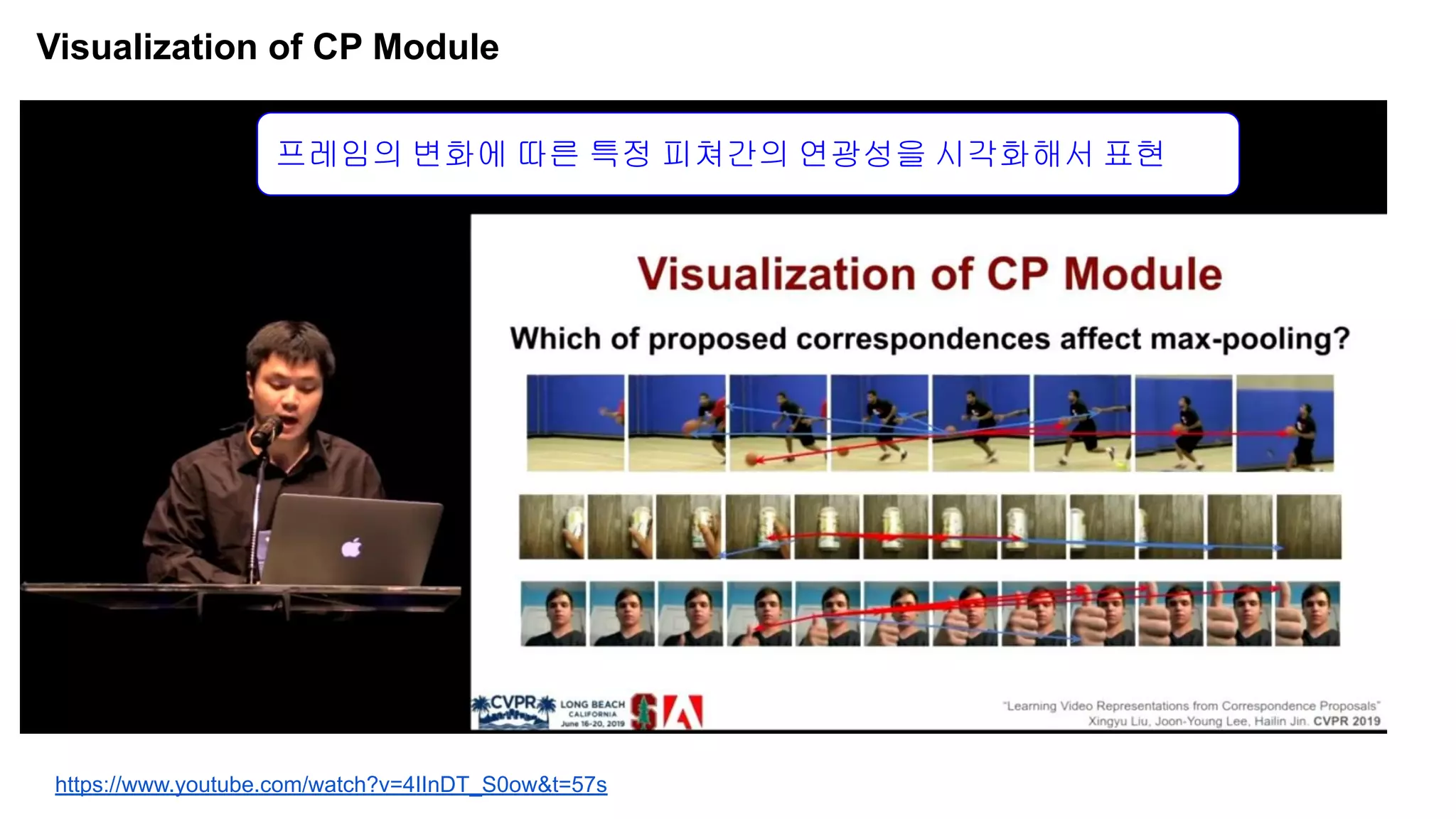

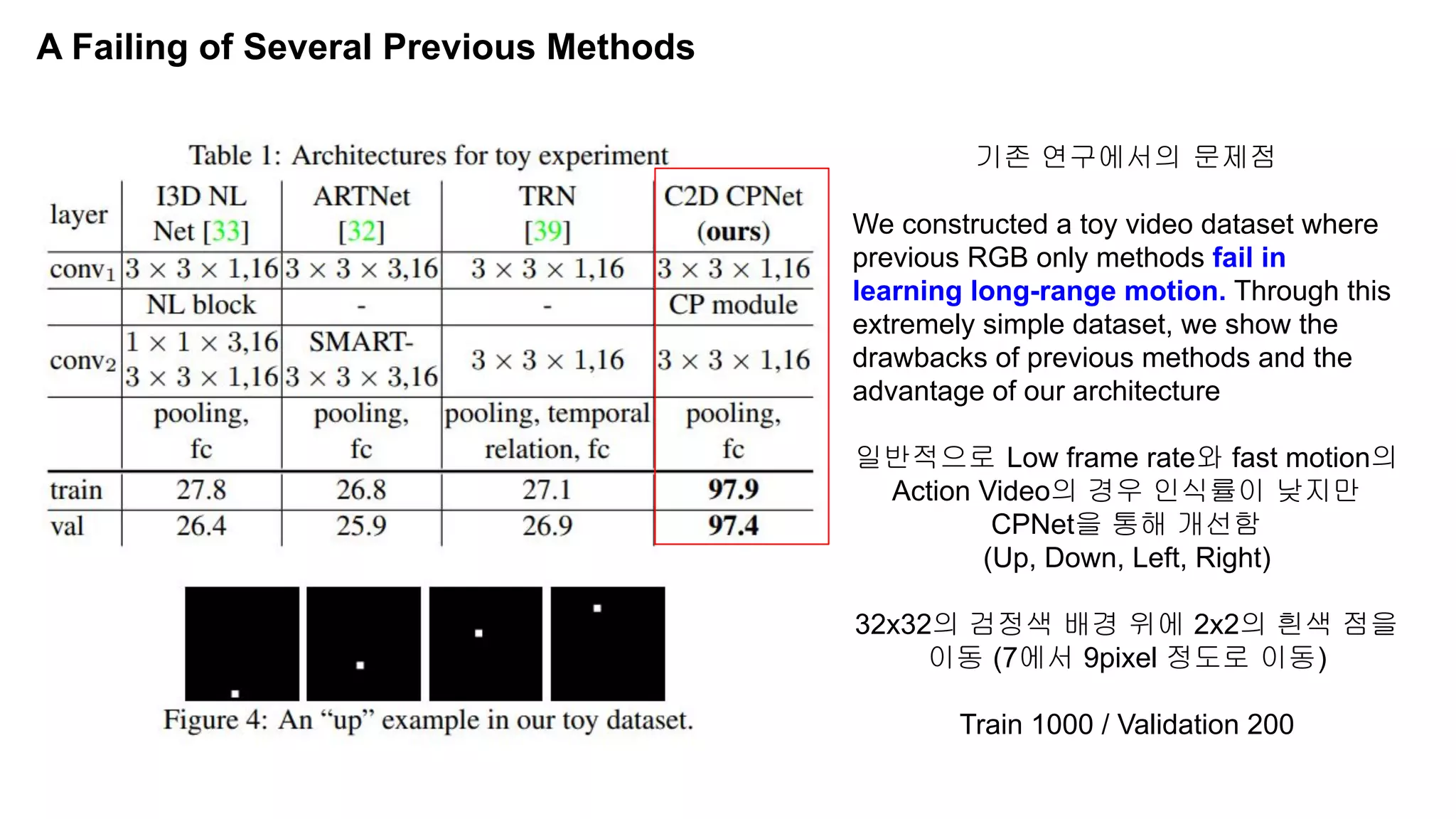

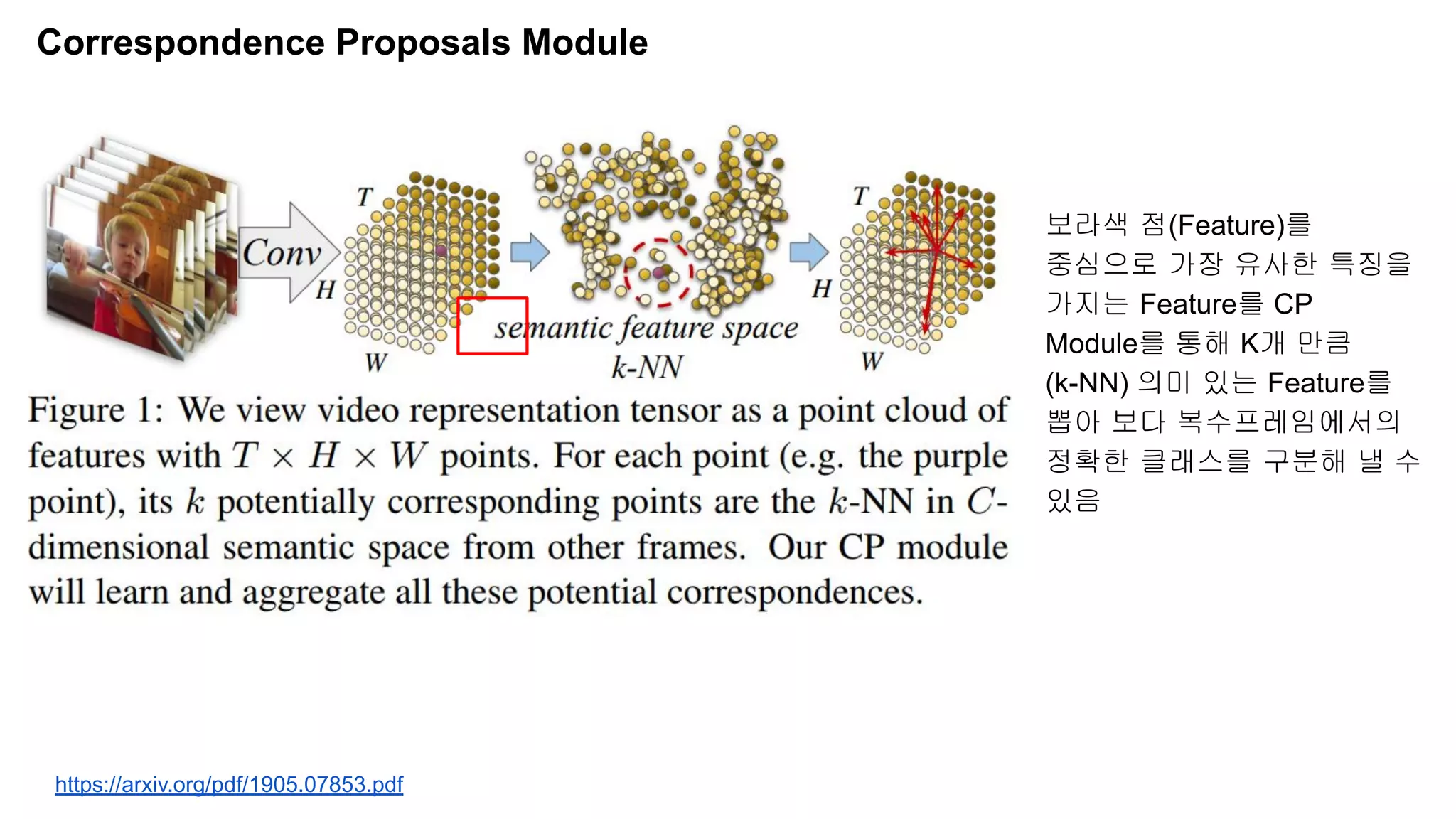

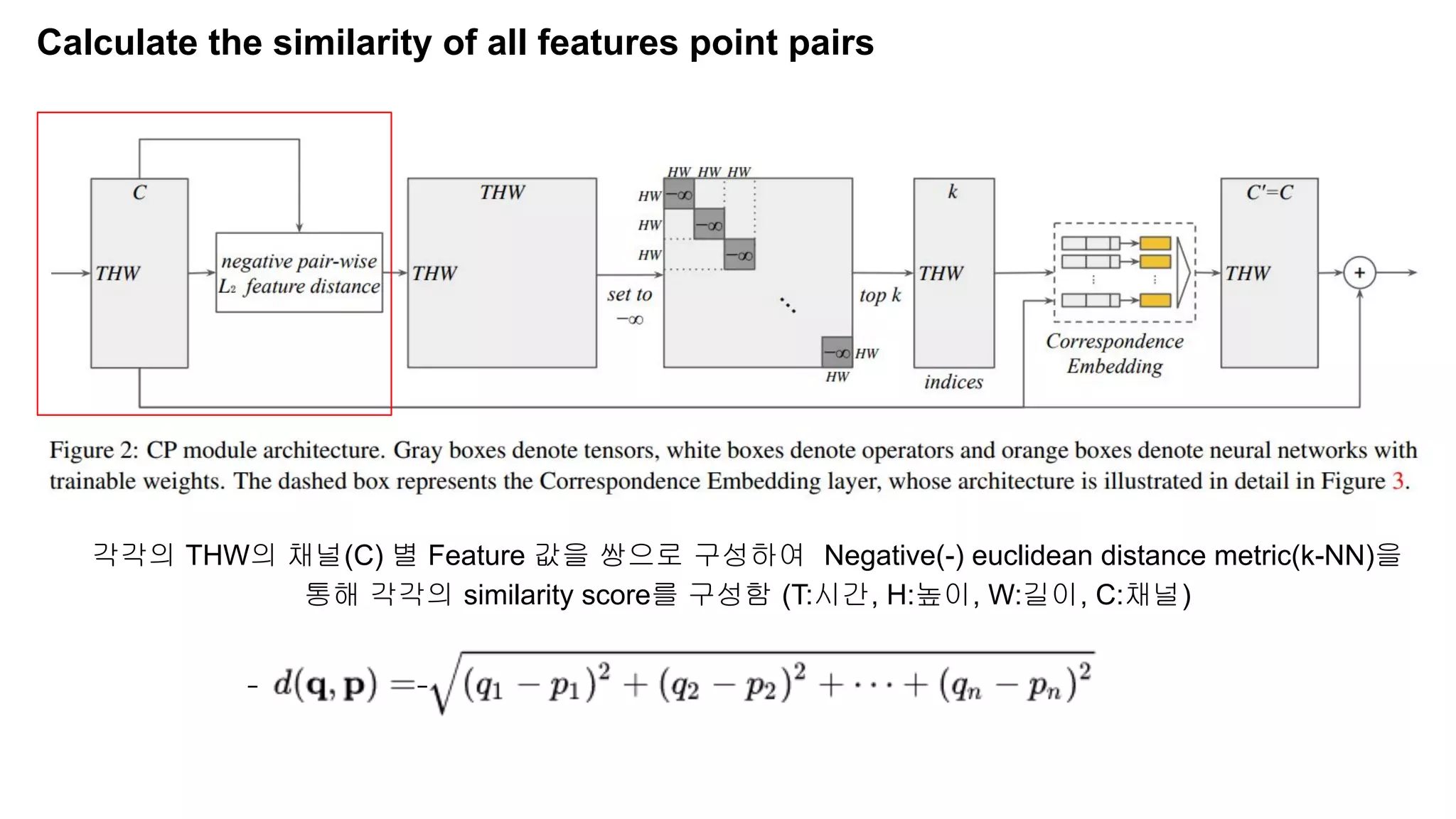

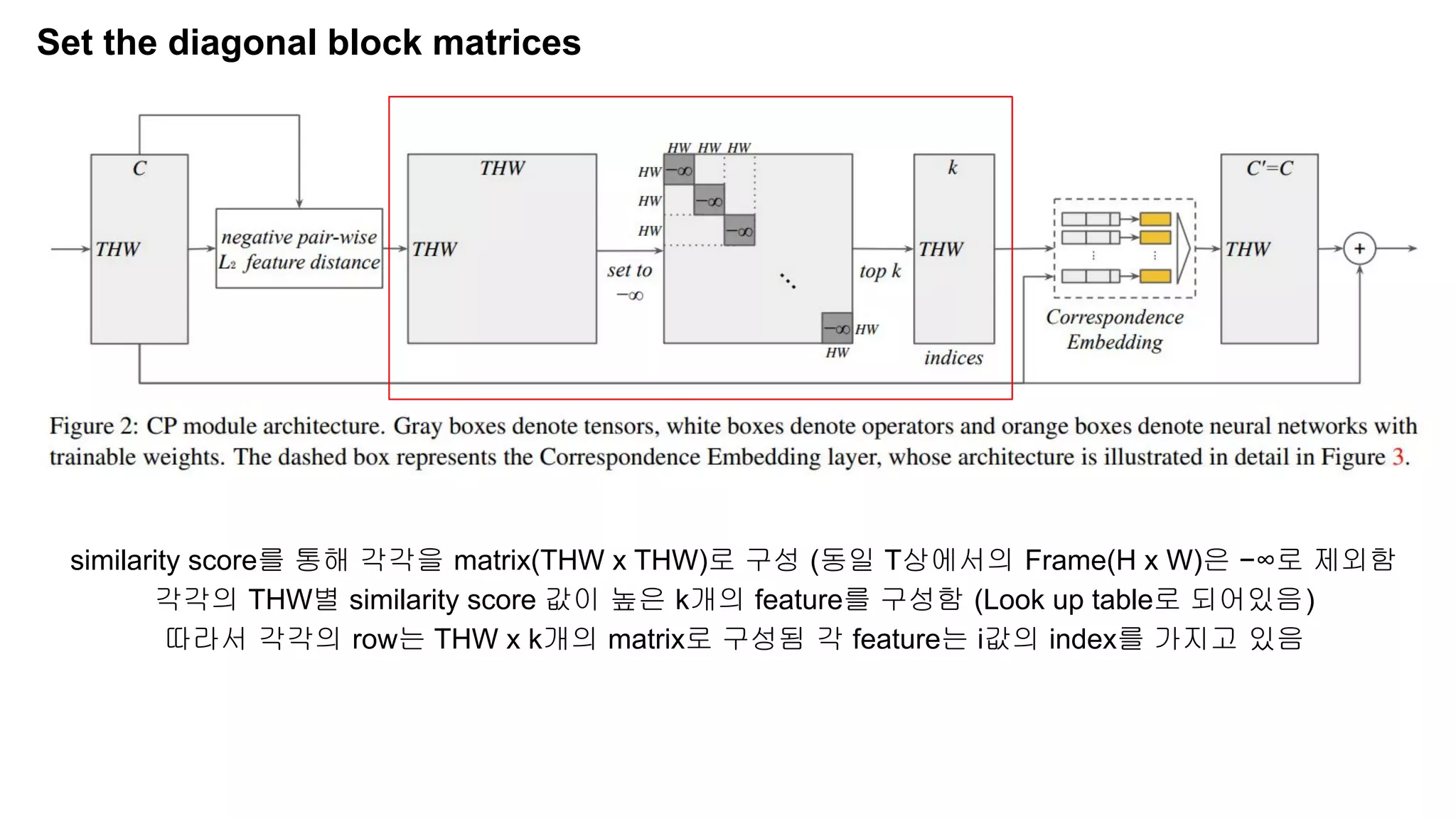

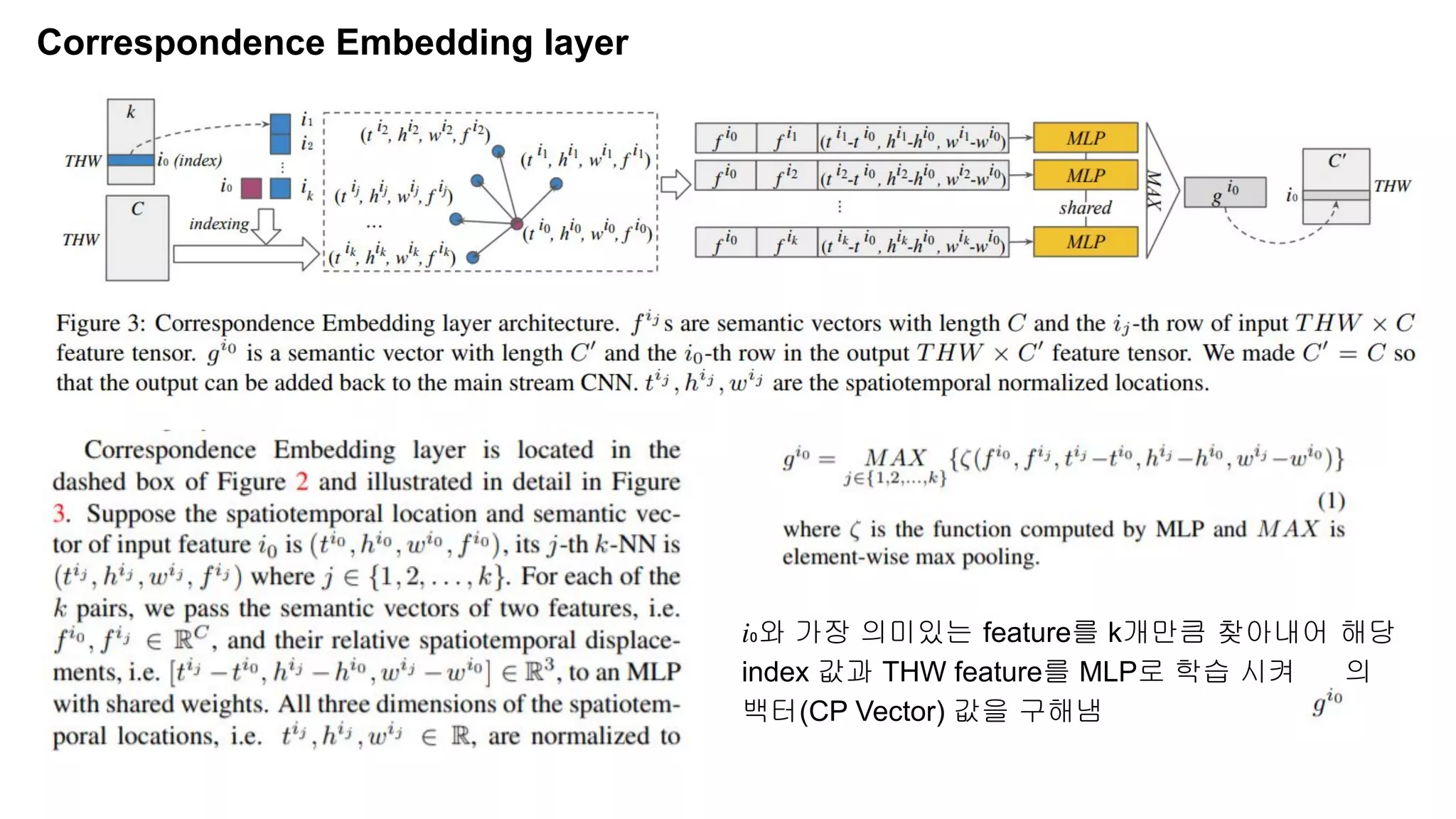

The document covers deep learning for action recognition in video, focusing on the Kinetics dataset and on CPNet, a network built around a correspondence proposal (CP) module. It compares conventional RGB-based methods with the proposed architecture, which is reported to improve long-range motion recognition and to achieve state-of-the-art results. It also describes the architecture and its implementation, including performance metrics and processing speeds on several computing setups.

![CP Modules Codes](https://image.slidesharecdn.com/paperlearningvideorepresentationsfromcorrespondenceproposals-210410235049/75/Paper-learning-video-representations-from-correspondence-proposals-13-2048.jpg)

    import tensorflow as tf  # TensorFlow 1.x API (tf.contrib, tf.variable_scope)

    # knn, tf_grouping, tf_util and get_coord are project helpers not shown on the slide.

    def cp_module(video, k, mlp, scope, mlp0=None, is_training=None, bn_decay=None,
                  weight_decay=None, data_format='NHWC', distance='l2',
                  activation_fn=None, shrink_ratio=None, freeze_bn=False):
        # The slide omits the setup that defines net, batch_size, num_frames,
        # new_height, new_width, num_channels, num_channels_bottleneck and end_points.

        # Indices of the k nearest neighbours of every position.
        nn_idx = knn.knn(net, k, new_height * new_width)

        # Each position's own feature repeated k times, next to its k neighbours' features.
        net_expand = tf.tile(tf.expand_dims(net, axis=2), [1, 1, k, 1])
        net_grouped = tf_grouping.group_point(net, nn_idx)

        # Coordinates of every position and the displacement to each neighbour.
        coord = get_coord(tf.reshape(video, [batch_size, -1, new_height, new_width,
                                             num_channels_bottleneck]))
        coord_expand = tf.tile(tf.expand_dims(coord, axis=2), [1, 1, k, 1])
        coord_grouped = tf_grouping.group_point(coord, nn_idx)
        coord_diff = coord_grouped - coord_expand
        end_points['coord'] = {'coord': coord, 'coord_grouped': coord_grouped,
                               'coord_diff': coord_diff}

        # One row per (position, neighbour) pair: displacement, own feature, neighbour feature.
        net = tf.concat([coord_diff, net_expand, net_grouped], axis=-1)

        # Shared MLP over every pair, implemented as 1x1 convolutions.
        with tf.variable_scope(scope) as sc:
            for i, num_out_channel in enumerate(mlp):
                net = tf_util.conv2d(net, num_out_channel, [1, 1], padding='VALID',
                                     stride=[1, 1], bn=True, is_training=is_training,
                                     scope='conv%d' % (i), bn_decay=bn_decay,
                                     weight_decay=weight_decay,
                                     data_format=data_format, freeze_bn=freeze_bn)

        # Max-pool over the k neighbours, then restore the video layout.
        end_points['before_max'] = net
        net = tf.reduce_max(net, axis=[2], keepdims=True, name='maxpool')
        end_points['after_max'] = net
        net = tf.reshape(net, [batch_size, num_frames, new_height, new_width, mlp[-1]])

        # Final 1x1x1 convolution, then batch norm whose gamma is initialised to 0.
        with tf.variable_scope(scope) as sc:
            net = tf_util.conv3d(net, num_channels, [1, 1, 1], stride=[1, 1, 1],
                                 bn=False, activation_fn=None,
                                 weight_decay=weight_decay, scope='conv_final')
            net = tf.contrib.layers.batch_norm(
                net, center=True, scale=True,
                is_training=is_training if not freeze_bn
                else tf.constant(False, shape=(), dtype=tf.bool),
                decay=bn_decay, updates_collections=None, scope='bn_final',
                data_format=data_format,
                param_initializers={'gamma': tf.constant_initializer(0., dtype=tf.float32)},
                trainable=not freeze_bn)

        return net, end_points
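The data flow of the CP module code (neighbour search, grouping, coordinate difference, concatenation, max over `k`) can be followed on a toy example without TensorFlow. The sketch below is a simplified illustration in plain Python, not the authors' implementation. The names `knn_other_frames`, `cp_module_sketch` and `embed` are hypothetical. It assumes neighbours are searched by L2 distance in feature space and only in other frames, and it replaces the learned shared MLP with an identity `embed` function.

```python
import math


def knn_other_frames(features, frame_ids, k):
    """For each point, return the indices of its k nearest points by L2
    distance in feature space, restricted to points from other frames
    (an assumption of this sketch)."""
    neighbours = []
    for i, f_i in enumerate(features):
        candidates = [
            (math.dist(f_i, f_j), j)
            for j, f_j in enumerate(features)
            if frame_ids[j] != frame_ids[i]
        ]
        candidates.sort()
        neighbours.append([j for _, j in candidates[:k]])
    return neighbours


def cp_module_sketch(features, coords, frame_ids, k, embed=lambda v: v):
    """Build k proposals per point and max-pool over them.

    Each proposal is [coordinate displacement, own feature, neighbour feature].
    `embed` is a hypothetical stand-in for the learned shared MLP.
    """
    neighbours = knn_other_frames(features, frame_ids, k)
    pooled = []
    for i, idx in enumerate(neighbours):
        proposals = []
        for j in idx:
            diff = [cj - ci for ci, cj in zip(coords[i], coords[j])]
            proposals.append(embed(diff + list(features[i]) + list(features[j])))
        # Element-wise max over the k proposals of this point.
        pooled.append([max(column) for column in zip(*proposals)])
    return pooled


# Toy input: two frames with two positions each; coordinates are (t, y, x).
features = [[0.0, 0.0], [1.0, 1.0], [0.1, 0.0], [5.0, 5.0]]
coords = [(0, 0, 0), (0, 0, 1), (1, 0, 1), (1, 0, 0)]
frame_ids = [0, 0, 1, 1]

proposals = cp_module_sketch(features, coords, frame_ids, k=2)
```

Each entry of `proposals` has length 3 + 2 + 2: the displacement, the point's own feature and the neighbour feature, each reduced by an element-wise max over the `k` neighbours. The slide's code applies the shared MLP to every pair before this max.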