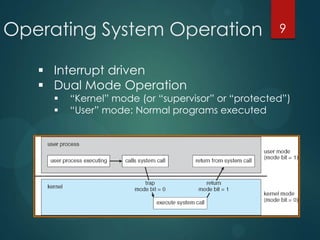

The document provides an overview of operating systems, including what constitutes an OS (kernel, system programs, application programs), storage device hierarchy, system calls, process creation and states, process scheduling, inter-process communication methods like shared memory and pipes, synchronization techniques like mutexes and semaphores, readers-writers problem, and potential for deadlocks. Key concepts covered include kernel mode vs user mode, process control blocks, context switching, preemption, and requirements for deadlock situations.