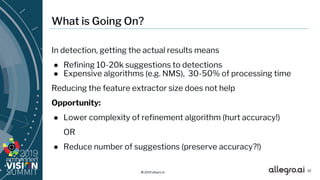

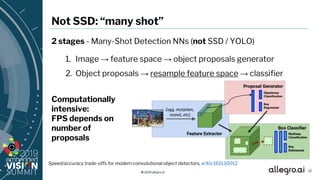

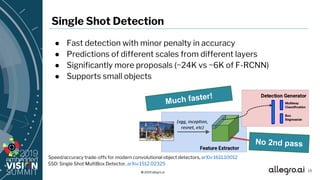

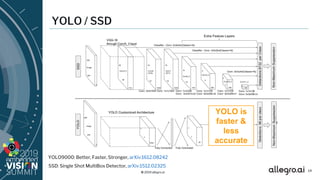

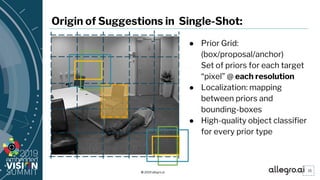

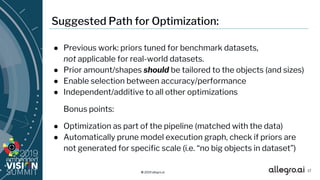

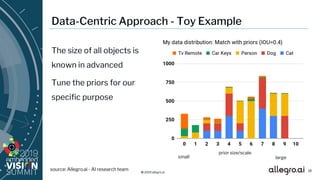

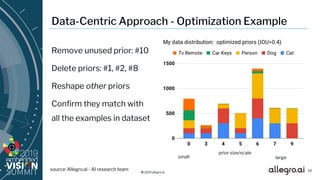

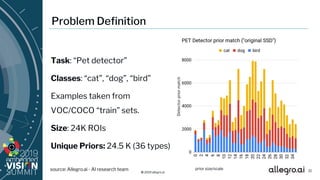

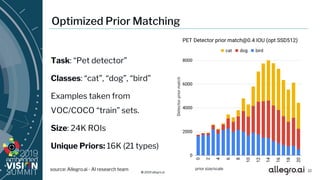

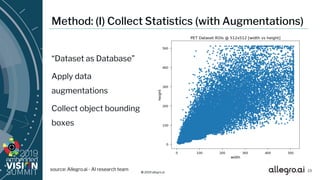

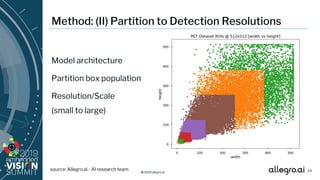

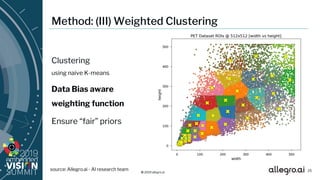

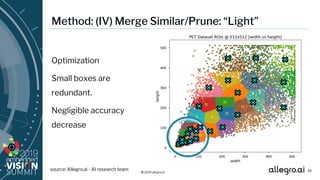

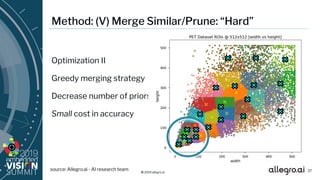

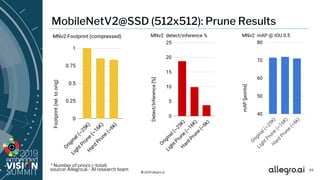

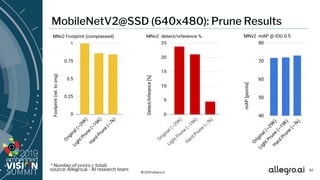

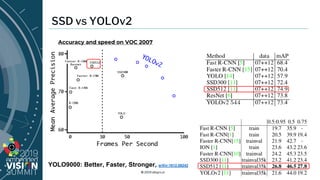

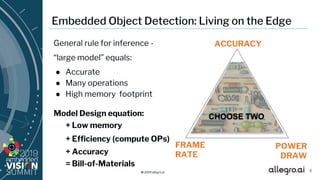

The presentation covers optimizing Single Shot Detector (SSD) models for low-power embedded applications, balancing accuracy against memory footprint and operation count. It emphasizes data-driven optimization, in particular tuning the detection algorithm and prior matching to the target dataset. It also outlines future work: automating the pruning process and extending the models to instance segmentation.
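Prior matching in SSD-style detectors is commonly driven by intersection-over-union (IoU) between the priors (default boxes) and the ground-truth boxes. The sketch below is a minimal illustration under stated assumptions, not the presenters' method: it covers only the threshold-based part of matching and adds a simple dataset-level diagnostic. The names `match_priors` and `unmatched_fraction`, the corner-format boxes, and the 0.5 threshold are all hypothetical choices made for this example.

```python
from typing import List, Tuple

# Boxes are (x_min, y_min, x_max, y_max); this format is an assumption.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_priors(priors: List[Box], gt_boxes: List[Box],
                 threshold: float = 0.5) -> List[int]:
    """For each prior, return the index of the best-overlapping ground-truth
    box with IoU >= threshold, or -1 if the prior is treated as background."""
    matches = []
    for prior in priors:
        best_idx, best_iou = -1, threshold
        for idx, gt in enumerate(gt_boxes):
            overlap = iou(prior, gt)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        matches.append(best_idx)
    return matches


def unmatched_fraction(priors: List[Box], gt_boxes: List[Box],
                       threshold: float = 0.5) -> float:
    """Fraction of ground-truth boxes that no prior matches. A high value
    suggests the prior shapes fit this dataset poorly."""
    if not gt_boxes:
        return 0.0
    matched = {m for m in match_priors(priors, gt_boxes, threshold) if m >= 0}
    return 1.0 - len(matched) / len(gt_boxes)
```

Running `unmatched_fraction` over a sample of a dataset's annotations is one way to check whether a given set of prior sizes and aspect ratios suits that dataset before training.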

![Slide 6: Detection: Towards Embedded Applications](https://image.slidesharecdn.com/t1t4guttmannallegroai2019-190718202707/85/Optimizing-SSD-Object-Detection-for-Low-power-Devices-a-Presentation-from-Allegro-6-320.jpg)

Detection: Towards Embedded Applications

1. Function split: [feature extractor] + [detection heads]
2. Multiple heads for different tasks → Shared feature extractor
3. Single-Shot models → Execution path is not dynamic
4. Use weak feature extractors → Low operations count
5. Optional: model quantization → Performance boost, optimized

☹ DLCV “state of the art” == High memory footprint & low FPS

© 2019 allegro.ai
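The points on this slide can be illustrated with a small sketch. It is a toy, pure-Python stand-in rather than the presenters' model: one cheap feature extractor runs once per input, several heads reuse its output in a fixed pass with no data-dependent branching, and weights are optionally quantized. All names here (`tiny_feature_extractor`, `objectness_head`, `peak_head`, `MultiHeadModel`, `quantize_int8`) are hypothetical, and symmetric per-tensor int8 is only one assumed quantization scheme; the slide does not say which one was used.

```python
from typing import Callable, Dict, List, Tuple

Features = List[float]


def tiny_feature_extractor(image: List[float]) -> Features:
    """Toy stand-in for a weak (low-op) backbone: average each input pair."""
    return [(image[i] + image[i + 1]) / 2.0
            for i in range(0, len(image) - 1, 2)]


def objectness_head(features: Features) -> List[float]:
    """Toy head: one score per feature cell, clipped to [0, 1]."""
    return [min(1.0, max(0.0, f)) for f in features]


def peak_head(features: Features) -> List[float]:
    """Toy second task on the same features: index of the strongest cell."""
    return [float(max(range(len(features)), key=lambda i: features[i]))]


class MultiHeadModel:
    """Shared feature extractor plus several heads, run in one fixed pass."""

    def __init__(self, extractor: Callable[[List[float]], Features],
                 heads: Dict[str, Callable[[Features], List[float]]]):
        self.extractor = extractor
        self.heads = heads
        self.extractor_calls = 0  # counts how often the backbone runs

    def __call__(self, image: List[float]) -> Dict[str, List[float]]:
        self.extractor_calls += 1
        features = self.extractor(image)  # computed once, reused by all heads
        # Every head always runs on the same features: no dynamic branching.
        return {name: head(features) for name, head in self.heads.items()}


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the int8 range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in quantized]


model = MultiHeadModel(
    tiny_feature_extractor,
    {"objectness": objectness_head, "peak": peak_head},
)
outputs = model([0.0, 0.2, 0.8, 1.0])  # one backbone pass feeds both heads
```

Adding a task in this layout means adding one entry to `heads`; the backbone cost stays the same, which is the appeal of the shared-extractor design for low-power targets.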