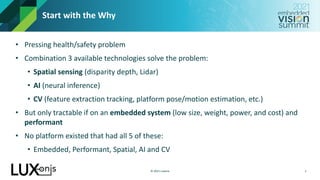

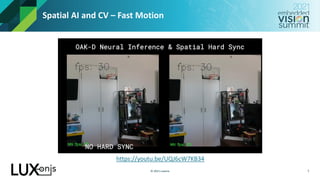

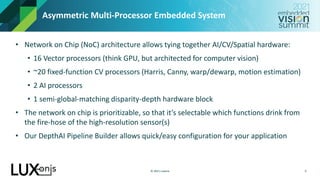

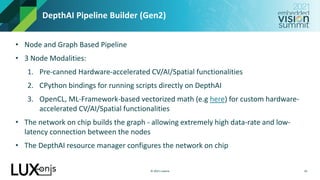

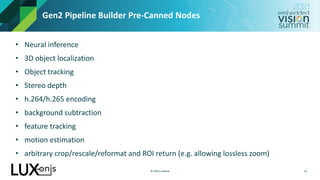

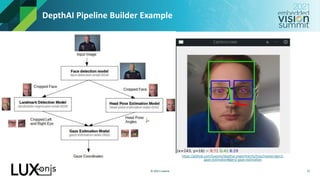

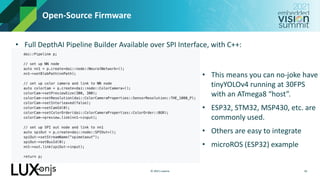

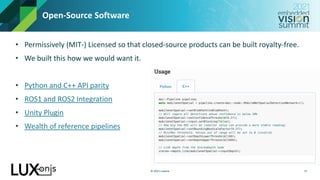

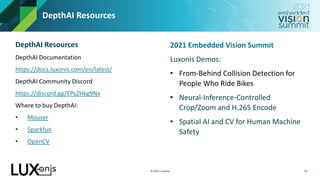

The document discusses implementing spatial AI and computer vision (CV) on embedded systems to address significant health and safety problems. It highlights the DepthAI platform, which integrates multiple processors to support high-performance, real-time object detection and tracking, and emphasizes its open-source nature and ease of integration. The platform opens up applications that were previously considered impractical because of hardware limitations.