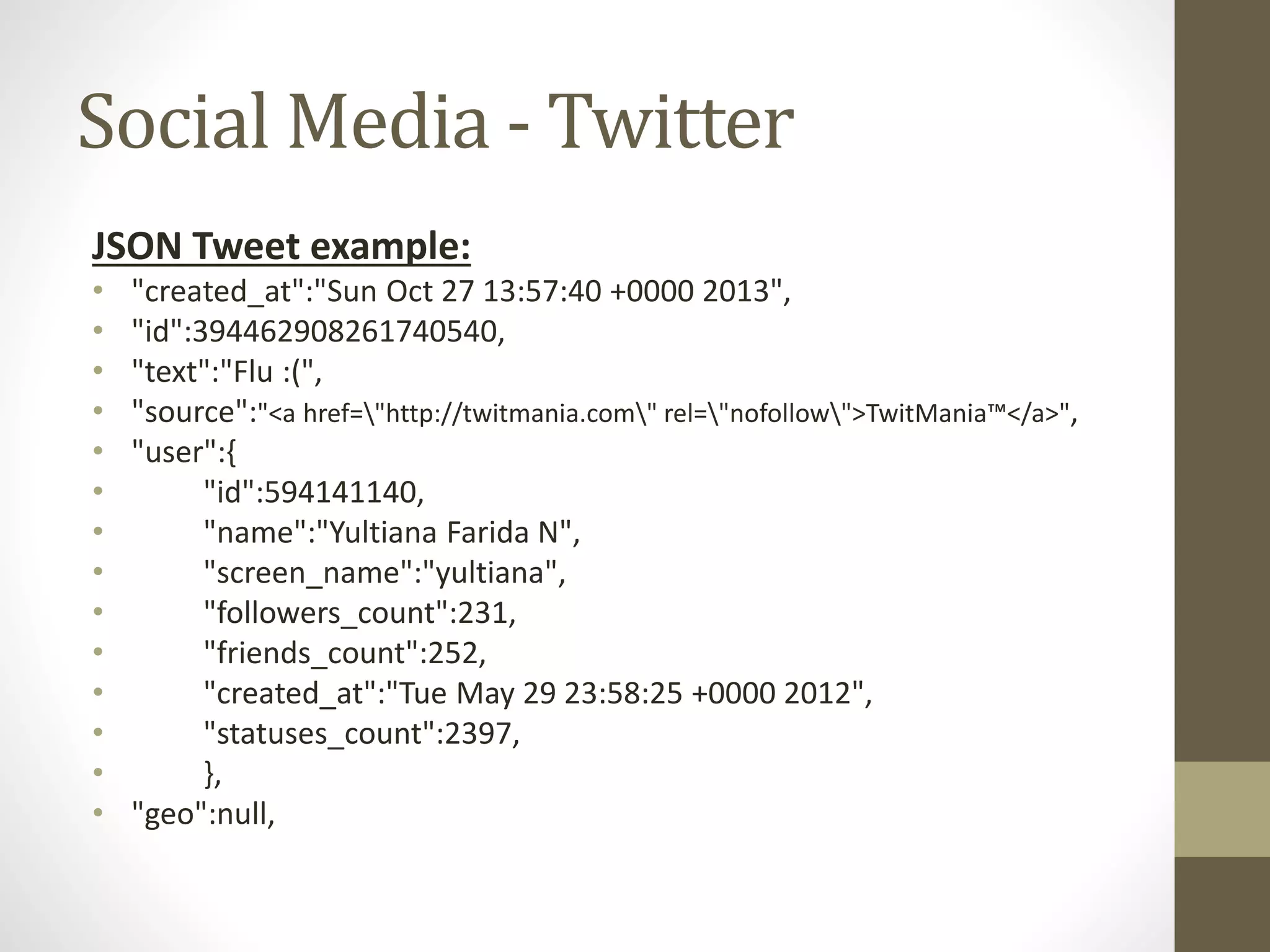

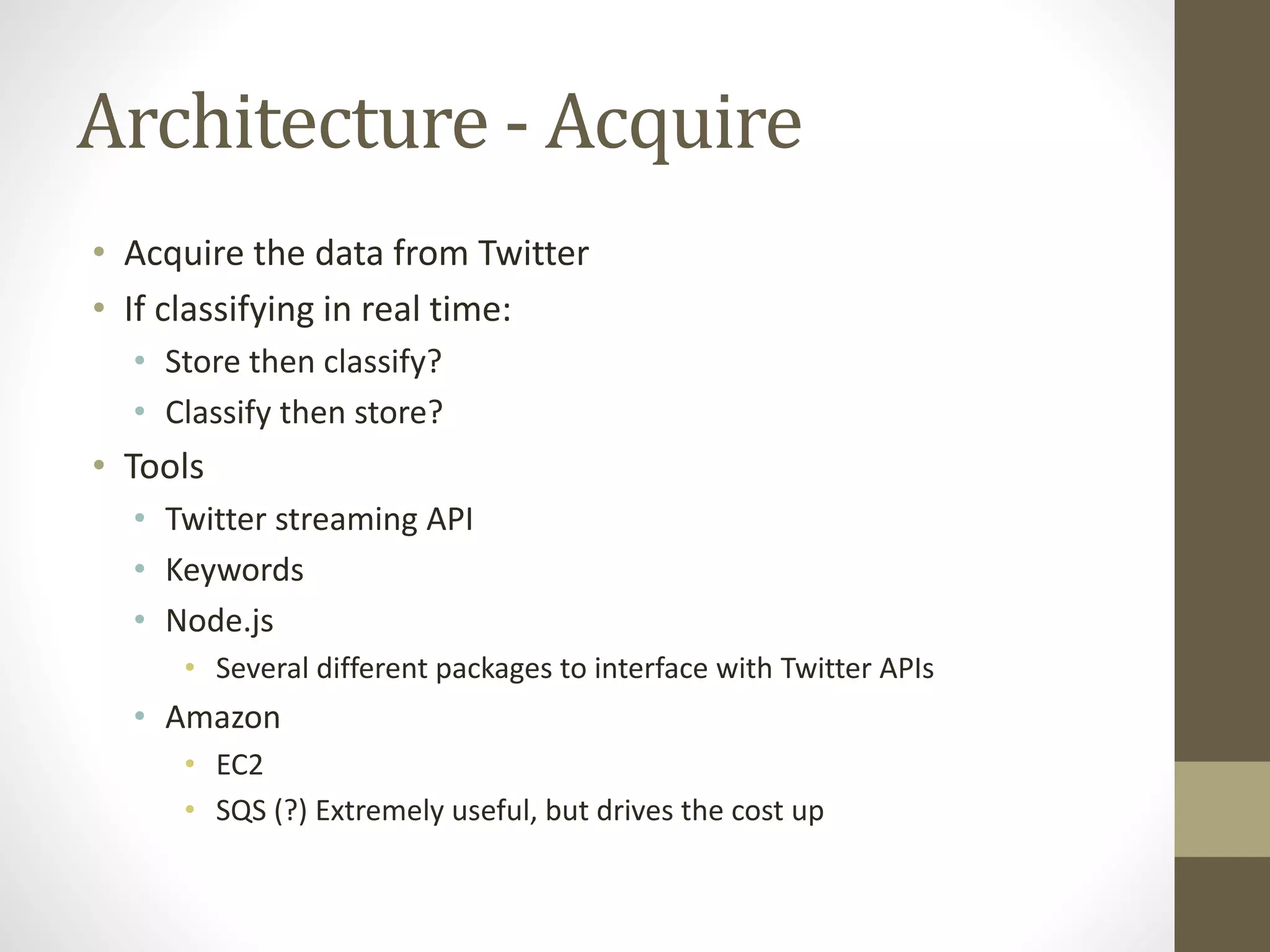

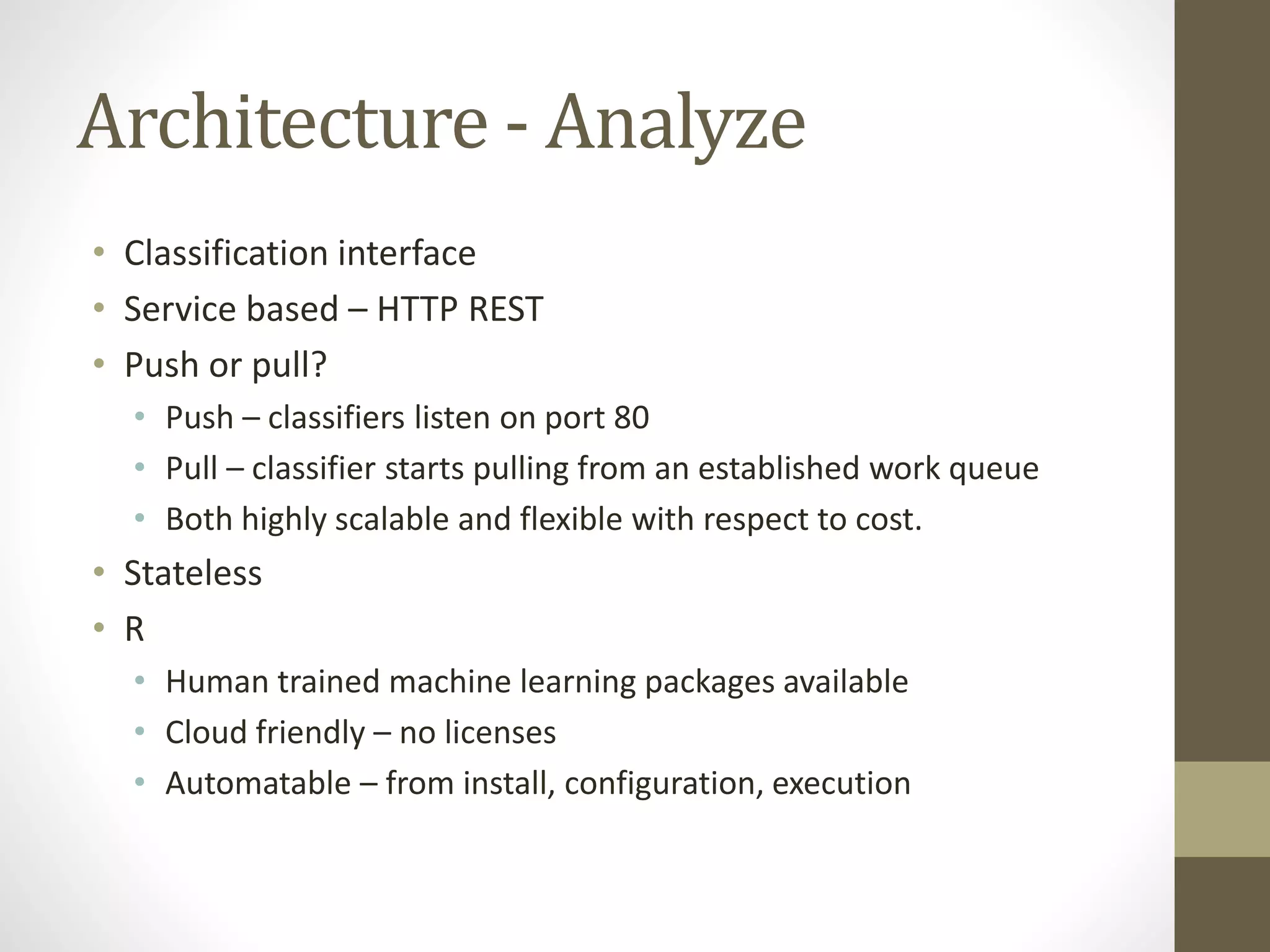

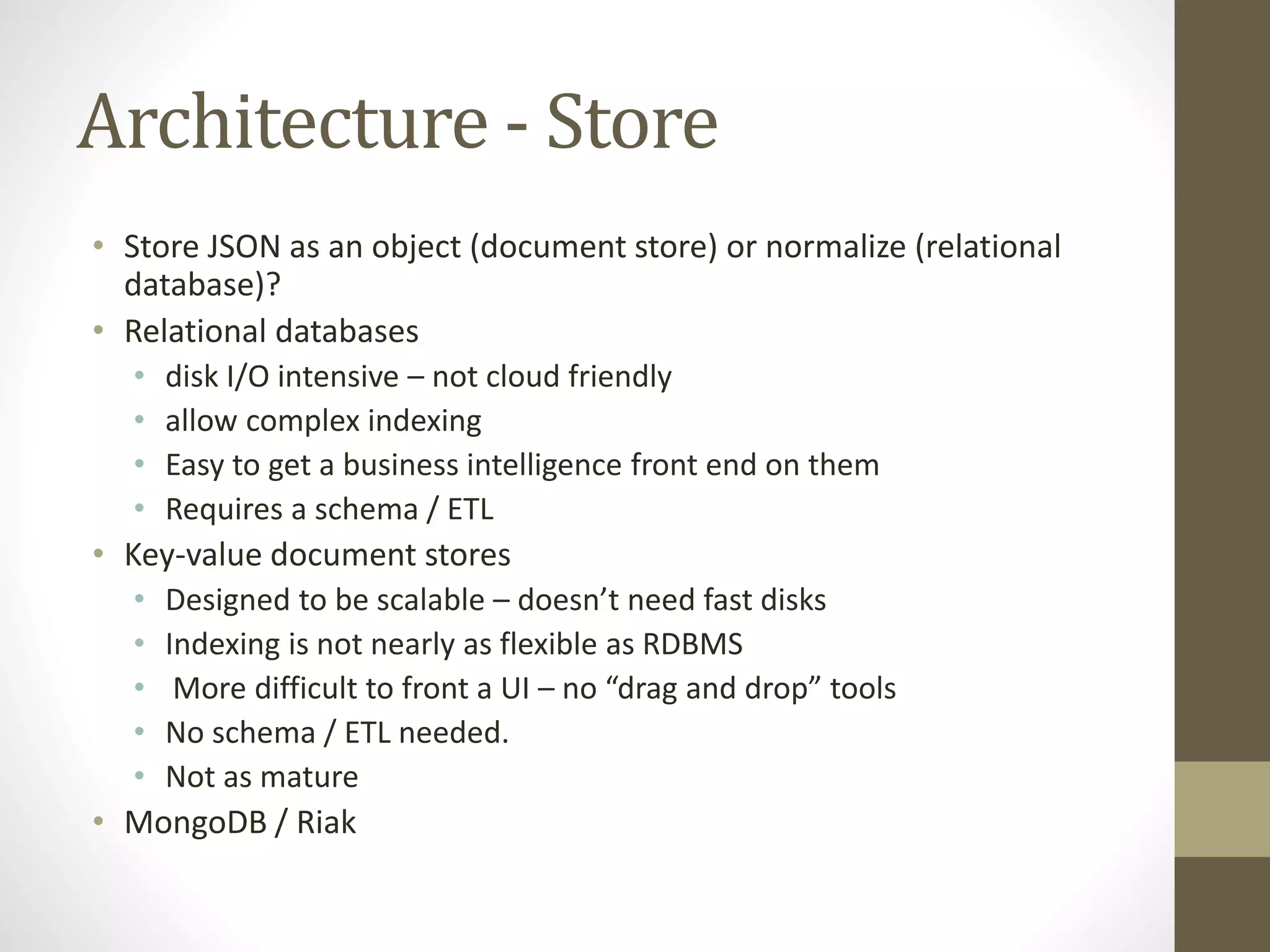

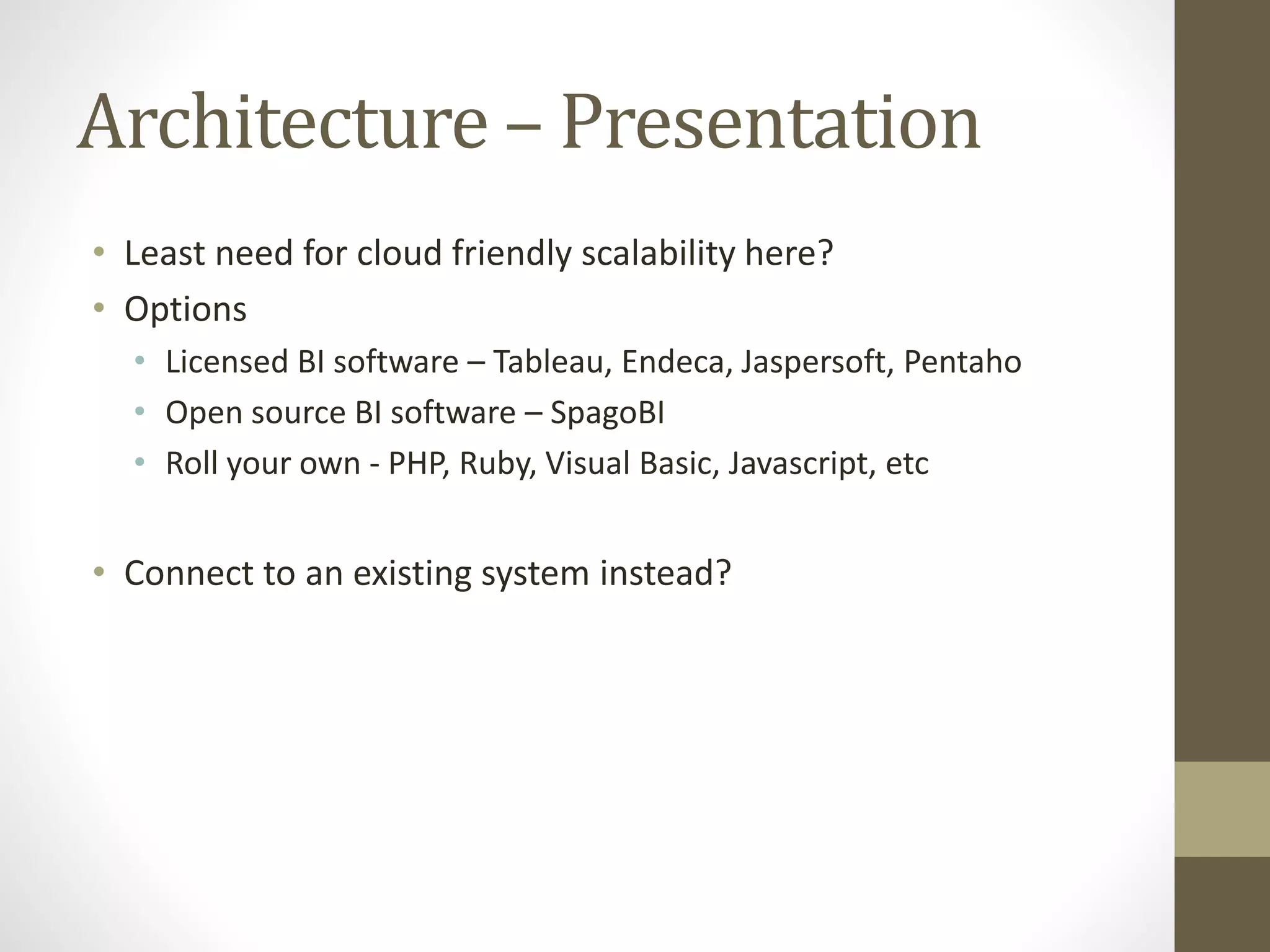

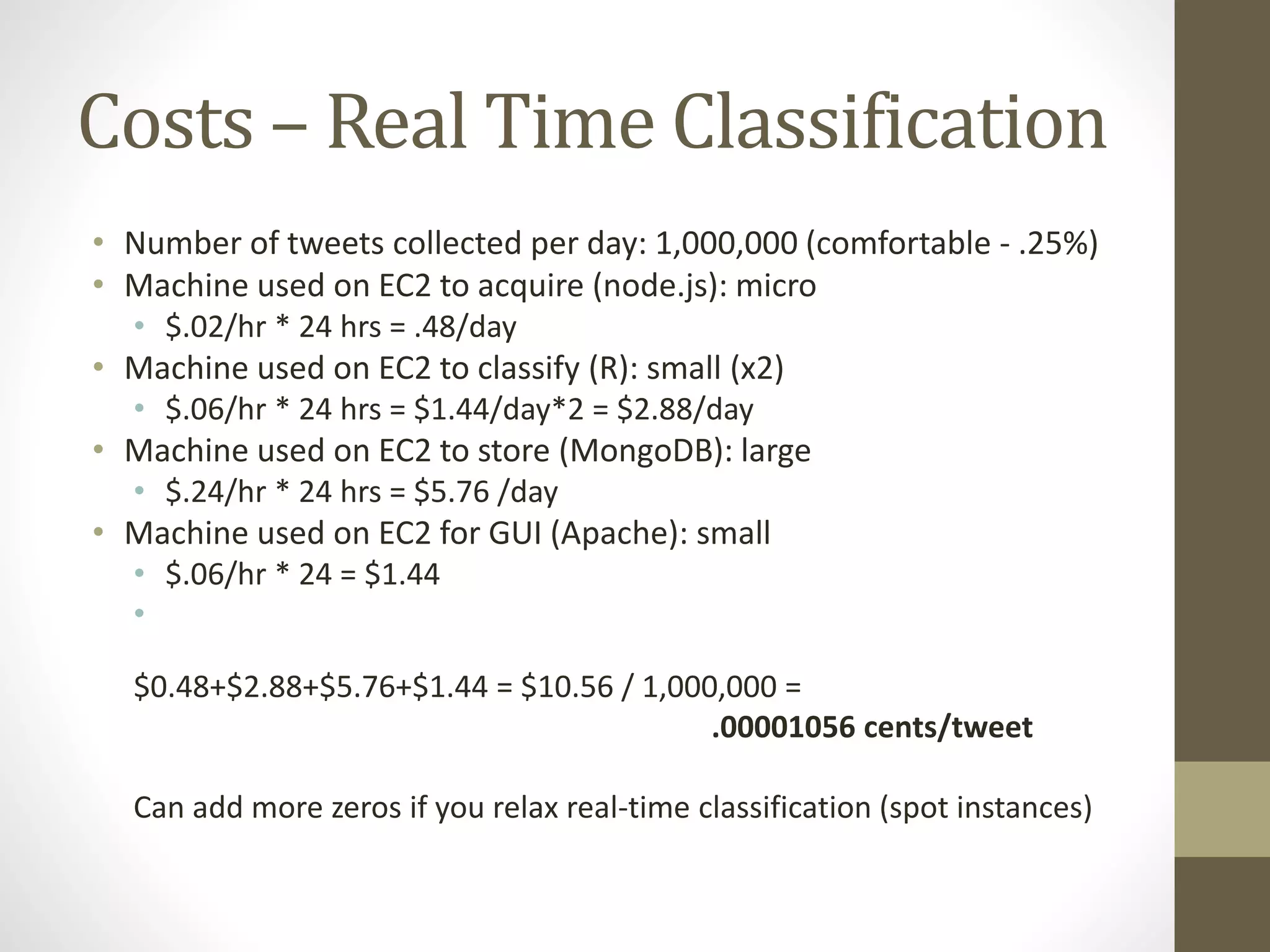

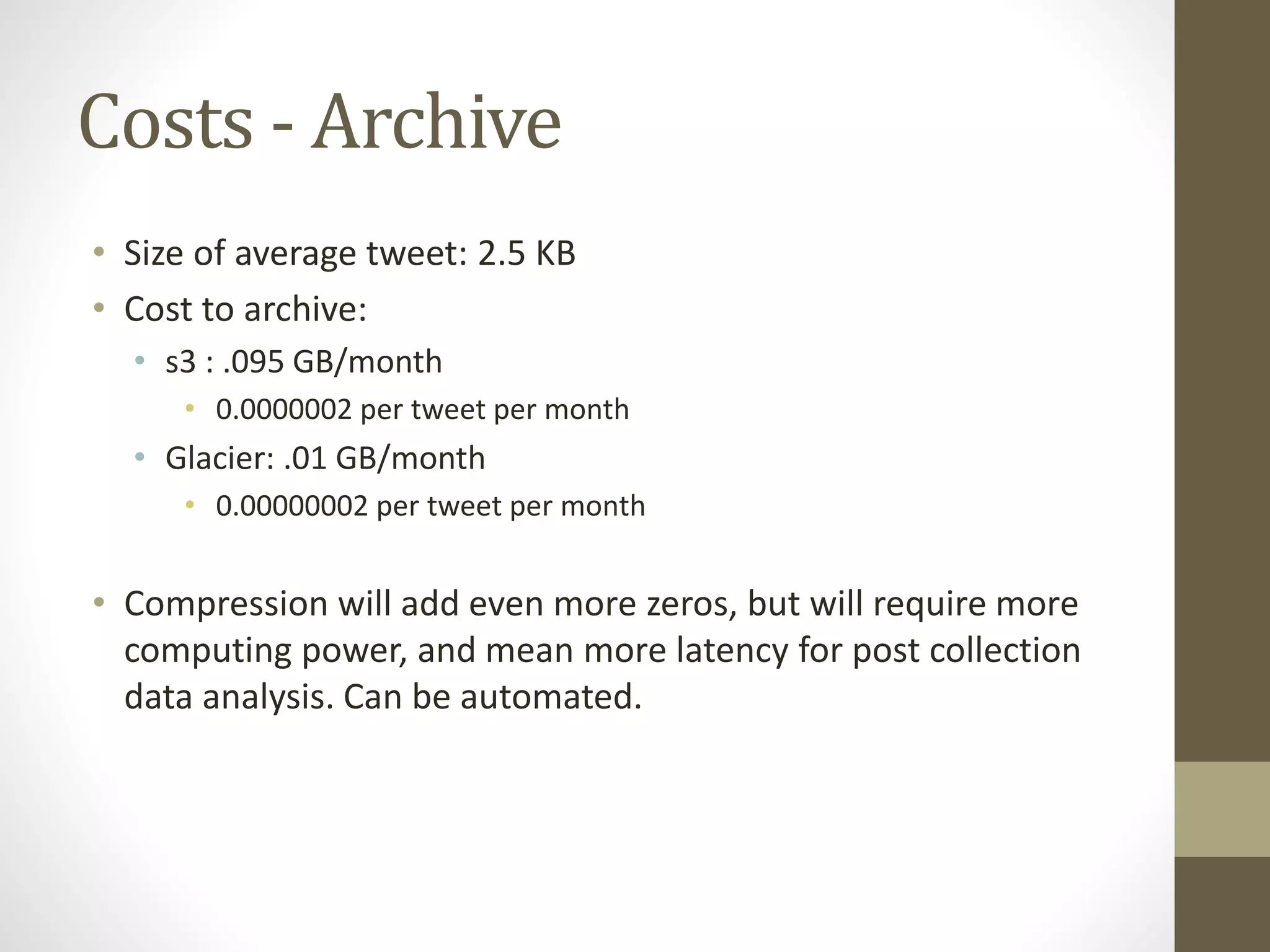

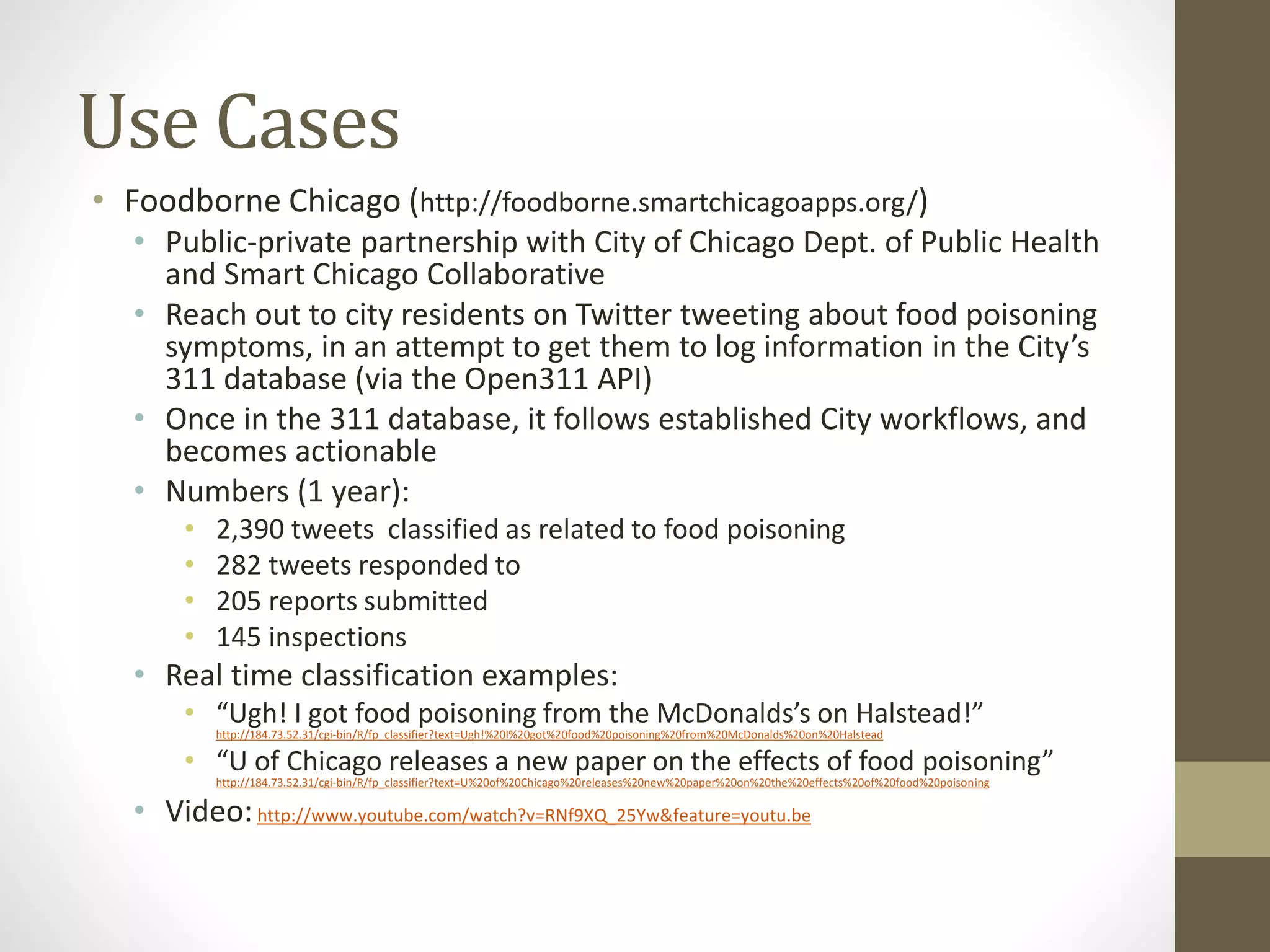

This document discusses using social media, cloud computing, machine learning, open source, and big data analytics to analyze Twitter data. It describes how to collect tweets using the Twitter API, classify tweets in real-time using machine learning models on AWS, store classified tweets in MongoDB on AWS, and present results. Cost estimates for real-time classification of 1 million tweets per day are provided. Use cases described include tracking food poisoning reports and disease occurrence. Future directions discussed include developing turnkey services and linking to additional open data sources.