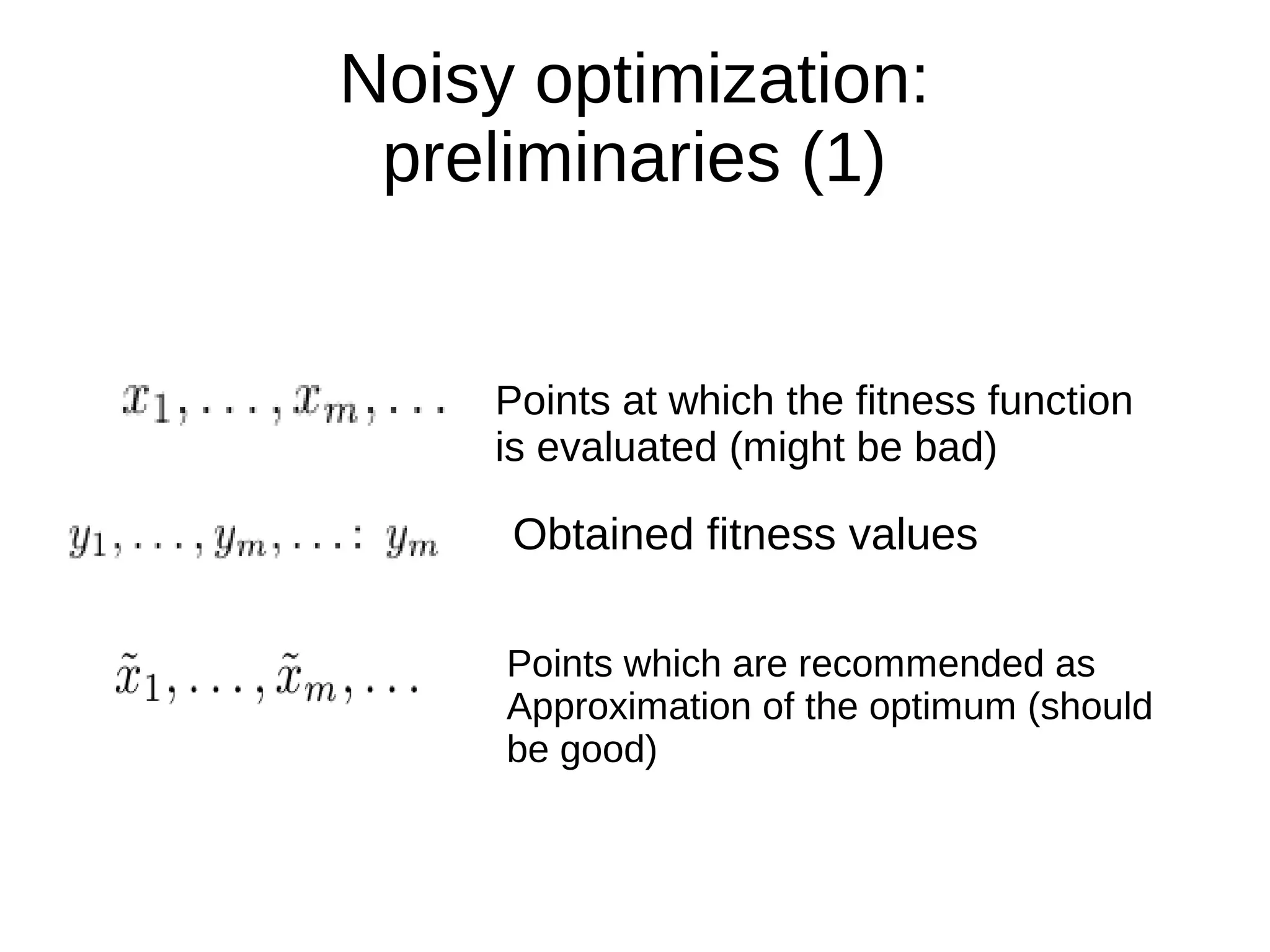

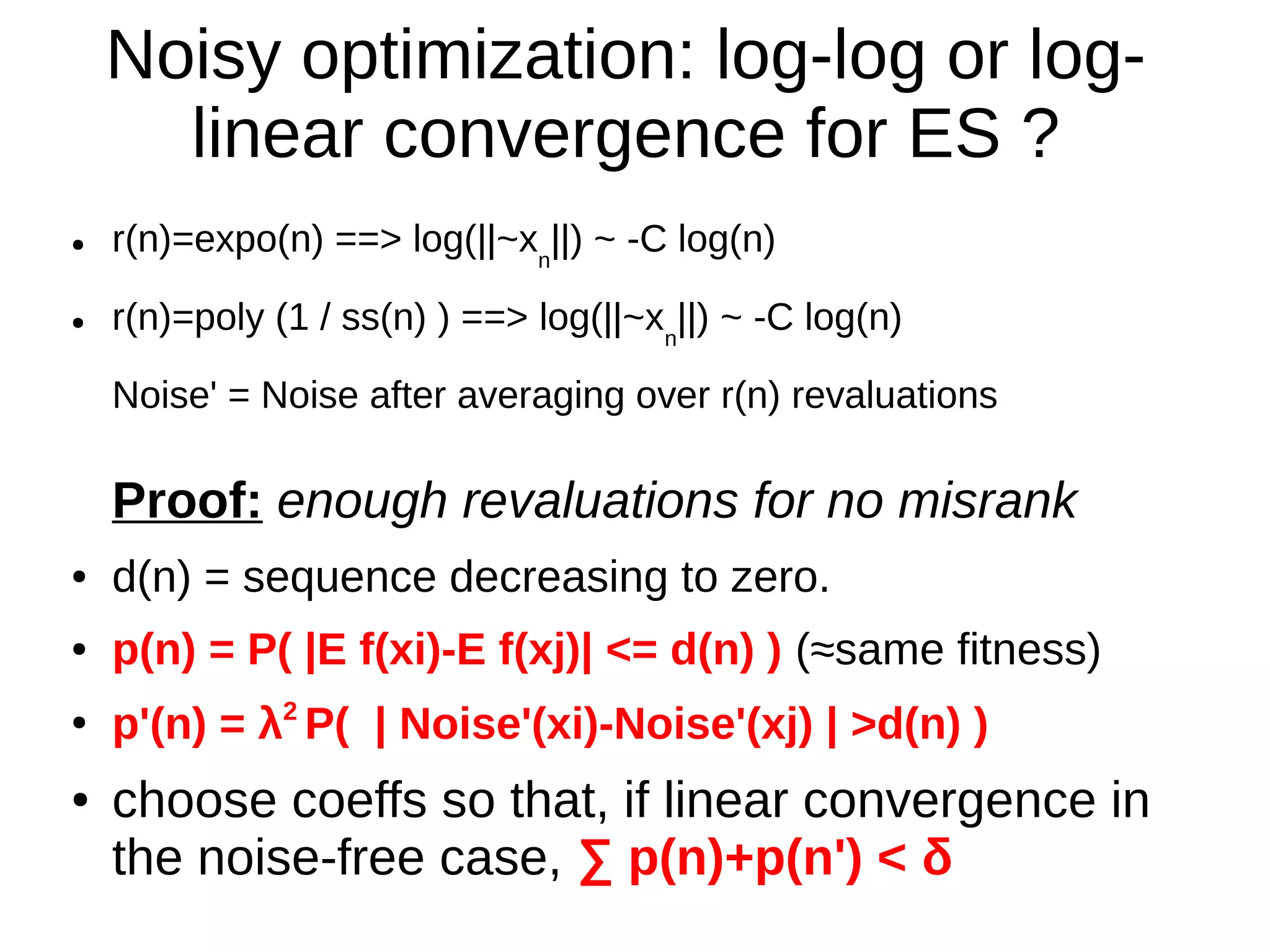

The document examines how noise affects optimization with evolutionary algorithms, covering runtime analysis and convergence rates in both discrete and continuous domains. It considers several noise models, log-log and log-linear convergence rates, and the role of reevaluation strategies, in which the noisy fitness of a candidate is sampled more than once. It concludes that the way candidates are sampled and the way noise is accounted for are both crucial to algorithm performance and to the complexity bounds that can be obtained.
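As a rough illustration of why reevaluation matters, the sketch below averages repeated noisy evaluations of a single point. The additive Gaussian noise model, the sphere objective, and all function names here are assumptions chosen for illustration; the summary above does not specify which noise models or objectives the document uses.

```python
import random
import statistics


def noisy_sphere(x, sigma, rng):
    """Sphere objective plus additive Gaussian noise (an assumed noise model)."""
    return sum(xi * xi for xi in x) + rng.gauss(0.0, sigma)


def averaged_fitness(x, sigma, reevaluations, rng):
    """Estimate the fitness of x by averaging several noisy evaluations."""
    samples = [noisy_sphere(x, sigma, rng) for _ in range(reevaluations)]
    return statistics.fmean(samples)


def estimate_spread(x, sigma, reevaluations, trials, rng):
    """Empirical standard deviation of the averaged fitness estimate."""
    estimates = [averaged_fitness(x, sigma, reevaluations, rng) for _ in range(trials)]
    return statistics.stdev(estimates)


if __name__ == "__main__":
    rng = random.Random(0)
    point = [1.0, 2.0]
    for r in (1, 10, 100):
        spread = estimate_spread(point, sigma=1.0, reevaluations=r, trials=500, rng=rng)
        print(f"reevaluations={r:3d}  spread of estimate={spread:.3f}")
```

Under this assumed noise model, the spread of the averaged estimate shrinks roughly as `sigma / sqrt(reevaluations)`, so each extra digit of precision costs about 100 times more evaluations. This trade-off between evaluation budget and estimate quality is one reason the sampling strategy influences the achievable convergence rate.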