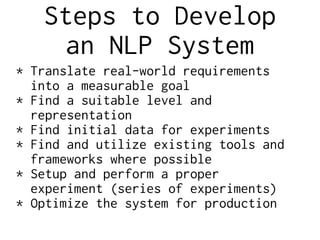

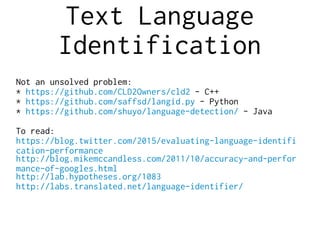

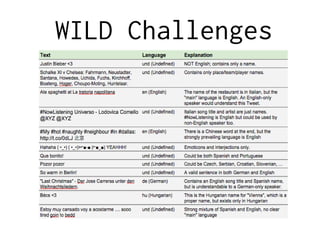

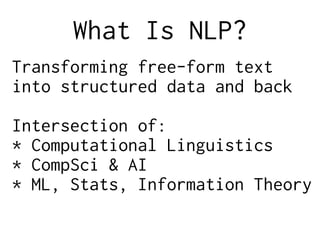

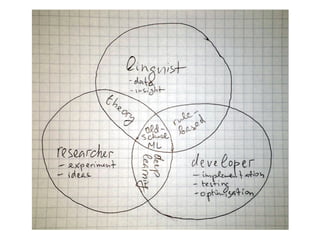

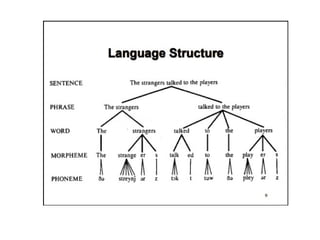

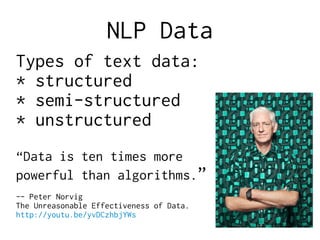

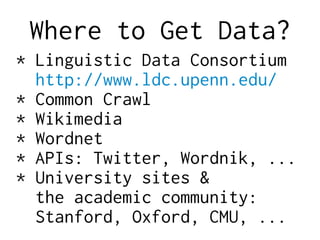

The document outlines a comprehensive overview of Natural Language Processing (NLP) from a practitioner's perspective, detailing various aspects such as data types, common problems, and methodologies, including both rule-based and machine learning approaches. It discusses specific tasks like text language identification, tokenization, and parsing, alongside insights into data sourcing and model training. Additionally, it emphasizes the importance of experimental rigor in developing effective NLP systems while highlighting ongoing challenges and considerations in the field.

![linguist [noun]

1. A specialist in linguistics](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-8-320.jpg)

![linguist [noun]

1. A specialist in linguistics

linguistics [noun]

1. The scientific study of

language.](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-9-320.jpg)

![engineer [noun]

5. A person skilled in the

design and programming of

computer systems](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-17-320.jpg)

![Regular Expressions

Simplest regex: [^s]+

More advanced regex:

w+|[!"#$%&'*+,./:;<=>?@^`~…() {}[|]⟨⟩ ‒–—

«»“”‘’-]―

Even more advanced regex:

[+-]?[0-9](?:[0-9,.]*[0-9])?

|[w@](?:[w'’`@-][w']|[w'][w@'’`-])*[w']?

|["#$%&*+,/:;<=>@^`~…() {}[|] «»“”‘’']⟨⟩ ‒–—―

|[.!?]+

|-+

In fact, it works:

https://github.com/lang-uk/ner-uk/blob/master/doc

/tokenization.md](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-19-320.jpg)

![researcher [noun]

1. One who researches](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-23-320.jpg)

![researcher [noun]

1. One who researches

research [noun]

1. Diligent inquiry or

examination to seek or revise

facts, principles, theories,

applications, etc.; laborious

or continued search after

truth](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-24-320.jpg)

![Averaged Perceptron

def train(model, number_iter, examples):

for i in range(number_iter):

for features, true_tag in examples:

guess = model.predict(features)

if guess != true_tag:

for f in features:

model.weights[f][true_tag] += 1

model.weights[f][guess] -= 1

random.shuffle(examples)](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-38-320.jpg)

![ML-based Parsing

The parser starts with an empty stack, and a buffer index at 0, with no

dependencies recorded. It chooses one of the valid actions, and applies it to

the state. It continues choosing actions and applying them until the stack is

empty and the buffer index is at the end of the input.

SHIFT = 0; RIGHT = 1; LEFT = 2

MOVES = [SHIFT, RIGHT, LEFT]

def parse(words, tags):

n = len(words)

deps = init_deps(n)

idx = 1

stack = [0]

while stack or idx < n:

features = extract_features(words, tags, idx, n, stack, deps)

scores = score(features)

valid_moves = get_valid_moves(i, n, len(stack))

next_move = max(valid_moves, key=lambda move: scores[move])

idx = transition(next_move, idx, stack, parse)

return tags, parse](https://image.slidesharecdn.com/lang-detect-161011092815/85/NLP-Project-Full-Cycle-39-320.jpg)