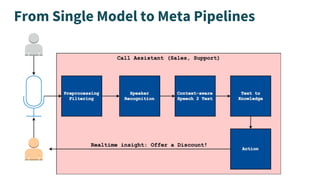

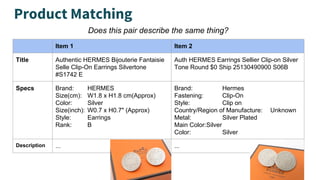

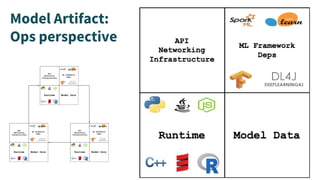

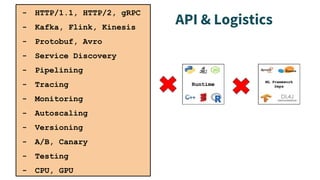

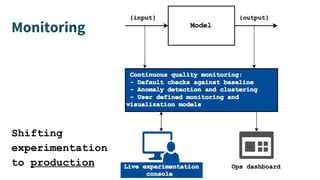

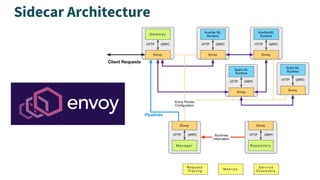

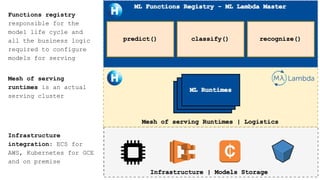

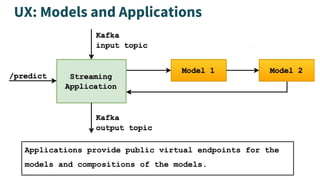

The document presents the mission of Hydrosphere.io, which is to accelerate machine learning deployment, and describes its open-source products, such as Mist, ML Lambda, and Sonar. It emphasizes a modular, multi-runtime serving architecture that supports a variety of machine learning models and technologies, and it highlights the importance of versioning, testing, and microservices in model management. Finally, it outlines the company's business model: subscription services and consulting for deployment and serving applications.